Upgrade the VMware vSphere Environment from Version 7 to 8 – Part 2 – Upgrade ESXi Hosts

In this post we will see how we can upgrade our ESXi Hosts from version 7.0.3 to 8.0.2 by using the vSphere Lifecycle Manager (vLCM).

In Part 1 we already upgraded our vCenter Server Appliance from version 7.0.3 to 8.0.2.

Source: https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-esxi-80-upgrade-guide.pdf

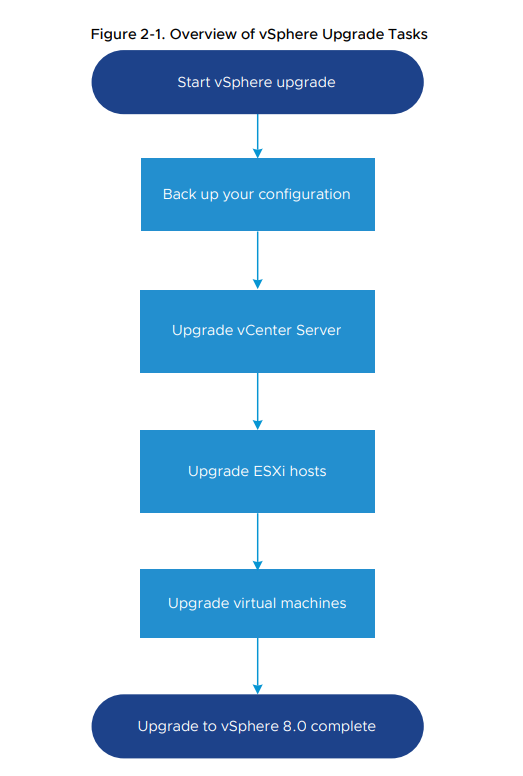

Upgrading ESXi Hosts

After you upgrade vCenter Server, upgrade your ESXi hosts. You can upgrade ESXi 6.7 and 7.0 hosts directly to ESXi 8.0.

If you upgrade hosts managed by vCenter Server, you must upgrade vCenter Server before you upgrade the ESXi hosts. If you do not upgrade your environment in the correct order, you can lose data and lose access to servers.

Source: https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-esxi-80-upgrade-guide.pdf
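The two rules quoted from the upgrade guide can be expressed as a small pre-flight check:

- the supported source versions
- the vCenter-first order

This is only a minimal Python sketch. `can_upgrade_host` and `major_minor` are hypothetical helpers, not part of any VMware tool. They compare only major.minor versions, so update levels are ignored, even though update levels also matter for vCenter Server and ESXi interoperability.

```python
# Minimal sketch of the upgrade rules quoted above (hypothetical helpers, not a VMware tool).
# Assumption: only major.minor matters, e.g. "7.0.3" -> (7, 0).

TARGET = "8.0"


def major_minor(version: str) -> tuple:
    """Turn a version string such as "7.0.3" into (7, 0)."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def can_upgrade_host(host_version: str, vcenter_version: str) -> tuple:
    """Return (allowed, reason) for a direct ESXi upgrade to TARGET."""
    target_mm = major_minor(TARGET)
    # Order matters: vCenter Server has to be on the target release before its hosts
    if major_minor(vcenter_version) < target_mm:
        return False, "upgrade vCenter Server first"
    if major_minor(host_version) >= target_mm:
        return False, f"host is already on {TARGET}"
    # Per the upgrade guide, ESXi 6.7 and 7.0 hosts can be upgraded directly to 8.0
    if major_minor(host_version) not in {(6, 7), (7, 0)}:
        return False, f"no direct upgrade path from {host_version} to {TARGET}"
    return True, "ok"


# Our lab after Part 1: vCenter Server on 8.0.2, both hosts still on 7.0.3
print(can_upgrade_host("7.0.3", "8.0.2"))  # (True, 'ok')
print(can_upgrade_host("7.0.3", "7.0.3"))  # (False, 'upgrade vCenter Server first')
```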

Upgrade Hosts by using the vSphere Lifecycle Manager (vLCM)

vLCM allows administrators to define a desired state for their ESXi hosts and clusters. This includes the ESXi version, firmware, drivers, and other software components.

vLCM continuously monitors the compliance of the infrastructure against this desired state and automates remediation actions when deviations are detected.

vLCM can automatically apply patches and upgrades to ESXi hosts according to the defined desired state, ensuring consistency and reducing downtime.
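The desired-state idea comes down to comparing what a host actually runs with what the cluster image says it should run. The following Python sketch assumes two hypothetical pieces:

- a `HostImage` model holding the ESXi version plus a dictionary of extra components
- a `compliance_drift` helper

The component names are made up. vLCM's real compliance check is considerably more detailed.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HostImage:
    """Hypothetical model of an image: ESXi version plus extra components."""
    esxi_version: str
    # component name -> version, e.g. drivers, firmware or a vendor add-on (names are made up)
    components: Dict[str, str] = field(default_factory=dict)


def compliance_drift(desired: HostImage, actual: HostImage) -> List[str]:
    """List the deviations of one host from the desired state; empty means compliant."""
    drift = []
    if actual.esxi_version != desired.esxi_version:
        drift.append(f"ESXi {actual.esxi_version} -> {desired.esxi_version}")
    for name, version in sorted(desired.components.items()):
        installed = actual.components.get(name)
        if installed != version:
            drift.append(f"{name} {installed or 'missing'} -> {version}")
    return drift


# Desired state: ESXi 8.0.2; both lab hosts still run 7.0.3
desired = HostImage("8.0.2")
hosts = {"esxi-01": HostImage("7.0.3"), "esxi-02": HostImage("7.0.3")}
for name, image in hosts.items():
    drift = compliance_drift(desired, image)
    print(name, "compliant" if not drift else drift)
```

Keeping the comparison in a pure function makes it easy to run again after every change. This mirrors how vLCM re-checks compliance after remediation.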

When upgrading to, or installing, vSphere 7, customers are still using vSphere Update Manager (VUM). vLCM has to be enabled per cluster. Enabling vLCM by configuring a cluster image is easy: follow the wizard under Cluster > Updates > Setup Image. The compatibility check includes verification of the currently installed VIBs (such as drivers and other kernel modules).

VIB stands for vSphere Installation Bundle. At a conceptual level a VIB is somewhat similar to a tarball or ZIP archive in that it is a collection of files packaged into a single archive to facilitate distribution.

Source: https://blogs.vmware.com/vsphere/2011/09/whats-in-a-vib.html

For example, you might see warnings about VIBs when you are using a vendor-specific ISO. These modules can be added to the image as a vendor add-on. Please note that Quick Boot is supported by vLCM, but has to be enabled manually.
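The VIB warnings mentioned above can be pictured as a simple set difference. Every VIB installed on the host that the image does not provide gets flagged. Adding the matching vendor add-on to the image makes those warnings disappear.

Here is a minimal Python sketch. It assumes made-up VIB names (`vendor-raid-driver`, `vendor-cim-provider`) and two hypothetical helpers, `image_vibs` and `vib_warnings`.

```python
from typing import FrozenSet, List

# Hypothetical VIB names: "esx-base"/"esx-update" stand for the ESXi base image,
# the "vendor-*" entries for drivers that a vendor-specific ISO added to the host.
BASE_IMAGE = frozenset({"esx-base", "esx-update"})
VENDOR_ADDON = frozenset({"vendor-raid-driver", "vendor-cim-provider"})


def image_vibs(base_image: FrozenSet[str], vendor_addon: FrozenSet[str] = frozenset()) -> FrozenSet[str]:
    """All VIBs an image provides: the base image plus an optional vendor add-on."""
    return base_image | vendor_addon


def vib_warnings(installed: FrozenSet[str], image: FrozenSet[str]) -> List[str]:
    """Installed VIBs the image does not cover, i.e. what the check would warn about."""
    return sorted(installed - image)


installed_on_host = BASE_IMAGE | VENDOR_ADDON  # host originally installed from a vendor ISO
print(vib_warnings(installed_on_host, image_vibs(BASE_IMAGE)))                # two warnings
print(vib_warnings(installed_on_host, image_vibs(BASE_IMAGE, VENDOR_ADDON)))  # []
```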

VMware ESXi Quick Boot for the Hypervisor

https://blog.matrixpost.net/vmware-virtual-machine-using-dedicated-efi-system-partition-disk-boots-directly-into-grub2-command-shell-instead-booting-the-os/#quickboot_esxi

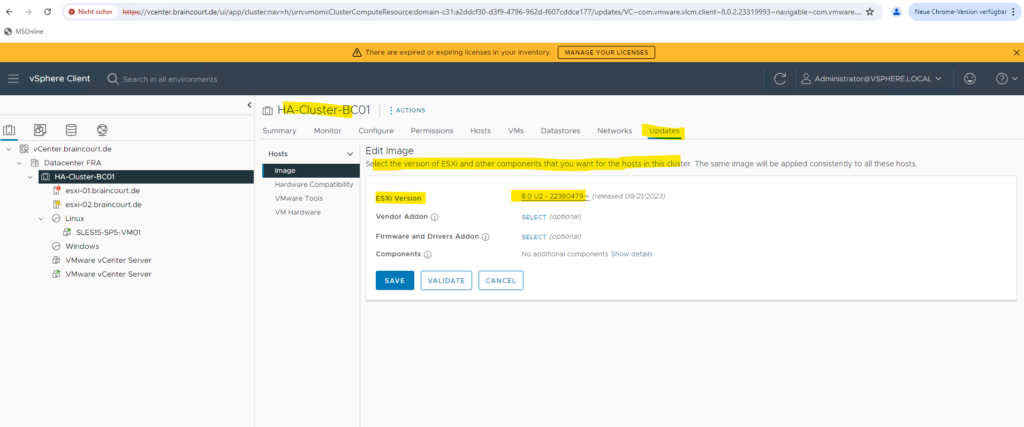

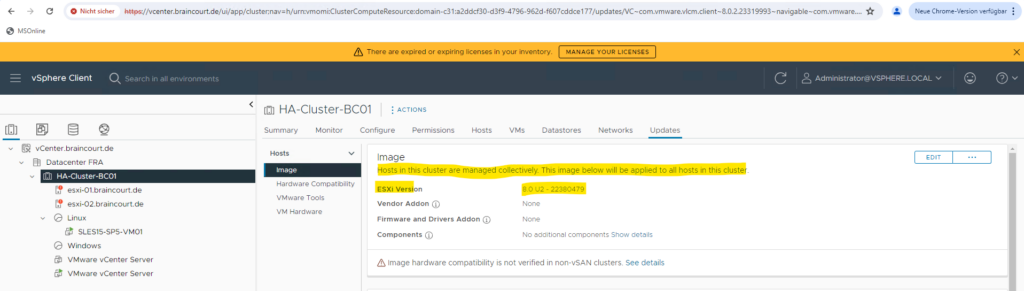

Select the ESXi version for the cluster image that you want to upgrade your ESXi Hosts to and click Save.

Inventory -> Cluster -> Updates -> Hosts -> Image

This image is the desired state for all ESXi Hosts in the cluster. vLCM continuously monitors the compliance of the ESXi Hosts against it and automates remediation actions when deviations are detected.

As mentioned previously, below the ESXi version we can also select firmware, drivers, and other software components that should be applied to the ESXi Hosts in the cluster.

My lab environment uses two old IBM x3650 M4 and M5 servers, for which no current packages are available anymore. I will therefore leave these settings empty.

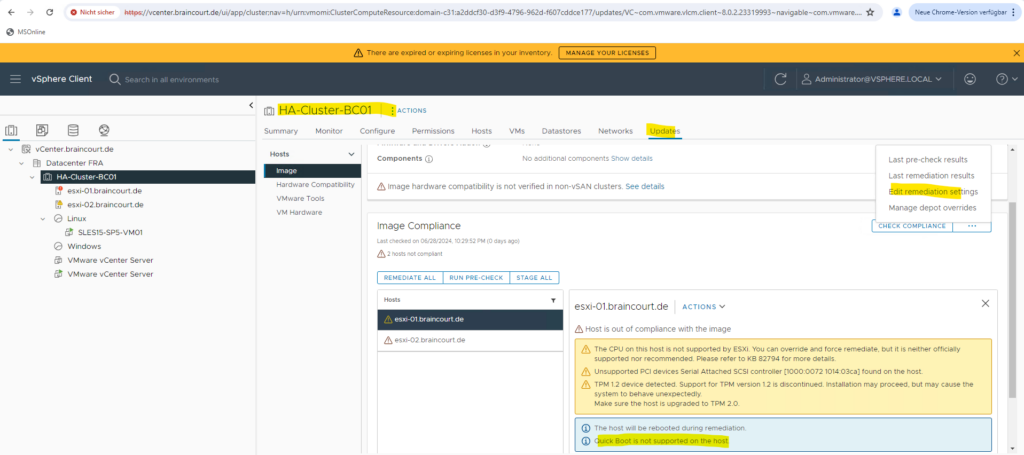

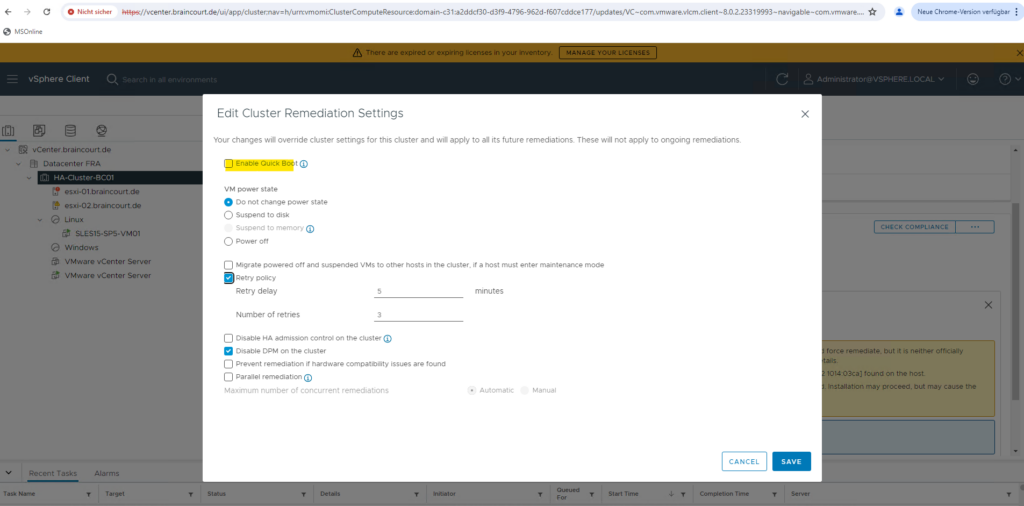

Because my servers don’t support the previously mentioned Quick Boot mode, I will disable it in the cluster remediation settings. To do so, click on the three dots below.

Select Edit remediation settings.

Uncheck Quick Boot.

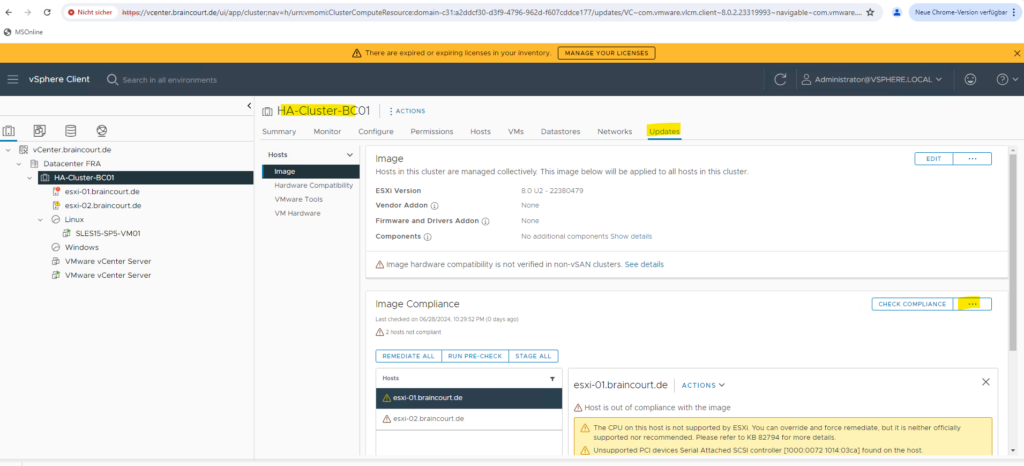

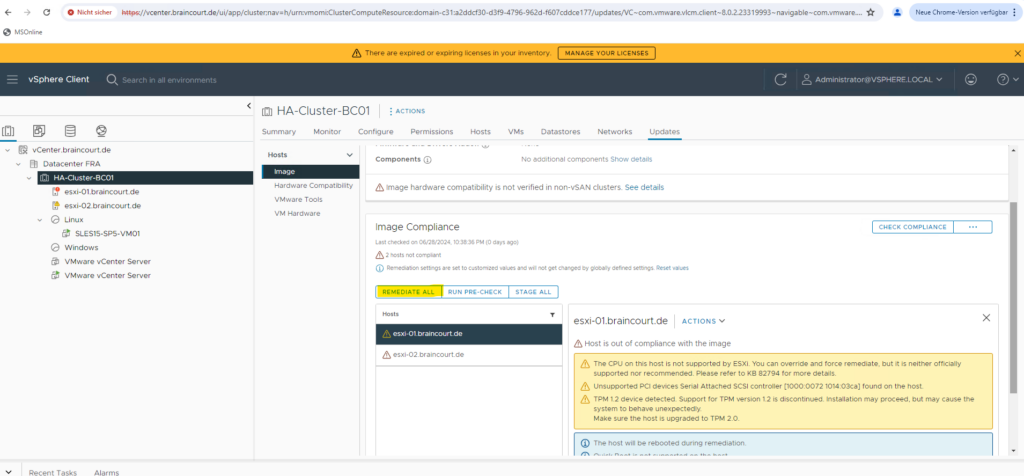

Remediation is the process during which vSphere Lifecycle Manager applies patches, extensions, and upgrades to ESXi hosts. Remediation makes the selected vSphere objects compliant with the attached baselines and baseline groups or, for a cluster managed with an image as here, with the cluster image.

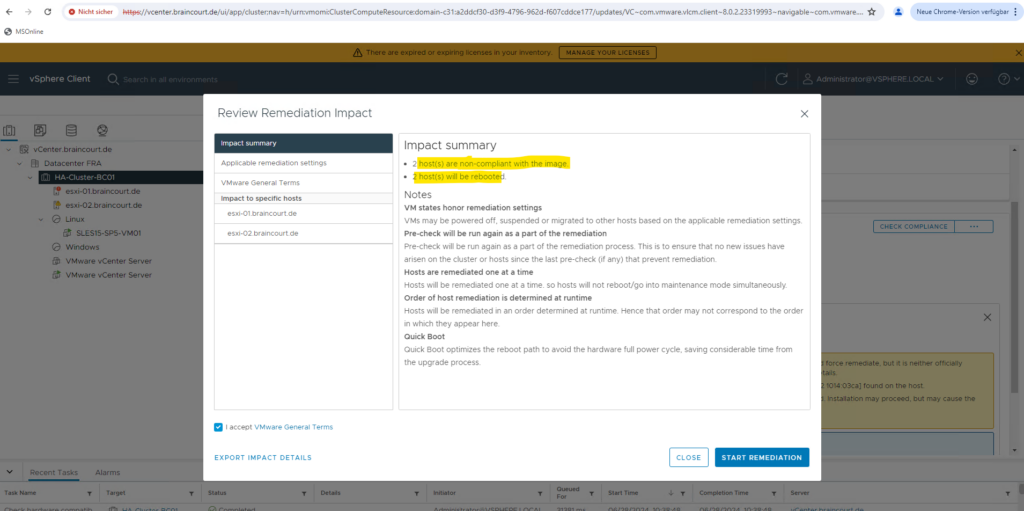

We can now trigger the upgrade for our ESXi Hosts by clicking on Start Remediation.
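Conceptually, the remediation started here walks through the hosts one at a time:

1. Enter maintenance mode.
2. Apply the image and reboot.
3. Exit maintenance mode.
4. Check compliance again.

The Python sketch below only simulates that sequence, under these assumptions:

- `Host` and `remediate_cluster` are hypothetical.
- The `can_enter_maintenance` callback stands in for the DRS and HA checks.
- Stopping at the first blocked host is a simplification, not documented vLCM behavior.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Host:
    name: str
    version: str
    in_maintenance: bool = False


def remediate_cluster(hosts: List[Host], target: str,
                      can_enter_maintenance: Callable[[Host, List[Host]], bool]) -> List[str]:
    """Simulate a one-host-at-a-time remediation and return a log of the steps."""
    log = []
    for host in hosts:
        if host.version == target:
            log.append(f"{host.name}: already compliant, skipped")
            continue
        # The callback stands in for the DRS/HA checks before maintenance mode
        if not can_enter_maintenance(host, hosts):
            log.append(f"{host.name}: cannot enter maintenance mode, remediation stopped")
            break
        host.in_maintenance = True
        log.append(f"{host.name}: entered maintenance mode")
        host.version = target  # stands in for applying the image and rebooting
        log.append(f"{host.name}: upgraded to {target} and rebooted")
        host.in_maintenance = False
        log.append(f"{host.name}: exited maintenance mode")
        # Compliance is checked again once the host is back
        state = "compliant" if host.version == target else "non-compliant"
        log.append(f"{host.name}: compliance check {state}")
    return log


cluster = [Host("esxi-01.braincourt.de", "7.0.3"), Host("esxi-02.braincourt.de", "7.0.3")]
for line in remediate_cluster(cluster, "8.0.2", lambda host, hosts: True):
    print(line)
```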

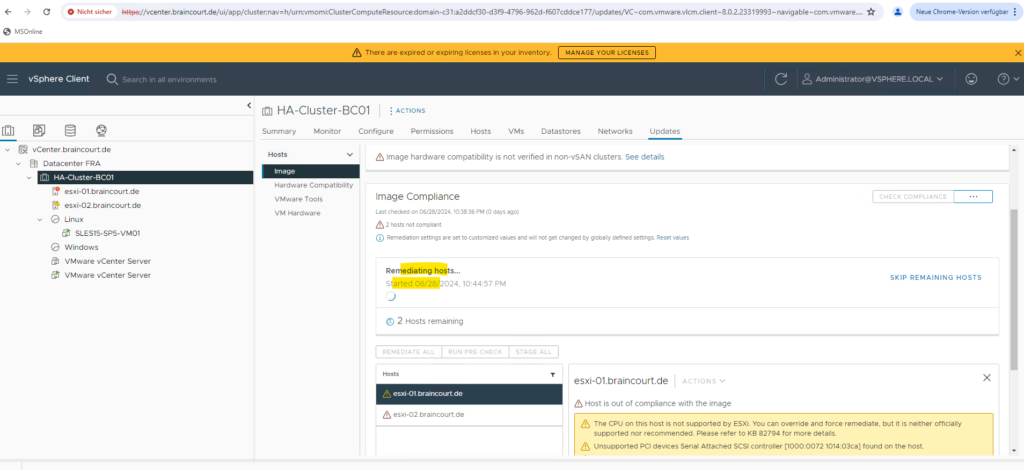

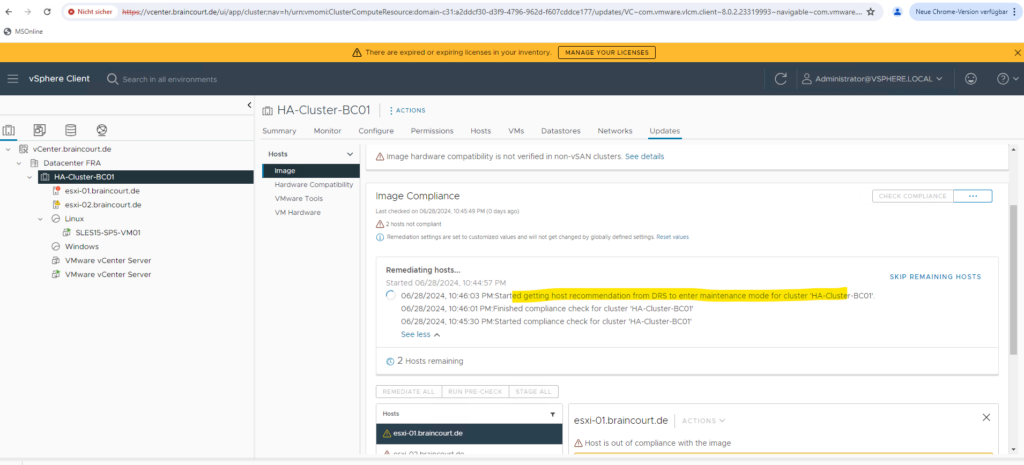

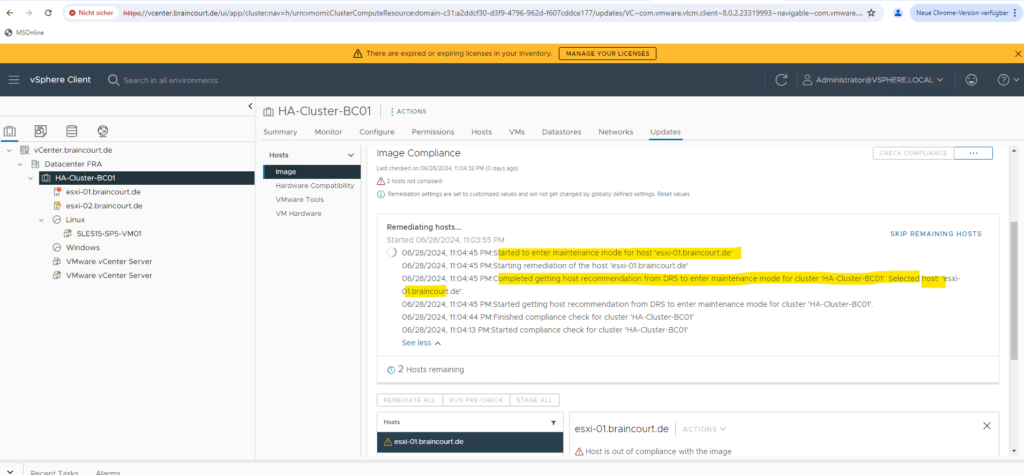

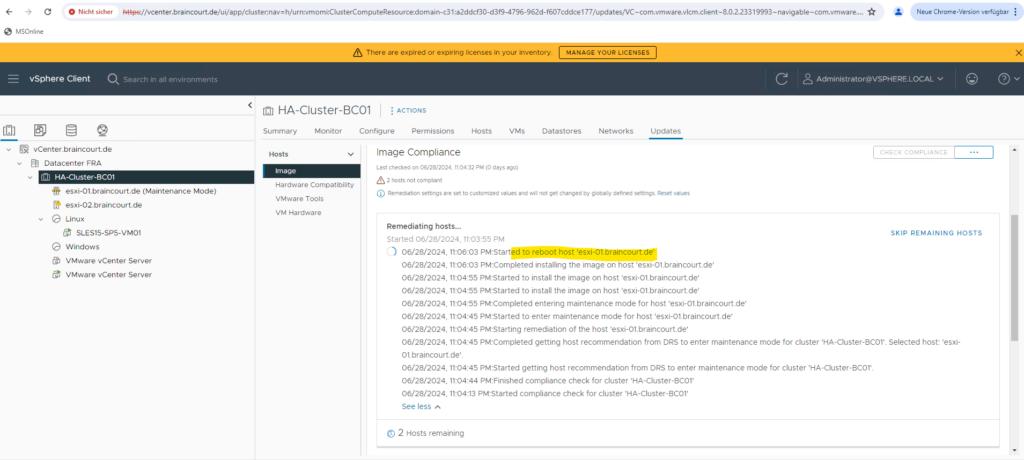

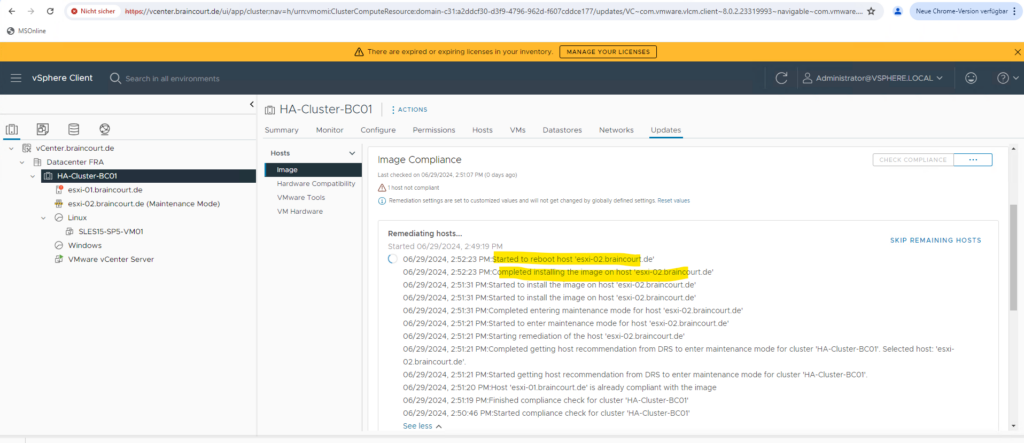

Remediation of our hosts starts.

Distributed Resource Scheduler

DRS spreads the virtual machine workloads across vSphere hosts inside a cluster and monitors available resources for you. Based on your automation level, DRS will migrate (VMware vSphere vMotion) virtual machines to other hosts within the cluster to maximize performance.

Source: https://www.vmware.com/products/vsphere/drs-dpm.html
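Before a host can enter maintenance mode, its running VMs have to fit on the remaining hosts. The following Python sketch illustrates that memory check with a simple greedy placement: the largest VM goes first, onto the host with the most free memory.

This is an assumption for illustration only. DRS uses its own, much more sophisticated algorithm. The function `plan_evacuation` and the VM names and sizes are hypothetical.

```python
from typing import Dict, Optional


def plan_evacuation(vm_mem_gb: Dict[str, int], free_mem_gb: Dict[str, int]) -> Optional[Dict[str, str]]:
    """Greedy placement: largest VM first onto the host with the most free memory.

    Returns a VM -> host mapping, or None if some VM does not fit anywhere.
    """
    free = dict(free_mem_gb)  # copy so the caller's numbers are not modified
    plan = {}
    for vm, need in sorted(vm_mem_gb.items(), key=lambda item: item[1], reverse=True):
        target = max(free, key=free.get, default=None)
        # If the host with the most free memory cannot take the VM, no host can
        if target is None or free[target] < need:
            return None
        plan[vm] = target
        free[target] -= need
    return plan


# Hypothetical VMs (memory in GB) running on esxi-01 that must move to esxi-02
vms_on_esxi_01 = {"vm-dc01": 8, "vm-sql01": 16, "vm-web01": 4}
print(plan_evacuation(vms_on_esxi_01, {"esxi-02.braincourt.de": 24}))  # None: not enough free memory
print(plan_evacuation(vms_on_esxi_01, {"esxi-02.braincourt.de": 32}))
```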

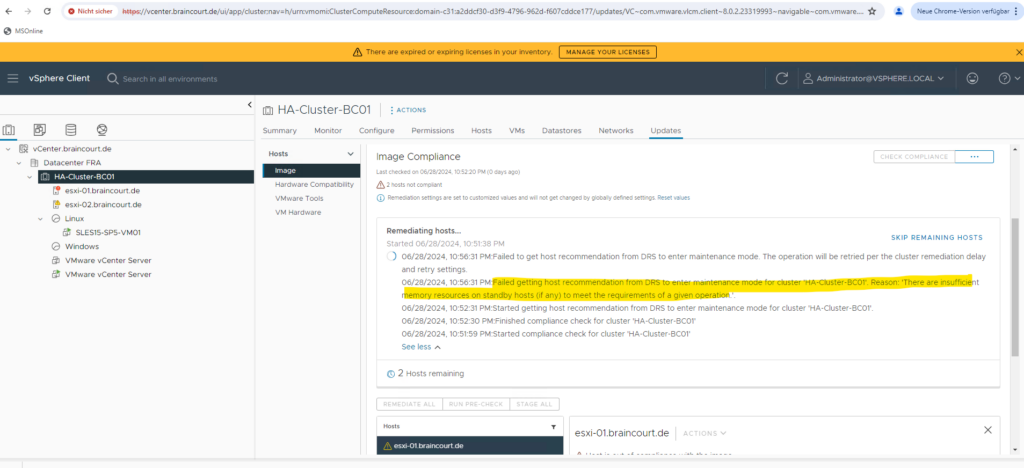

At first, I ran into the following error:

Failed getting host recommendation from DRS to enter maintenance mode for cluster ‘HA-Cluster-BC01’. Reason: ‘There are insufficient memory resources on standby hosts (if any) to meet the requirements of a given operation.’.

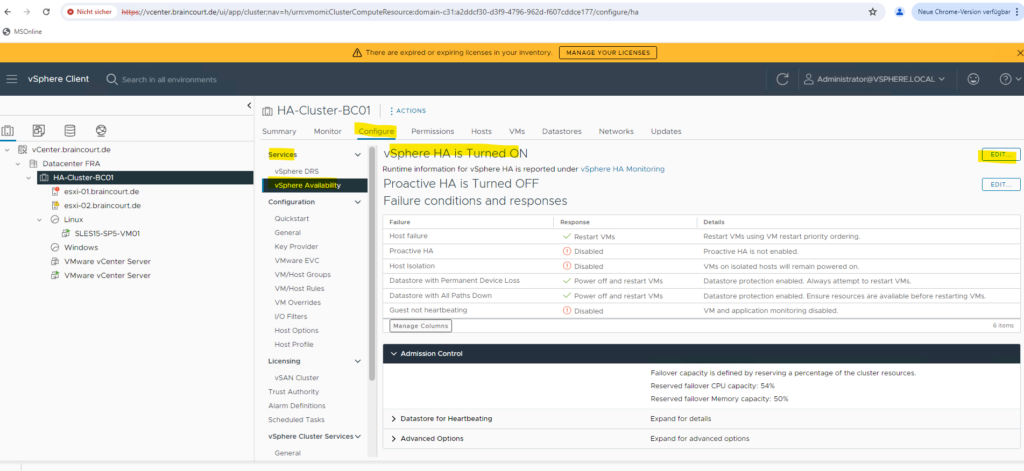

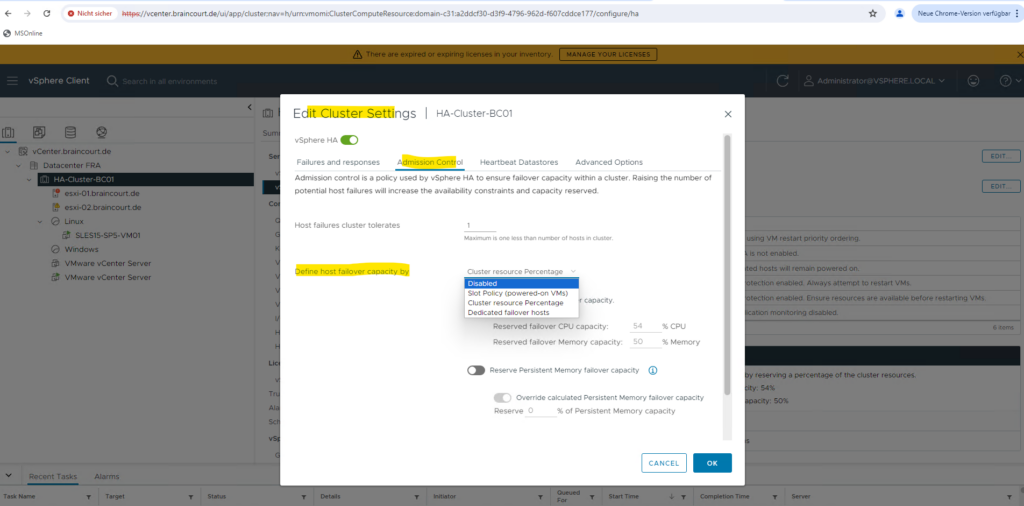

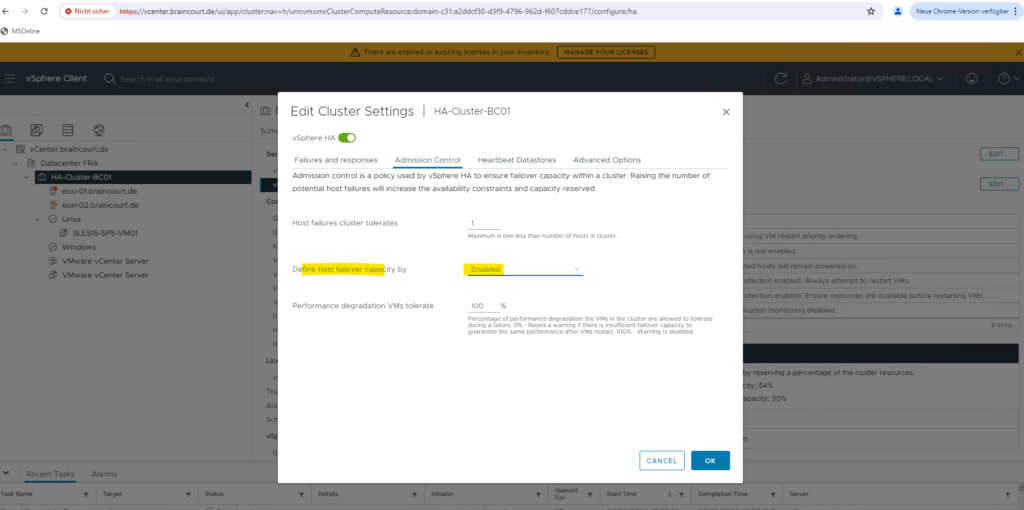

The reason for this issue is the HA admission control settings of my two-node cluster. The host failover capacity setting defines how many host failures the cluster is allowed to tolerate while still guaranteeing failover.

ESX/ESXi host unable to enter maintenance mode in a two node cluster

https://knowledge.broadcom.com/external/article?legacyId=1017330

vSphere HA Admission Control

https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.avail.doc/GUID-53F6938C-96E5-4F67-9A6E-479F5A894571.html

In a two-node cluster, we first need to disable the host failover capacity. Otherwise vLCM cannot put either ESXi Host into maintenance mode in order to upgrade it.
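Why the two-node cluster gets stuck can be seen with a coarse host-count approximation of the "host failures cluster tolerates" setting. Once one host is in maintenance mode, the remaining hosts must still be able to lose the tolerated number of hosts and keep at least one host running.

The Python function `maintenance_allowed` below is a hypothetical simplification that assumes equally sized hosts. vSphere HA itself calculates admission control from reserved resources according to the configured policy (for example, cluster resource percentage or slot policy).

```python
def maintenance_allowed(total_hosts: int, hosts_in_maintenance: int, failures_to_tolerate: int) -> bool:
    """Coarse host-count model (assumption: all hosts are the same size).

    After one more host enters maintenance mode, the hosts left over must be able to
    lose `failures_to_tolerate` hosts and still keep at least one host running.
    """
    remaining = total_hosts - hosts_in_maintenance - 1
    return remaining - failures_to_tolerate >= 1


# Two-node cluster with host failover capacity 1: blocked
print(maintenance_allowed(2, 0, 1))  # False
# Same cluster with host failover capacity disabled (0): allowed
print(maintenance_allowed(2, 0, 0))  # True
```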

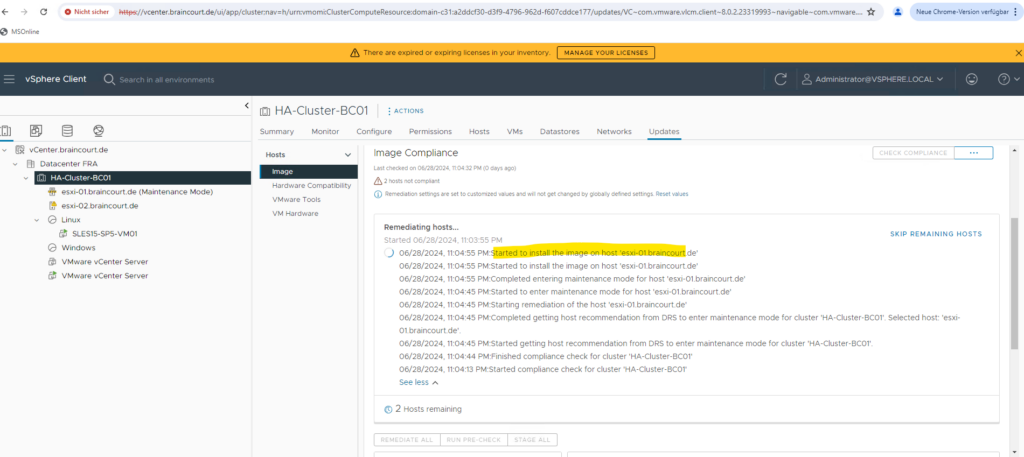

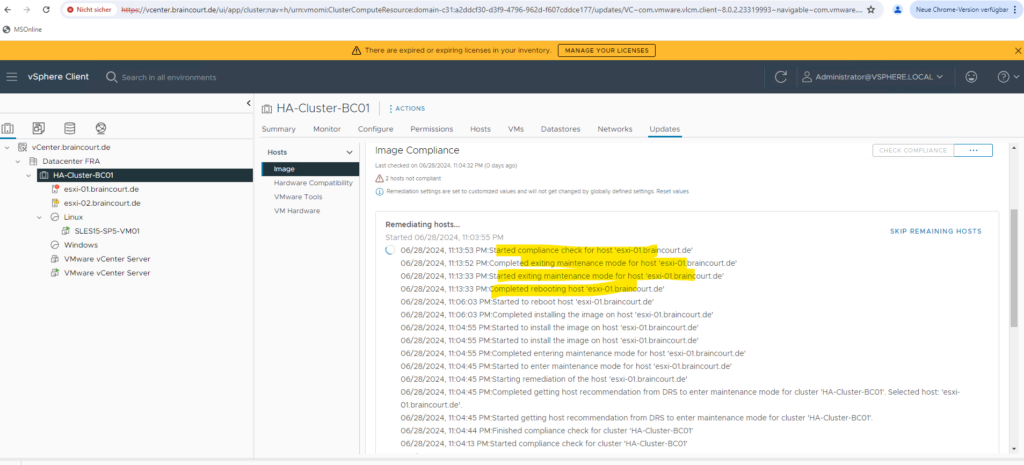

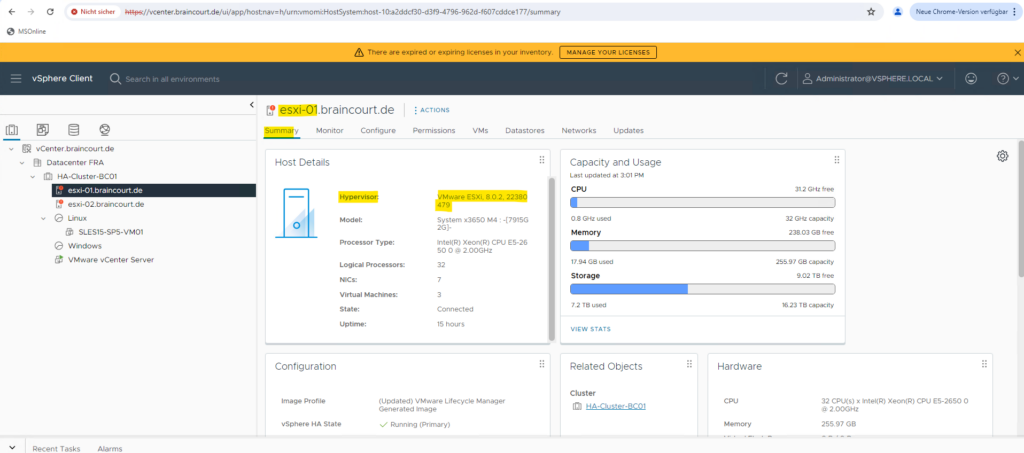

Now it works, and vLCM has put my first ESXi Host with the FQDN esxi-01.braincourt.de into maintenance mode.

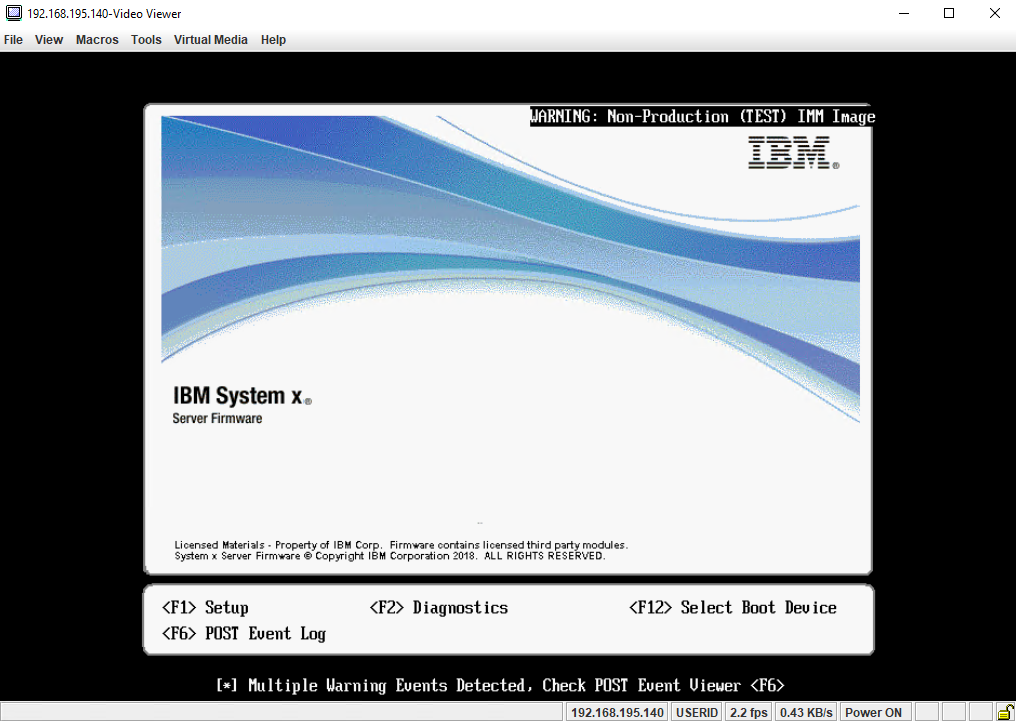

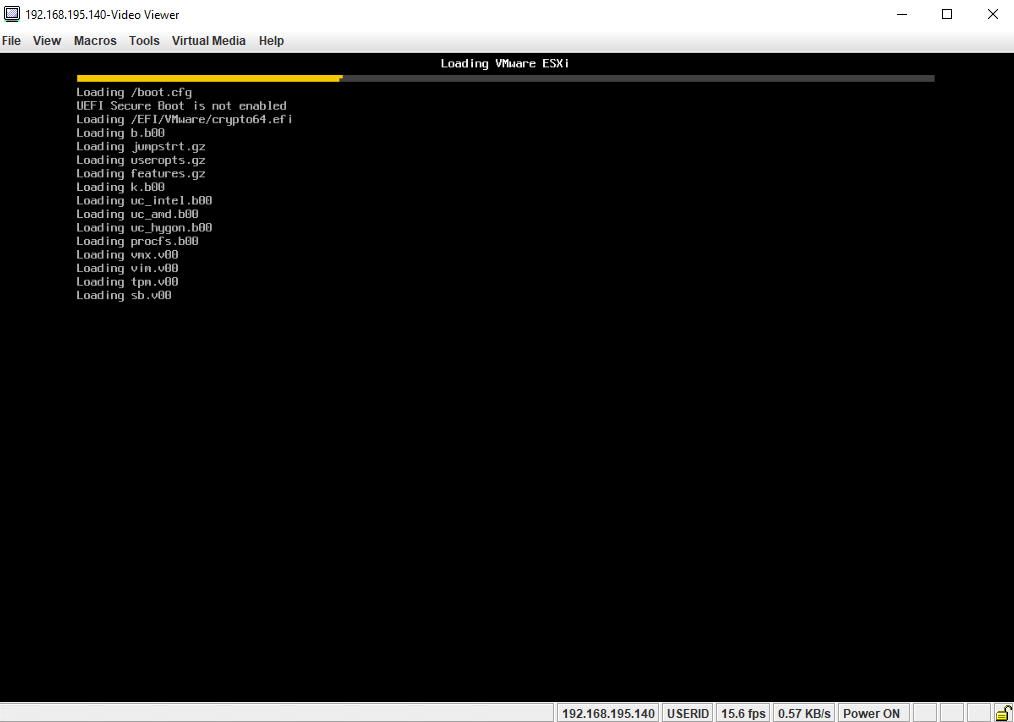

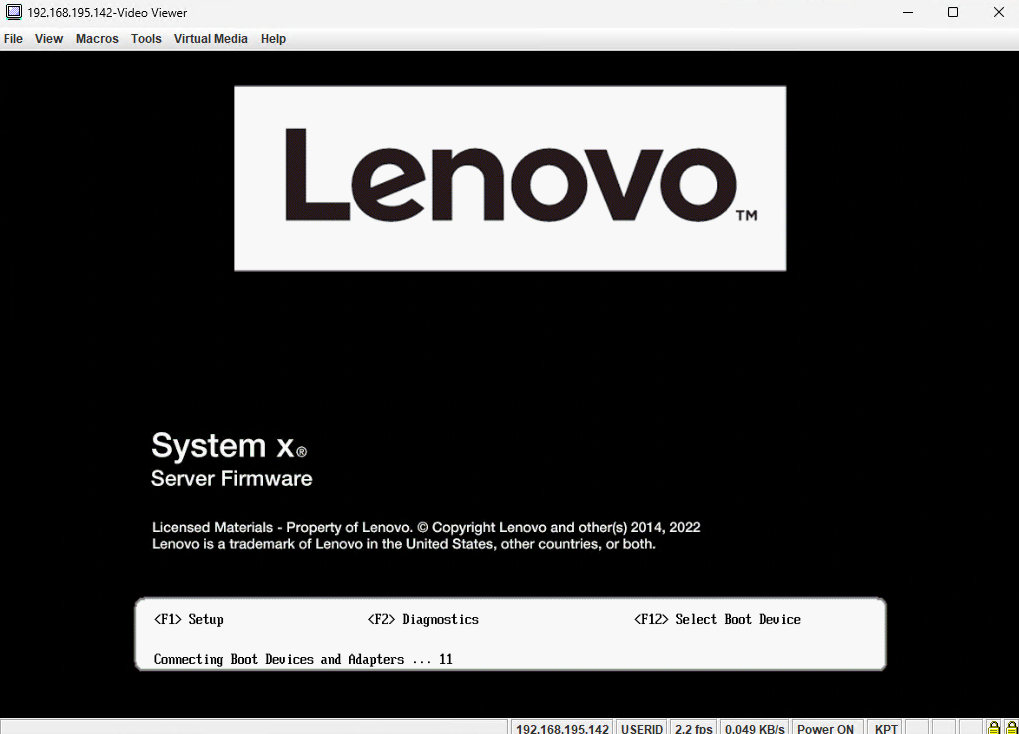

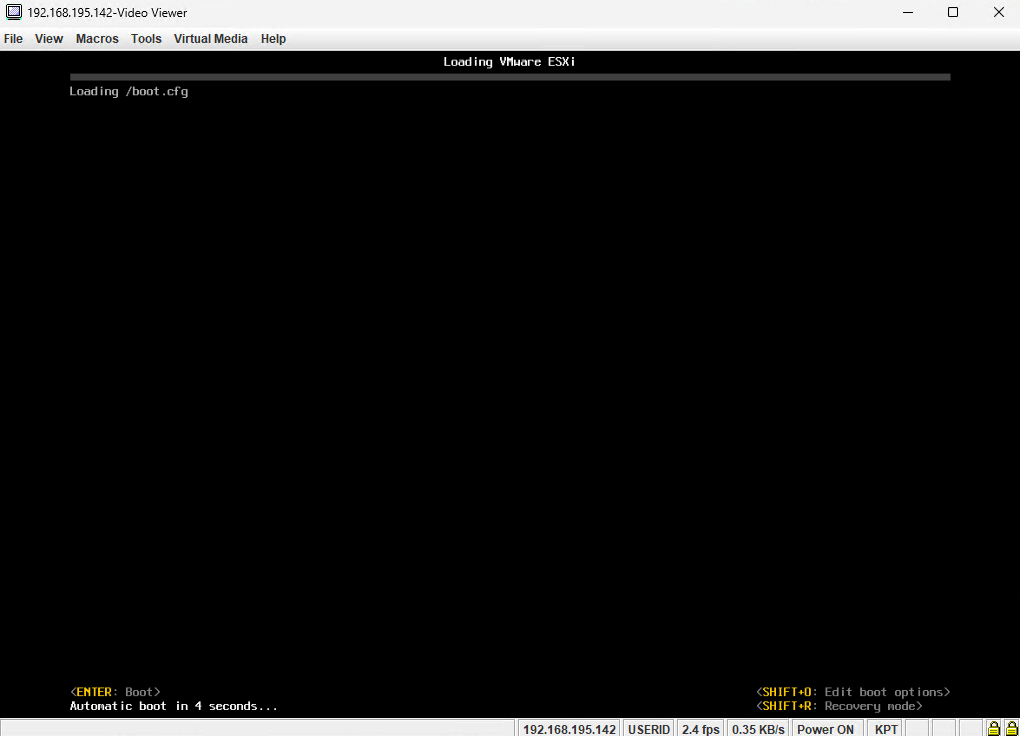

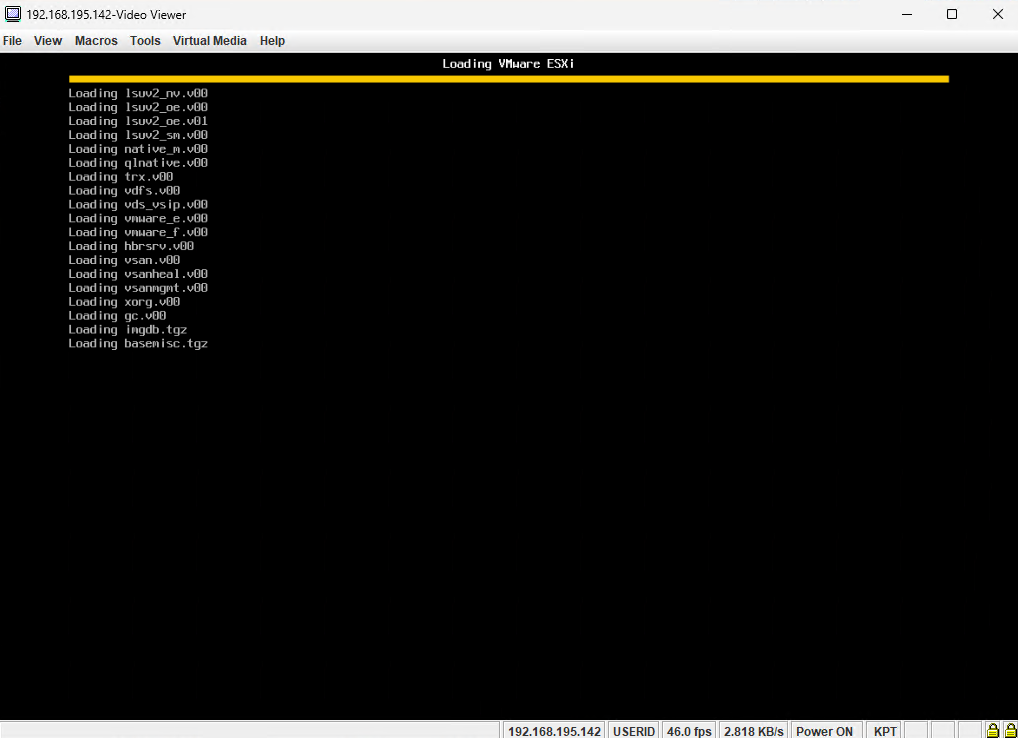

vLCM then reboots the host.

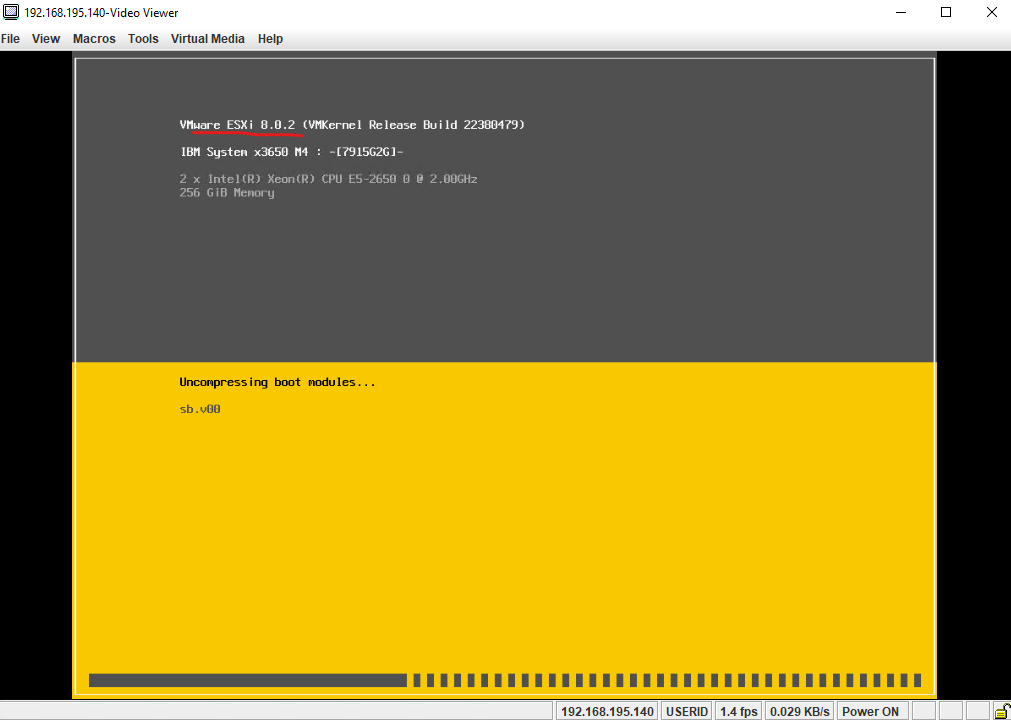

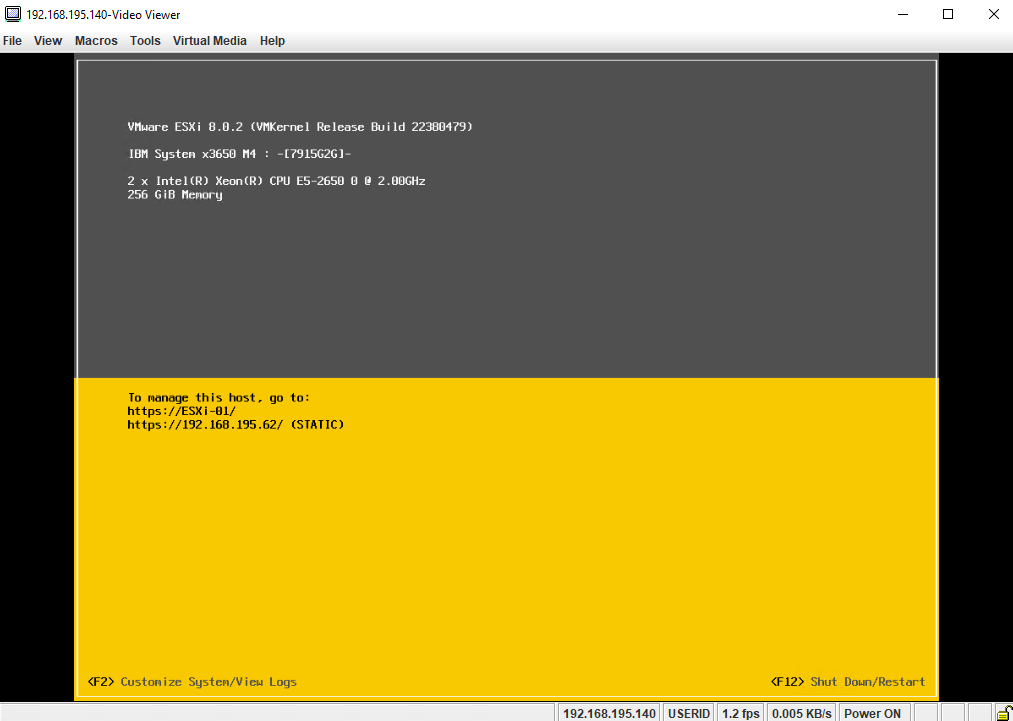

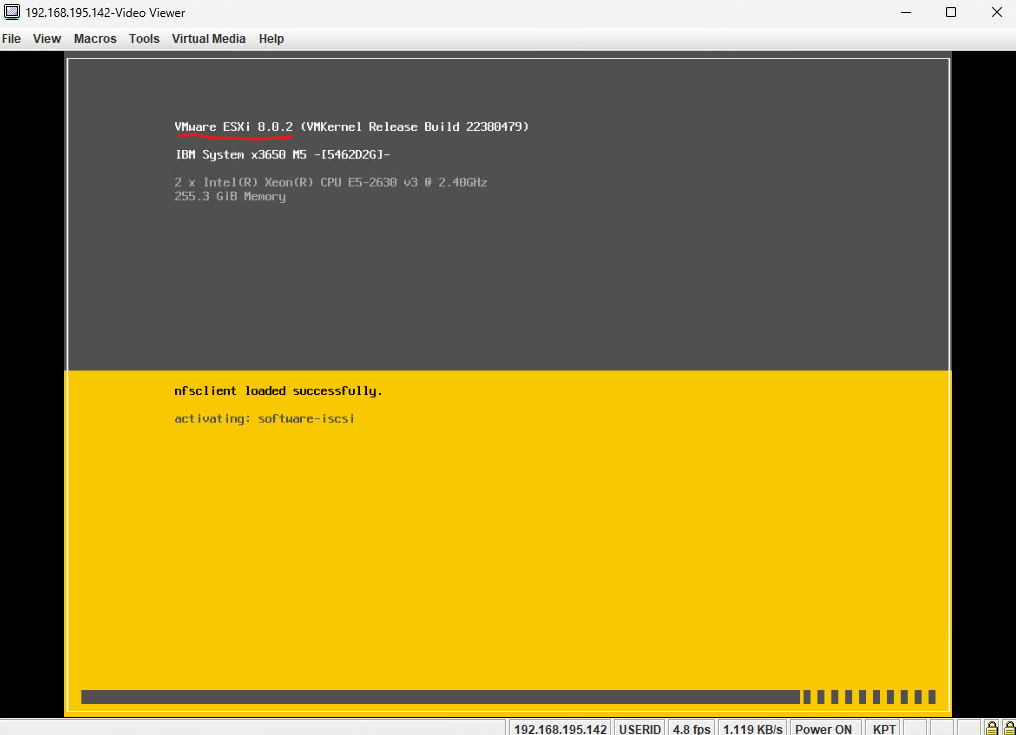

During the reboot we can already see the new ESXi Host version 8.0.2.

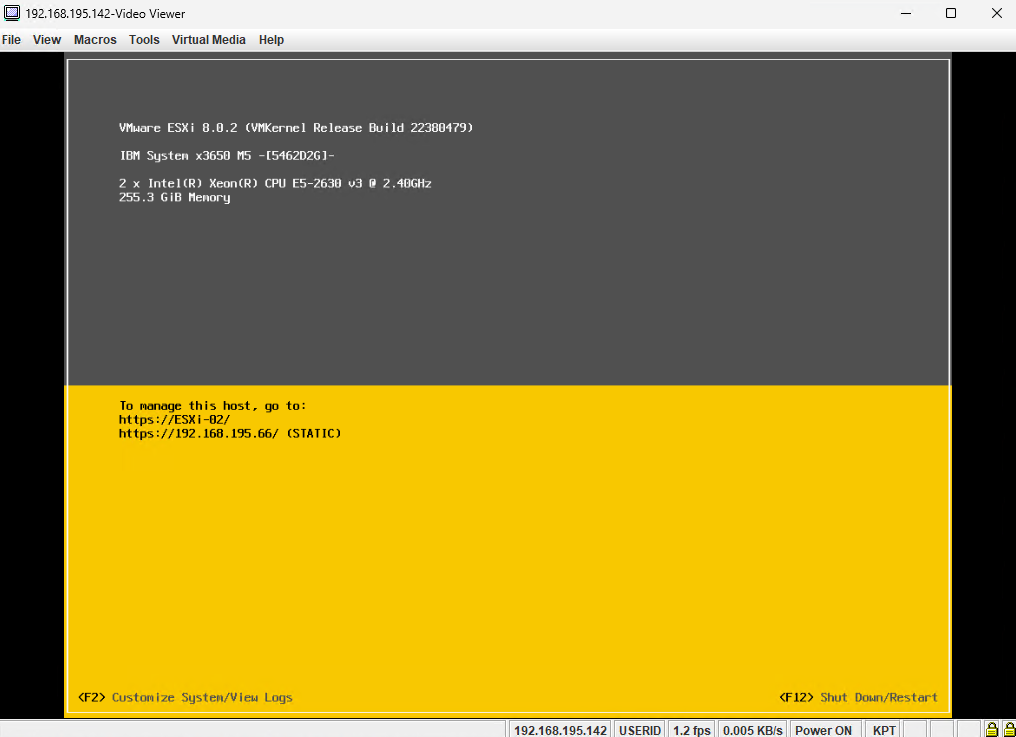

Finally, the host has finished rebooting.

vLCM now takes the upgraded host out of maintenance mode and runs another compliance check against the desired state.

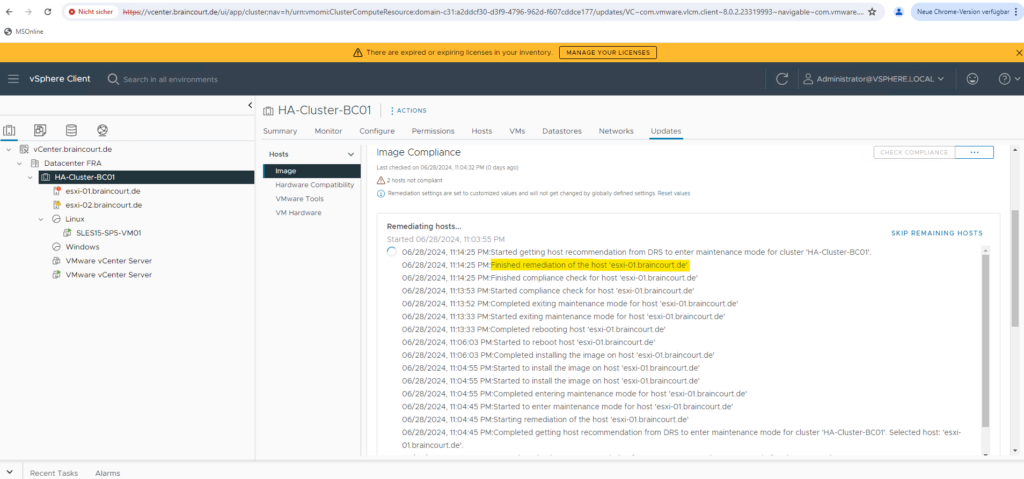

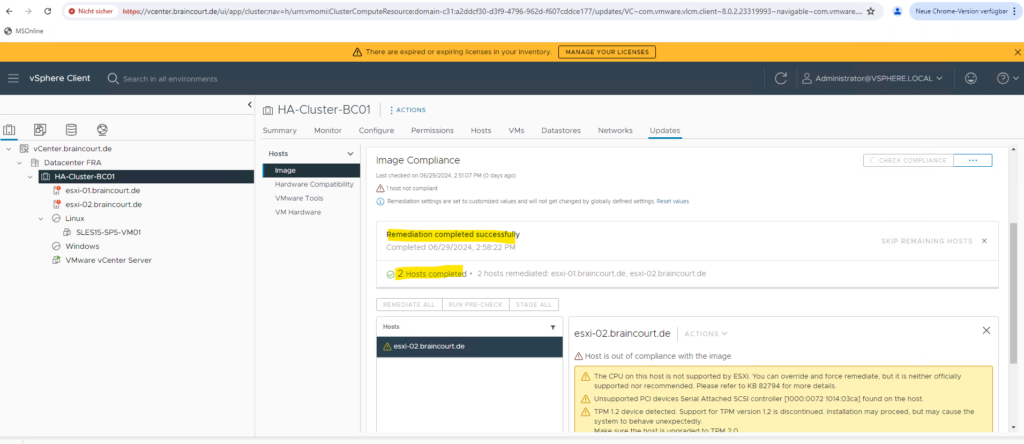

Finally, remediation of the first host is finished, and its upgrade from ESXi 7.0.3 to 8.0.2 completed successfully.

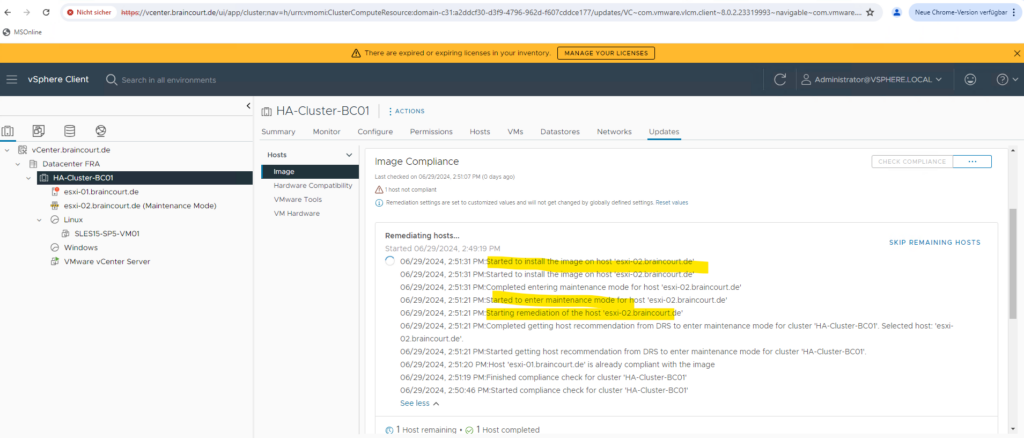

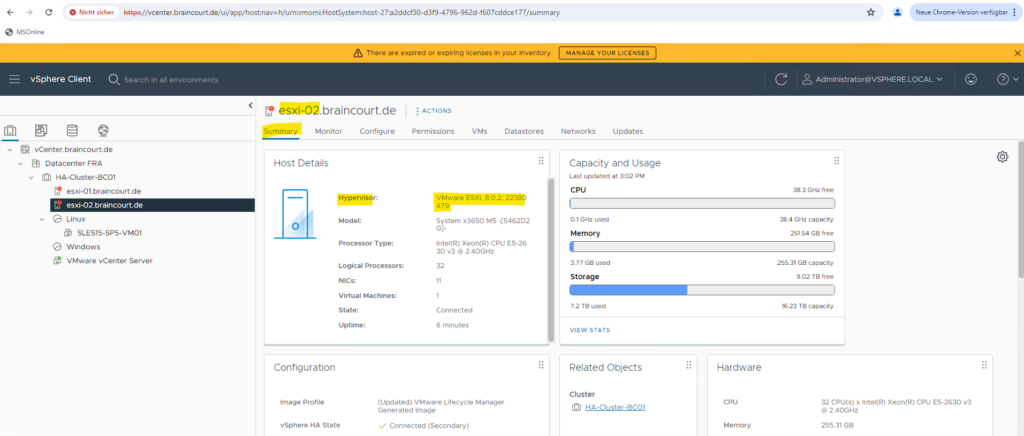

vLCM will now start to remediate and upgrade my second ESXi Host with the FQDN esxi-02.braincourt.de.

Finally both ESXi Hosts are upgraded successfully from version 7.0.3 to 8.0.2.

Links

Managing ESXi host lifecycle operations with vSphere Lifecycle Manager Images (vLCM)

https://knowledge.broadcom.com/external/article?legacyId=89519

Introducing vSphere Lifecycle Management (vLCM)

https://core.vmware.com/resource/introducing-vsphere-lifecycle-management-vlcm
