Building a Centralized Egress and Hybrid Connectivity Hub with Network Connectivity Center (NCC), HA VPN, and a Router Appliance in Google Cloud

In my previous posts, I configured a hub-and-spoke architecture to support cross-VPC routing and hybrid connectivity.

Setting up a hub-and-spoke architecture by using either VPC Network Peering or Network Connectivity Center (NCC) is shown here.

Outbound internet access for VM instances, in particular deploying a centralized Next-Generation Firewall (NGFW) in an NCC-managed hub-and-spoke architecture, is covered here.

In NCC, a hub can have only one routing VPC. Place your HA VPN (Cloud Router) and router-appliance in the same hub-VPC.

In the context of Network Connectivity Center (NCC), a routing domain refers to the single VPC network that acts as the control plane anchor for all hybrid attachments (such as HA VPN, Interconnect, or router appliances) within an NCC hub.

All route learning and advertisement for that hub occur through this one routing VPC, which defines a unified routing domain shared by all its attached spokes.

This is why both the HA VPN and the router appliance must reside in the same hub-VPC: NCC supports exactly one routing domain per hub to ensure consistent and loop-free route propagation.

Also keep the hub-VPC attached as a VPC spoke so that its local subnets are propagated to the other spokes, which supports full cross-VPC routing.

Introduction

In multi-VPC environments connected through Network Connectivity Center (NCC), we often want a single hub that terminates HA VPNs to on-premises, routes all internal traffic, and provides controlled egress to the internet.

While NCC makes hub-and-spoke routing simple, routing internet traffic from both spoke and hub VPCs through a VM-based firewall or router appliance introduces a tricky routing loop risk.

This post shows how to build the full topology and avoid that trap.

Architecture Overview

- Hub VPC: (Setup shown here)

- Ubuntu gateway VM with external IP (router appliance)

- Internal passthrough ILB fronting the gateway

- HA VPN connected to on-premises router (BGP for dynamic route exchange): (Setup with Juniper on-prem VPN device here or pfSense here)

- Cloud Router used for both HA VPN BGP

- Spoke VPCs: connected to the NCC hub for east-west and internet access (router appliance spoke): (Setup shown here)

- NCC Hub: handles intra-GCP routing between connected VPC spokes. It automatically exchanges subnet routes between the hub’s routing VPC and all attached spoke VPCs.

End-to-End Setup

In this post, we’ll bring together the configurations from the previous articles about setting up HA VPN for hybrid connectivity, building an NCC hub-and-spoke mesh topology for cross-VPC routing, and implementing centralized egress through a network firewall appliance.

The goal is to combine these components into a single, unified design: a Centralized Egress and Hybrid Connectivity Hub where all spoke VPCs and on-premises networks connect through one routing VPC that hosts the Cloud Router, HA VPN, and a router appliance (in this lab, a standard Ubuntu VM acting as the gateway).

This end-to-end setup demonstrates how to consolidate internet and on-premises traffic management in Google Cloud using native NCC routing and an NGFW appliance for production use (in my lab environment, just a lightweight firewall or gateway VM).

Set up HA VPN to connect our on-prem Network

Set up a Hub-and-Spoke Architecture

Set up a NGFW appliance for centralized outbound Internet

Final Architecture

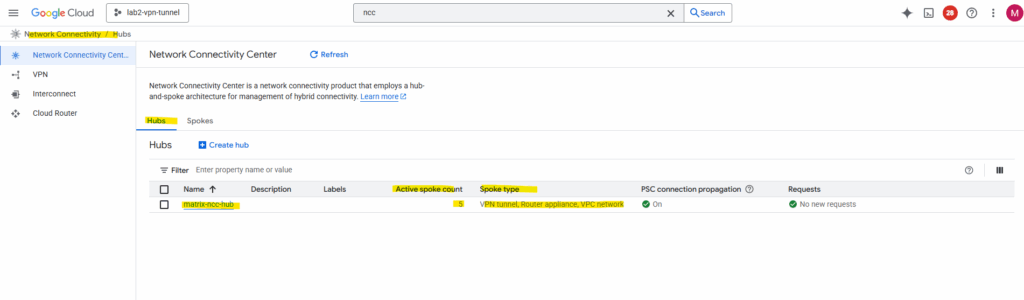

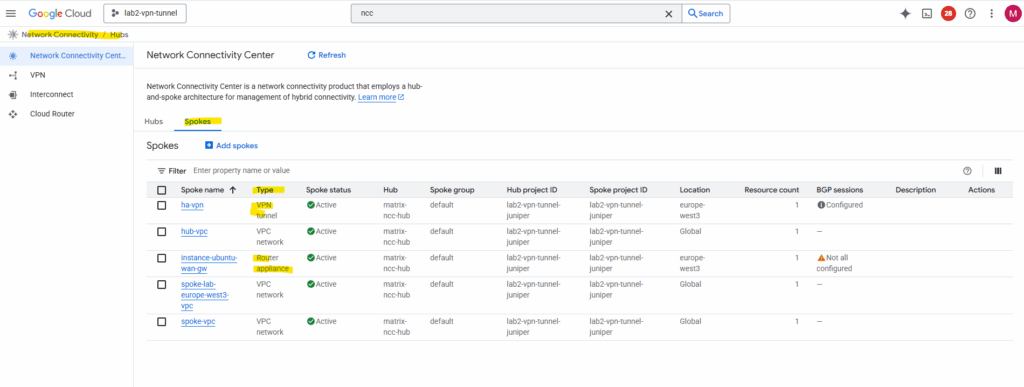

My NCC hub will end up with five spokes of three different types: VPN tunnel, router appliance, and VPC network, as shown below.

Of the five spokes, ha-vpn is the VPN tunnel spoke, through which I added my HA VPN (on-prem hybrid connection) to the NCC hub to make it available to all other connected spokes.

We also see the router appliance spoke, where I added the Ubuntu VM that acts as the centralized gateway for outbound internet access (a workaround for an NGFW appliance).

The remaining three are classic VPC network spokes for cross-VPC routing.

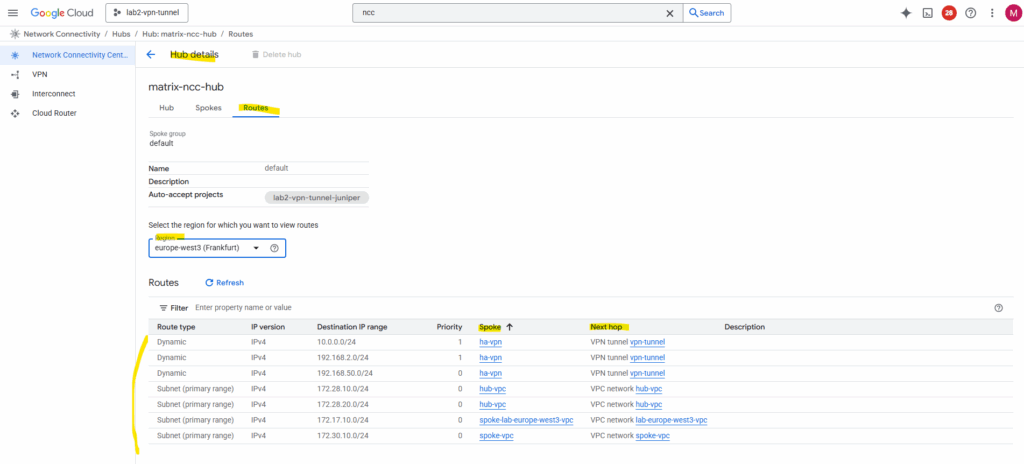

On the NCC hub details page, under Routes, we can see all routes that the hub has learned from its connected spokes and hybrid attachments. We can also see the prefixes it will advertise to on-premises networks (via Cloud Router, in its BGP role) and propagate to all connected VPC spokes.

Advertising refers to the prefixes that the Cloud Router announces to external BGP peers, such as on-premises routers over HA VPN or Interconnect. It’s BGP terminology and applies only to hybrid route exchange.

Propagation refers to the way NCC distributes learned routes between its connected VPC spokes and other hub attachments inside Google Cloud. It’s an internal NCC control-plane process, not a BGP session.

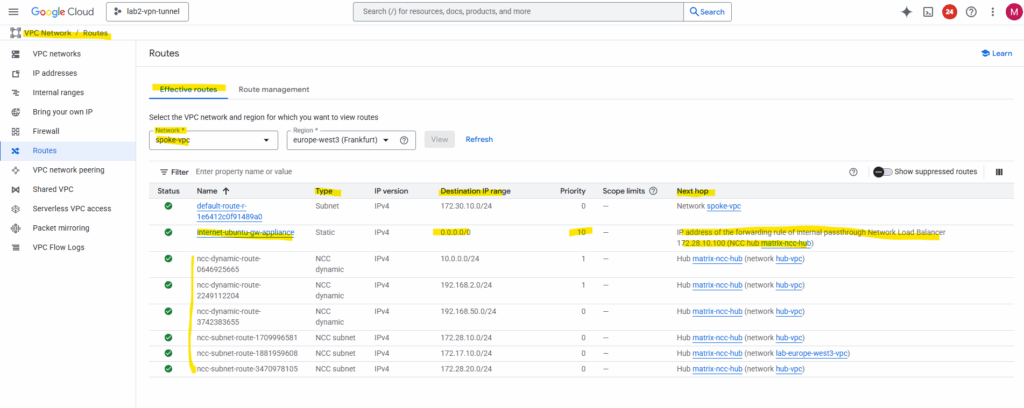

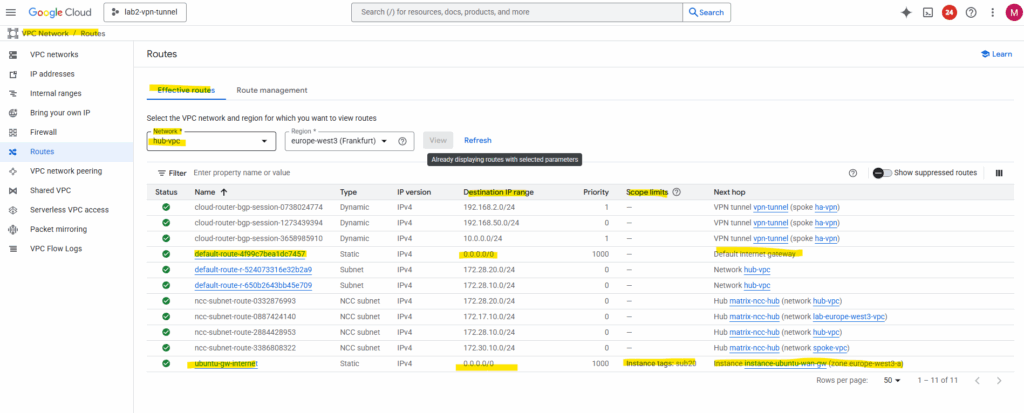

We can also check the effective routes for each VPC network under VPC Network -> Routes.

Here are the routes for my spoke VPC. We can see the custom default route (internet access), whose next hop is the Ubuntu gateway VM (a workaround for an NGFW appliance).

We will also see the propagated NCC routes (connected spoke VPCs).

The destination ranges 10.0.0.0/24, 192.168.2.0/24, and 192.168.50.0/24 are my on-prem networks, by the way, connected through the HA VPN tunnel that is attached as a spoke to the NCC hub.
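To make the effect of these routes a bit more tangible, here is a minimal Python sketch of a longest-prefix-match lookup over a simplified spoke route table. It is only a toy model, not how Google Cloud evaluates routes internally: it ignores route priorities and route types. The three on-prem prefixes are the ones from this post; the next-hop labels and the spoke subnet 10.10.1.0/24 are placeholders I made up for illustration.

```python
import ipaddress

# Simplified route table for a spoke VPC.
# The on-prem prefixes come from this post; the next-hop labels and the
# 10.10.1.0/24 spoke subnet are hypothetical placeholders.
ROUTES = [
    ("0.0.0.0/0", "ubuntu-gateway"),            # custom default route
    ("10.0.0.0/24", "ncc-hub (ha-vpn)"),        # on-prem
    ("192.168.2.0/24", "ncc-hub (ha-vpn)"),     # on-prem
    ("192.168.50.0/24", "ncc-hub (ha-vpn)"),    # on-prem
    ("10.10.1.0/24", "ncc-hub (vpc spoke)"),    # another spoke VPC
]


def next_hop(destination: str) -> str:
    """Return the next hop of the most specific route matching destination."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in ROUTES
        if dest in ipaddress.ip_network(prefix)
    ]
    # The default route matches every IPv4 address, so matches is never empty.
    _, hop = max(matches, key=lambda match: match[0].prefixlen)
    return hop


if __name__ == "__main__":
    for ip in ("192.168.50.10", "10.10.1.7", "8.8.8.8"):
        print(ip, "->", next_hop(ip))
```

The point of the sketch: traffic to on-prem and to other spokes follows the more specific NCC routes, and only destinations that match nothing else fall through to the default route toward the gateway VM.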

And here are the routes for my hub VPC, which contains the HA VPN and the NGFW appliance (my Ubuntu gateway VM).

We can see the custom default route (internet access), whose next hop is again the Ubuntu gateway VM (a workaround for an NGFW appliance). It routes outbound internet traffic from the hub-VPC itself through the same gateway appliance.

More about why this route needs a scope that excludes the gateway VM can be found here.
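The loop risk behind that scope can be illustrated with a small Python sketch. It is a toy model under stated assumptions, not Google Cloud's actual route evaluation: I assume the scope is implemented with a network tag on the custom default route, and the VM names, the tag `use-gateway`, and the priority values are all hypothetical. As in Google Cloud, a lower priority number wins.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set

INTERNET = "default-internet-gateway"


@dataclass(frozen=True)
class Route:
    name: str
    next_hop: str
    priority: int               # lower number wins
    tag: Optional[str] = None   # None: the route applies to every VM


def default_route_for(tags: Set[str], routes: List[Route]) -> Route:
    """Pick the winning default route among those applicable to a VM's tags."""
    applicable = [r for r in routes if r.tag is None or r.tag in tags]
    return min(applicable, key=lambda r: r.priority)


def trace_egress(source: str, vm_tags: Dict[str, Set[str]],
                 routes: List[Route]) -> List[str]:
    """Follow default routes hop by hop until traffic exits or loops."""
    path = [source]
    current = source
    while True:
        hop = default_route_for(vm_tags[current], routes).next_hop
        if hop == INTERNET:
            return path + [hop]
        if hop in path:
            return path + [hop, "LOOP"]
        path.append(hop)
        current = hop


system_default = Route("system-default", INTERNET, priority=1000)

# Unscoped: the custom route also applies to the gateway VM itself.
unscoped = [system_default, Route("via-gateway", "gateway-vm", priority=900)]
no_tags = {"workload-vm": set(), "gateway-vm": set()}

# Scoped: only VMs carrying the (hypothetical) tag use the custom route.
scoped = [system_default,
          Route("via-gateway", "gateway-vm", priority=900, tag="use-gateway")]
tagged = {"workload-vm": {"use-gateway"}, "gateway-vm": set()}

if __name__ == "__main__":
    print(trace_egress("workload-vm", no_tags, unscoped))
    print(trace_egress("workload-vm", tagged, scoped))
```

In the unscoped case the gateway VM's own outbound traffic matches the same custom default route and is sent back to itself. In the scoped case the gateway VM falls back to the system default route and reaches the internet directly.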

With the hybrid Network Connectivity Center (NCC) setup in place, all networks, on-premises and in Google Cloud, are now part of a unified routing domain.

Routes learned via BGP over the HA VPN and routes propagated by the NCC hub enable full connectivity between on-prem resources, the hub-VPC, and all spoke-VPCs.

In this design, any VM or host can reach another across these environments as long as the necessary firewall rules permit the traffic.

This creates a single, integrated hybrid network fabric with centralized egress and policy control.

Links

Distributed, hybrid, and multicloud

https://cloud.google.com/docs/dhm-cloud

Cross-Cloud Network inter-VPC connectivity using Network Connectivity Center

https://cloud.google.com/architecture/ccn-distributed-apps-design/ccn-ncc-vpn-ra

Hub-and-spoke network architecture

https://cloud.google.com/architecture/deploy-hub-spoke-vpc-network-topology

Internal passthrough Network Load Balancer overview

https://cloud.google.com/load-balancing/docs/internal

Internal passthrough Network Load Balancers and connected networks

https://cloud.google.com/load-balancing/docs/internal/internal-tcp-udp-lb-and-other-networks

Code Lab: NCC VPC as a Spoke

https://codelabs.developers.google.com/ncc-vpc-as-spoke
