Deploying NetApp Cloud Volumes ONTAP (CVO) in Azure using NetApp Console (formerly BlueXP) – Part 2

In Part 1 of this series, we completed the initial preparation and onboarding within the NetApp Console (formerly BlueXP), including Azure subscription setup, feature registration, and account associations.

With the foundation in place, it’s now time to move on to the actual deployment of a Cloud Volumes ONTAP HA pair in Microsoft Azure directly from the NetApp Console.

In this part, we’ll walk through the Cloud Volumes ONTAP system creation wizard step by step, including configuration choices for high availability, networking, VM sizes, and services.

In Part 3 (coming soon), we will look at how to create storage objects and data services such as NFS and CIFS/SMB, including SVM creation and volume provisioning.

Deploy a Cloud Volumes ONTAP HA pair in Azure

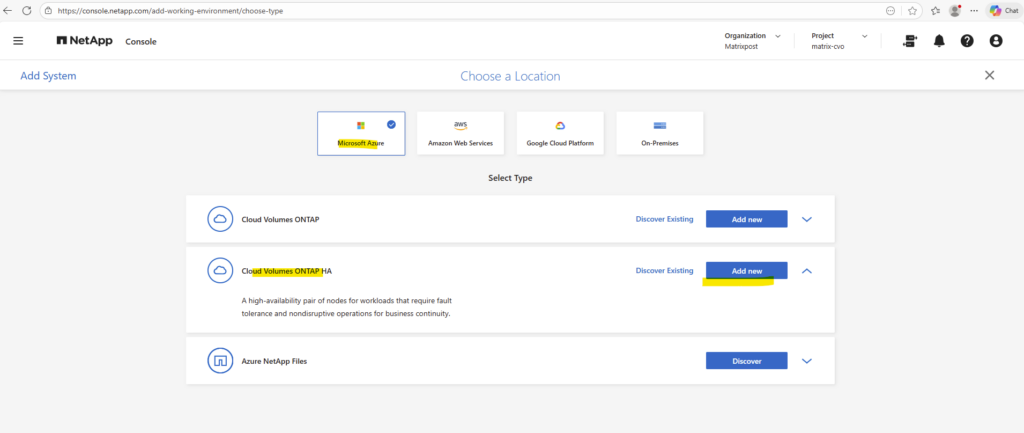

If you want to deploy a Cloud Volumes ONTAP HA pair in Azure, you need to create an HA system in the NetApp Console as shown below.

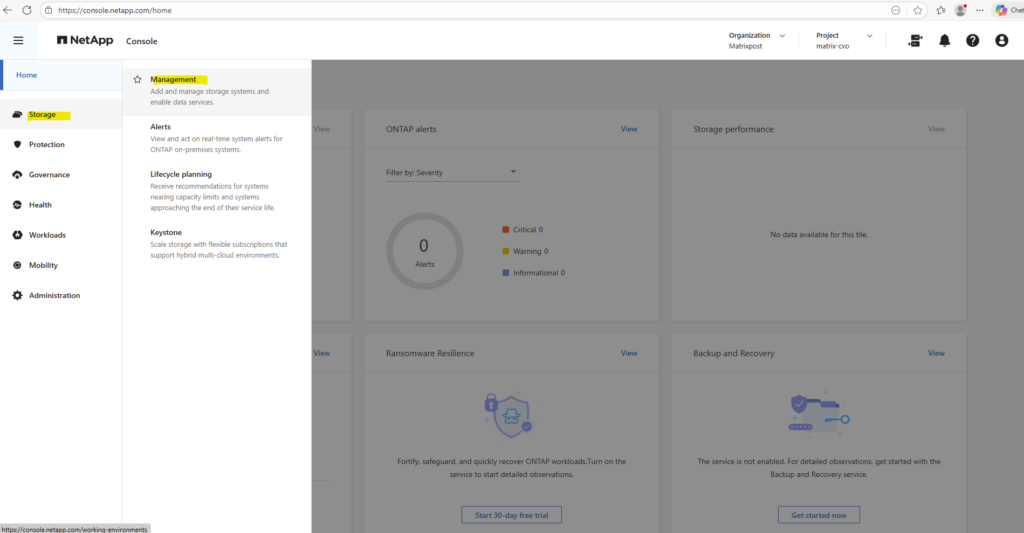

From the left navigation menu, select Storage > Management.

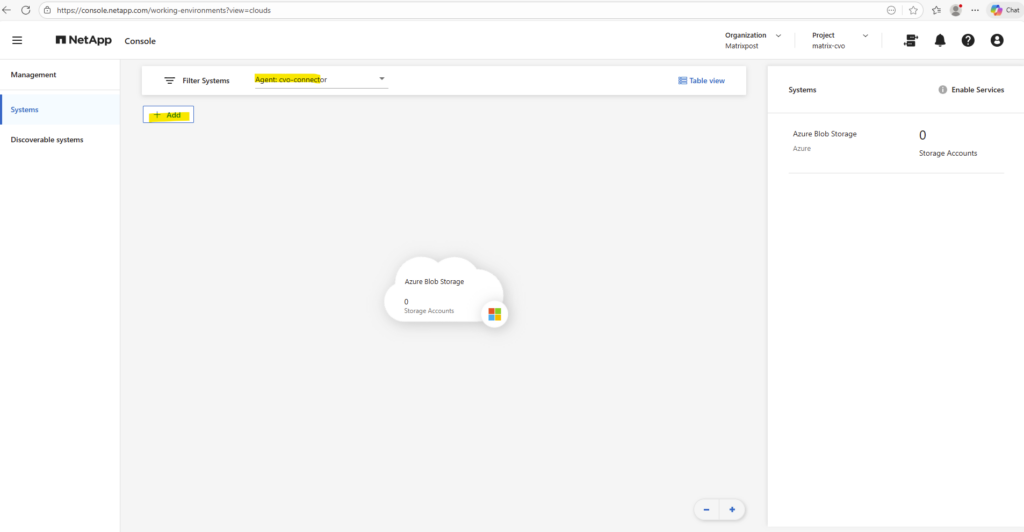

Select your previously deployed Agent (Connector VM) in your Azure subscription and click + Add.

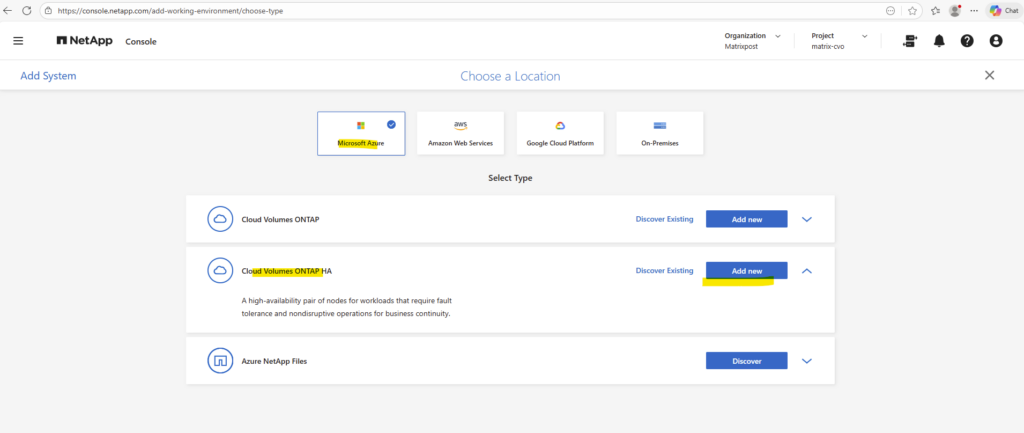

Select Microsoft Azure, and under Cloud Volumes ONTAP HA, click Add new.

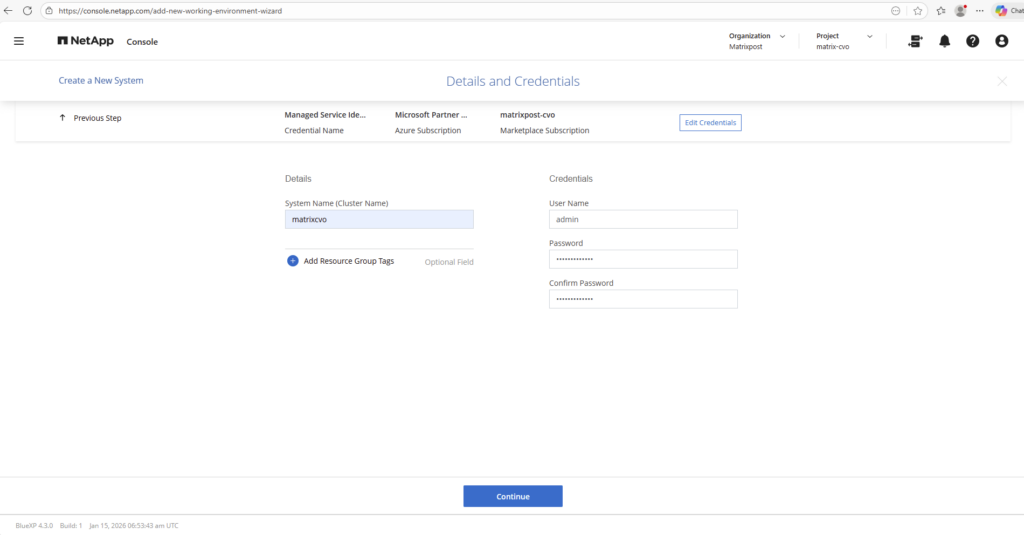

Define the cluster name and set the administrator credentials that will be used to manage the ONTAP operating system.

While `admin` is the default and most commonly used username, you can choose a different one if required by your security or naming standards.

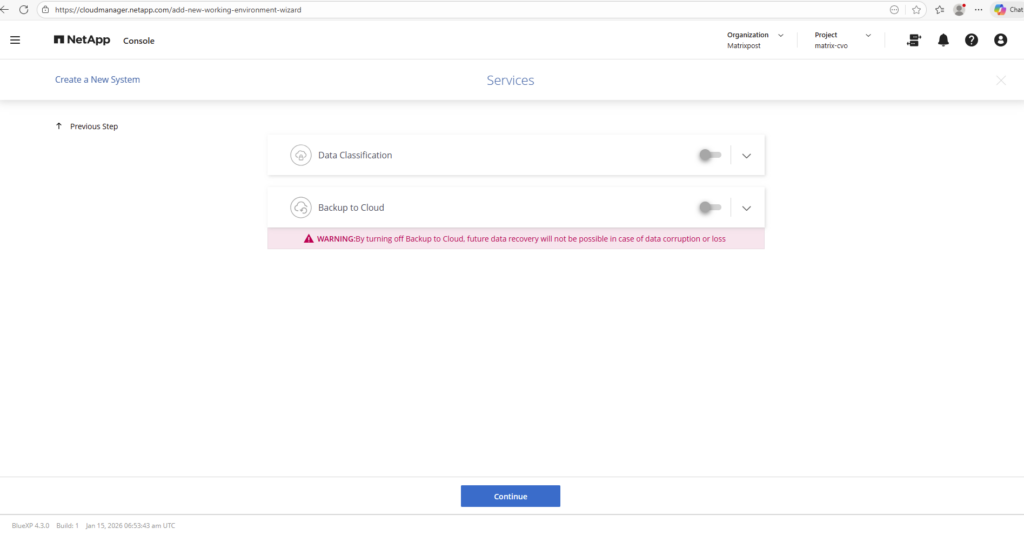

For my lab environment, I disabled the Data Classification and Backup to Cloud features for now.

Data Classification provides visibility into the data stored on Cloud Volumes ONTAP by automatically scanning volumes and identifying sensitive information, data types, and usage patterns. This helps with compliance, security assessments, and understanding where critical or personal data resides.

Backup to Cloud enables automated, policy-based backups of ONTAP volumes to low-cost object storage, providing an additional layer of protection for disaster recovery and long-term retention independent of the primary system.

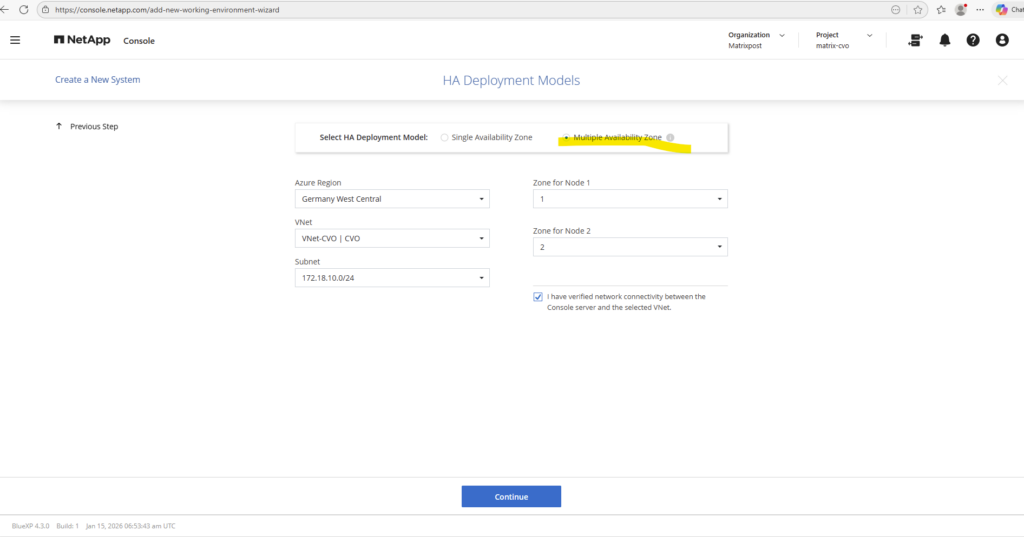

For the HA deployment mode, I chose to deploy the Cloud Volumes ONTAP nodes across multiple Azure Availability Zones to ensure high availability and fault tolerance.

This setup protects the system against zone-level failures by distributing the nodes and storage infrastructure across physically separate data centers. In the event of an outage in one zone, services can continue running from the remaining zone without manual intervention.
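To double-check the zone placement after deployment, a minimal Python sketch could compare the `zones` field of the two node VMs. It assumes the VM details were exported as JSON, for example with `az vm list -g <resource-group> -o json`, whose entries include a `zones` list for zonal VMs. The VM names and zones in the example are hypothetical.

```python
import json


def spans_multiple_zones(vms: list[dict], node_names: set[str]) -> bool:
    """Return True if the named node VMs sit in at least two different availability zones.

    `vms` is assumed to be the parsed JSON output of `az vm list`, where each entry
    carries a `name` and a `zones` list (null/None for VMs without a zone).
    """
    zones: set[str] = set()
    for vm in vms:
        if vm.get("name") in node_names:
            # A VM without a zone contributes nothing, so it can never make the pair "spread".
            zones.update(vm.get("zones") or [])
    return len(zones) >= 2


# Hypothetical VM names and zones, for illustration only.
vms = json.loads("""[
  {"name": "matrixcvo-vm1", "zones": ["1"]},
  {"name": "matrixcvo-vm2", "zones": ["2"]},
  {"name": "connector-vm", "zones": null}
]""")
print(spans_multiple_zones(vms, {"matrixcvo-vm1", "matrixcvo-vm2"}))  # True
```

If both nodes report the same zone (or none), the function returns False, which would indicate a single-zone deployment.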

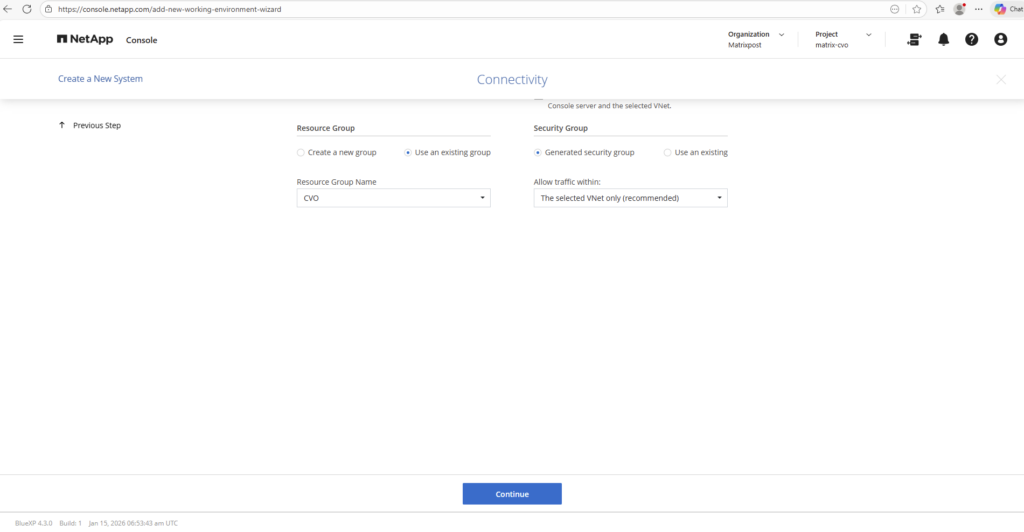

For the security group, I chose to generate a new, dedicated one.

During deployment, Cloud Volumes ONTAP allows you to either automatically generate the required security groups or use existing ones.

Choosing “Generate Security Groups” lets NetApp create all necessary inbound and outbound rules automatically based on best practices, which simplifies the setup and reduces the risk of misconfiguration.

Alternatively, using existing security groups is useful in environments with strict networking or security policies where firewall rules must be managed manually.

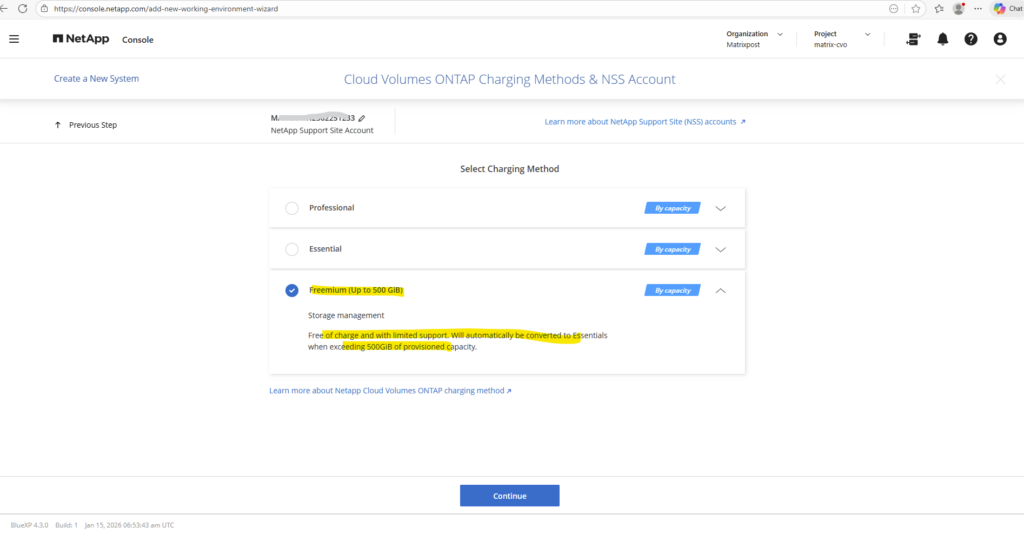

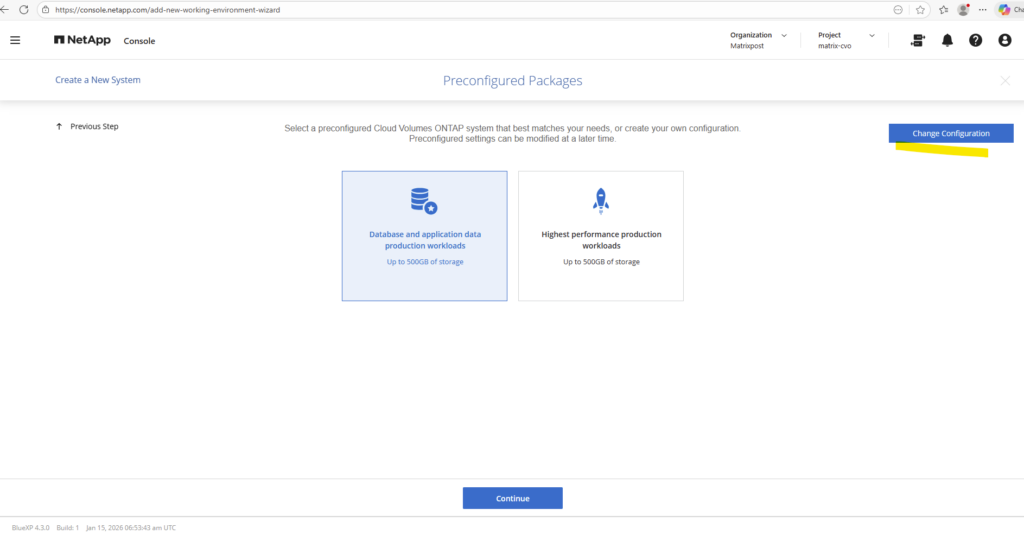

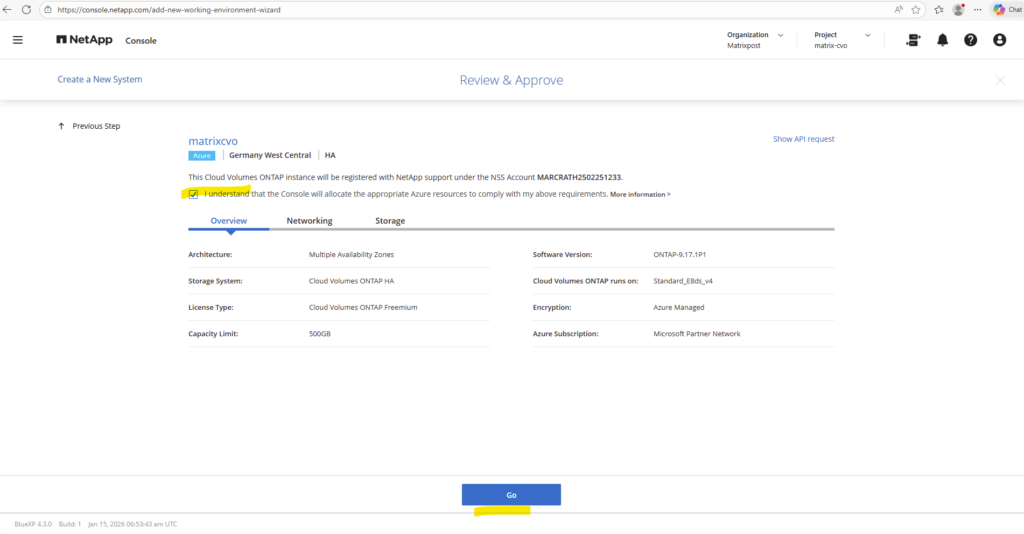

For my lab environment, I selected the Freemium charging model, which allows usage of Cloud Volumes ONTAP with up to 500 GiB of provisioned capacity at no cost.

This option is ideal for testing, learning, and proof-of-concept scenarios, as it provides full functionality without incurring licensing charges. It’s a convenient way to explore ONTAP features before moving to a paid subscription model.

However, standard Azure infrastructure costs (compute, storage, networking, and load balancer resources) will still apply.
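As a quick sanity check before provisioning volumes, the 500 GiB Freemium limit can be expressed as a simple sum. This is a minimal sketch that only adds up provisioned volume sizes; the sizes are hypothetical, and it does not model how the NetApp Console itself accounts for capacity.

```python
FREEMIUM_LIMIT_GIB = 500  # provisioned-capacity ceiling of the Freemium charging model


def fits_freemium(volume_sizes_gib: list[float]) -> bool:
    """Return True if the total provisioned capacity stays within the Freemium limit."""
    return sum(volume_sizes_gib) <= FREEMIUM_LIMIT_GIB


def remaining_freemium_gib(volume_sizes_gib: list[float]) -> float:
    """Capacity (GiB) still available under the Freemium limit; negative if exceeded."""
    return FREEMIUM_LIMIT_GIB - sum(volume_sizes_gib)


# Hypothetical lab volumes: 200 GiB for NFS plus 150 GiB for SMB
lab_volumes = [200, 150]
print(fits_freemium(lab_volumes))           # True
print(remaining_freemium_gib(lab_volumes))  # 150
```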

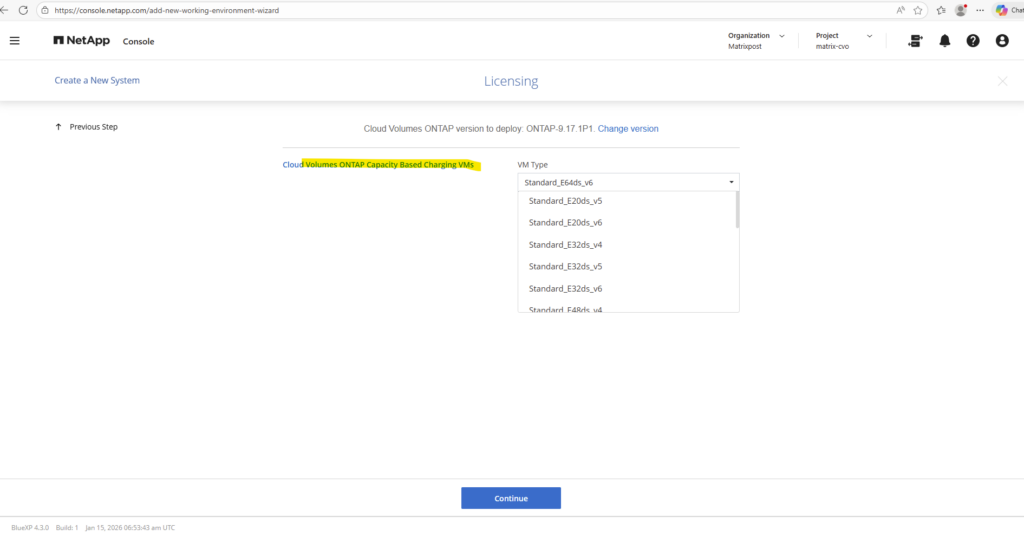

Click Change Configuration to manually select the desired VM size for the node VMs.

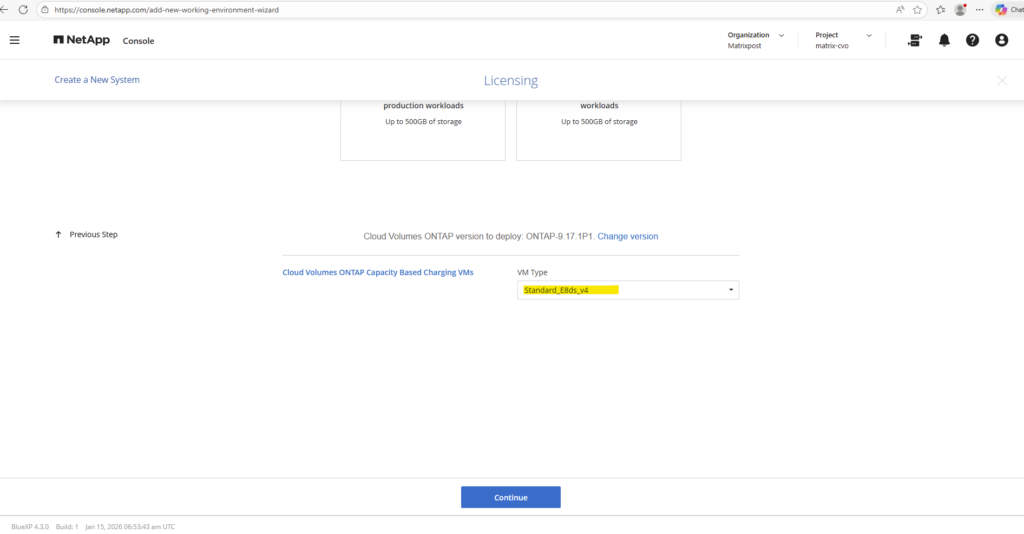

Select the desired VM Type.

For the virtual machine size, I selected Standard_D8s_v4, which provides a solid balance of CPU, memory, and overall performance for Cloud Volumes ONTAP in a lab environment. This VM size is a good fit for testing and evaluation while keeping Azure infrastructure costs reasonable.

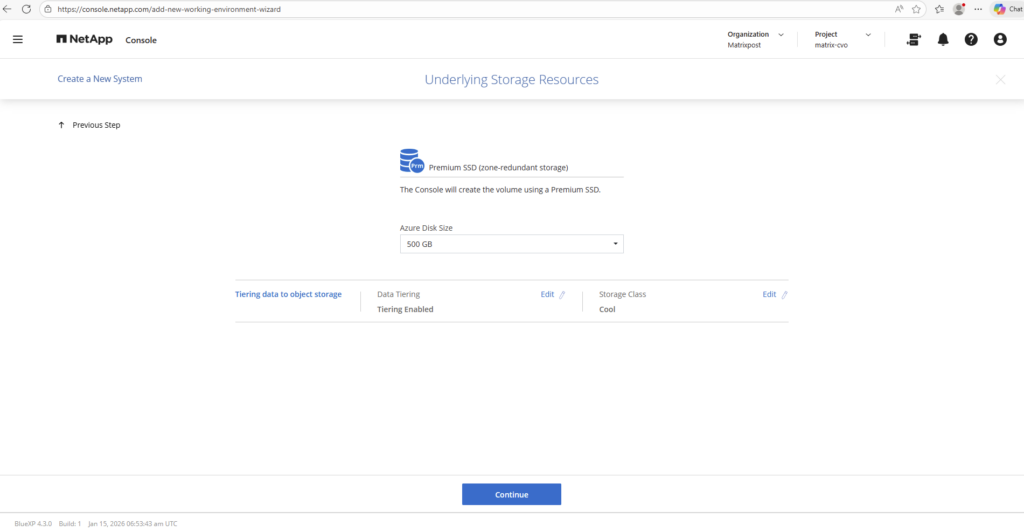

For data tiering, I enabled automatic tiering to Azure Blob Storage and selected the Cool storage tier. This allows inactive data blocks to be moved from performance disks to lower-cost object storage, reducing overall storage costs while keeping active data on high-performance tiers. It’s an efficient way to optimize storage usage in a lab or production environment without impacting performance-critical workloads.
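To make the idea of inactive blocks concrete, the Python sketch below models the tiering decision as a simple age threshold. The 31-day cooling period is an assumption based on ONTAP's default minimum cooling period for the `auto` tiering policy; the actual policy and value depend on your volume settings, and the block data is made up.

```python
from dataclasses import dataclass


@dataclass
class Block:
    block_id: int
    days_since_last_access: int


def blocks_to_tier(blocks: list[Block], cooling_days: int = 31) -> list[int]:
    """Return the IDs of blocks that have stayed inactive for at least `cooling_days`.

    Simplified model: ONTAP's real tiering scan runs in the background and also
    depends on the volume's tiering policy; this only illustrates the cold/hot split.
    """
    return [b.block_id for b in blocks if b.days_since_last_access >= cooling_days]


blocks = [Block(1, 2), Block(2, 45), Block(3, 31)]
print(blocks_to_tier(blocks))  # [2, 3]
```

Blocks that stay below the threshold remain on the performance disks, which is why frequently accessed data is not affected.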

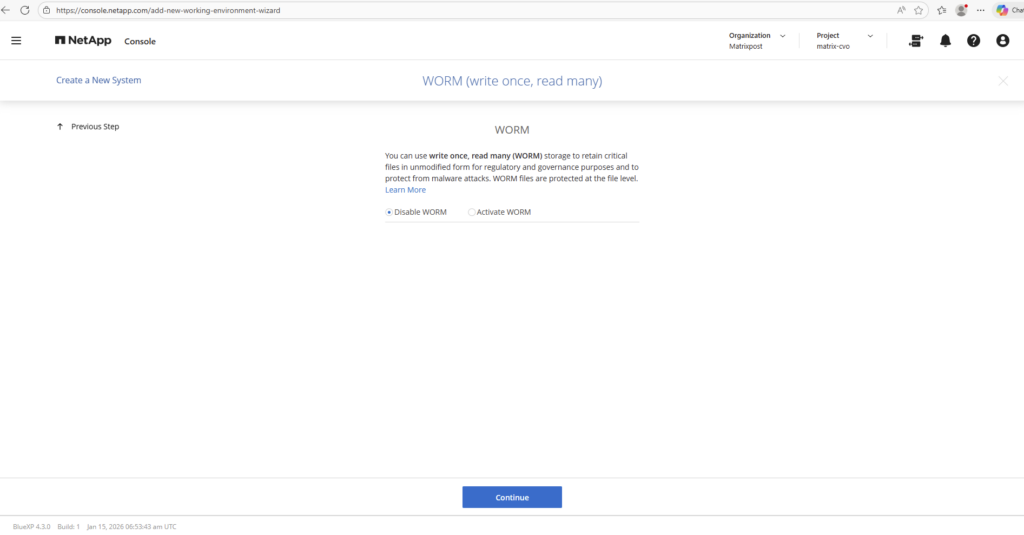

The WORM (Write Once, Read Many) feature, which provides immutable storage for compliance and data protection use cases, was disabled for this deployment.

Since this is a lab environment, immutability and regulatory retention requirements were not necessary. Disabling WORM also keeps the setup more flexible for testing and reconfiguration scenarios.

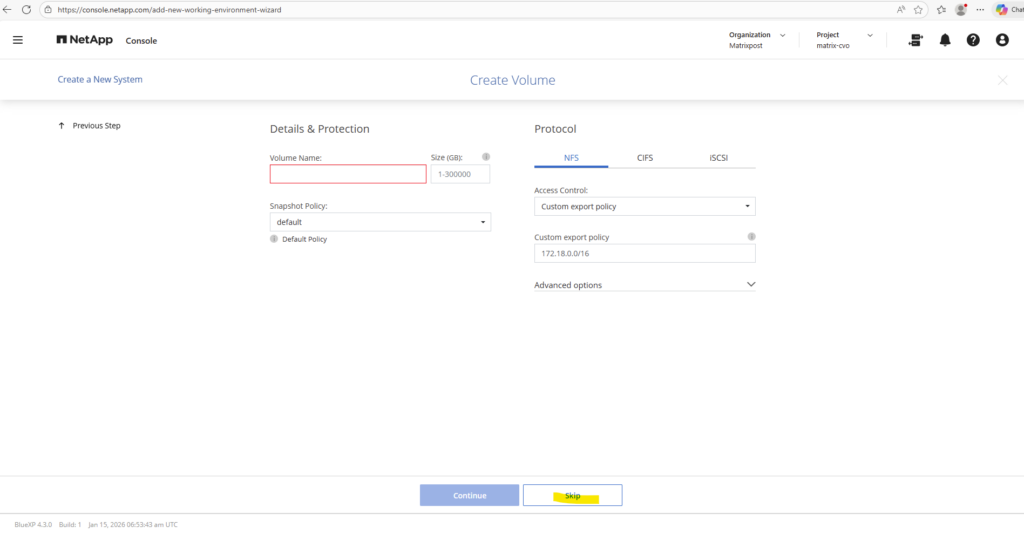

I will skip the volume creation section here.

Volumes can be created later directly from the NetApp Console or ONTAP System Manager once the environment is fully deployed and configured.

This approach keeps the initial setup minimal and allows more flexibility when defining storage requirements.

Finally, click Go to deploy the system in Azure.

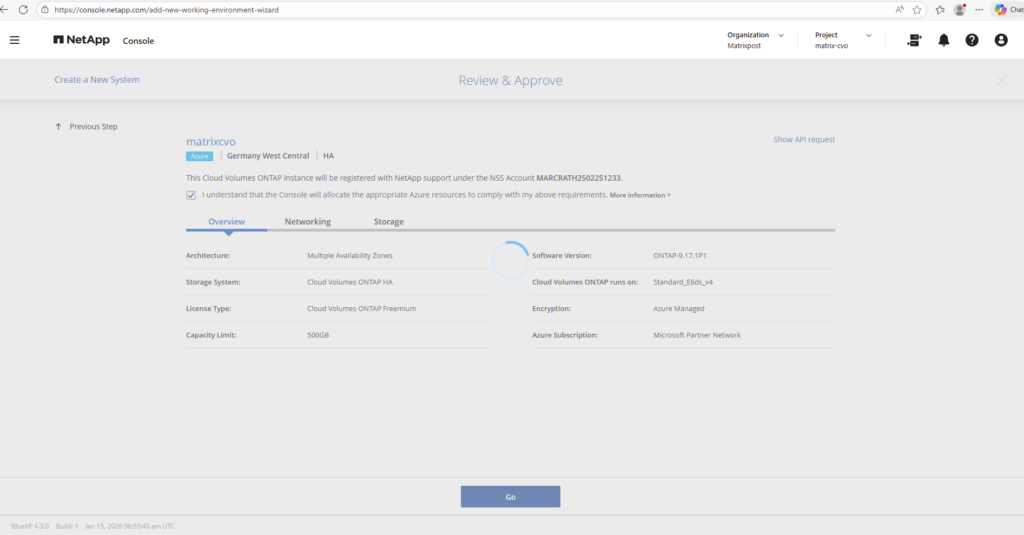

The deployment, triggered and orchestrated from the NetApp Console, now starts.

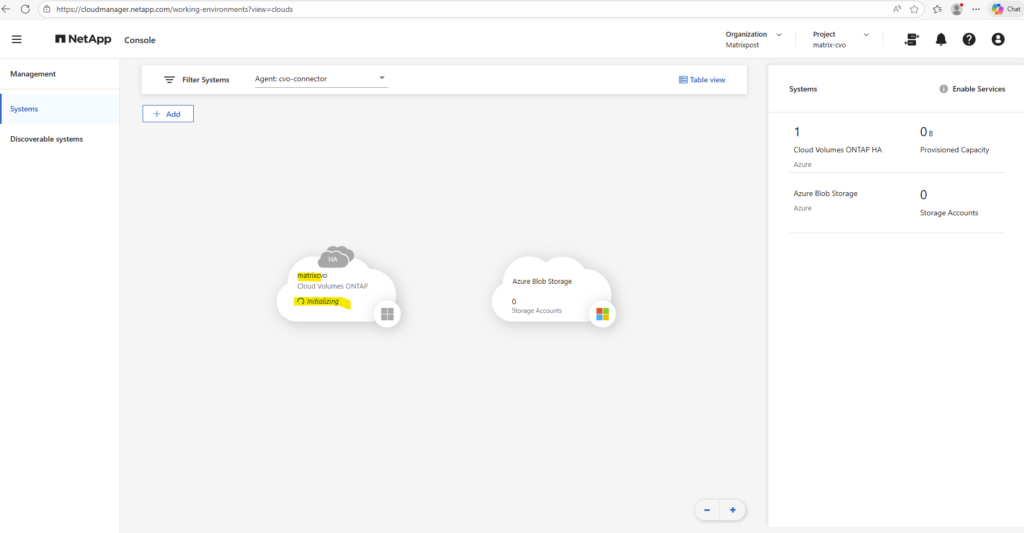

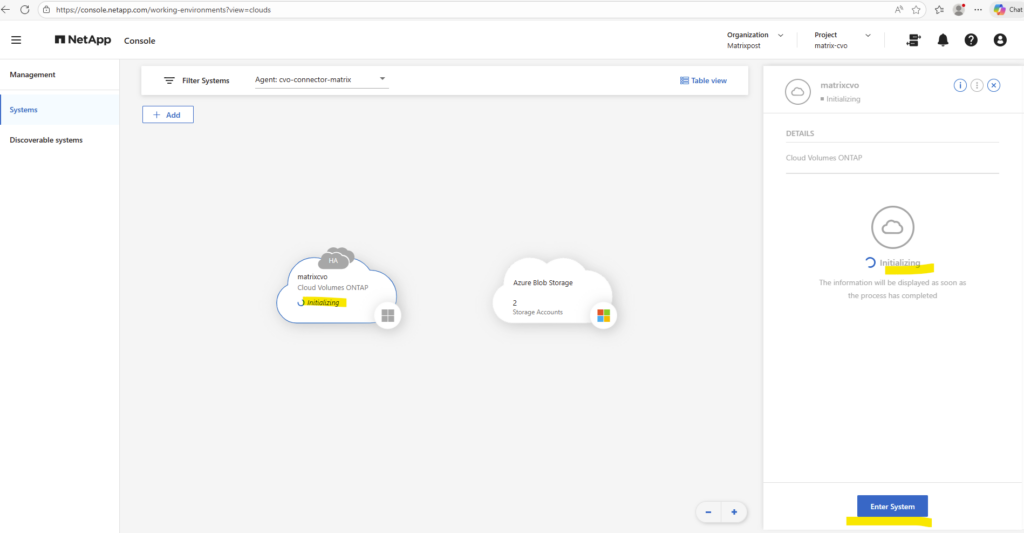

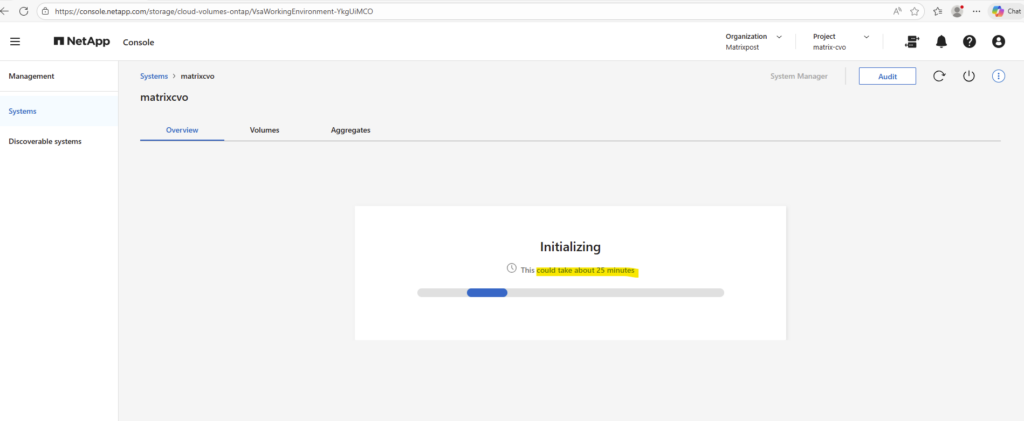

During deployment, the system's state is shown as Initializing.

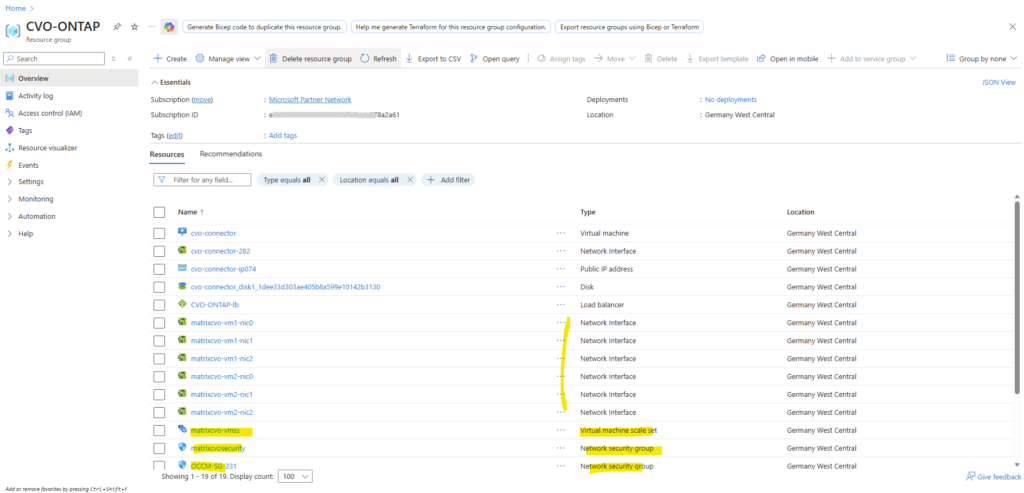

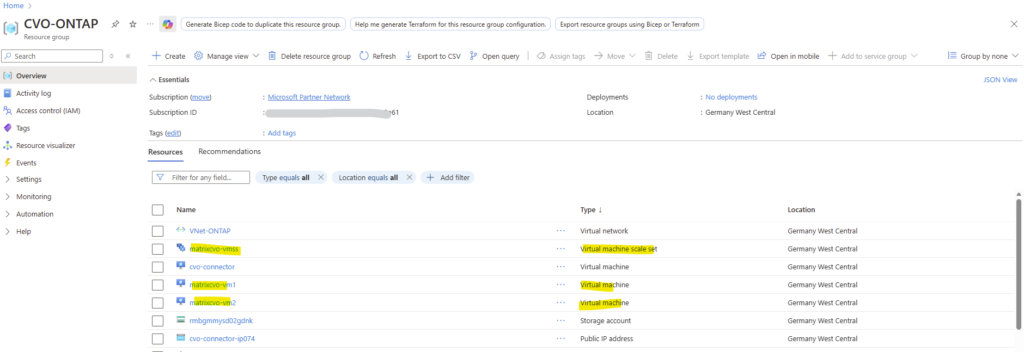

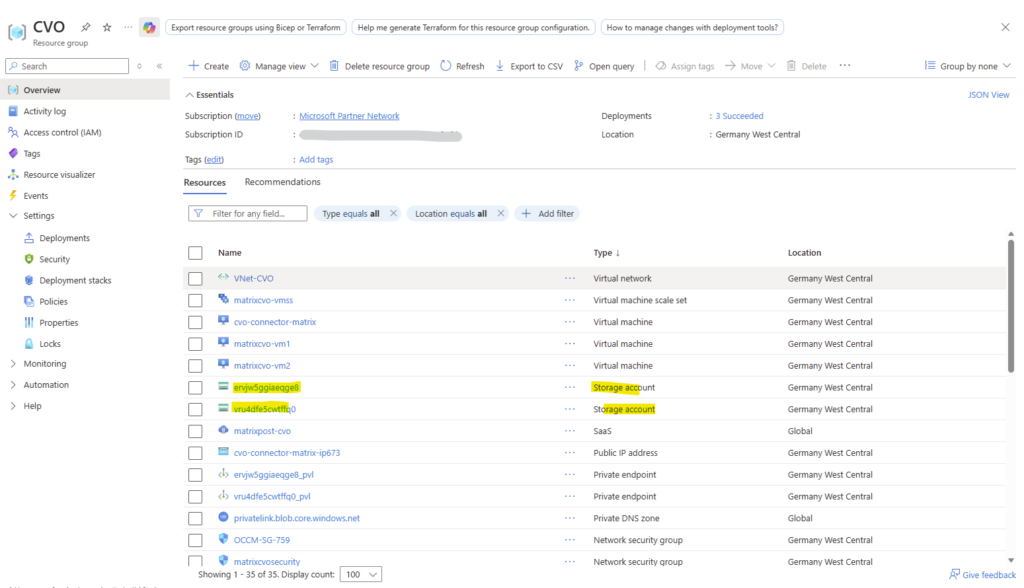

Meanwhile, while the NetApp Console still shows Initializing, we can already see the resources being created in Azure.

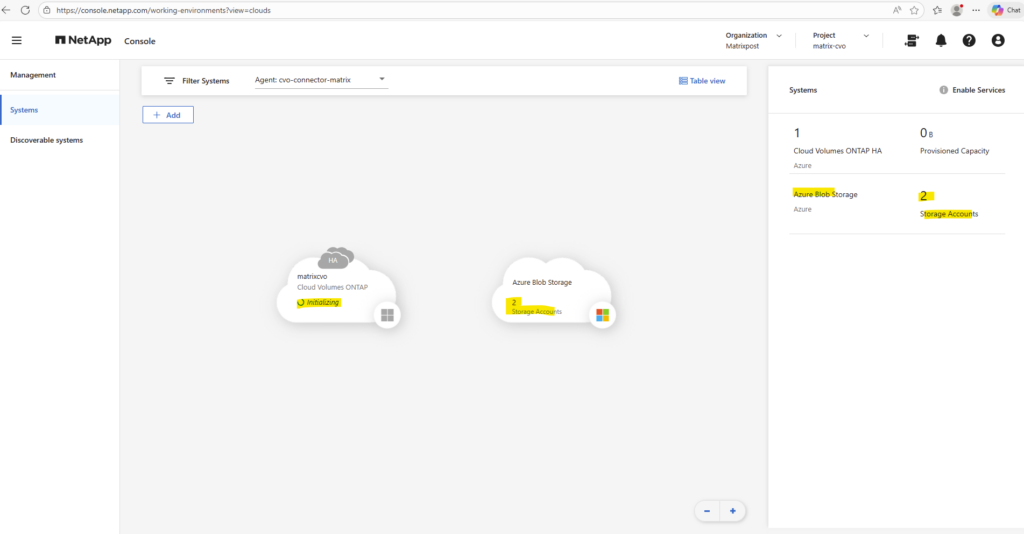

Azure storage accounts were also provisioned.

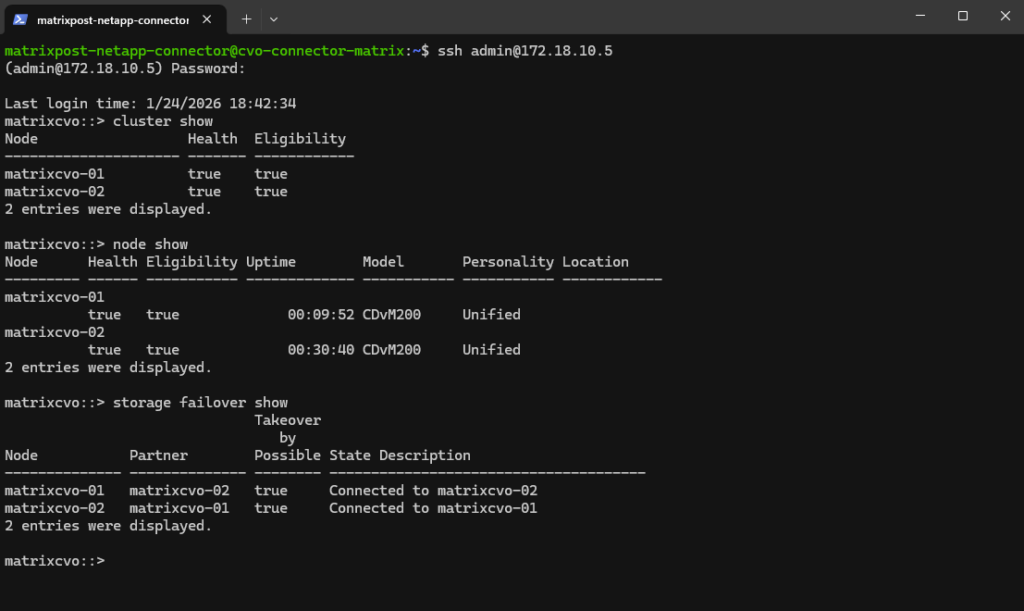

While the NetApp Console still shows Initializing, ONTAP itself already looks healthy.

Below, I SSH into the ONTAP cluster from the Agent (Connector VM), which I reach over the internet via SSH using the Agent’s public IP address.

matrixcvo::> cluster show

matrixcvo::> node show

matrixcvo::> storage failover show

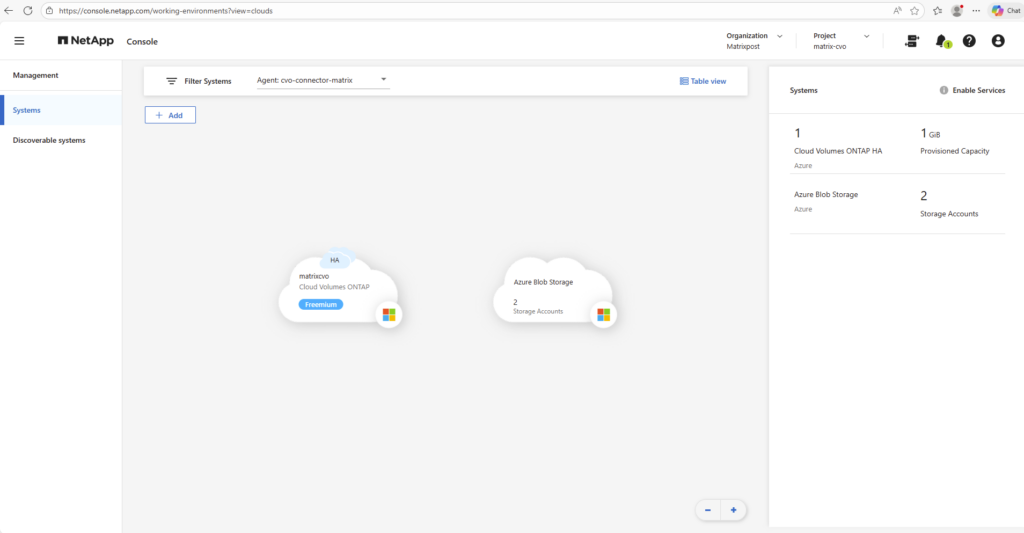

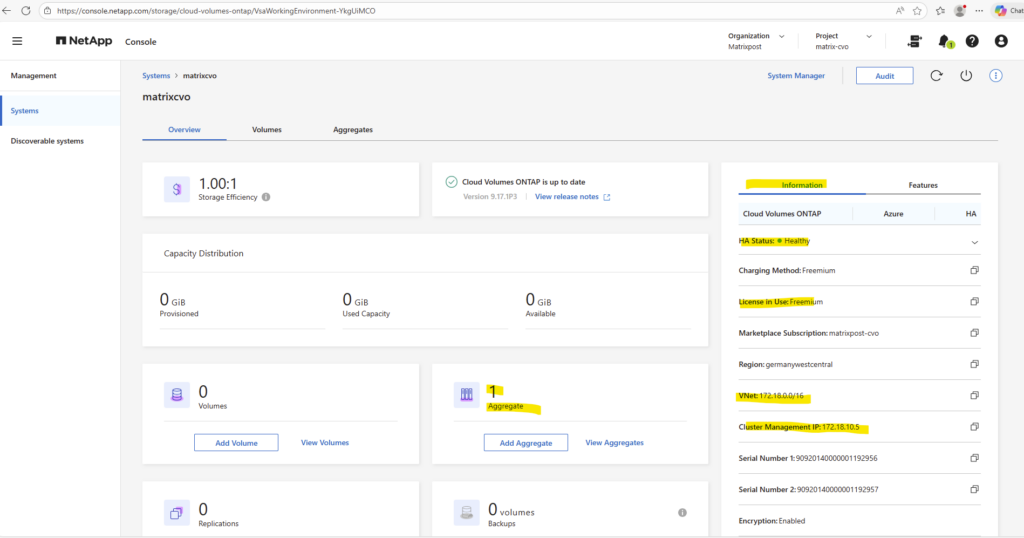

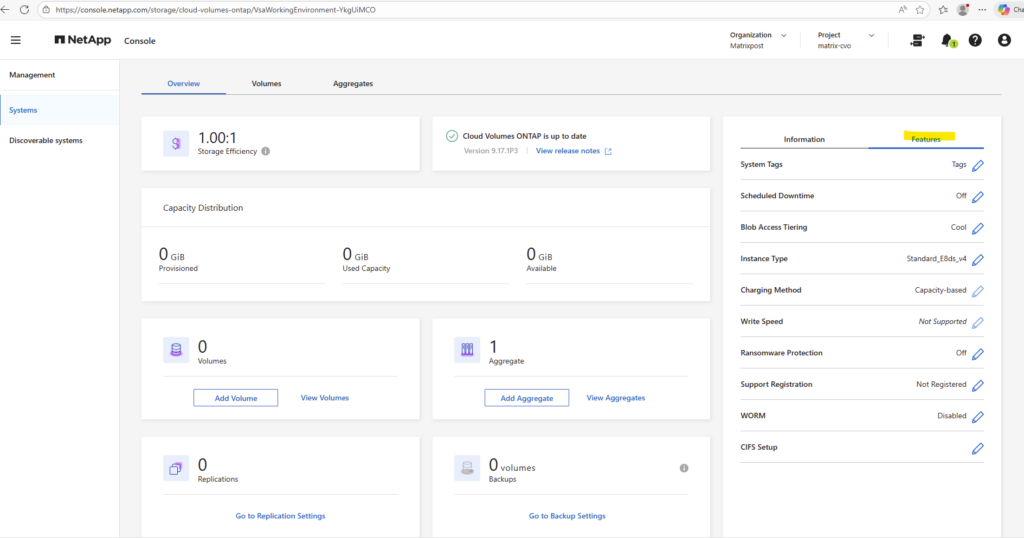

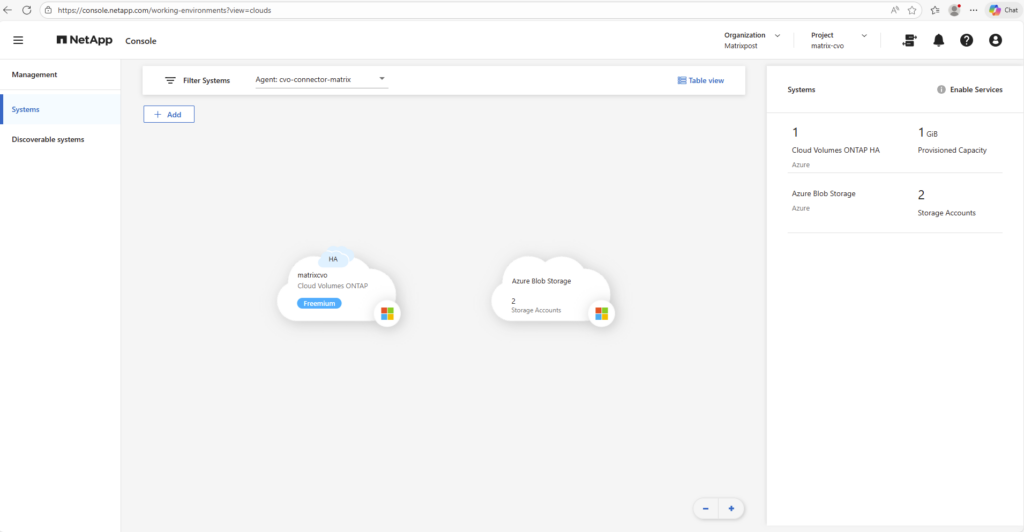

In my case, it took around 70 minutes instead of the estimated 25 minutes until the NetApp Console also showed the Cloud Volumes ONTAP (Freemium) system in a normal, healthy state.

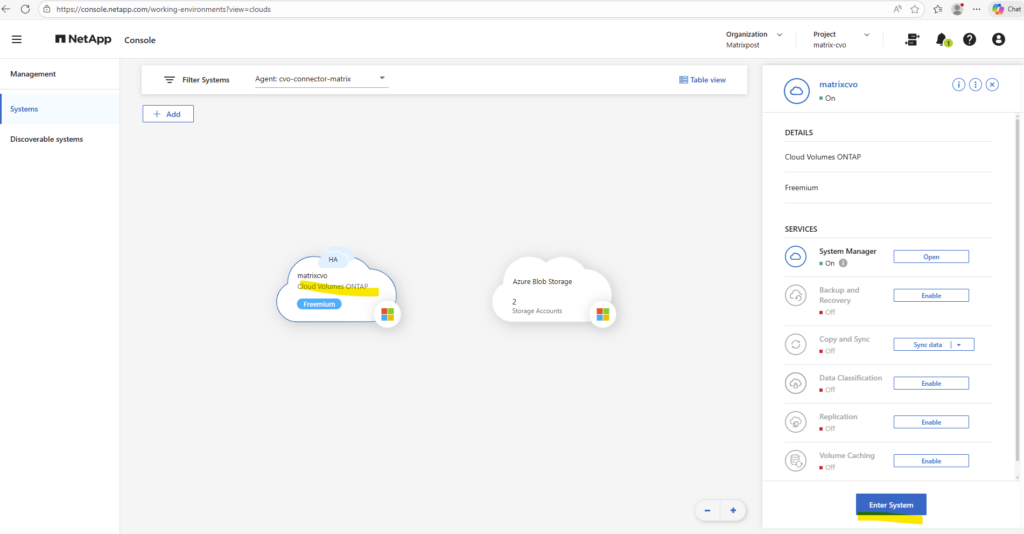

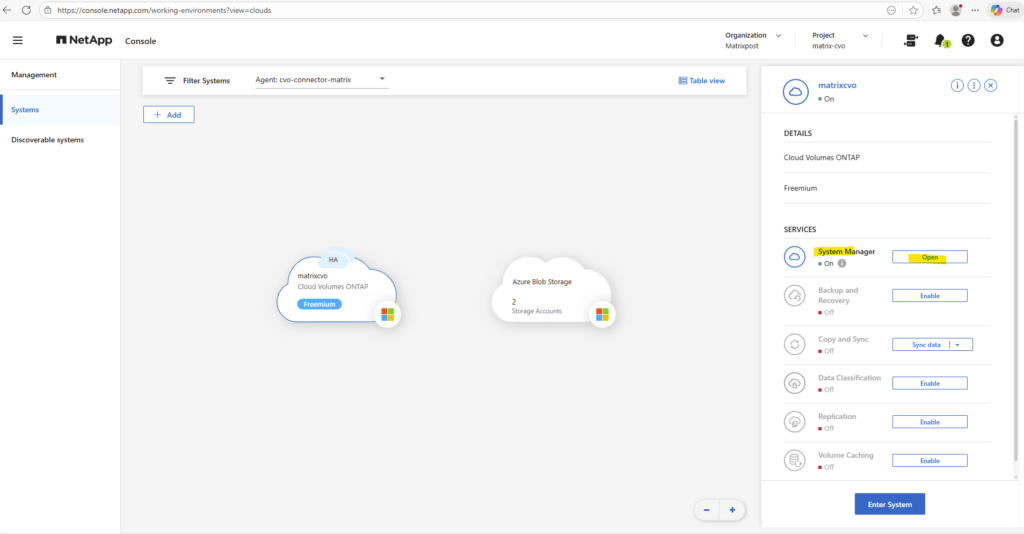

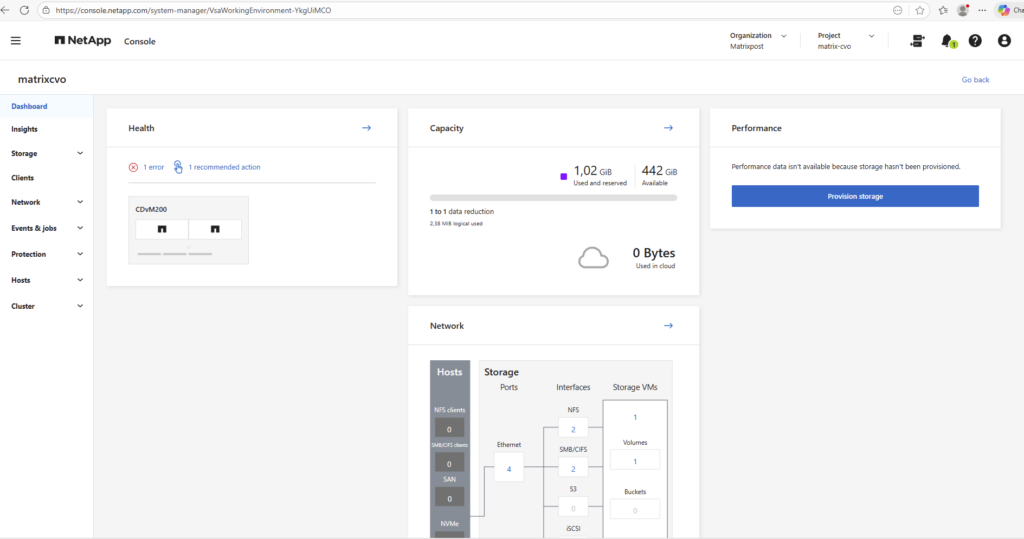

Under Management > Systems, selecting the Cloud Volumes ONTAP tile and clicking Enter System on the right-hand side opens the ONTAP System Manager interface.

From there, the cluster can be fully managed, including storage, networking, and system configuration.

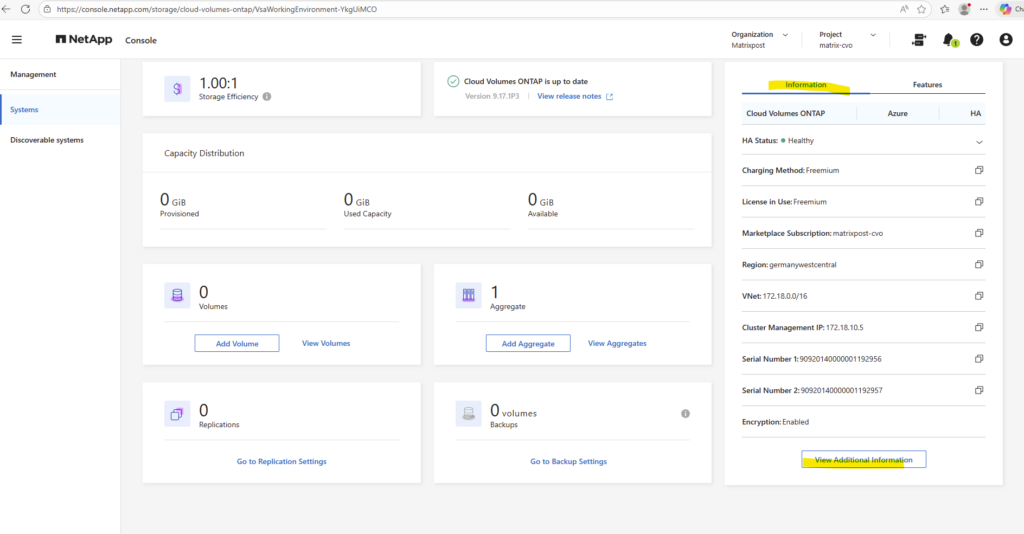

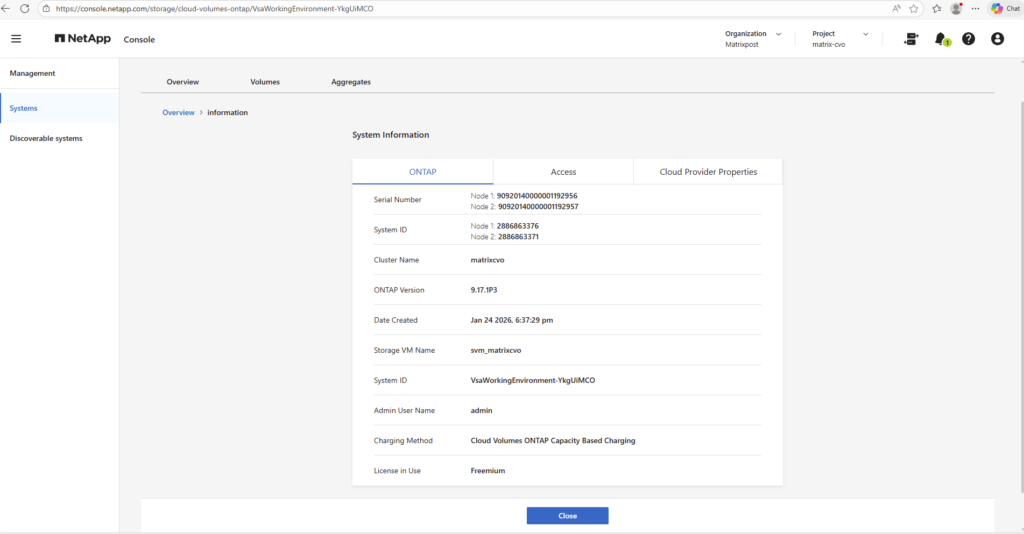

Click View Additional Information.

Clicking the Cloud Volumes ONTAP tile and selecting System Manager from the right-hand panel opens the ONTAP System Manager web interface, which provides full access to cluster configuration, storage management, networking, and monitoring features.

The familiar ONTAP web interface opens, offering the same experience and management capabilities as a traditional on-premises deployment.

Shut down the HA pair gracefully in Azure

For lab environments, it’s recommended to shut down the Cloud Volumes ONTAP HA pair when not in use to avoid unnecessary Azure infrastructure costs.

Since the HA nodes run on dedicated virtual machines, keeping them powered on 24/7 can quickly generate avoidable expenses.

Performing a graceful shutdown ensures data consistency while helping to significantly reduce ongoing cloud costs.
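To put rough numbers on this, the Python sketch below compares a month of 24/7 operation with lab-style usage for the three VMs (two CVO nodes plus the Connector). The hourly rate is a placeholder, not an actual Azure price, and using one rate for all three VMs is a simplification. Only compute is modeled, since managed disks and storage accounts are billed regardless of the VMs' power state.

```python
HOURS_PER_MONTH = 730  # common approximation used for monthly cloud pricing


def monthly_compute_cost(hourly_rate: float, vm_count: int, hours_running: float) -> float:
    """Compute-only cost for `vm_count` VMs that share the same hourly rate."""
    return hourly_rate * vm_count * hours_running


rate = 0.50  # placeholder rate per VM-hour, NOT a real Azure price
always_on = monthly_compute_cost(rate, vm_count=3, hours_running=HOURS_PER_MONTH)
lab_hours = 8 * 20  # e.g. 8 hours on 20 lab days per month
lab_usage = monthly_compute_cost(rate, vm_count=3, hours_running=lab_hours)
print(f"always on: {always_on:.2f}, lab usage: {lab_usage:.2f}, saved: {always_on - lab_usage:.2f}")
# always on: 1095.00, lab usage: 240.00, saved: 855.00
```

With these placeholder numbers, running the VMs only during lab hours cuts the compute portion of the bill by roughly 78%.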

When using `system node halt -node *`, ONTAP performs a coordinated shutdown and requires healthy HA communication and successful LIF migration between nodes.

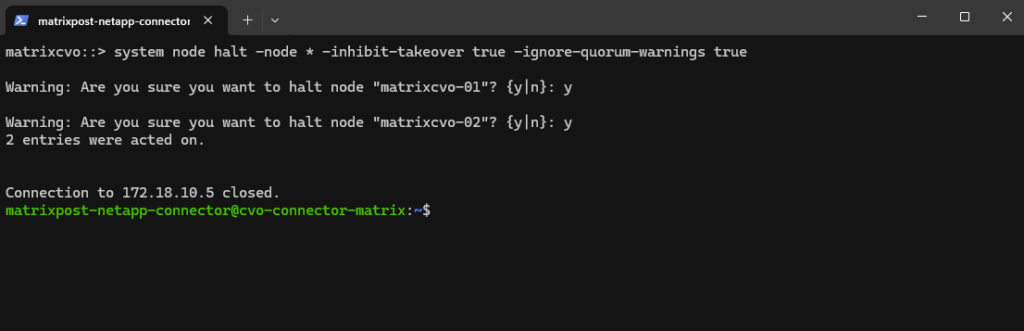

matrixcvo::> system node halt -node * -inhibit-takeover true -ignore-quorum-warnings true

If one node is already down or unreachable, the command fails by design to prevent data loss or loss of management access.

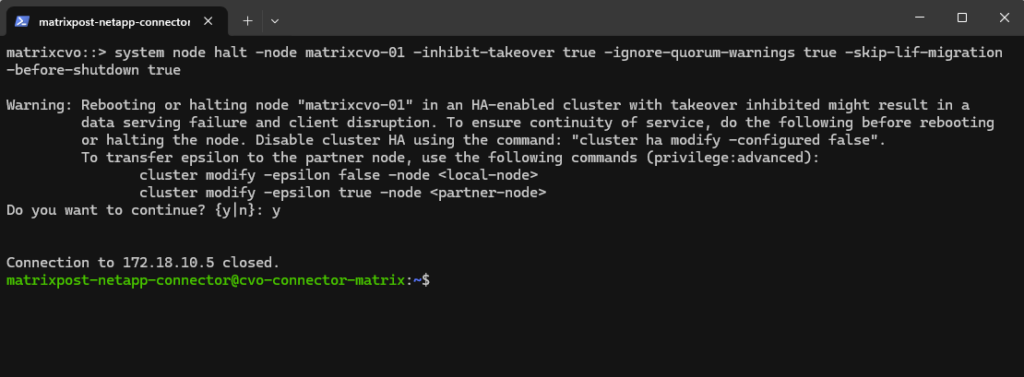

In such cases, a forced single-node shutdown using `-skip-lif-migration-before-shutdown` is required, as shown below for the first node (matrixcvo-01). It should only be used when HA is already broken and downtime is acceptable.

matrixcvo::> system node halt -node matrixcvo-01 -inhibit-takeover true -ignore-quorum-warnings true -skip-lif-migration-before-shutdown true
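Because the right command depends on whether HA is still healthy, the decision can be captured in a small helper. This is a hypothetical Python sketch that only assembles the two ONTAP CLI commands used above as strings; it does not connect to the cluster, and the `ha_healthy` flag is something you determine yourself, for example from `storage failover show`.

```python
def halt_commands(running_nodes: list[str], ha_healthy: bool) -> list[str]:
    """Build the ONTAP CLI halt command(s) for the current HA state (strings only)."""
    common = "-inhibit-takeover true -ignore-quorum-warnings true"
    if ha_healthy:
        # Coordinated shutdown of both nodes; needs working HA and LIF migration.
        return [f"system node halt -node * {common}"]
    # HA already broken: halt each remaining node individually and skip LIF migration.
    return [
        f"system node halt -node {node} {common} -skip-lif-migration-before-shutdown true"
        for node in running_nodes
    ]


for cmd in halt_commands(["matrixcvo-01"], ha_healthy=False):
    print(f"matrixcvo::> {cmd}")
```

Keeping the forced variant behind an explicit flag makes it harder to skip LIF migration by accident while HA is still intact.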

When Cloud Volumes ONTAP is shut down using the ONTAP CLI, the system enters a safe halted state indicated by `SRM_F_POWEROFF-VM`. This is expected behavior and confirms that the storage system was cleanly stopped before the virtual machines are deallocated.

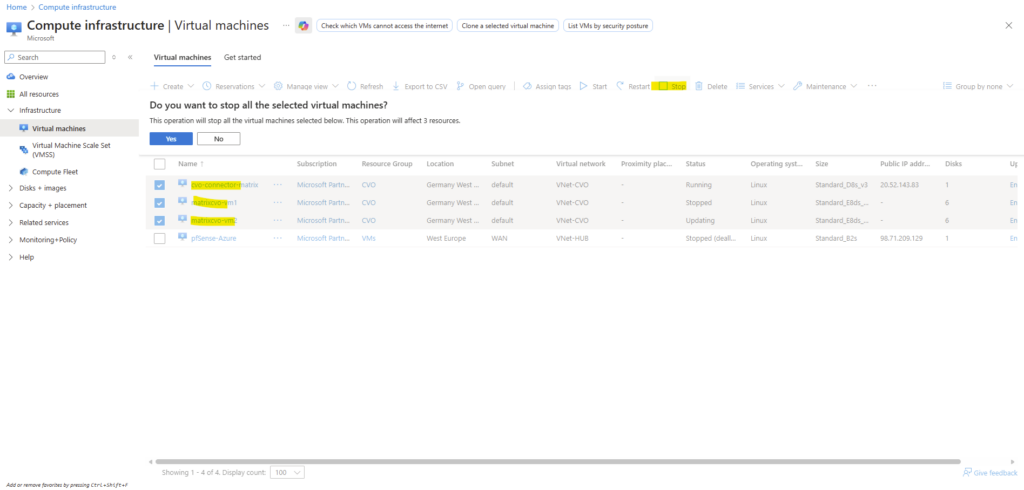

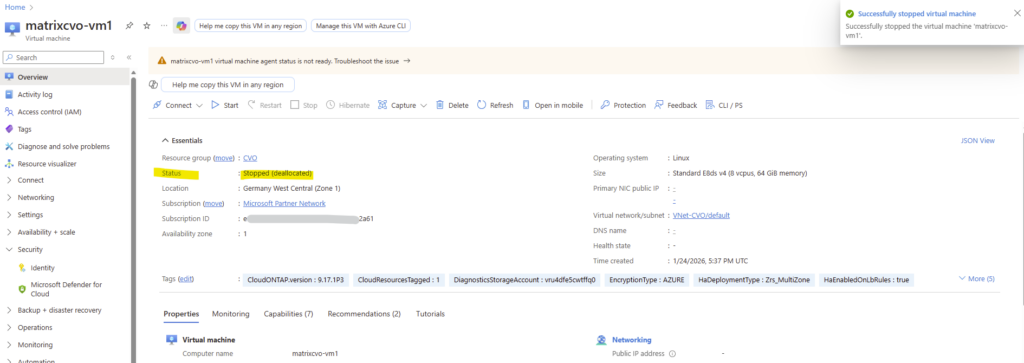

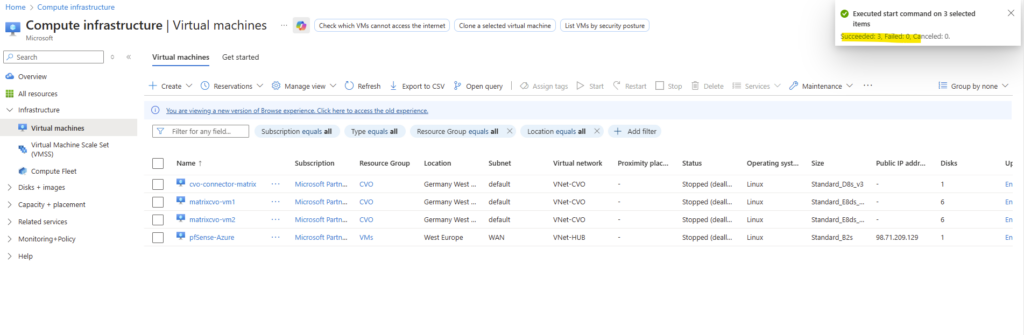

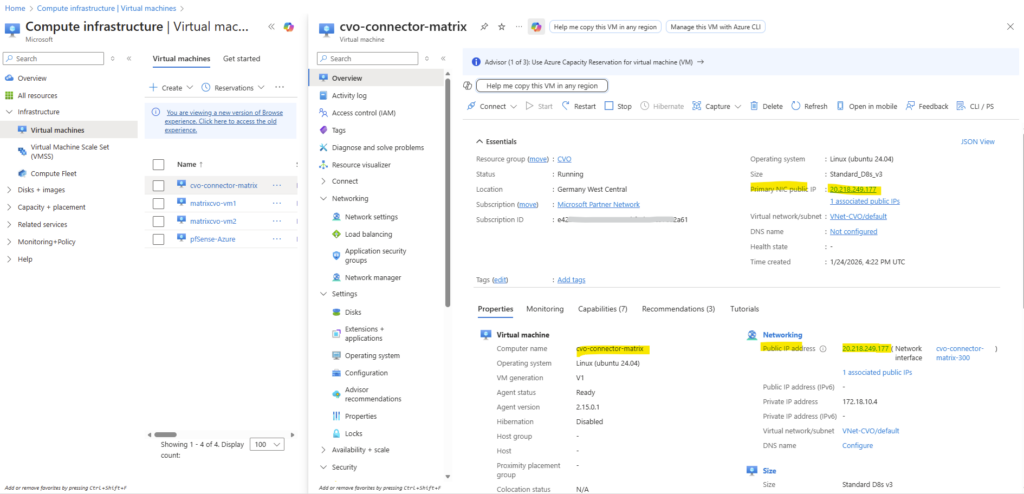

To deallocate both node VMs and the Connector VM, click Stop in the Azure portal. The Connector VM's public IP address can also be released to avoid costs.

The public IP assigned to the BlueXP Connector is only required for administrative SSH access; it is not used for any Cloud Volumes ONTAP or NetApp Console communication.

BlueXP (now the NetApp Console) operates as a SaaS control plane: the Connector establishes an outbound HTTPS connection to the NetApp SaaS platform, so no inbound access to the Azure environment is needed, while all management of Cloud Volumes ONTAP itself takes place privately within the Azure virtual network.

Stopped means the VM is powered off but still allocated to the host, so compute charges may continue to apply.

Stopped (deallocated) releases the VM’s compute resources back to Azure, stopping all compute costs while preserving disks and configuration.

For cost savings, especially in labs, always ensure VMs are in the Stopped (deallocated) state.
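Azure reports these states as power-state codes such as `PowerState/running`, `PowerState/stopped`, and `PowerState/deallocated`, visible for example in the instance view returned by `az vm get-instance-view`. A minimal sketch that flags which VMs still incur compute charges could look like this; the VM names are hypothetical, and transitional states such as `PowerState/deallocating` are conservatively treated as billed.

```python
# Power states in which Azure no longer charges for compute.
NOT_BILLED = {"PowerState/deallocated"}


def still_billed_for_compute(power_state: str) -> bool:
    """True unless the VM is fully deallocated; 'PowerState/stopped' is still billed."""
    return power_state not in NOT_BILLED


def vms_still_billed(states: dict[str, str]) -> list[str]:
    """Sorted names of VMs whose power state still incurs compute charges."""
    return sorted(name for name, state in states.items() if still_billed_for_compute(state))


# Hypothetical VM names and states
states = {
    "cvo-node1": "PowerState/deallocated",
    "cvo-node2": "PowerState/stopped",
    "connector": "PowerState/deallocated",
}
print(vms_still_billed(states))  # ['cvo-node2']
```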

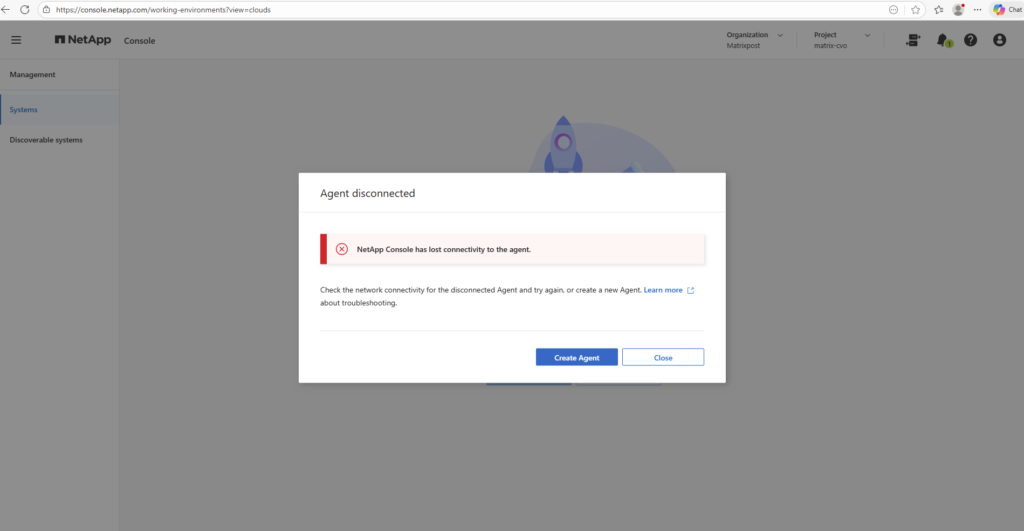

After shutting down all three VMs (both CVO nodes and the Connector), the NetApp console correctly reports that it has lost connectivity to the agent.

This is expected behavior, as the Connector VM is responsible for maintaining the outbound connection to the NetApp console, and once it is stopped, management access is temporarily unavailable.

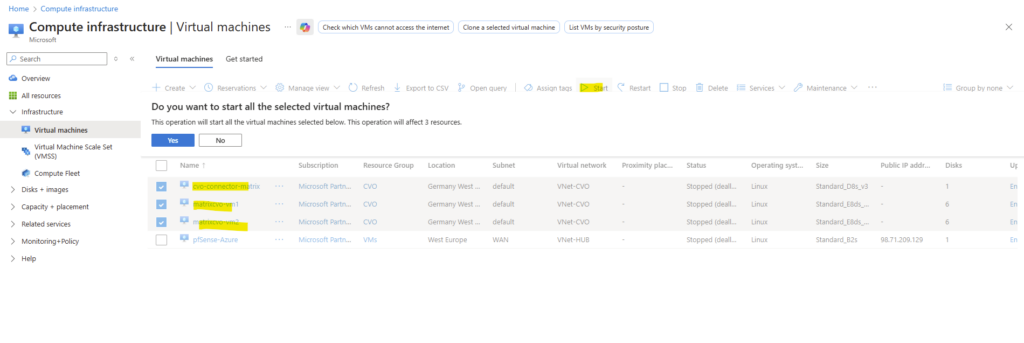

Power On the Azure HA pair (ONTAP Nodes) and Agent (Connector VM)

In a lab environment like mine, it’s common to shut down Cloud Volumes ONTAP and the Connector VM to avoid unnecessary costs.

After starting the CVO nodes and the Connector again, the system can be verified by connecting to the ONTAP CLI and checking the cluster, node, and HA status to ensure everything is up and running as expected.

First, I start all three Azure VMs again.

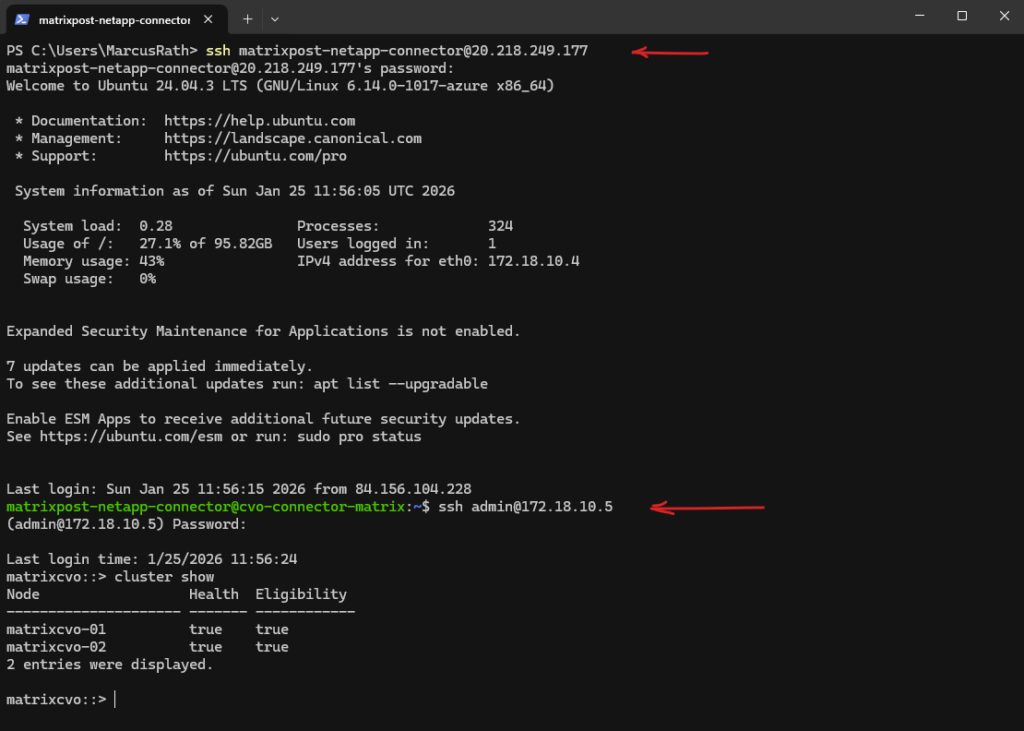

I will connect to the Agent (Connector VM) by using SSH and the new public IP address.

To avoid unnecessary costs in the lab environment, the public IP address of the Connector VM is released when the VM is shut down.

When the Connector is started again and SSH access is required, a new public IP is assigned and must be determined before connecting to the VM.

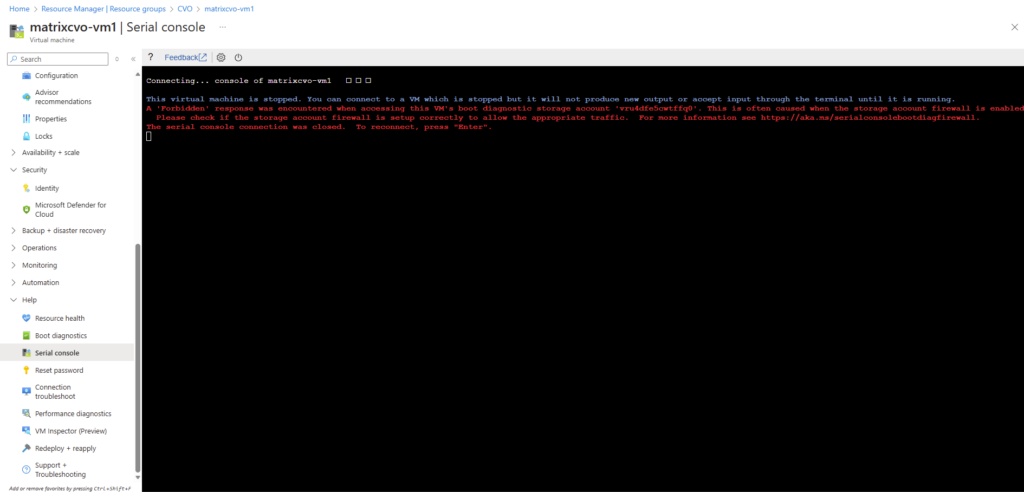

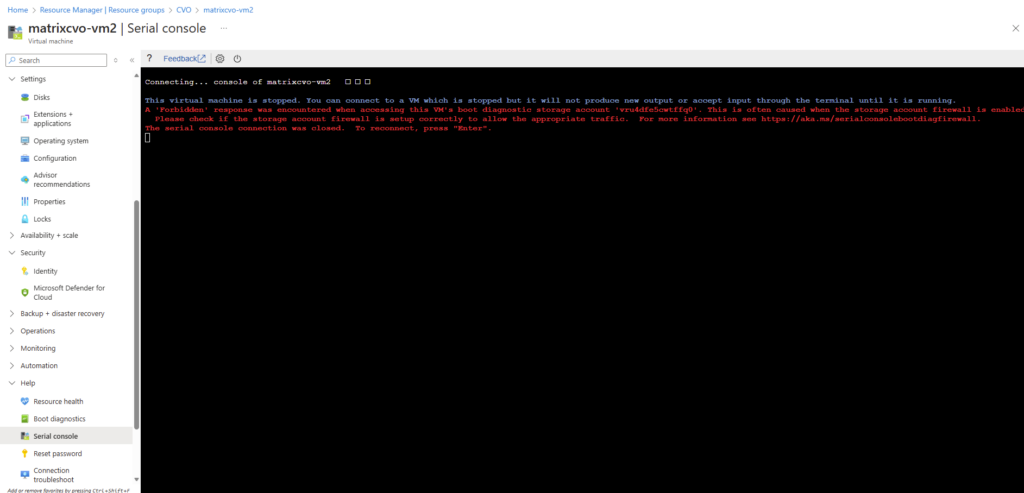

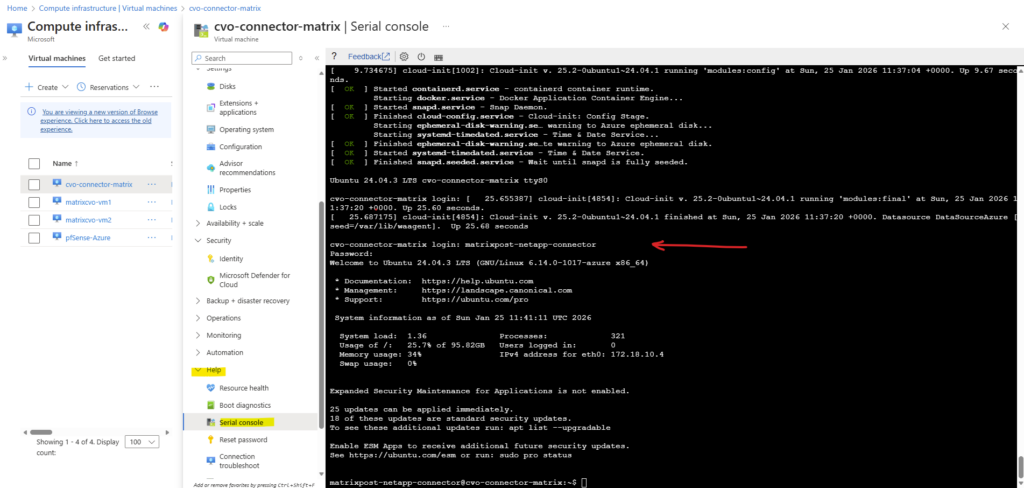

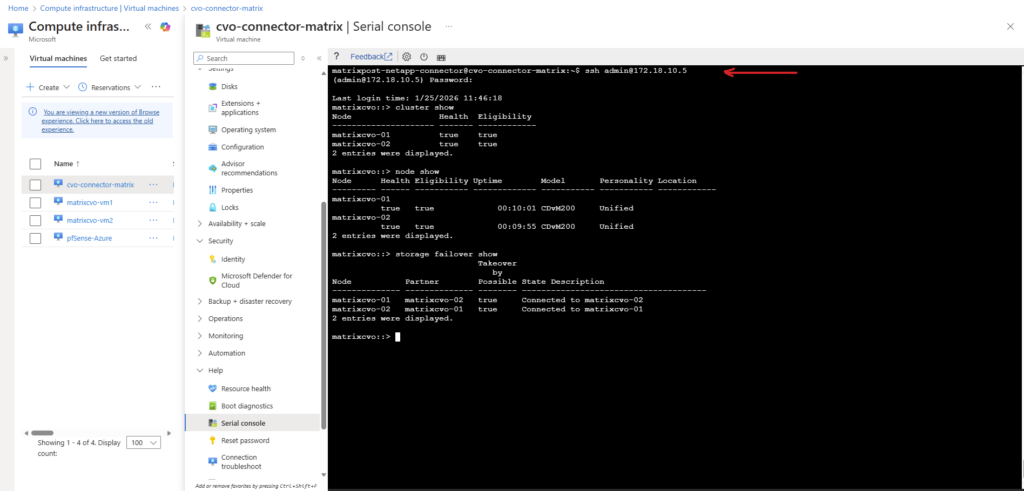

To connect to the Agent (Connector VM), we can use either the serial console in the Azure portal (no public IP needed) or SSH from a remote machine using the Agent VM's public IP.

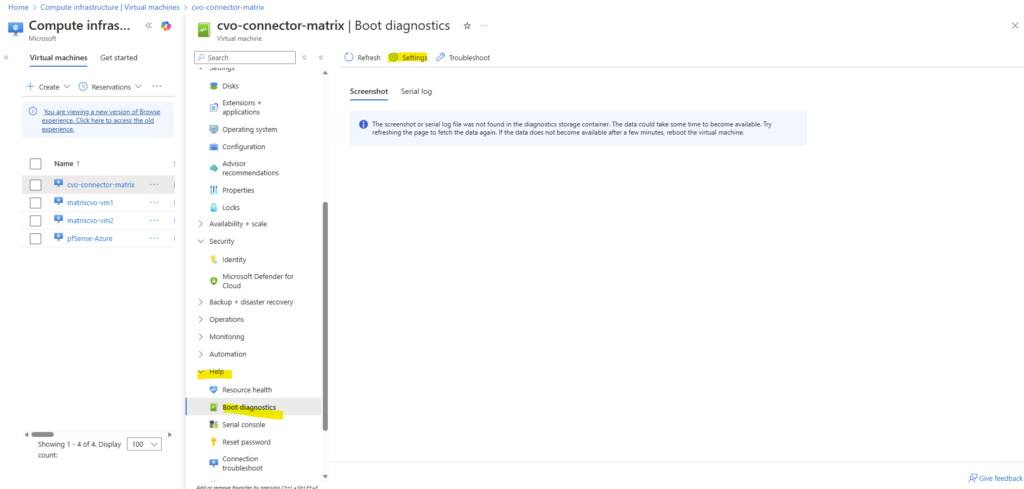

To use the serial console, we first need to enable boot diagnostics for the Agent VM.

We can then log in to the Agent through the serial console.

Finally, we connect from there to the ONTAP cluster via SSH using the cluster management IP.

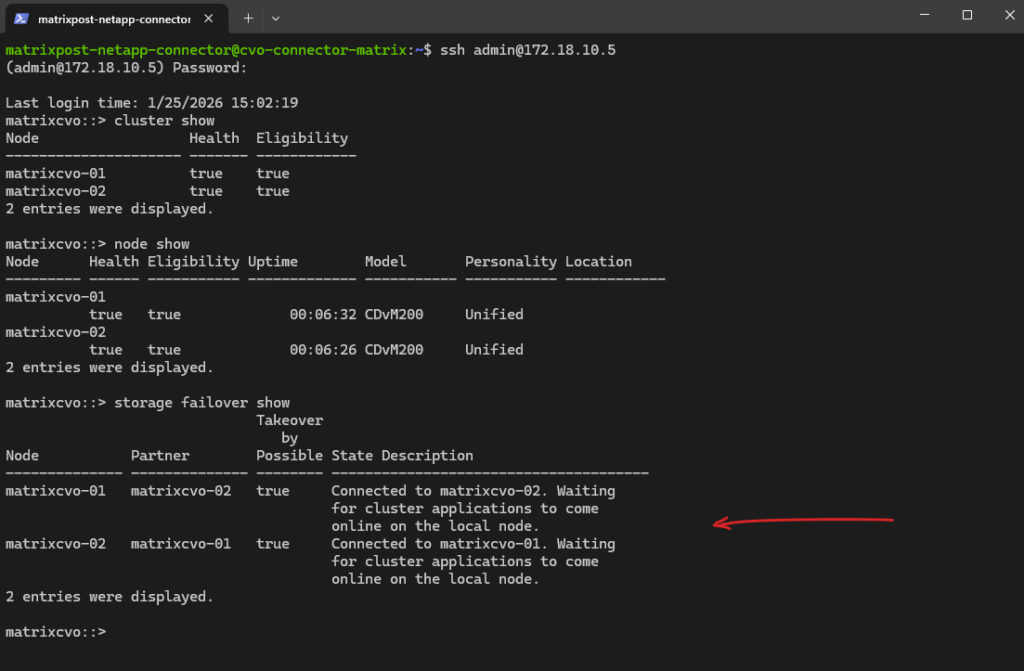

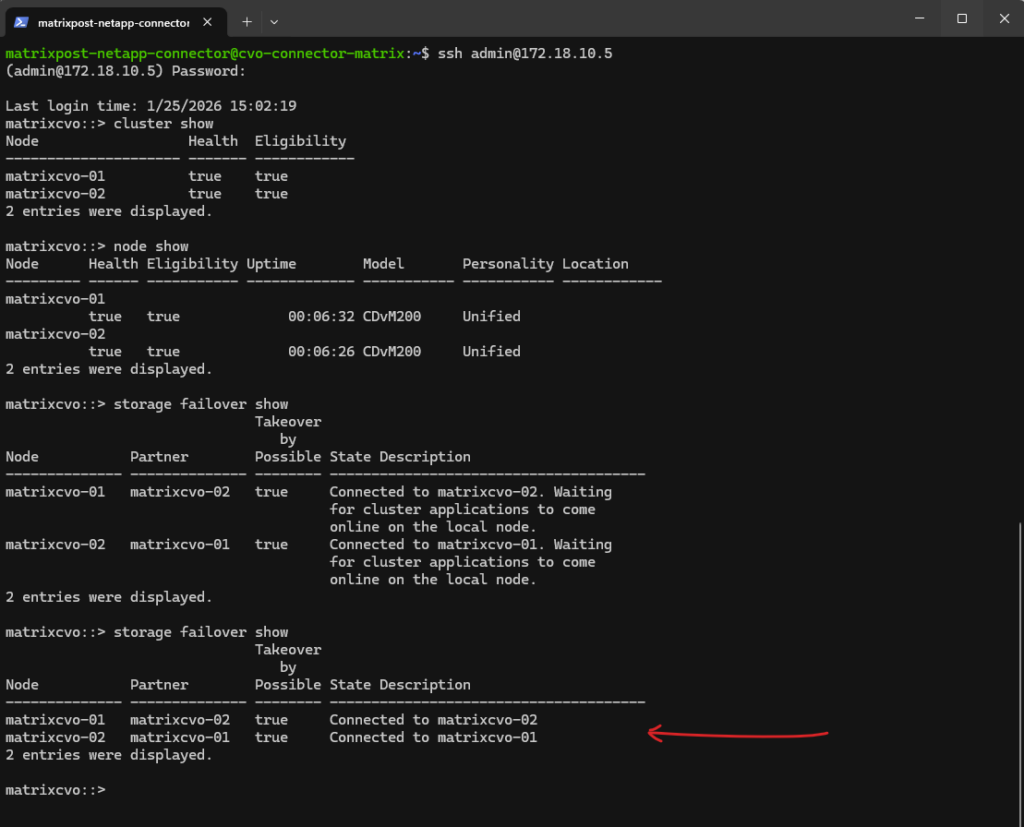

In ONTAP we can run the following commands to ensure everything is up and running as expected.

After starting the Cloud Volumes ONTAP nodes, the `cluster show` and `node show` commands confirm that both nodes are online and healthy. The `storage failover show` output indicates that the HA relationship is established and that both nodes are waiting for all cluster services to fully come online, which is expected shortly after startup.

matrixcvo::> cluster show

matrixcvo::> node show

matrixcvo::> storage failover show

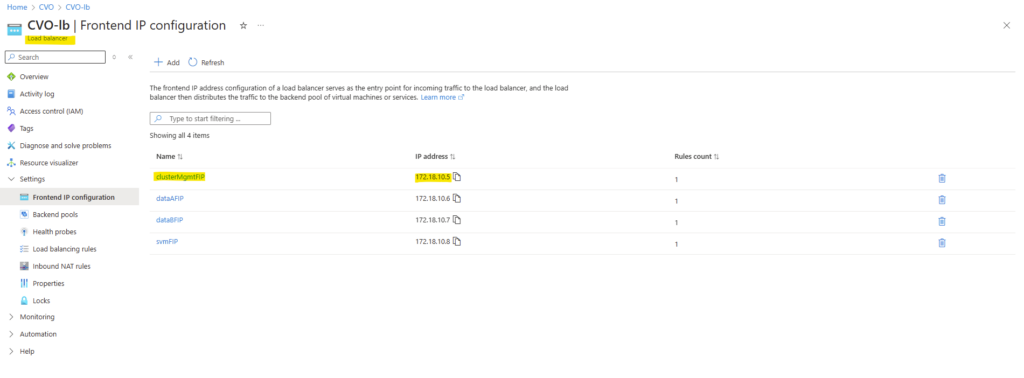

The cluster management IP can be obtained from the load balancer created in Azure, as shown below.

The Cloud Volumes ONTAP deployment automatically creates an internal Azure Load Balancer for the HA pair, which is used to provide a stable access point for cluster management and inter-node communication.

The frontend IP configuration contains the clusterMgmtFIP, which represents the floating management IP of the ONTAP cluster and transparently moves between nodes during failover.

The floating IP on the Azure Load Balancer ensures that the active ONTAP node can retain the same frontend IP address during failover, enabling seamless connectivity without client-side reconfiguration.

This abstraction ensures continuous management access even when a node becomes unavailable.
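If you prefer the CLI over the portal, `az network lb frontend-ip list --resource-group <rg> --lb-name <lb> -o json` returns the load balancer's frontend IP configurations, each with a `name` and a `privateIPAddress`. The sketch below picks out the `clusterMgmtFIP` entry from that JSON; the other frontend name and all IP addresses in the example are hypothetical.

```python
import json
from typing import Optional


def cluster_mgmt_ip(frontend_ips: list[dict], frontend_name: str = "clusterMgmtFIP") -> Optional[str]:
    """Return the private IP of the named frontend IP configuration, or None if absent."""
    for fip in frontend_ips:
        if fip.get("name") == frontend_name:
            return fip.get("privateIPAddress")
    return None


# Hypothetical, shortened excerpt of `az network lb frontend-ip list` output
sample = json.loads("""[
  {"name": "dataFIP1", "privateIPAddress": "10.0.1.10"},
  {"name": "clusterMgmtFIP", "privateIPAddress": "10.0.1.5"}
]""")
print(cluster_mgmt_ip(sample))  # 10.0.1.5
```

The returned address is the one to use for `ssh admin@<ip>` from the Agent (Connector VM).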

Alternatively, as mentioned, you can connect remotely via SSH, first to the Agent (Connector VM) and from there to the ONTAP cluster.

After the virtual machines are powered on, the Cloud Volumes ONTAP HA cluster also shows as healthy again in the NetApp Console. This can take a few minutes.

If the following message appears when running the command below shortly after starting the node VMs, cluster initialization has not yet finished.

matrixcvo::> storage failover show

Connected to matrixcvo-02. Waiting for cluster applications to come online on the local node.

The “waiting for cluster applications to come online” message indicates that both nodes are up and connected, but ONTAP is still completing the startup of internal cluster services. This is normal shortly after a reboot and typically resolves automatically once all services are fully initialized.

Once initialization is complete, the status changes to “Connected”, indicating that both nodes are fully operational and the HA configuration is healthy.
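When scripting the post-start check, the two state descriptions quoted above can be told apart with simple string matching. This minimal sketch assumes you have already captured each node's state description text from `storage failover show`; it keys on the message wording shown in this post, which may differ between ONTAP versions.

```python
WAITING_MARKER = "waiting for cluster applications to come online"


def ha_state(description: str) -> str:
    """Classify a storage failover state description (case-insensitive)."""
    text = description.strip().lower()
    if WAITING_MARKER in text:
        return "initializing"    # nodes connected, cluster services still starting
    if text.startswith("connected to"):
        return "healthy"         # partner connected, no pending startup work
    return "check manually"      # anything else deserves a closer look


print(ha_state("Connected to matrixcvo-02. Waiting for cluster applications to come online on the local node."))  # initializing
print(ha_state("Connected to matrixcvo-02"))  # healthy
```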

Troubleshooting

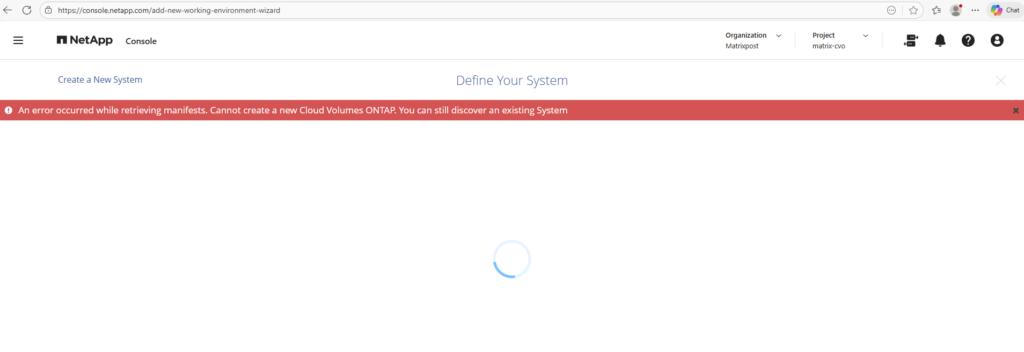

An error occurred while retrieving manifests. Cannot create a new Cloud Volumes ONTAP. You can still discover an existing System

For some deployments, I ran into the following error after clicking Add new under Cloud Volumes ONTAP HA.

An error occurred while retrieving manifests. Cannot create a new Cloud Volumes ONTAP. You can still discover an existing System

This error indicates that the Connector could not access the required resources.

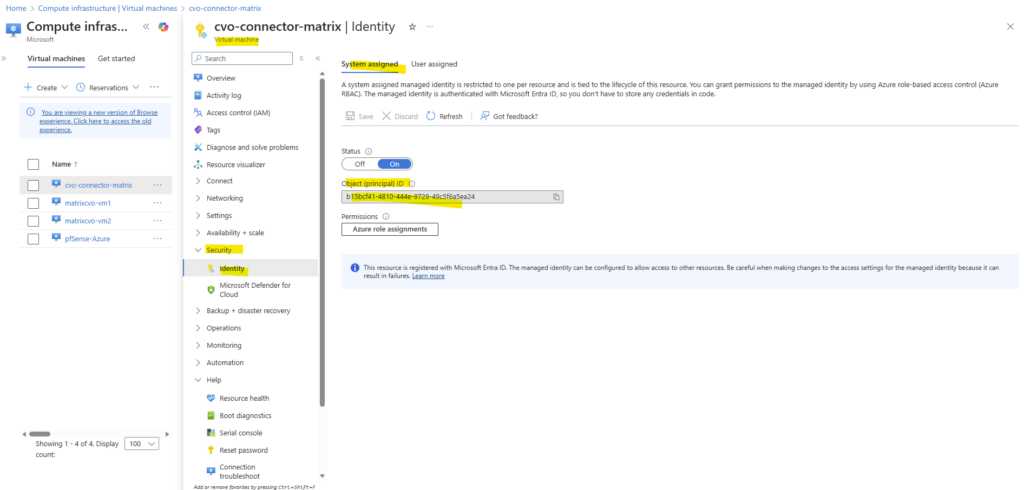

The root cause was that the Connector VM’s managed identity was no longer associated with the custom “Console Operator” role created via JSON in Part 1.

Reassigning the role to the managed identity immediately resolved the issue and allowed the deployment to proceed successfully.

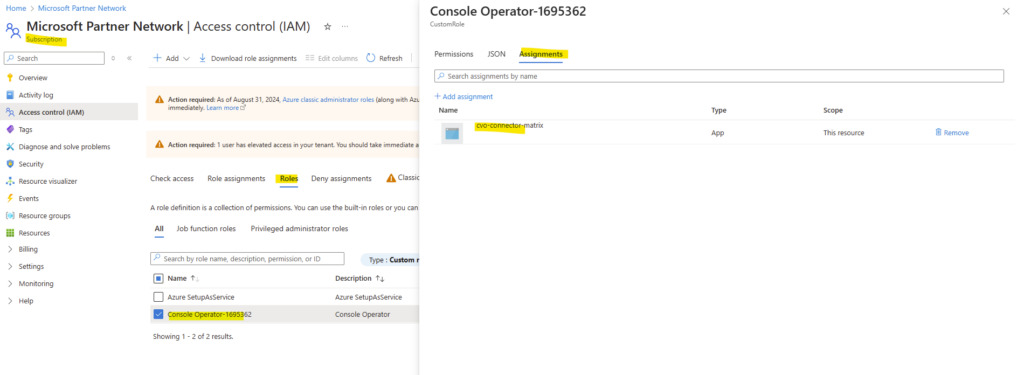

In the Azure portal, go to Subscription > Access Control (IAM) > Role assignments. Find any entries labeled “Identity not found” and remove them.

Then verify that the Connector VM's managed identity is associated with the custom role.

Go to your Connector VM > Identity and check its identity.

Next, go to Subscription > Access Control (IAM) > Roles, filter Type by Custom role, select the custom role you created via JSON in Part 1, and check whether the Connector VM's identity is assigned to it, as shown below.

If not, click + Add assignment and add it.
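The same check can also be scripted against the JSON output of `az role assignment list --all -o json`. The sketch below looks for the two things the portal steps above check: whether the Connector's managed identity (its `principalId`, shown under Connector VM > Identity) holds the custom role, and which assignments look orphaned. It assumes orphaned assignments show an empty `principalName` in the CLI output (verify against your own output), uses hypothetical IDs, and takes `Console Operator` as the role name from Part 1.

```python
def connector_has_role(assignments: list[dict], principal_id: str,
                       role_name: str = "Console Operator") -> bool:
    """True if the given principal holds the named role in the assignment list."""
    return any(
        a.get("principalId") == principal_id and a.get("roleDefinitionName") == role_name
        for a in assignments
    )


def orphaned_assignments(assignments: list[dict]) -> list[str]:
    """IDs of assignments whose principal could not be resolved (empty principalName)."""
    return [a["id"] for a in assignments if not a.get("principalName")]


# Hypothetical, heavily shortened excerpt of `az role assignment list --all -o json`
assignments = [
    {"id": "ra-1", "principalId": "1111", "principalName": "", "roleDefinitionName": "Console Operator"},
    {"id": "ra-2", "principalId": "2222", "principalName": "connector-identity", "roleDefinitionName": "Console Operator"},
]
print(connector_has_role(assignments, principal_id="2222"))  # True
print(orphaned_assignments(assignments))                      # ['ra-1']
```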

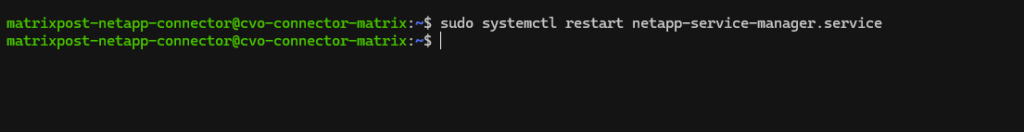

Even after fixing the role assignment in Azure, the Connector may still “remember” the old failure. To force it to re-scan its permissions, run the following command on the Connector VM.

$ sudo systemctl restart netapp-service-manager.service

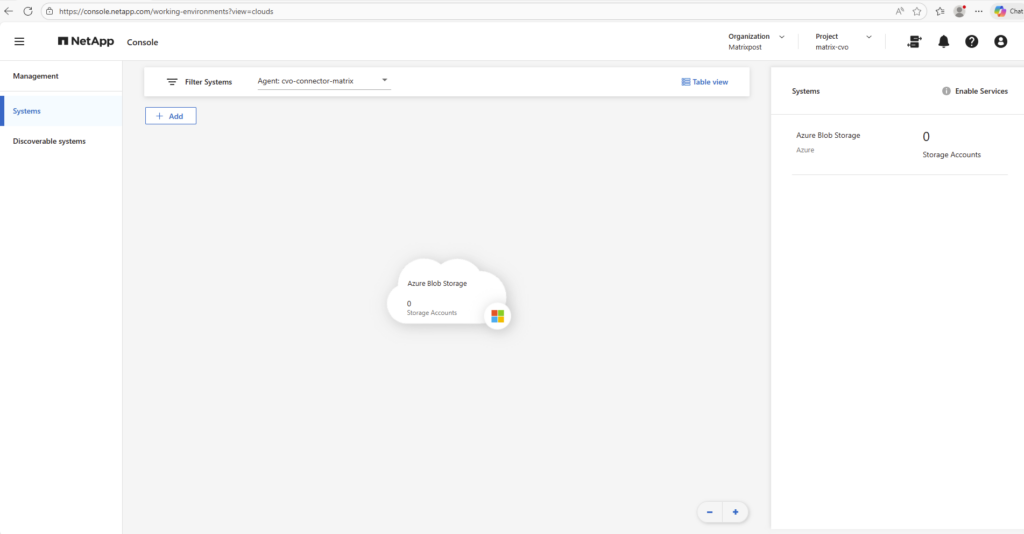

Afterwards, the Connector VM is recognized again under Management > Systems.

With the Cloud Volumes ONTAP HA system now successfully deployed in Azure, the core infrastructure and ONTAP environment are in place.

At this stage, no storage objects or data services such as NFS or CIFS/SMB have been configured yet.

These steps, including SVM creation and volume provisioning, will be covered in Part 3 (coming soon).

Links

Plan your Cloud Volumes ONTAP configuration in Azure
https://docs.netapp.com/us-en/storage-management-cloud-volumes-ontap/task-planning-your-config-azure.html

Launch Cloud Volumes ONTAP in Azure
https://docs.netapp.com/us-en/storage-management-cloud-volumes-ontap/task-deploying-otc-azure.html

Launch a Cloud Volumes ONTAP HA pair in Azure
https://docs.netapp.com/us-en/storage-management-cloud-volumes-ontap/task-deploying-otc-azure.html#launch-a-cloud-volumes-ontap-ha-pair-in-azure

Supported configurations for Cloud Volumes ONTAP in Azure
https://docs.netapp.com/us-en/cloud-volumes-ontap-relnotes/reference-configs-azure.html

Licensing for Cloud Volumes ONTAP
https://docs.netapp.com/us-en/storage-management-cloud-volumes-ontap/concept-licensing.html

Azure region support
https://bluexp.netapp.com/cloud-volumes-global-regions

Learn about NetApp Console agents
https://docs.netapp.com/us-en/console-setup-admin/concept-agents.html
