Installing SAP Data Intelligence on Azure Kubernetes Service – Part 2

In Part 2 we first install and configure the required tools, including the Software Lifecycle Container Bridge 1.0, on the Jumphost so that we can deploy SAP Data Intelligence to our Kubernetes cluster. We then use the SAP Maintenance Planner to set up the container-based stack on Azure Kubernetes Service.

We will first install the Azure CLI

Install the Azure CLI on Linux

https://docs.microsoft.com/en-us/cli/azure/install-azure-cli-linux?pivots=apt

Step-by-step installation instructions

https://docs.microsoft.com/en-us/cli/azure/install-azure-cli-linux?pivots=apt#option-2-step-by-step-installation-instructions

1. Get packages needed for the install process:

sudo apt-get update

sudo apt-get install ca-certificates curl apt-transport-https lsb-release gnupg

2. Download and install the Microsoft signing key:

curl -sL https://packages.microsoft.com/keys/microsoft.asc |

gpg --dearmor |

sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null

3. Add the Azure CLI software repository:

AZ_REPO=$(lsb_release -cs)

echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ $AZ_REPO main" |

sudo tee /etc/apt/sources.list.d/azure-cli.list

4. Update repository information and install the azure-cli package:

sudo apt-get update

sudo apt-get install azure-cli

Now we can use the Azure CLI to log in to our Azure tenant and set the active subscription.

az login --tenant <your tenant id>

az account set --subscription <your subscription id>
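To confirm that the CLI really points at the intended subscription, a check along the following lines can help. This is only a minimal sketch: the helper name `active_subscription_is` is hypothetical and not part of the Azure CLI; it relies on `az account show` with a `--query` expression.

```shell
# Succeeds when the active Azure subscription ID equals the expected one.
# active_subscription_is is a hypothetical helper, not an Azure CLI command.
active_subscription_is() {
  expected="$1"
  # Ask the CLI for the ID of the currently active subscription.
  current=$(az account show --query id --output tsv) || return 1
  [ "$current" = "$expected" ]
}

# Usage (replace the placeholder with your own subscription ID):
#   active_subscription_is "<your subscription id>" && echo "subscription OK"
```

If the check fails, running az account set again with the correct ID should fix it.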

Next we will install kubectl.

You must use a kubectl version that is within one minor version difference of your cluster. For example, a v1.23 client can communicate with v1.22, v1.23, and v1.24 control planes. Using the latest compatible version of kubectl helps avoid unforeseen issues.

Source: https://kubernetes.io/docs/tasks/tools/install-kubectl-linux/

As my Kubernetes service is running in version 1.21.9, I will use v1.22.0 for kubectl to fulfil the one minor version difference mentioned above.

# curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.22.0/bin/linux/amd64/kubectl

# chmod +x ./kubectl

# mv ./kubectl /usr/bin/kubectl
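The version rule quoted above can be expressed as a small check. The following is a sketch under the assumption that both versions are given as plain strings such as v1.22.0; the function name `within_skew` is made up for this example.

```shell
# Succeeds when the kubectl (client) minor version is within one minor
# version of the cluster (server) minor version.
# Arguments are version strings such as "v1.22.0" or "1.21.9".
within_skew() {
  local client="${1#v}" server="${2#v}"
  local client_major="${client%%.*}" server_major="${server%%.*}"
  local client_rest="${client#*.}" server_rest="${server#*.}"
  local client_minor="${client_rest%%.*}" server_minor="${server_rest%%.*}"
  # Different major versions are never treated as compatible here.
  [ "$client_major" = "$server_major" ] || return 1
  local diff=$(( client_minor - server_minor ))
  # Strip a leading minus sign to get the absolute difference.
  [ "${diff#-}" -le 1 ]
}

if within_skew "v1.22.0" "v1.21.9"; then
  echo "kubectl v1.22.0 is compatible with a v1.21.9 cluster"
fi
```

With the versions used in this post the check passes, whereas a v1.24 client against the same cluster would be rejected.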

We also need to install Docker. It is available as a standard Ubuntu package, so running the apt command is enough; there is no need to update the repositories first.

# sudo apt install docker.io

Further we need to install the python YAML parser and emitter

# sudo apt install python-yaml

Another tool we need to install is Helm. We will install the latest release of Helm 3.

Install helm

https://docs.microsoft.com/en-us/azure/aks/quickstart-helm

https://helm.sh/docs/intro/install/

# curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

# chmod 700 get_helm.sh

# ./get_helm.sh

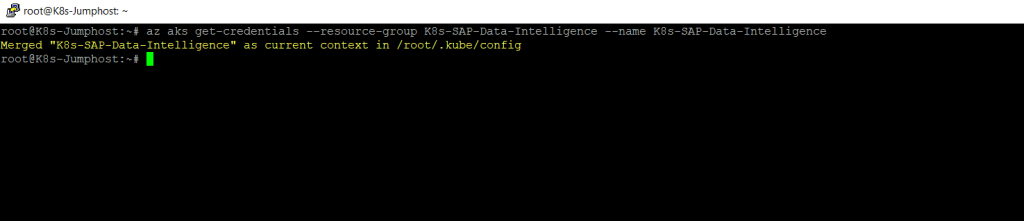
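Before moving on, it can be worth checking that all command-line tools installed so far are actually on the PATH. The snippet below is a minimal sketch; `missing_tools` is a hypothetical helper name, and it does not cover the Python YAML package.

```shell
# Print the name of every command from the argument list that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

missing=$(missing_tools az kubectl docker helm)
if [ -n "$missing" ]; then
  # Intentionally unquoted so that the names are printed on one line.
  echo "Not installed yet:" $missing
else
  echo "All jumphost tools found"
fi
```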

Now we need to connect to our AKS cluster. To do so, we can use the az aks get-credentials command.

az aks get-credentials --resource-group <name resource group> --name <name kubernetes service>

az aks get-credentials --resource-group K8s-SAP-Data-Intelligence --name K8s-SAP-Data-Intelligence

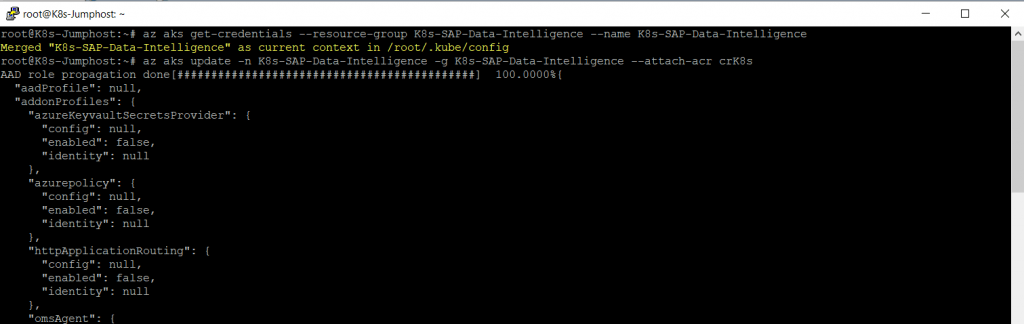
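Whether the downloaded credentials work can be verified with a quick look at the cluster nodes. This is a sketch only; `cluster_reachable` is a hypothetical helper name, and the block does nothing if kubectl is not installed.

```shell
# Succeeds when kubectl can list the nodes of the cluster in the current context.
cluster_reachable() {
  kubectl get nodes >/dev/null 2>&1
}

if command -v kubectl >/dev/null 2>&1; then
  echo "Current context: $(kubectl config current-context)"
  if cluster_reachable; then
    # Show the nodes together with their Kubernetes versions and IPs.
    kubectl get nodes --output wide
  else
    echo "Cluster not reachable; re-run az aks get-credentials" >&2
  fi
fi
```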

We also need to attach the Azure container registry to our Kubernetes Cluster:

az aks update -n <name kubernetes service> -g <name resource group> --attach-acr <name container registry>

az aks update -n K8s-SAP-Data-Intelligence -g K8s-SAP-Data-Intelligence --attach-acr crK8s
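To validate that the cluster can pull from the attached registry, the Azure CLI offers az aks check-acr. The sketch below assumes the resource names used in this post and a hypothetical wrapper function `check_acr_access`; it first looks up the registry's login server, because check-acr expects the full server name.

```shell
# Names taken from this walkthrough; adjust them to your environment.
CLUSTER="K8s-SAP-Data-Intelligence"
RESOURCE_GROUP="K8s-SAP-Data-Intelligence"
REGISTRY="crK8s"

# Validate that the AKS cluster can reach and pull from the attached registry.
# check_acr_access is a hypothetical wrapper, not an Azure CLI command.
check_acr_access() {
  # Resolve the login server (for example <registry>.azurecr.io).
  login_server=$(az acr show --name "$REGISTRY" --query loginServer --output tsv) || return 1
  az aks check-acr --name "$CLUSTER" --resource-group "$RESOURCE_GROUP" --acr "$login_server"
}

# Usage, after az login:
#   check_acr_access
```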

The next step is to install the SLC Bridge tool from SAP on our Jumphost.

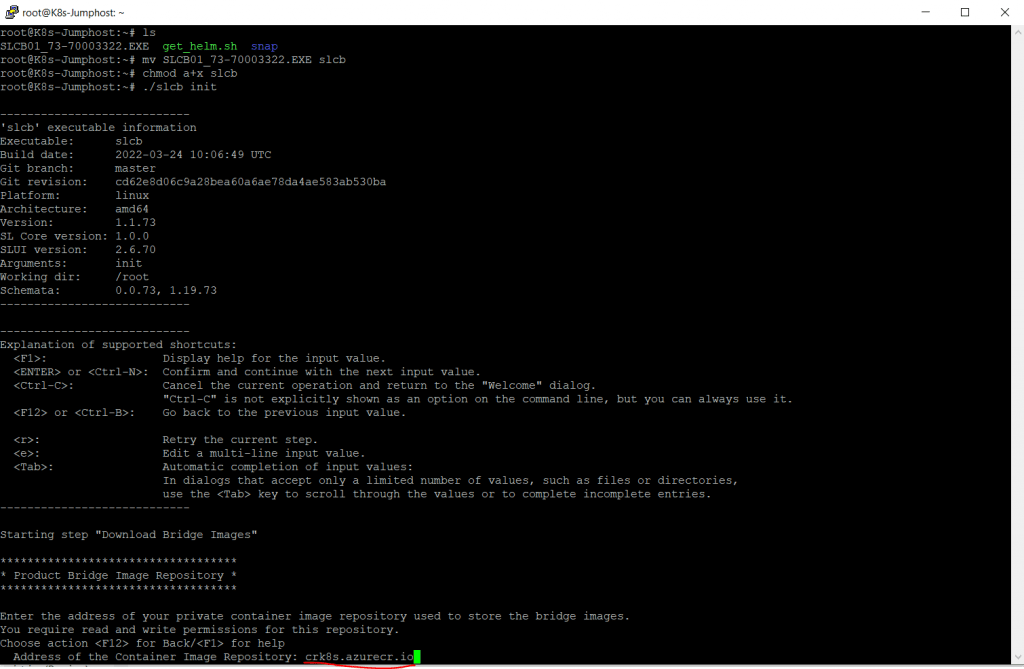

Installing SAP Data Intelligence using the SLC Bridge

Software Lifecycle Container Bridge 1.0 is a tool for installing, upgrading, and uninstalling container-based applications. In the following, we use the abbreviated term “SLC Bridge”.

As of SAP Data Intelligence 3.0 on Premise, the SLC Bridge has completely replaced the alternative command line tool install.sh. The install.sh tool remains only available for SAP Data Hub 2.7 and lower.

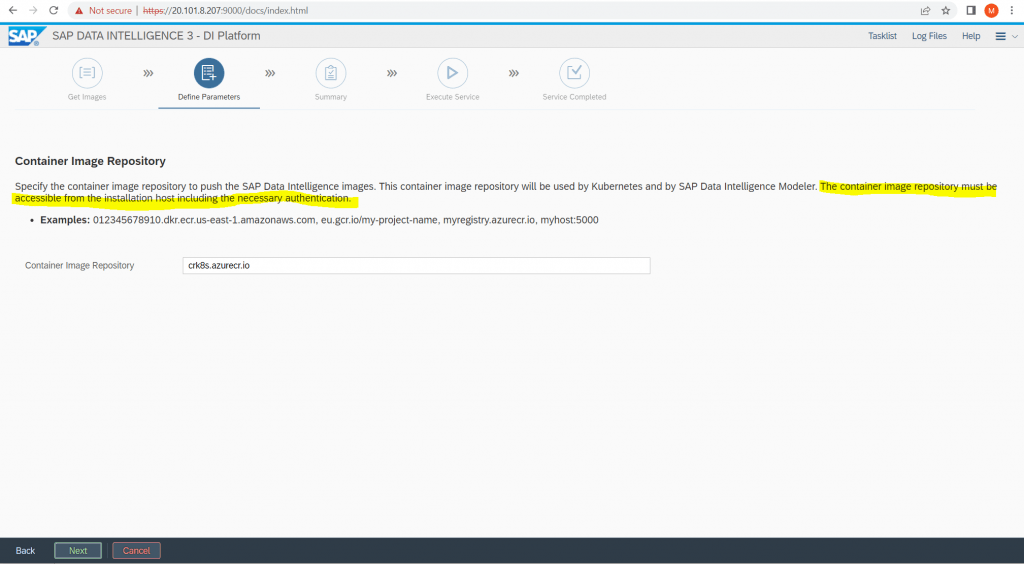

You run any deployment using the Software Lifecycle Container Bridge 1.0 tool with Maintenance Planner. The SLC Bridge runs as a Pod named “SLC Bridge Base” in your Kubernetes cluster. The SLC Bridge Base downloads the required images from the SAP Registry and deploys them to your private container registry (container image repository), from which they are retrieved by the SLC Bridge to perform an installation, upgrade, or uninstallation.

Unlike previous versions of SLC Bridge, which require an SAP Host Agent installed and configured on an installation host, the new SLC Bridge version 1.1.41 and higher, which is used by this release of SAP Data Intelligence, now runs as a Pod in your Kubernetes cluster. You deploy the SLC Bridge to your Kubernetes cluster by downloading the SLCB01_<Version>.[EXE|DMG] program from https://support.sap.com/ to your administrator’s workstation and running it from there. Once deployed, the SLC Bridge stays available in your Kubernetes cluster as a Service Pod, the so-called “Software Lifecycle Container Bridge Base” (“SLC Bridge Base” for short). You can always use this SLC Bridge Base Pod to perform lifecycle management tasks for the installed product, such as upgrade and uninstall.

Download SLCB

https://launchpad.support.sap.com/#/softwarecenter/search/SLCB01

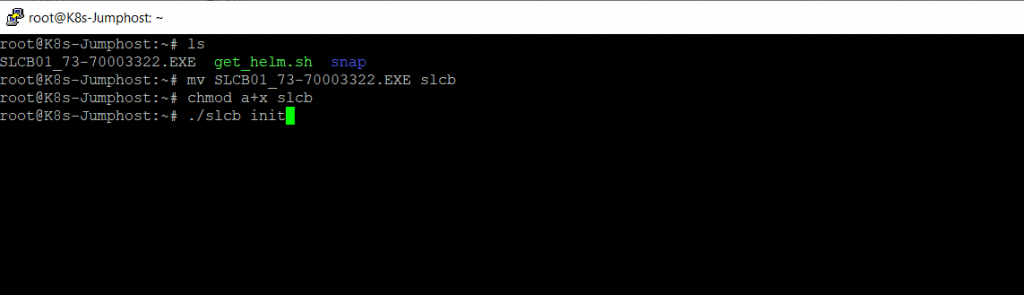

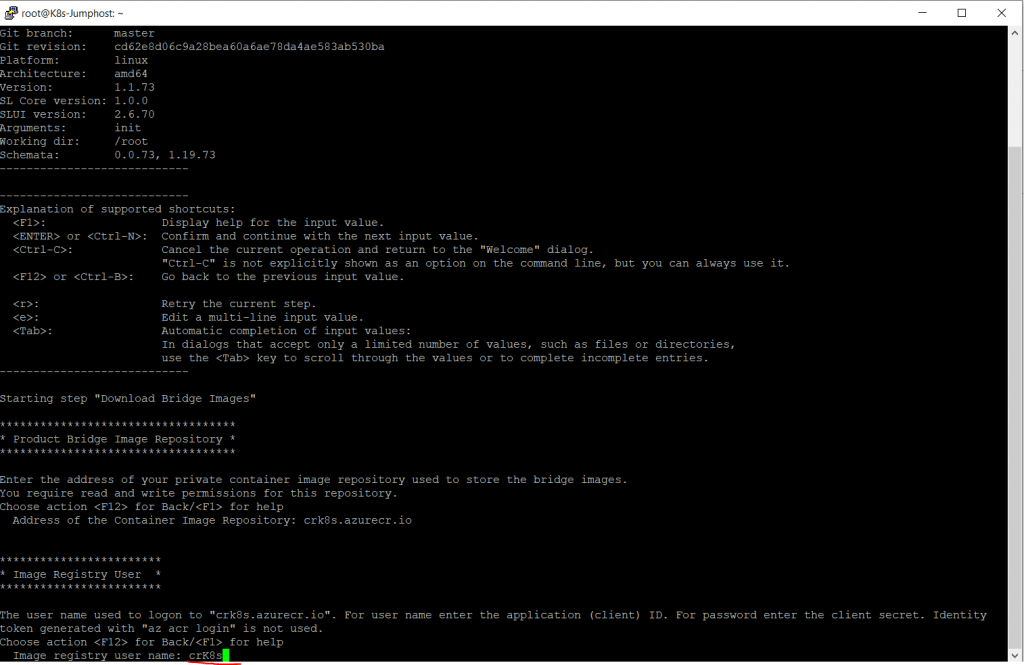

After uploading the SLCB to our Ubuntu VM (Jumphost), we will rename the binary, make it executable, and run it with the init command.

# mv SLCB01_73-70003322.EXE slcb

# chmod a+x slcb

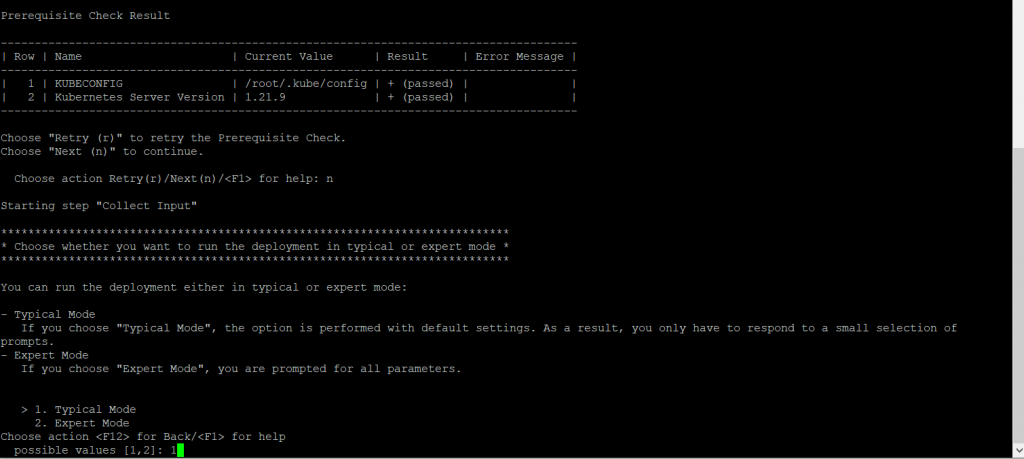

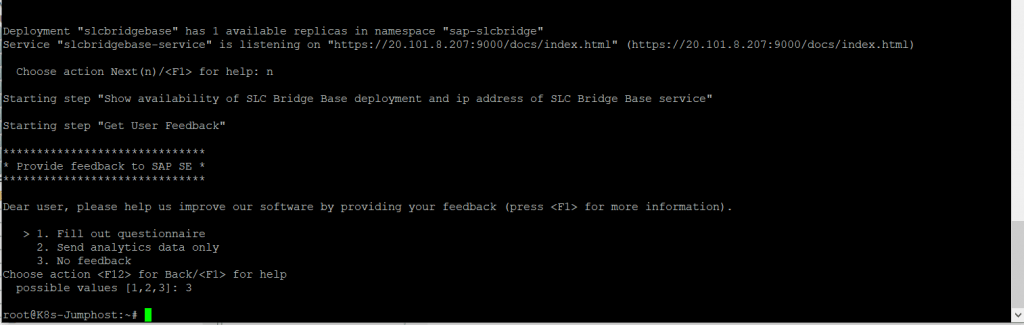

# ./slcb init

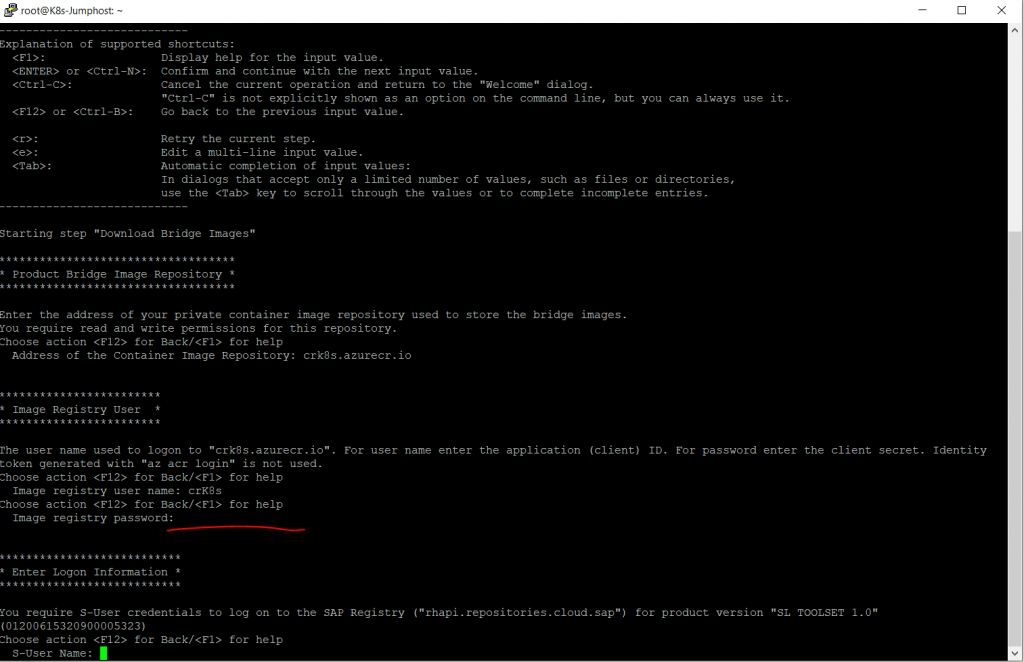

As we enabled the admin user for our container registry, we can use the registry name as the user name.

The password is listed under Access keys in our container registry.

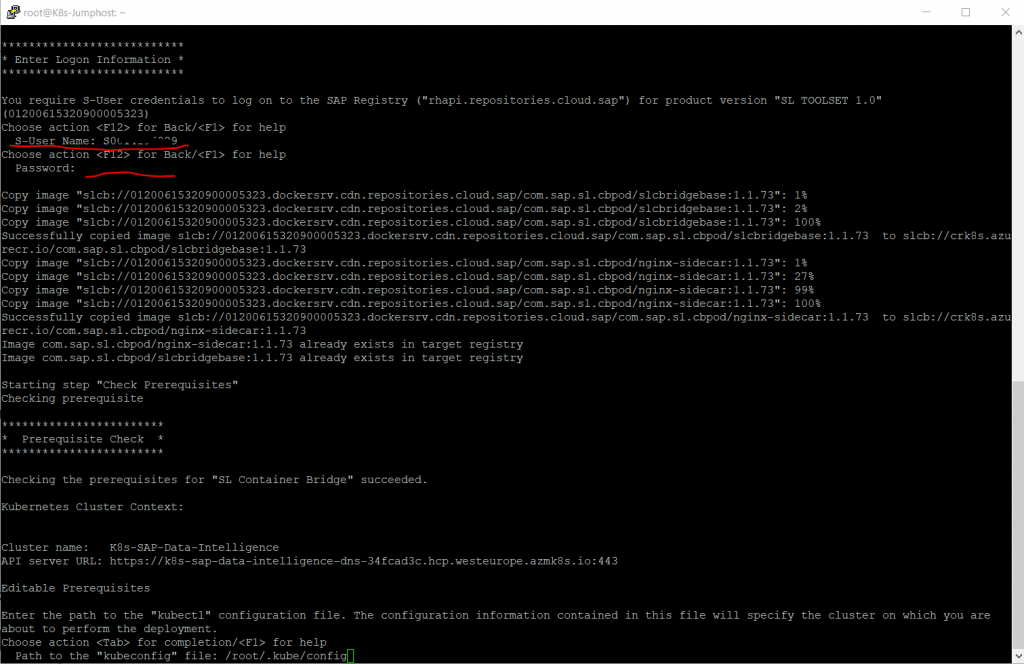

The next step is to enter our SAP credentials to access the SAP registry.

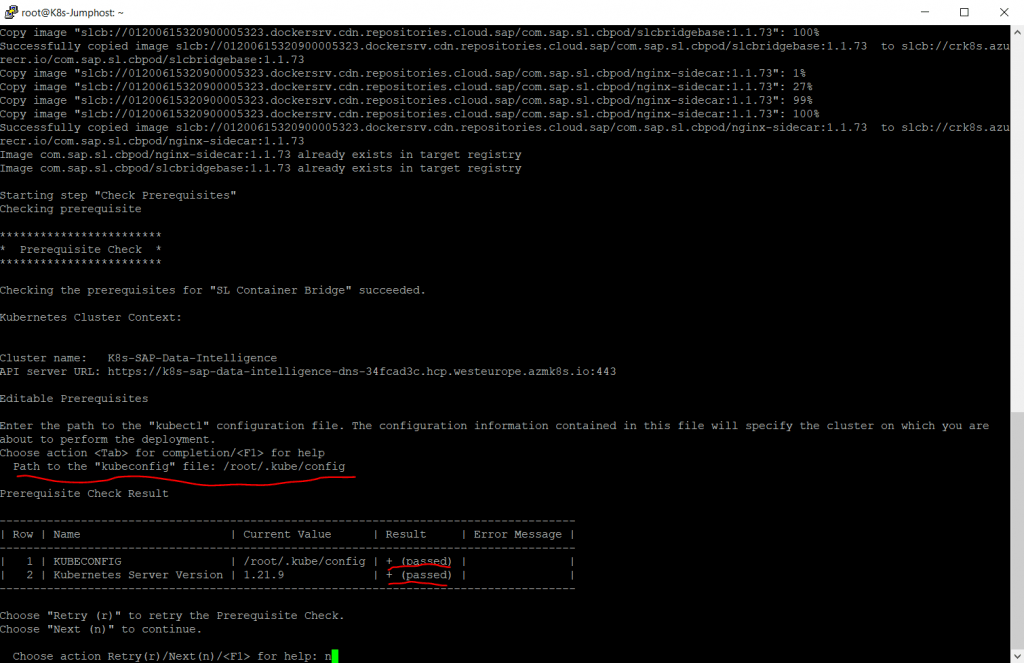

For the path to the kubeconfig file we will use the default, so press Enter.

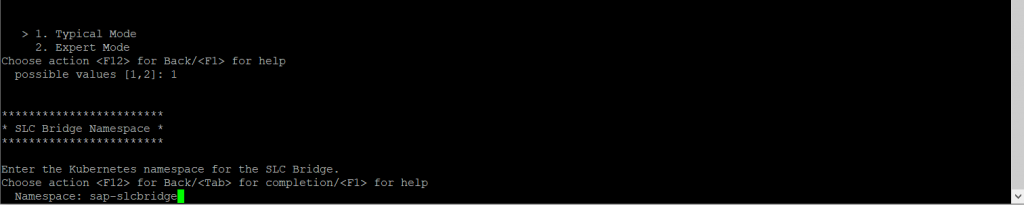

For the deployment mode we use 1. Typical Mode

For the SLC Bridge Namespace we will use the default name sap-slcbridge

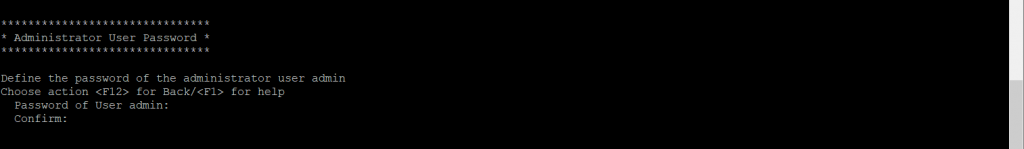

For the Administrator User we use the default name admin and also set a password.

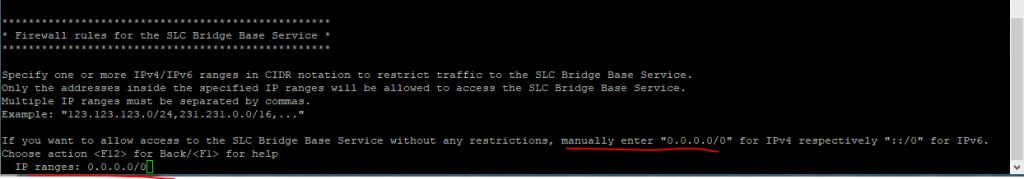

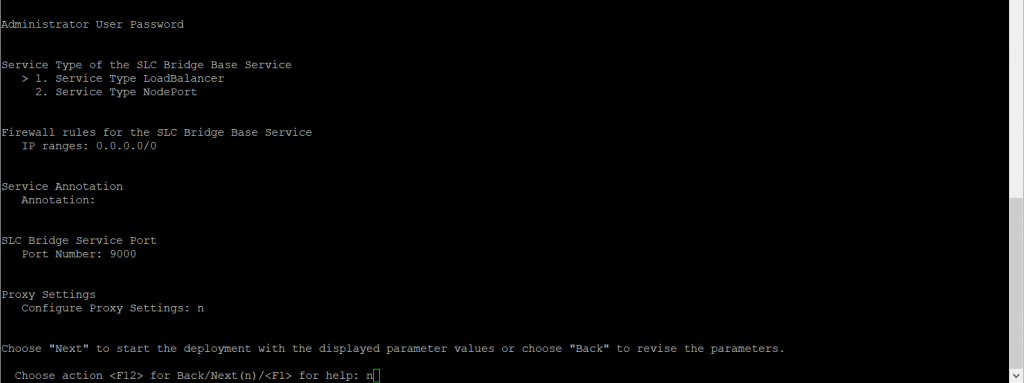

I will allow access to the SLC Bridge Base Service without any restrictions. Therefore I will enter the IP Range 0.0.0.0/0.

By default, the Service Annotation is blank, the SLC Bridge port is 9000, and the proxy settings are blank. To change them, you need to select Expert Mode as the deployment mode above.

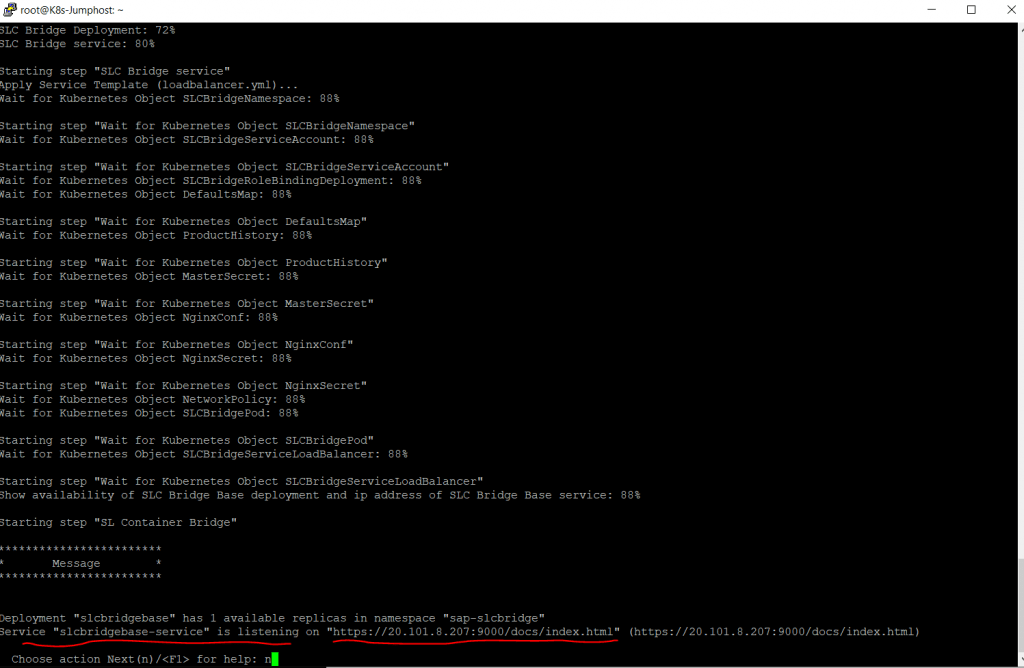

Finally we can see the SLCB URL to access it.

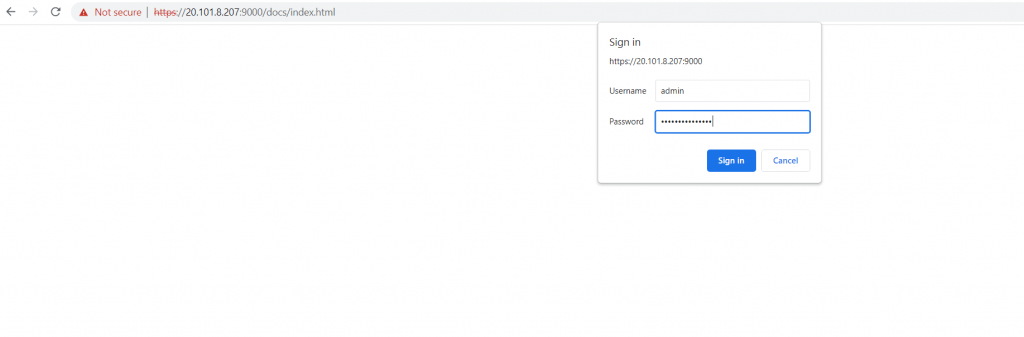

Here we need to login with the admin user and the password we set previously.

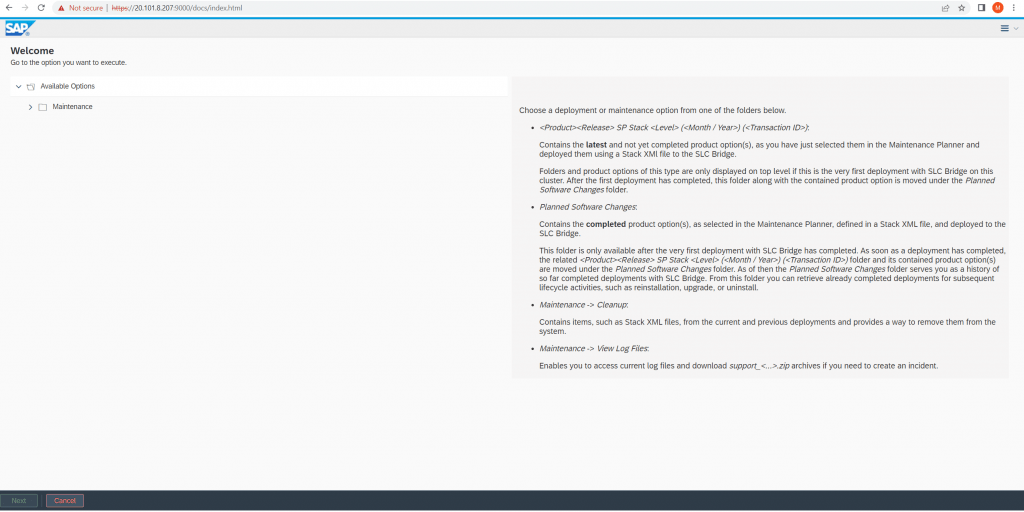

SLC Bridge Setup was successful.
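Should the SLCB URL be needed again later, the pods and services in the SLC Bridge namespace can be listed with kubectl. This is a sketch that assumes the default namespace sap-slcbridge chosen above; `show_slcb` is a hypothetical helper name.

```shell
# Namespace chosen during slcb init (the default in this walkthrough).
SLCB_NAMESPACE="sap-slcbridge"

# List the SLC Bridge pods and services, including any external IP and port.
show_slcb() {
  kubectl get pods,services --namespace "$SLCB_NAMESPACE" --output wide
}

# Usage:
#   show_slcb
```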

We perform the next steps in the SAP Maintenance Planner, as described in the next section.

SAP Maintenance Planner Setup

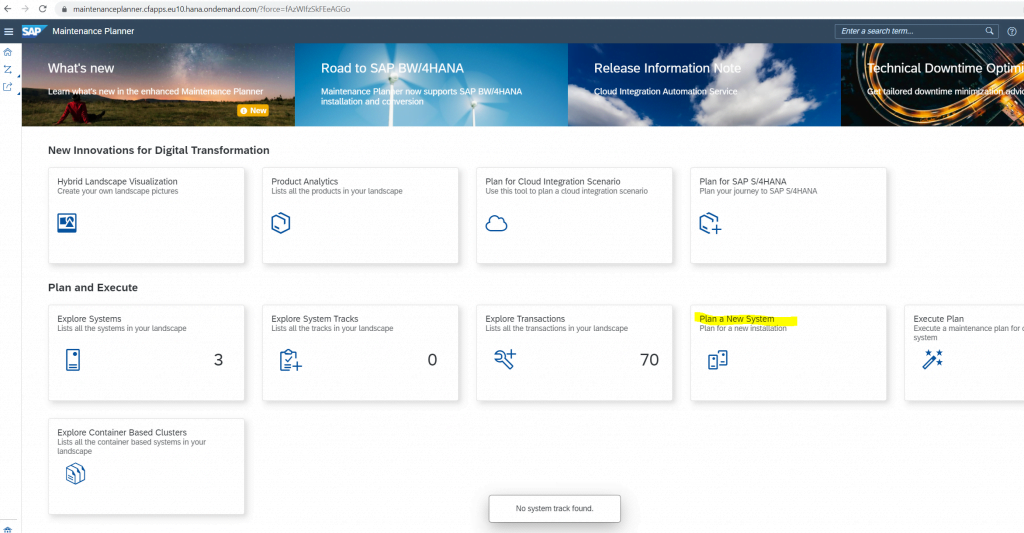

The SAP Maintenance Planner is used to deploy the selected SAP Data Intelligence container-based stack on Azure Kubernetes Service.

- Log on to SAP Maintenance Planner

- Select Plan a new system as we are planning to install a new SAP Data Intelligence system.

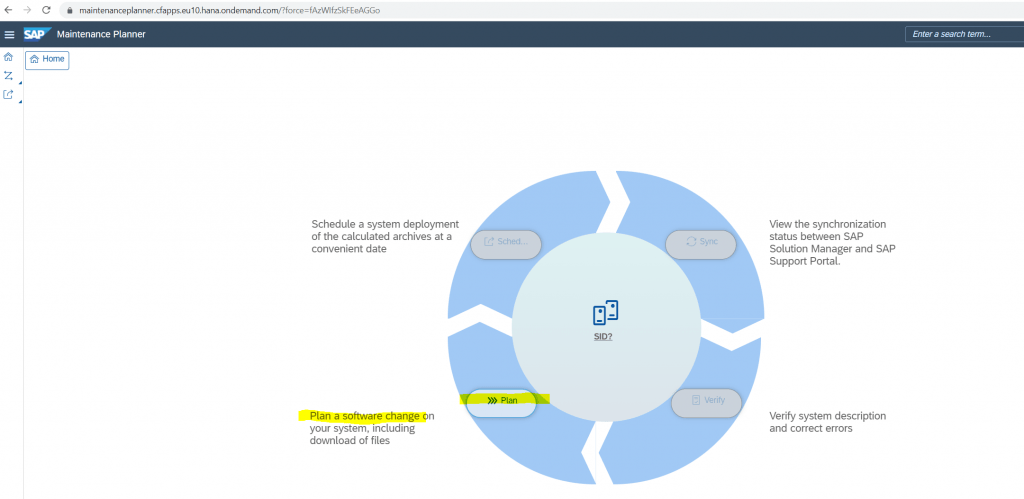

Select Plan

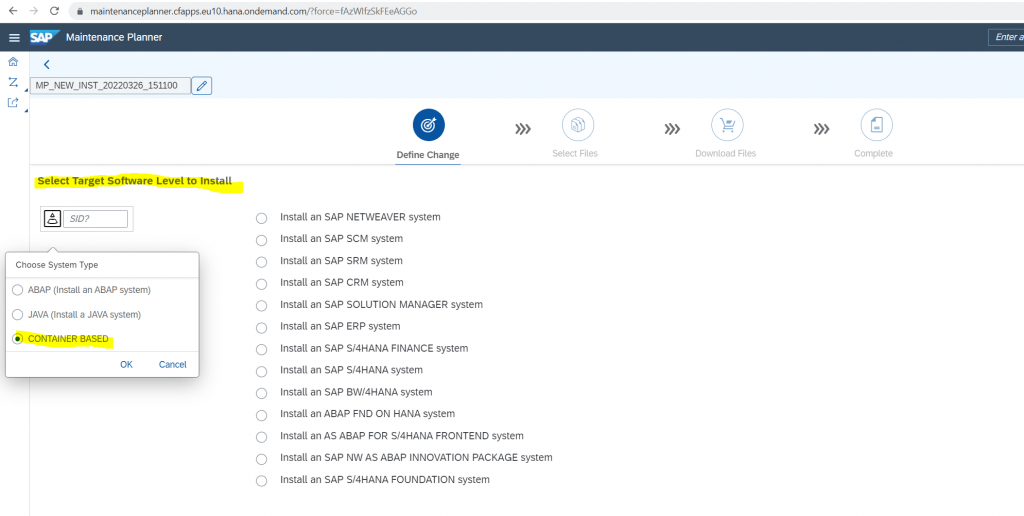

For the System Type, select Container Based.

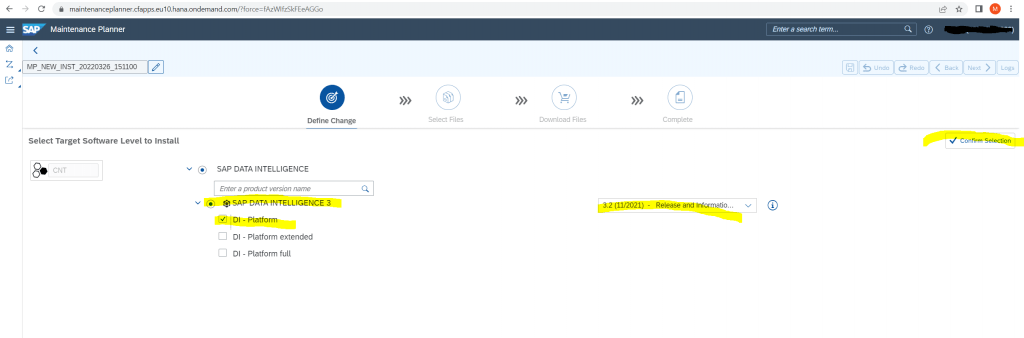

Select the SAP Data Intelligence option field and then SAP Data Intelligence 3. On the right side, select the latest release, which is 3.2 at the time of writing.

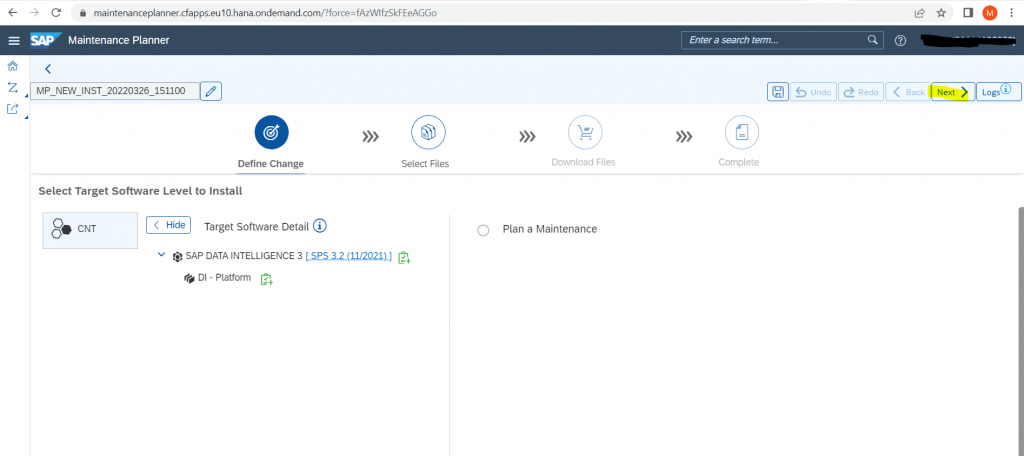

I will select the DI – Platform and then click on Confirm Selection on the right.

Click on Next

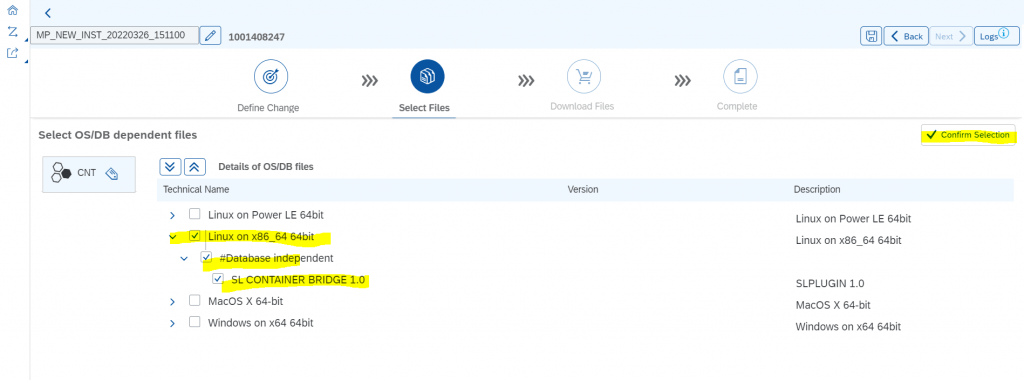

Select Linux on X86_64 64 bit and click on Confirm Selection.

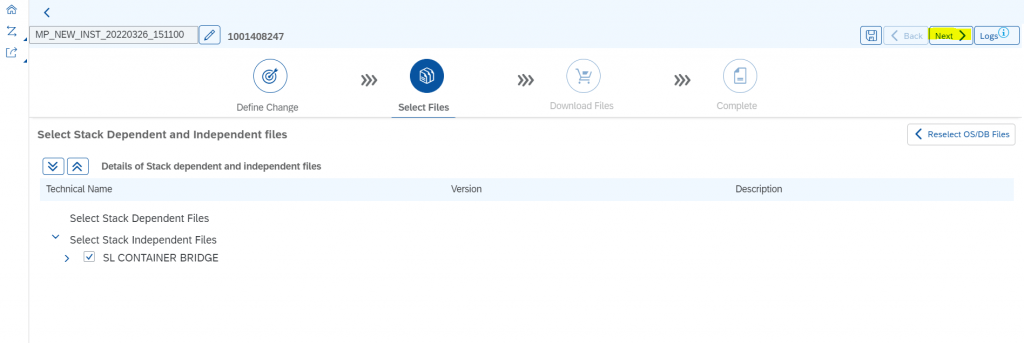

Click on Next.

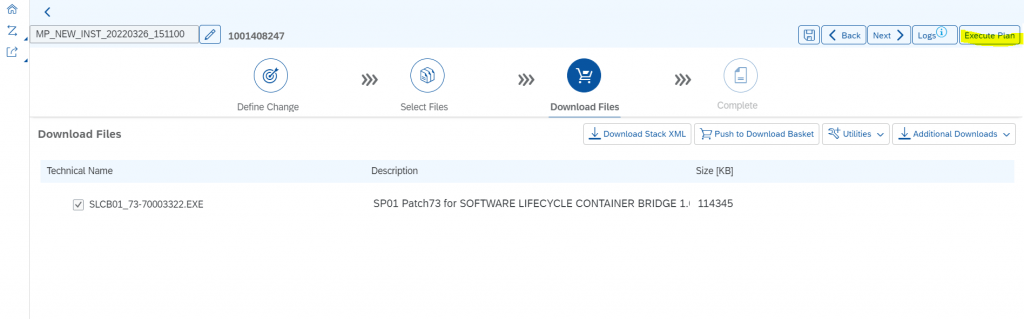

Click on Execute Plan.

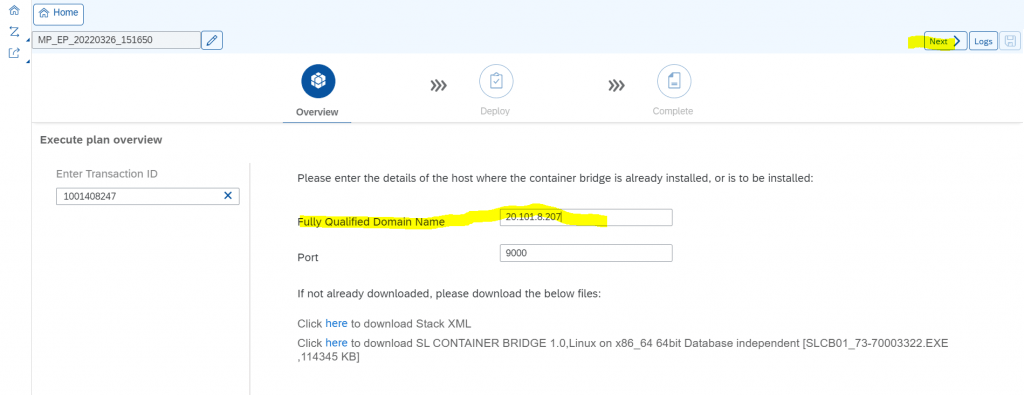

Enter the IP address shown earlier by the SLCB tool and click on Next.

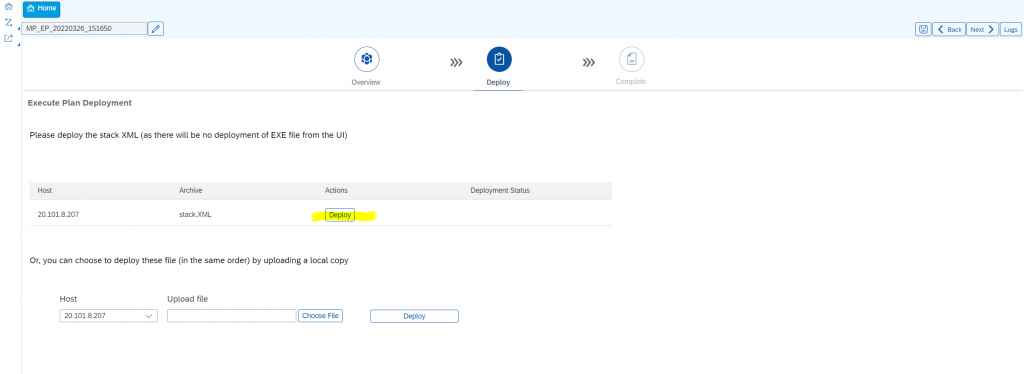

Click on Deploy.

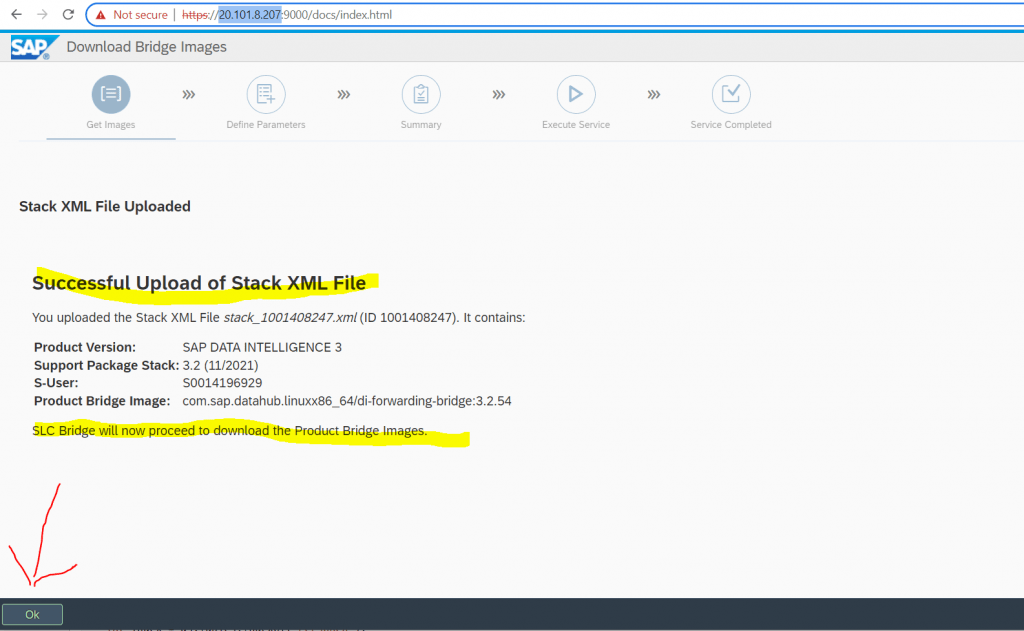

Back in the SLC Bridge (portal site) we can see that the SLC Bridge deployment XML file is deployed directly to the SLC Bridge that we set up from the Jumphost.

Click on OK at the bottom of the site.

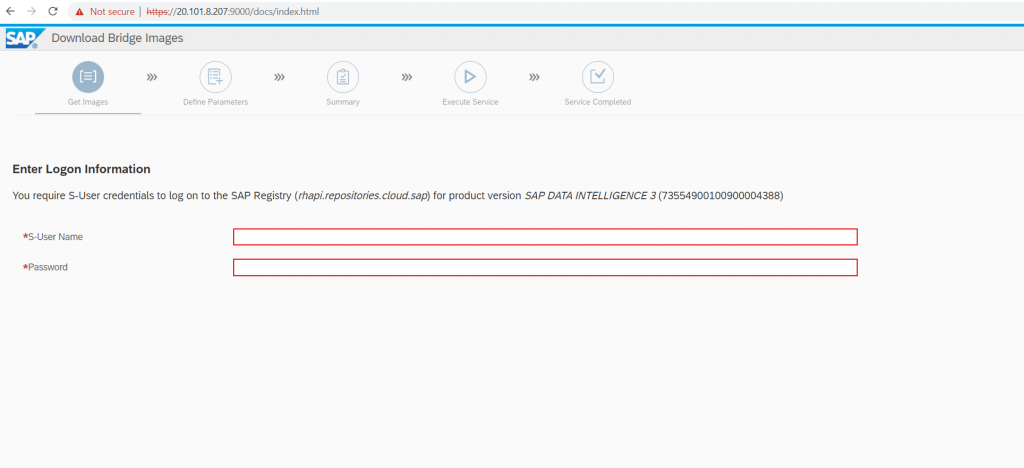

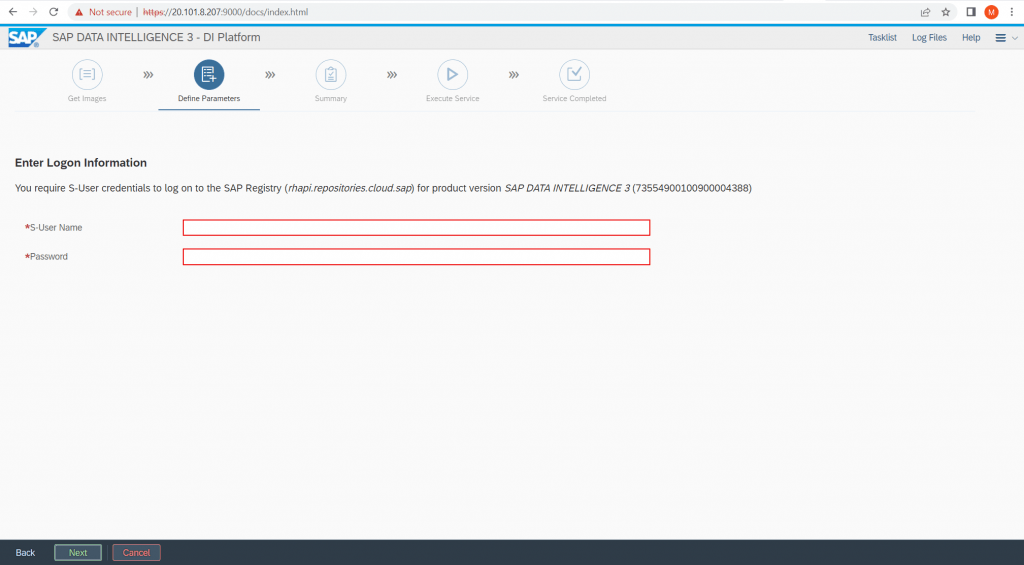

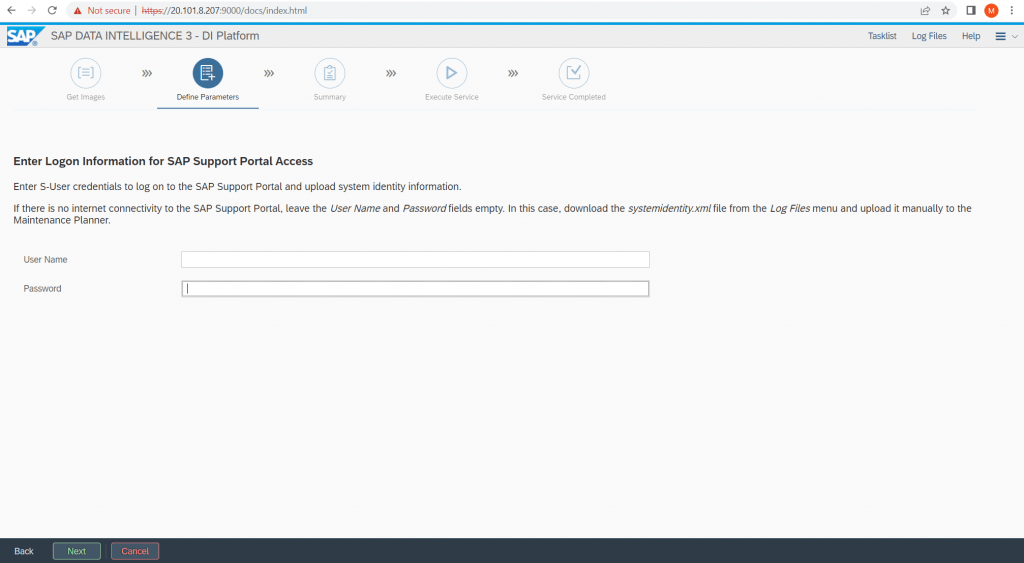

Enter your SAP credentials and click on Next at the bottom of the site.

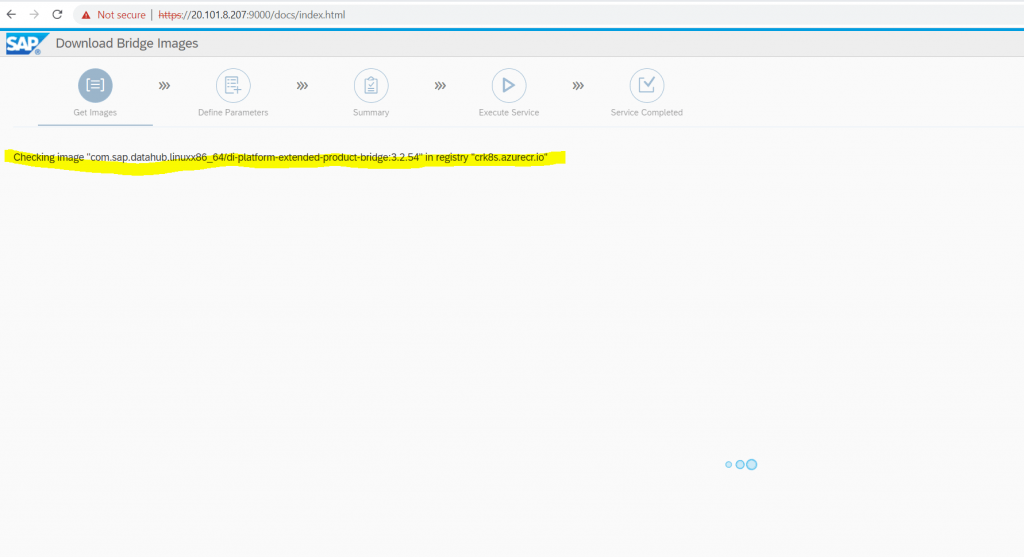

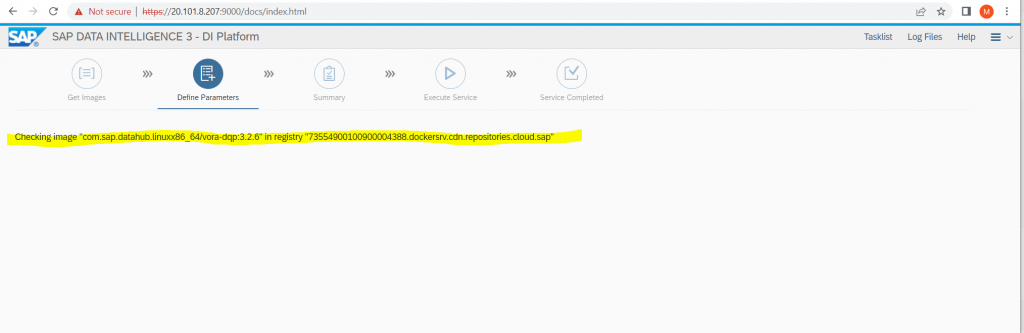

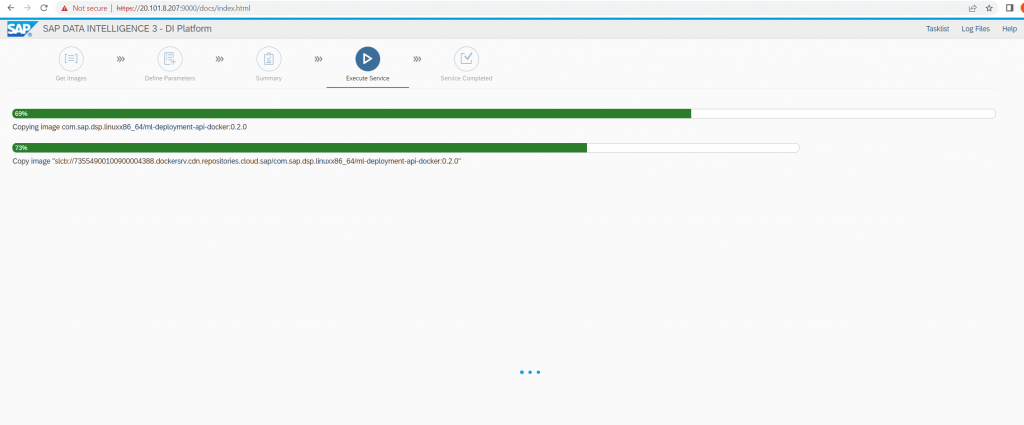

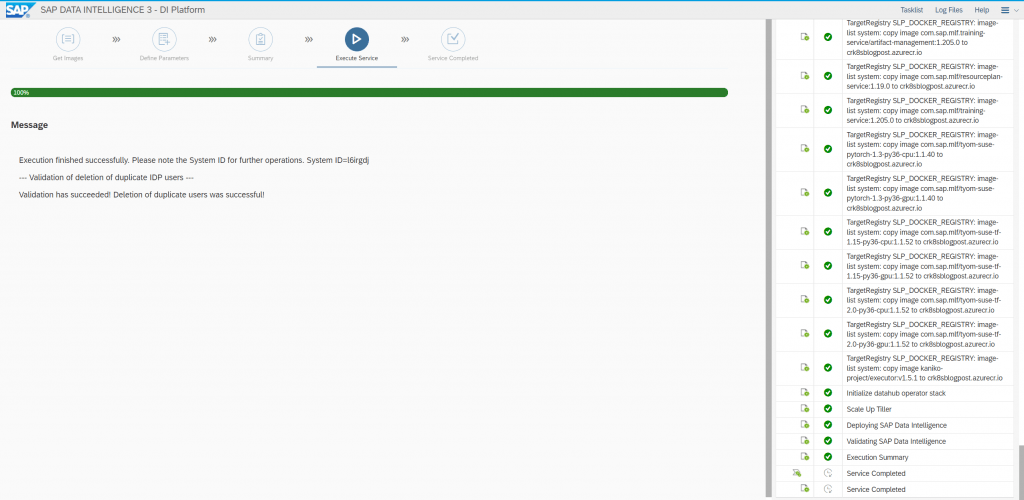

The SAP Data Intelligence images will be copied from the SAP Registry into your Azure Container Registry (ACR).

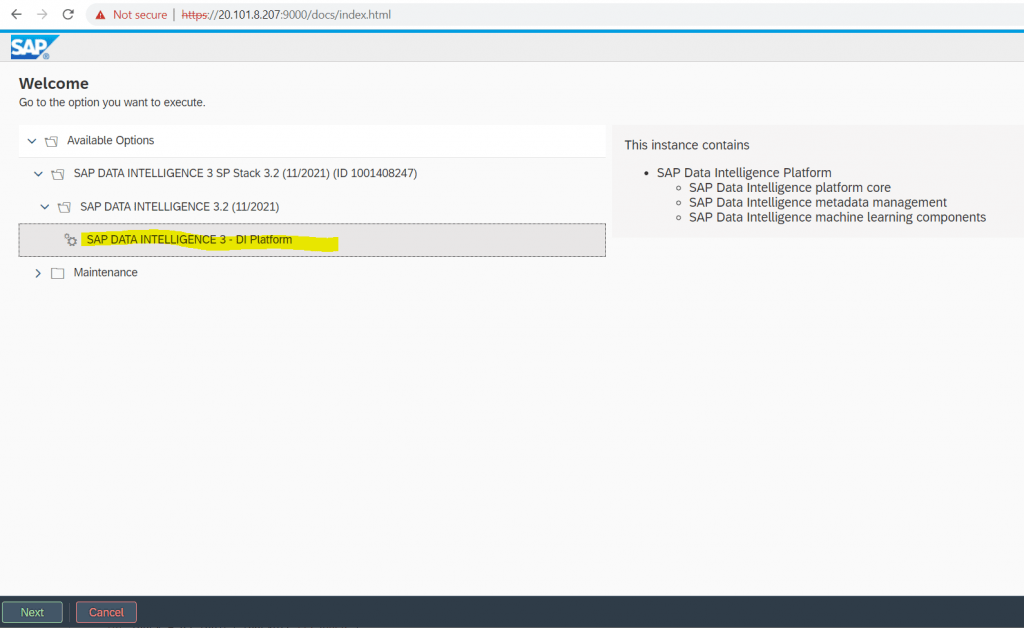

Select SAP Data Intelligence 3 – DI Platform and click on Next at the bottom of the site.

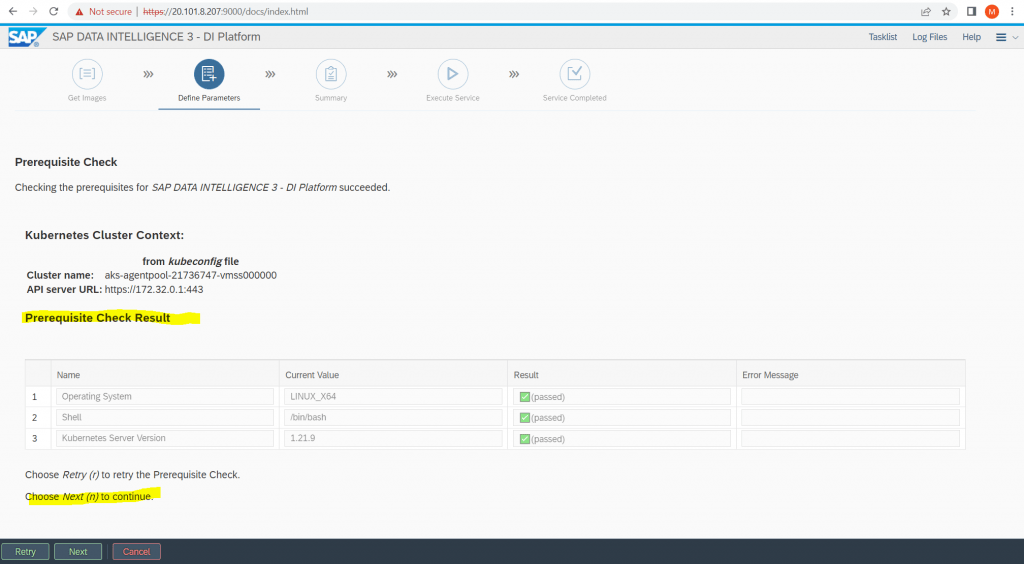

If the prerequisites check passed, click on Next to set up the installation.

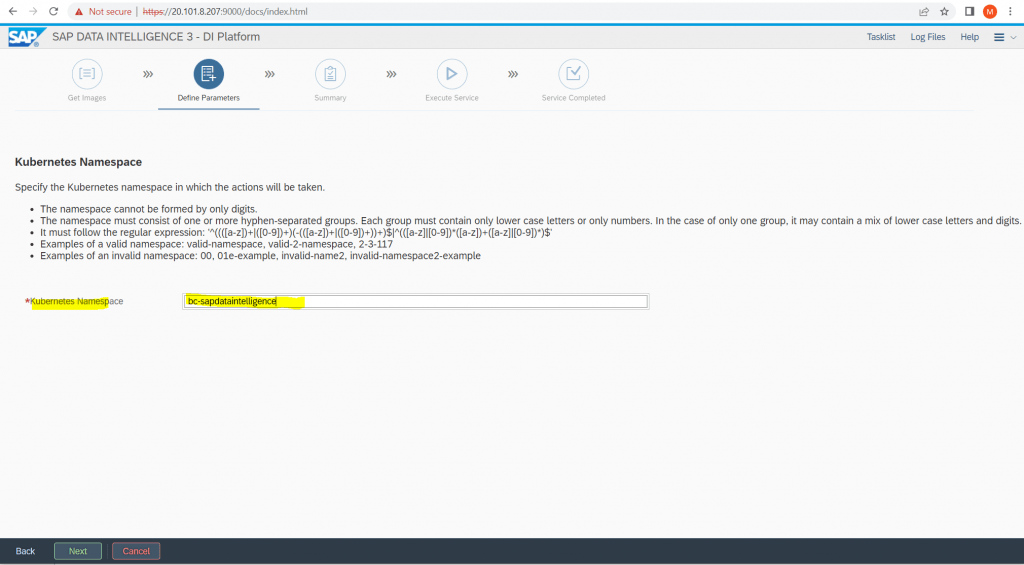

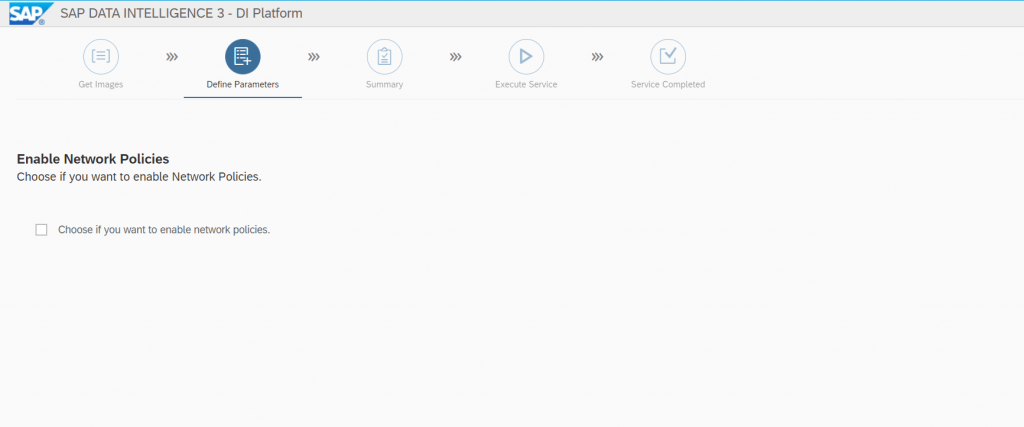

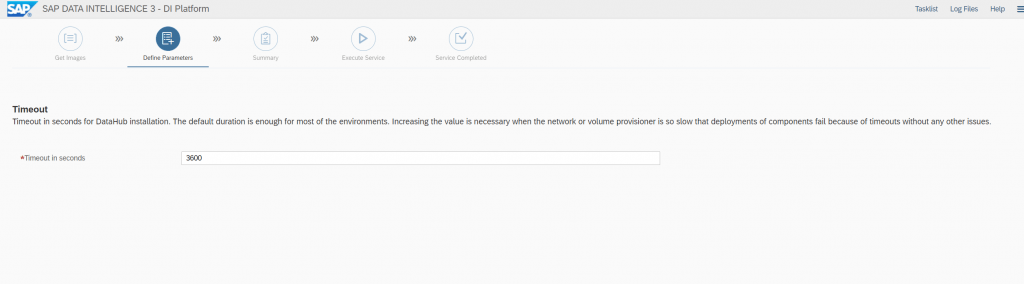

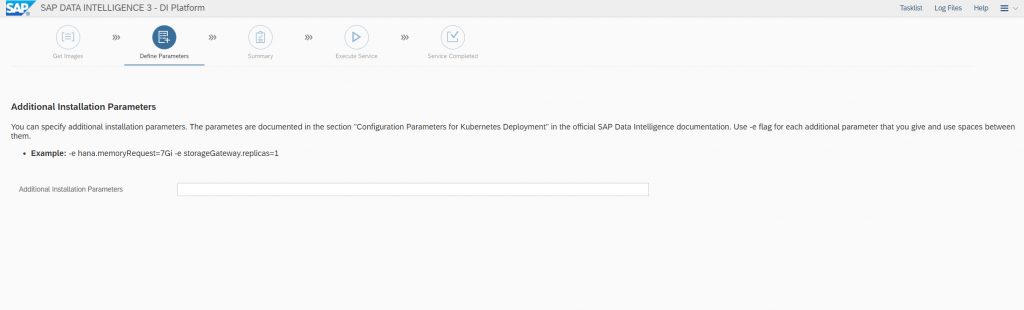

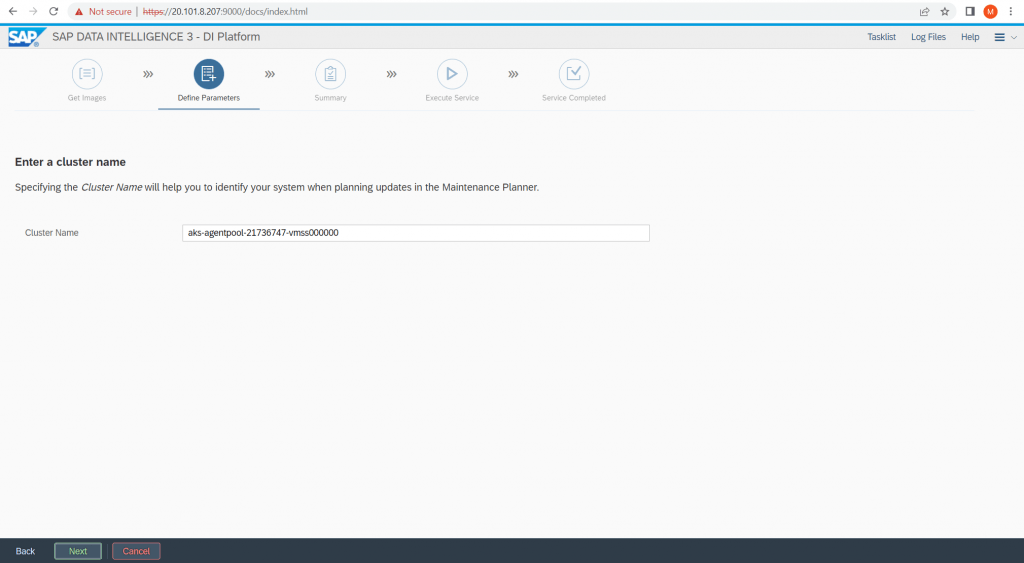

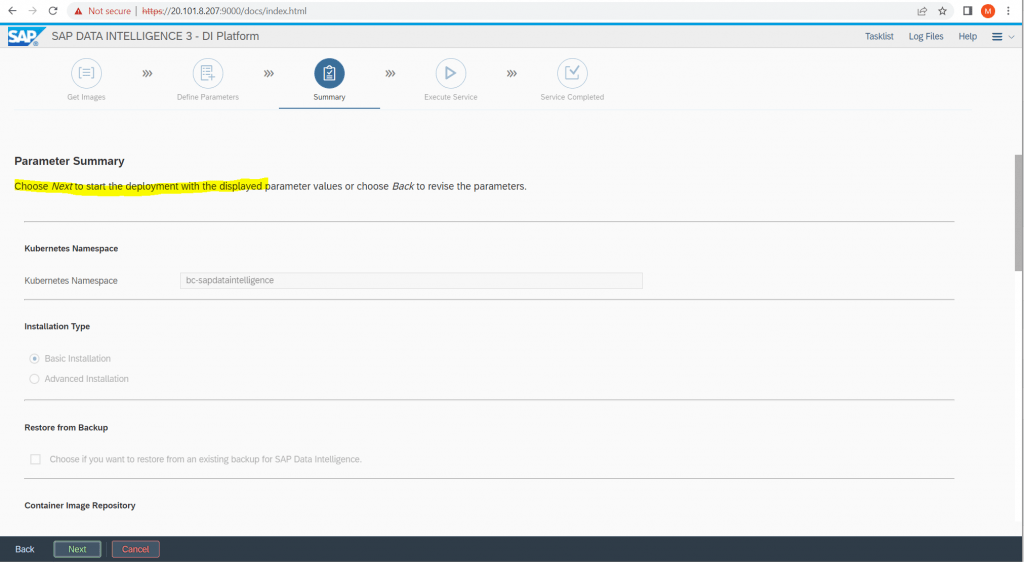

Enter the Kubernetes namespace in which SAP Data Intelligence should run.

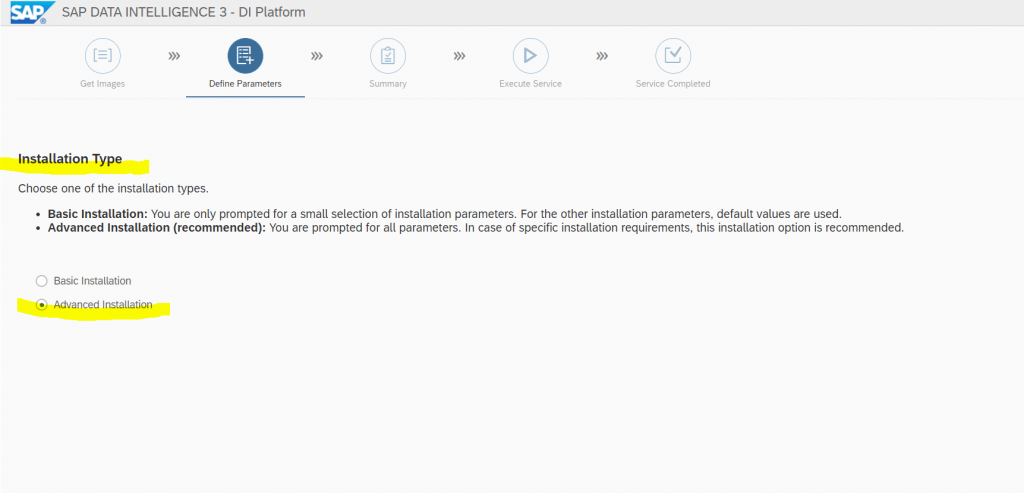

With the Basic Installation I ran into an error the first time, which I will show in detail in Part 3 under troubleshooting. To avoid it this time, I will choose Advanced Installation.

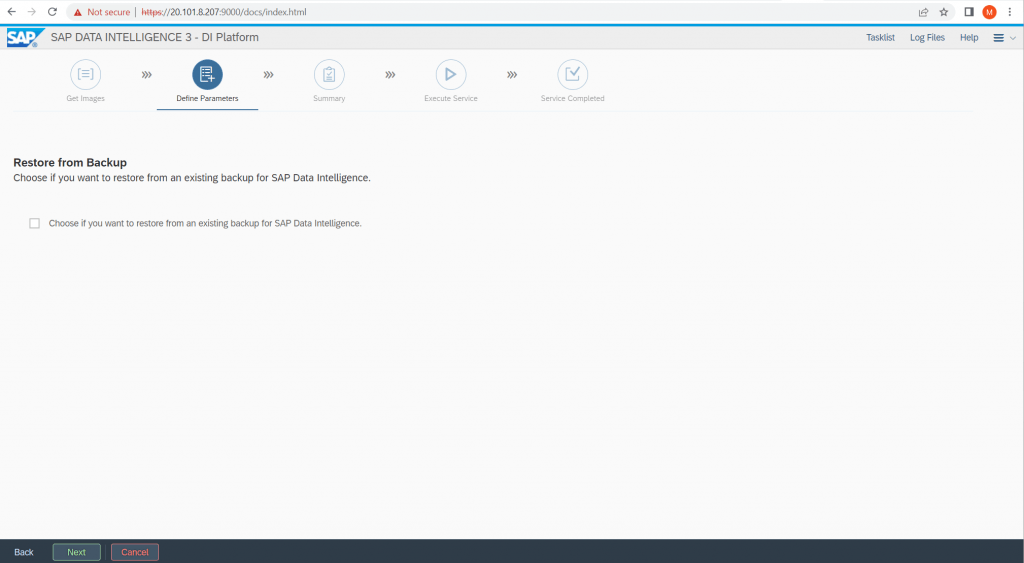

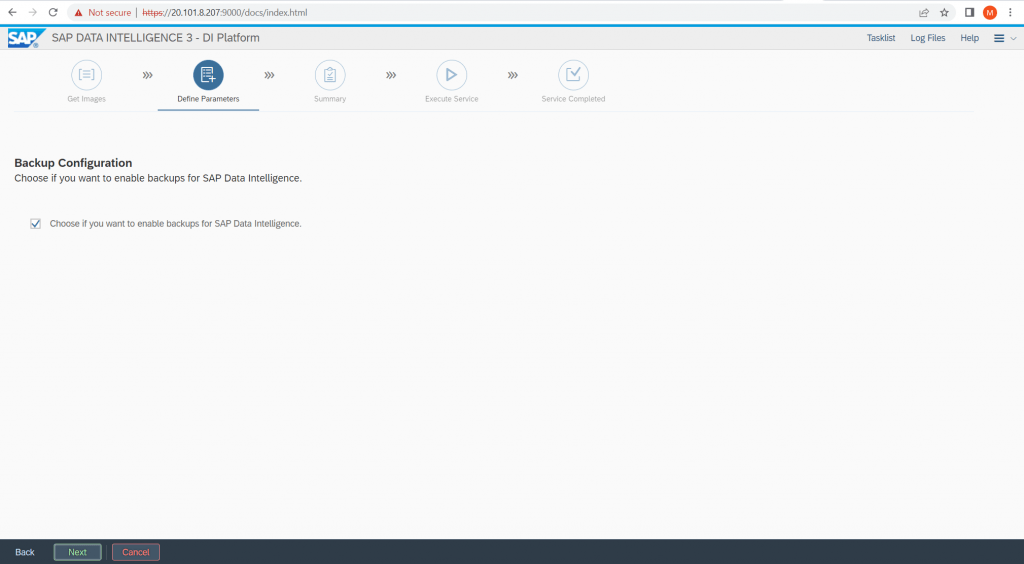

I don’t want to restore from a Backup.

Enter your SAP credentials in order to access the SAP Registry.

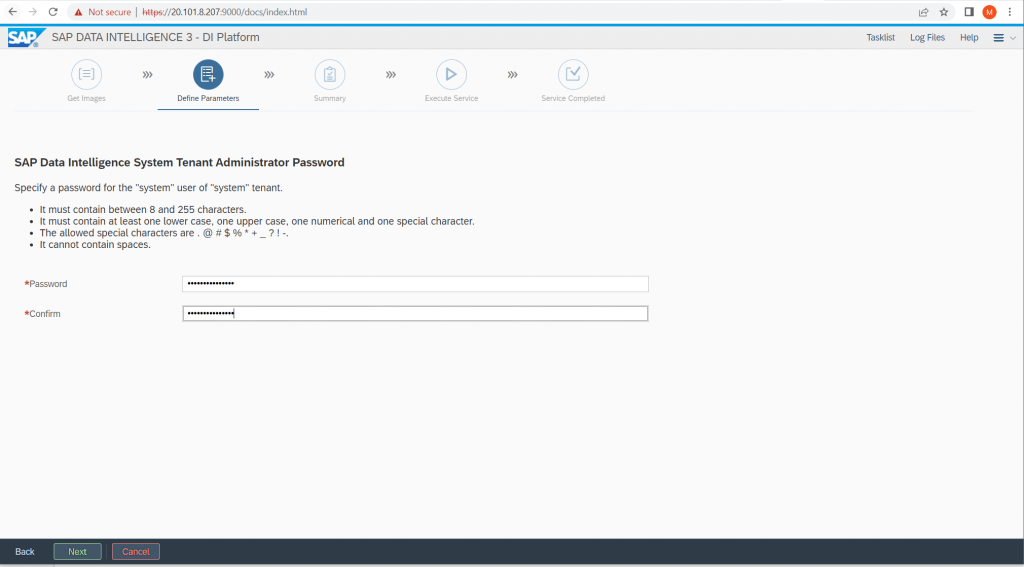

Specify a password for the “system” user of “system” tenant.

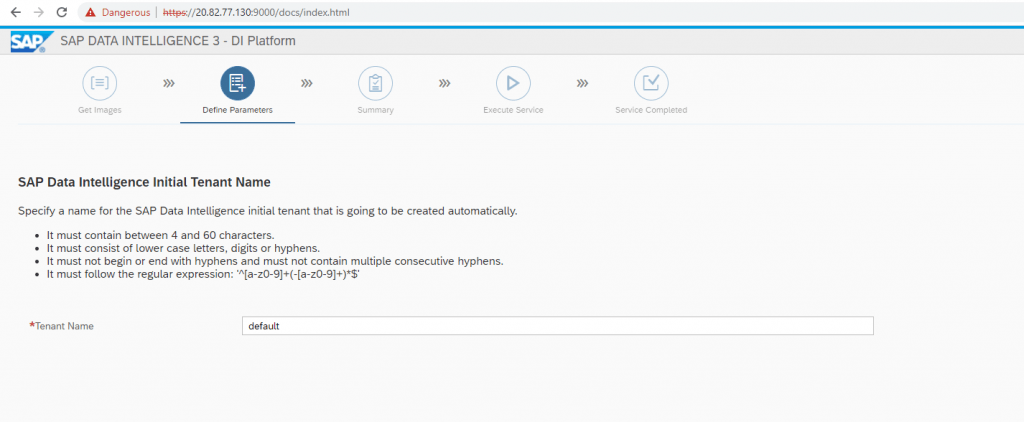

Specify a name for the SAP Data Intelligence initial tenant that is going to be created automatically.

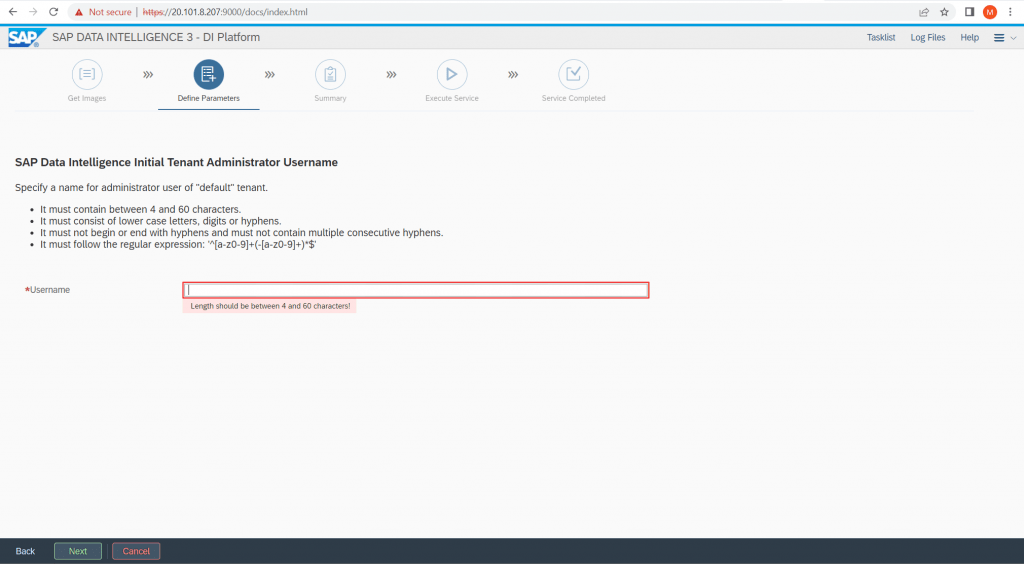

Specify a name for administrator user of “default” tenant.

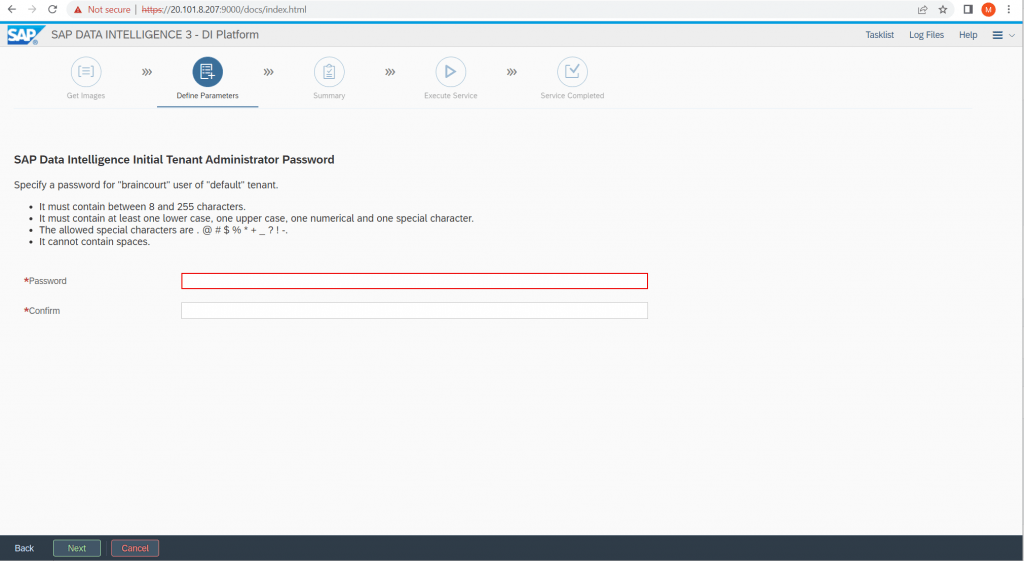

Specify a password for the user of “default” tenant.

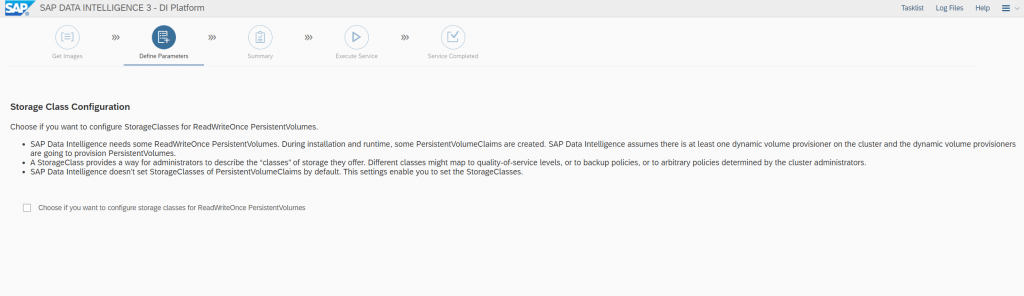

I don’t configure StorageClasses.

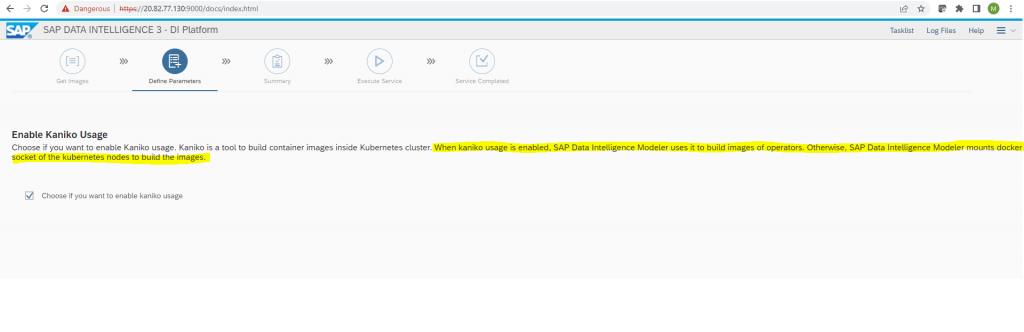

As mentioned previously, with the Basic Installation I ran into the following error even though the Docker daemon was running. I will cover this in detail in Part 3 under troubleshooting.

error building docker image. Docker daemon error: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

With the Advanced Installation I can enable Kaniko, which the SAP Data Intelligence Modeler then uses to build operator images instead of mounting the Docker socket of the Kubernetes nodes.

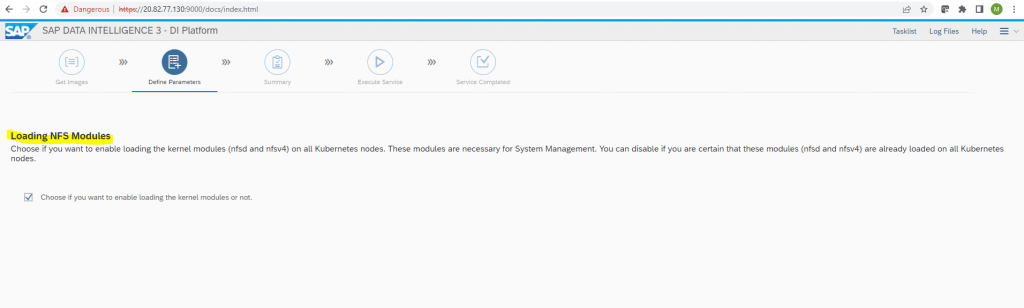

Loading NFS Modules

Enter your SAP credentials.

Images from the SAP repository will be copied to your Azure Container Registry (ACR).

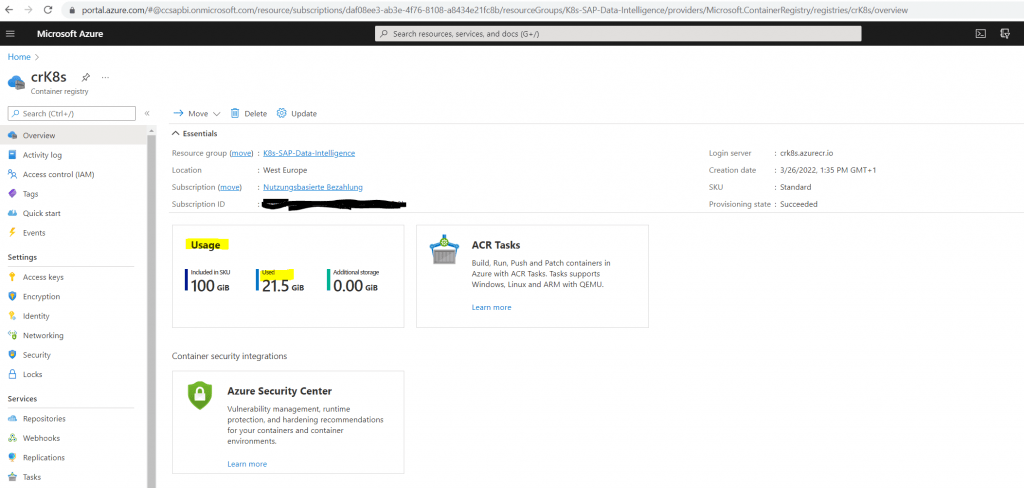

You will see that the used storage of your container registry will increase.

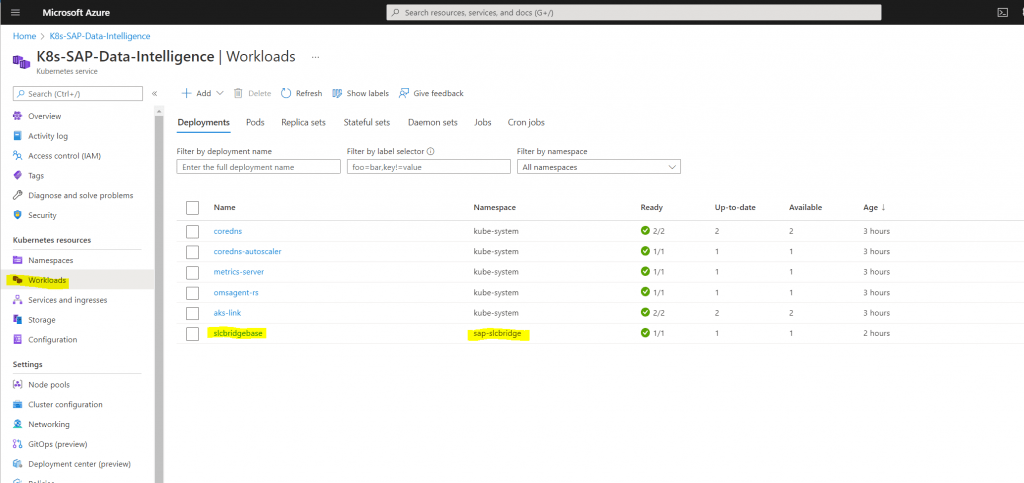
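The growing storage consumption can also be followed from the command line. The sketch below assumes the registry name crK8s used in this post; `show_registry_growth` is a hypothetical helper that simply wraps two Azure CLI calls.

```shell
# Registry name from this walkthrough; adjust it to your environment.
REGISTRY="crK8s"

# Show the storage usage of the registry and the repositories copied so far.
show_registry_growth() {
  az acr show-usage --name "$REGISTRY" --output table
  az acr repository list --name "$REGISTRY" --output table
}

# Usage, while the images are being copied:
#   show_registry_growth
```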

So far, no containers besides the SLCB itself have been deployed to the AKS cluster; at this point only the images are being copied.

The bcsapdataintelligence namespace has no deployments so far.

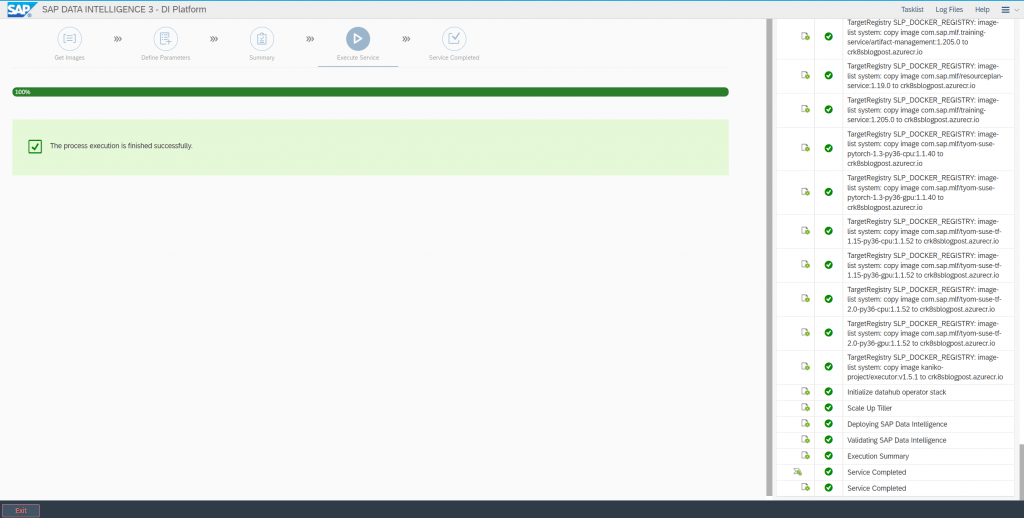
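The same observation can be made with kubectl by counting the deployments in the namespace. This is a minimal sketch, assuming the namespace name bcsapdataintelligence from this installation; `count_deployments` is a hypothetical helper name.

```shell
# Namespace entered for SAP Data Intelligence in this walkthrough.
DI_NAMESPACE="bcsapdataintelligence"

# Print the number of deployments currently present in the namespace.
count_deployments() {
  # --no-headers leaves one line per deployment; stderr is dropped so that
  # the "No resources found" notice does not end up in the count.
  kubectl get deployments --namespace "$DI_NAMESPACE" --no-headers 2>/dev/null | wc -l | tr -d ' '
}

# Usage:
#   echo "Deployments so far: $(count_deployments)"
```

While the images are still being copied, the count is expected to stay at zero and to rise once the actual installation starts.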

Finished successfully! You can click on Exit.

At this point the installation of SAP Data Intelligence has finished successfully. The last step is to publish the portal running inside the Azure Kubernetes cluster externally through an ingress and an external IP address.

An ingress controller is a piece of software that provides reverse proxy, configurable traffic routing, and TLS termination for Kubernetes services. Kubernetes ingress resources are used to configure the ingress rules and routes for individual Kubernetes services. Using an ingress controller and ingress rules, a single IP address can be used to route traffic to multiple services in a Kubernetes cluster.

Source: https://docs.microsoft.com/en-us/azure/aks/ingress-tls?tabs=azure-cli

In Part 3 we will install and configure the ingress controller to expose SAP Data Intelligence to the internet.

Links

Official Installation Guide SAP Data Intelligence 3.2.3

https://help.sap.com/viewer/a8d90a56d61a49718ebcb5f65014bbe7/3.2.3/en-US/c22b0ff1253a4687ba99fe6795d98e89.html

Introduction to Azure Kubernetes Service

https://docs.microsoft.com/en-us/learn/modules/intro-to-azure-kubernetes-service/?WT.mc_id=APC-Kubernetesservices

Quickstart: Create an Azure container registry using the Azure portal

https://docs.microsoft.com/en-us/azure/container-registry/container-registry-get-started-portal

Use the portal to create an Azure AD application and service principal that can access resources

https://docs.microsoft.com/en-us/azure/active-directory/develop/howto-create-service-principal-portal

Azure Container Registry authentication with service principals

https://docs.microsoft.com/en-us/azure/container-registry/container-registry-auth-service-principal

Authenticate with Azure Container Registry from Azure Kubernetes Service

https://docs.microsoft.com/en-us/azure/aks/cluster-container-registry-integration?tabs=azure-cli

How to install the Azure CLI

https://docs.microsoft.com/en-us/cli/azure/install-azure-cli

Install the Azure CLI on Linux

https://docs.microsoft.com/en-us/cli/azure/install-azure-cli-linux?pivots=apt

Sign in with Azure CLI

https://docs.microsoft.com/en-us/cli/azure/authenticate-azure-cli