Set up a Microsoft iSCSI Target Server

In this post we will see how we can set up the Microsoft iSCSI Target Server to provide a network-accessible block storage device at low cost. This is a great and affordable solution for development, test, demonstration and lab environments.

Internet Small Computer Systems Interface, or iSCSI, is an Internet Protocol-based storage networking standard for linking data storage facilities. iSCSI provides block-level access to storage devices by carrying SCSI commands over a TCP/IP network. It facilitates data transfers over intranets and the management of storage over long distances. It can be used to transmit data over local area networks (LANs), wide area networks (WANs), or the Internet and can enable location-independent data storage and retrieval.

Introduction

iSCSI Target Server is a role service in Windows Server that enables you to make storage available via the iSCSI protocol. This is useful for providing access to storage on your Windows server for clients that can’t communicate over the native Windows file sharing protocol, SMB.

iSCSI Target Server is ideal for the following:

- Network and diskless boot: By using boot-capable network adapters or a software loader, you can deploy hundreds of diskless servers. With iSCSI Target Server, the deployment is fast. In Microsoft internal testing, 256 computers deployed in 34 minutes. By using differencing virtual hard disks, you can save up to 90% of the storage space that was used for operating system images. This is ideal for large deployments of identical operating system images, such as on virtual machines running Hyper-V or in high-performance computing (HPC) clusters.

- Server application storage: Some applications require block storage. iSCSI Target Server can provide these applications with continuously available block storage. Because the storage is remotely accessible, it can also consolidate block storage for central or branch office locations.

- Heterogeneous storage: iSCSI Target Server supports non-Microsoft iSCSI initiators, making it easy to share storage on servers in a mixed software environment.

- Development, test, demonstration, and lab environments: When iSCSI Target Server is enabled, a computer running the Windows Server operating system becomes a network-accessible block storage device. This is useful for testing applications prior to deployment in a storage area network (SAN).

Enabling iSCSI Target Server to provide block storage leverages your existing Ethernet network. No additional hardware is needed. If high availability is an important criterion, consider setting up a high-availability cluster. You need shared storage for a high-availability cluster—either hardware for Fibre Channel storage or a serial attached SCSI (SAS) storage array.

If you enable guest clustering, you need to provide block storage. Any servers running Windows Server software with iSCSI Target Server can provide block storage.

Source: https://learn.microsoft.com/en-us/windows-server/storage/iscsi/iscsi-target-server

In short, iSCSI is:

- A transport protocol for SCSI that runs over TCP.

- A way of encapsulating SCSI commands for transmission over an IP network.

- A protocol for a new generation of storage systems that use native TCP/IP.

- Standardized in RFC 3720 (since consolidated and updated by RFC 7143).

Block Storage

Block storage is technology that controls data storage and storage devices. It takes any data, like a file or database entry, and divides it into blocks of equal sizes. The block storage system then stores the data block on underlying physical storage in a manner that is optimized for fast access and retrieval. Developers prefer block storage for applications that require efficient, fast, and reliable data access. Think of block storage as a more direct pipeline to the data. By contrast, file storage has an extra layer consisting of a file system (NFS, SMB) to process before accessing the data.

Developers often deploy block storage as a storage area network (SAN). A SAN is a complex network technology that presents block storage to multiple networked systems as if those blocks were locally attached devices. SANs typically use Fibre Channel interconnects. In contrast, network attached storage (NAS) is a single device that serves files over Ethernet.

Source: https://aws.amazon.com/what-is/block-storage/

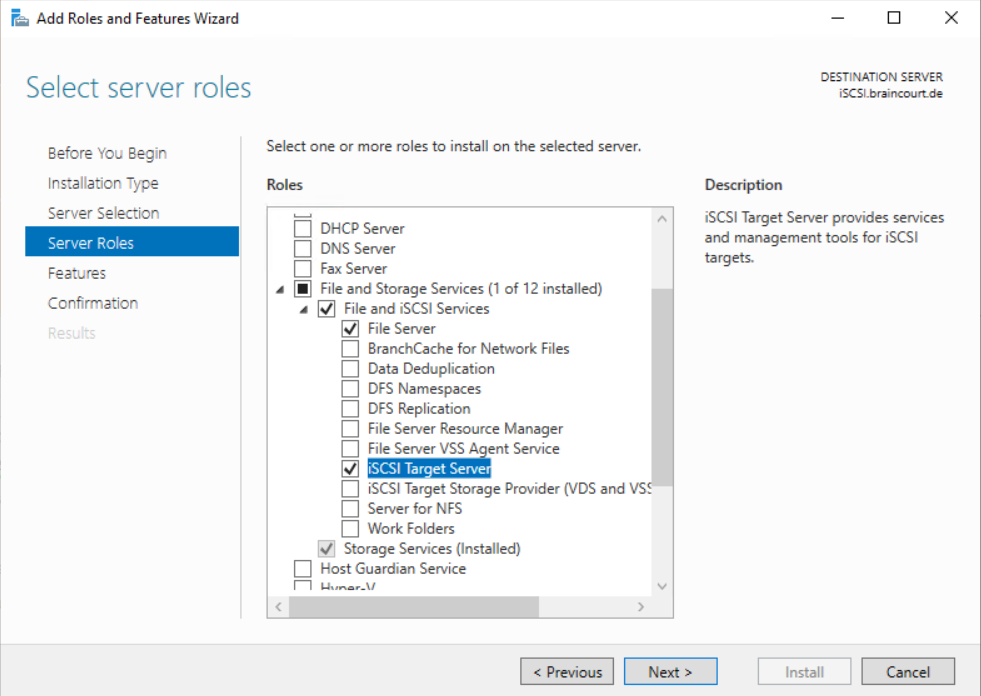

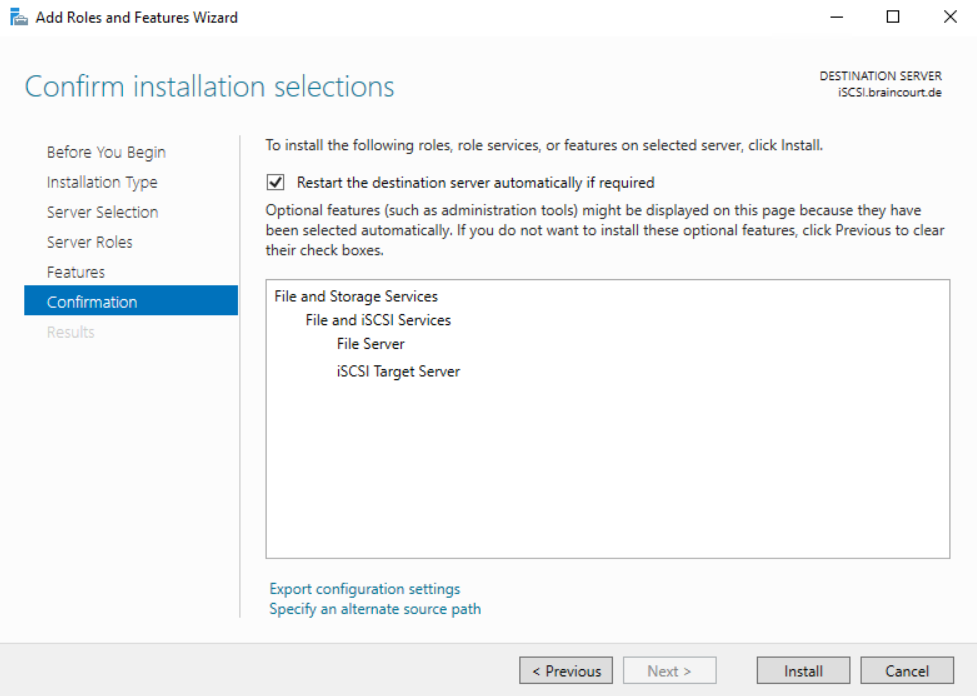

Install the iSCSI Target Server Role

For this I will use a Windows Server 2022 virtual machine. In Server Manager we first need to install the iSCSI Target Server role.

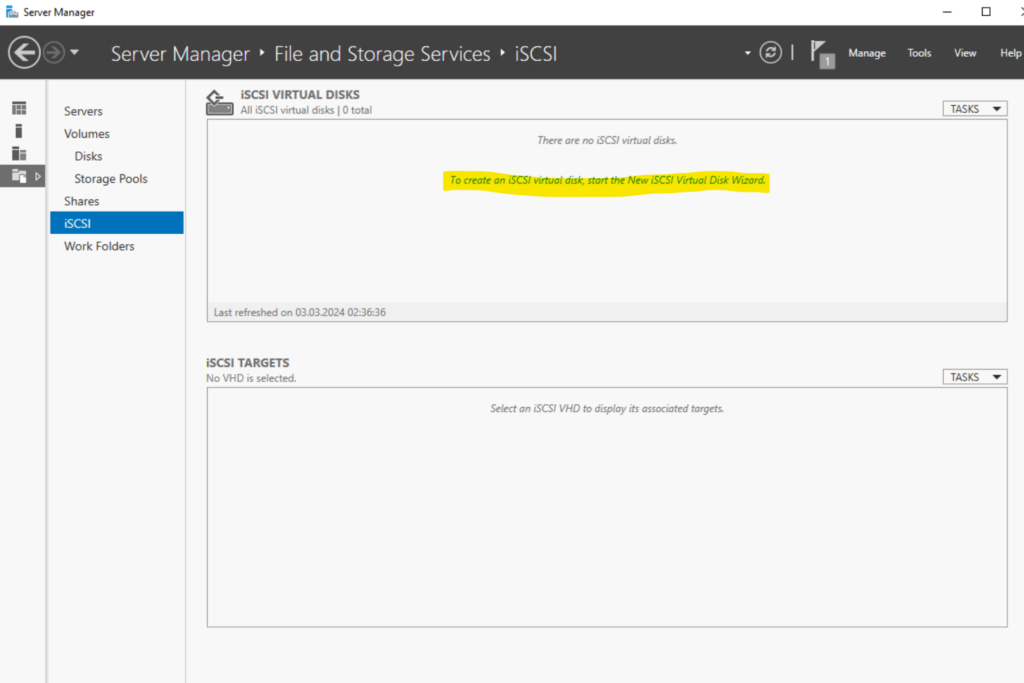
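The role can also be installed from an elevated PowerShell session instead of Server Manager. The following is a minimal sketch, assuming it is run locally on the future target server; FS-iSCSITarget-Server is the feature name of the iSCSI Target Server role service.

```powershell
# Install the iSCSI Target Server role service together with its management tools.
Install-WindowsFeature -Name FS-iSCSITarget-Server -IncludeManagementTools

# Confirm that the role service is now reported as installed.
Get-WindowsFeature -Name FS-iSCSITarget-Server
```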

Configure the iSCSI Target Server

Create an iSCSI LUN (iSCSI virtual disk) to share storage. The iSCSI virtual disk is ultimately stored as a virtual hard disk (VHD) file on the server. Click on the link shown below to start the New iSCSI Virtual Disk Wizard.

In computer storage, a logical unit number, or LUN, is a number used to identify a logical unit, which is a device addressed by the SCSI protocol or by Storage Area Network protocols that encapsulate SCSI, such as Fibre Channel or iSCSI.

A LUN may be used with any device which supports read/write operations, such as a tape drive, but is most often used to refer to a logical disk as created on a SAN. Though not technically correct, the term “LUN” is often also used to refer to the logical disk itself.

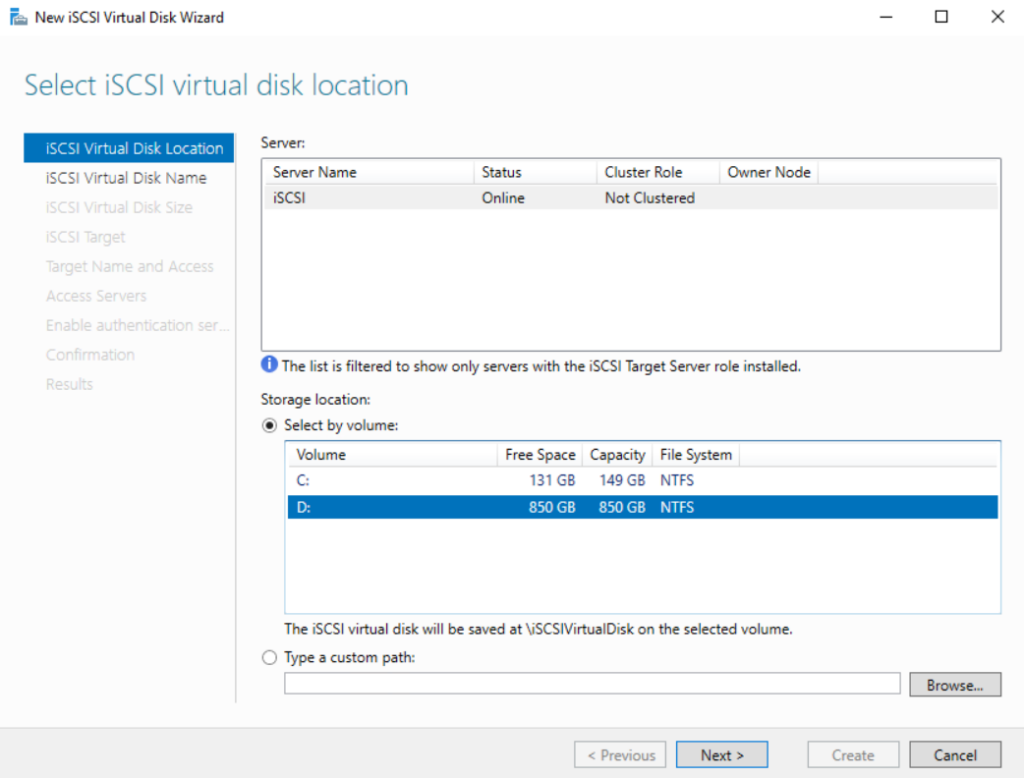

Select the server and the volume where the iSCSI virtual disk will be saved. Then click on Next.

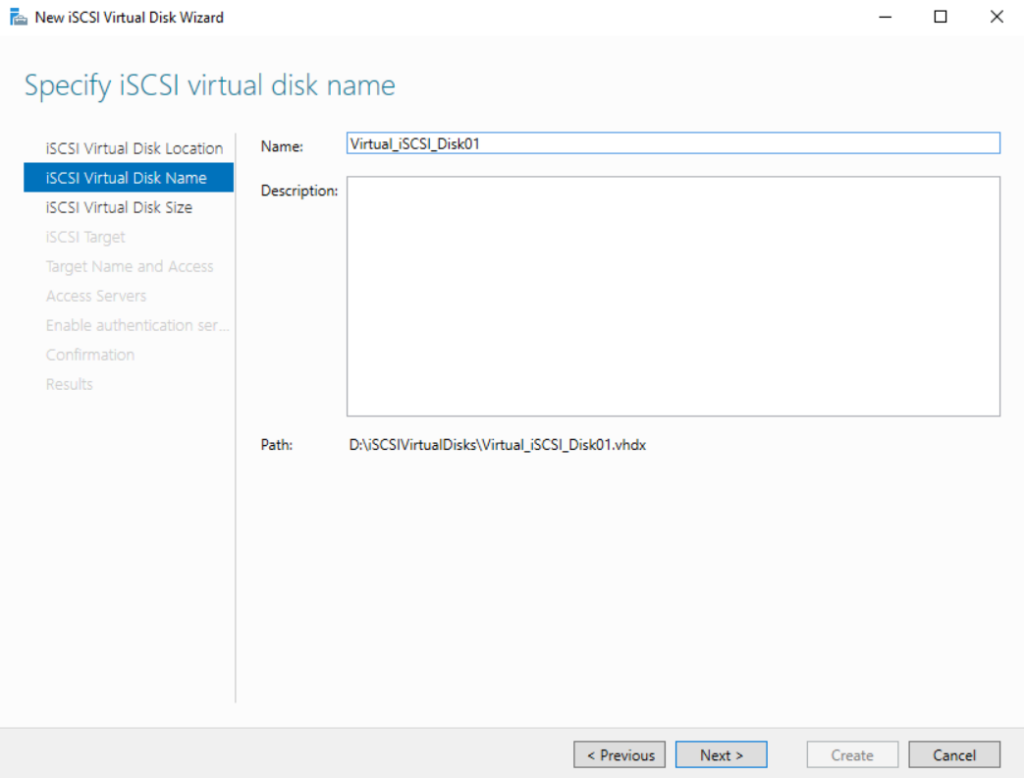

Provide a name for the iSCSI virtual disk.

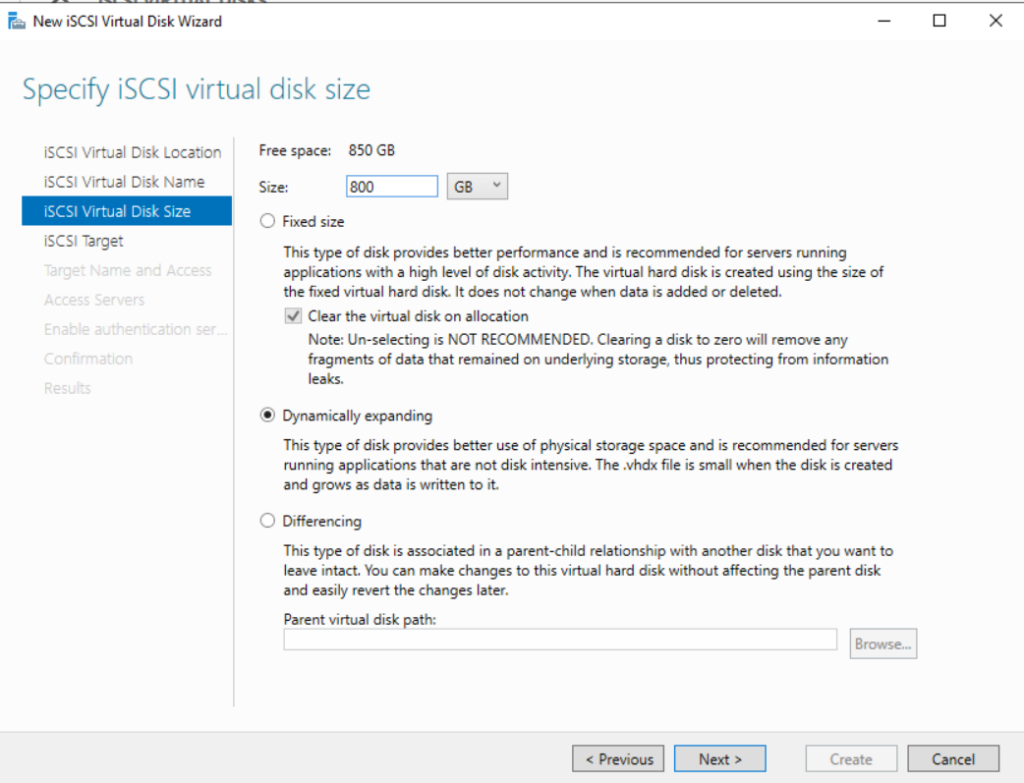

Specify the size for the virtual disk. I will also select Dynamically expanding here, because this setup is only for demonstration and testing purposes and performance is not crucial in my case.

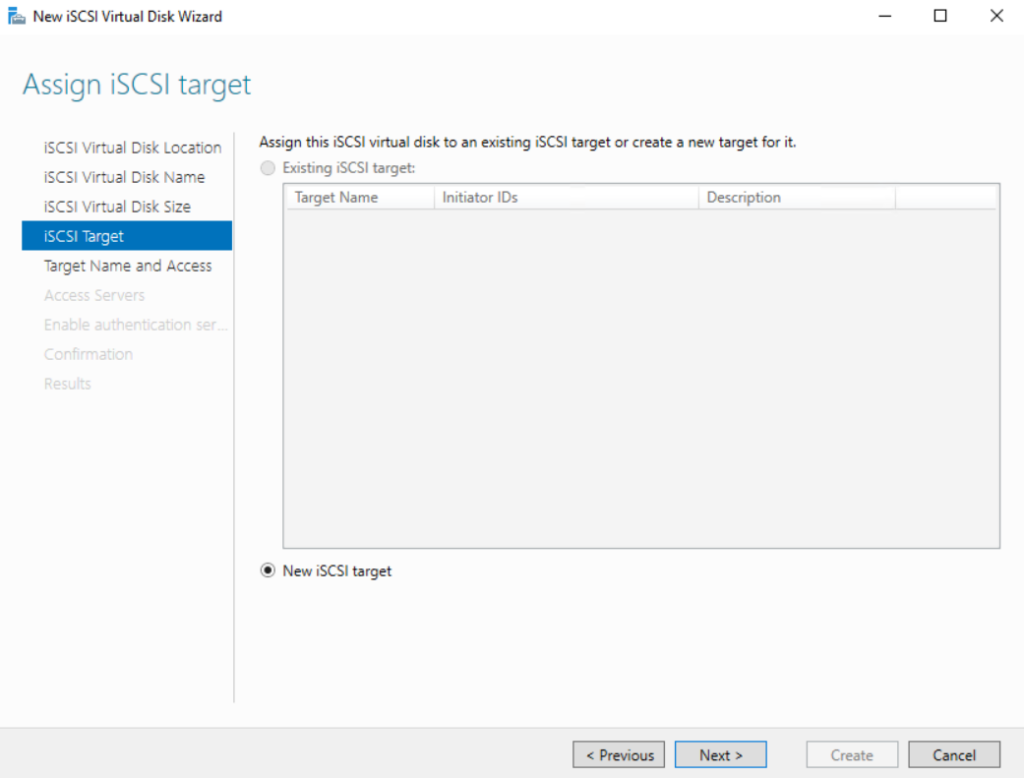
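The wizard steps up to this point roughly correspond to a single cmdlet on the target server. This is only a sketch: the folder, file name, and 20 GB size are hypothetical placeholders, not values taken from my environment.

```powershell
# Hypothetical location of the iSCSI virtual disk; adjust to your environment.
$vhdPath = 'C:\iSCSIVirtualDisks\demo-lun01.vhdx'

# Make sure the target folder exists before creating the disk in it.
New-Item -ItemType Directory -Path (Split-Path -Path $vhdPath) -Force | Out-Null

# Without -UseFixed the disk should be created as dynamically expanding.
New-IscsiVirtualDisk -Path $vhdPath -SizeBytes 20GB
```

Adding the -UseFixed switch would create a fixed-size disk instead, which is usually the better choice when performance matters.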

On the Assign iSCSI target page, the only option available to me is to create a new iSCSI target for my virtual disk, because I haven’t created any other iSCSI targets so far.

The iSCSI specification refers to a storage resource located on an iSCSI server (more generally, one of potentially many instances of iSCSI storage nodes running on that server) as a target.

An iSCSI target is often a dedicated network-connected hard disk storage device, but may also be a general-purpose computer, since as with initiators, software to provide an iSCSI target is available for most mainstream operating systems.

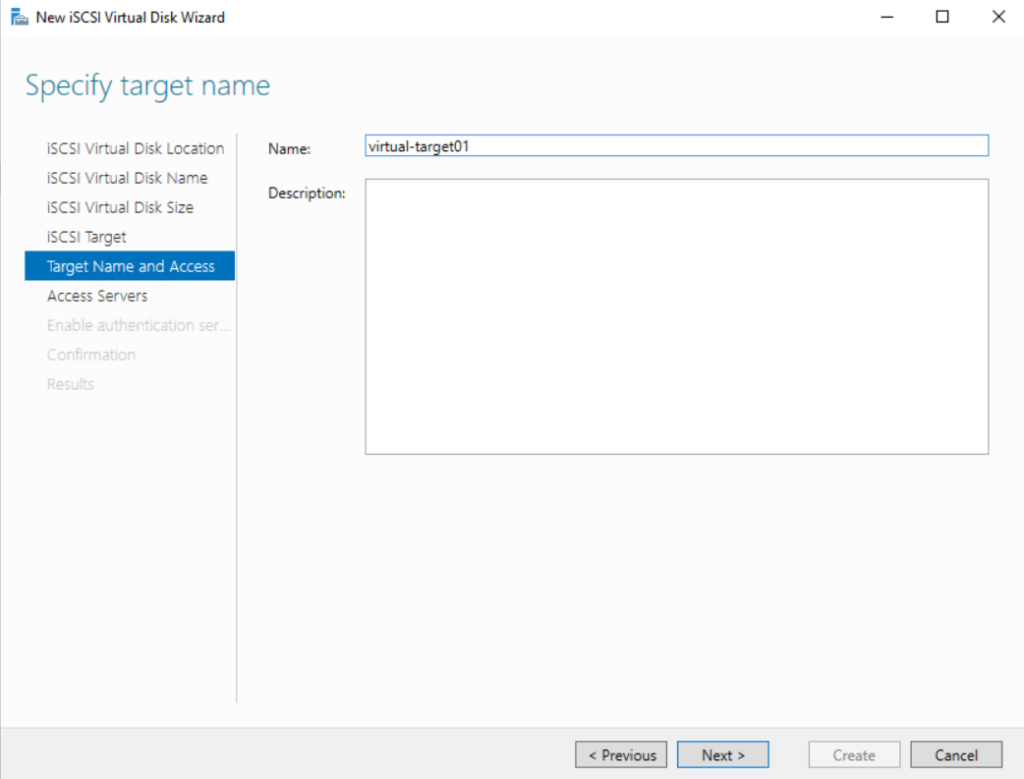

Specify a target name for the new iSCSI target.

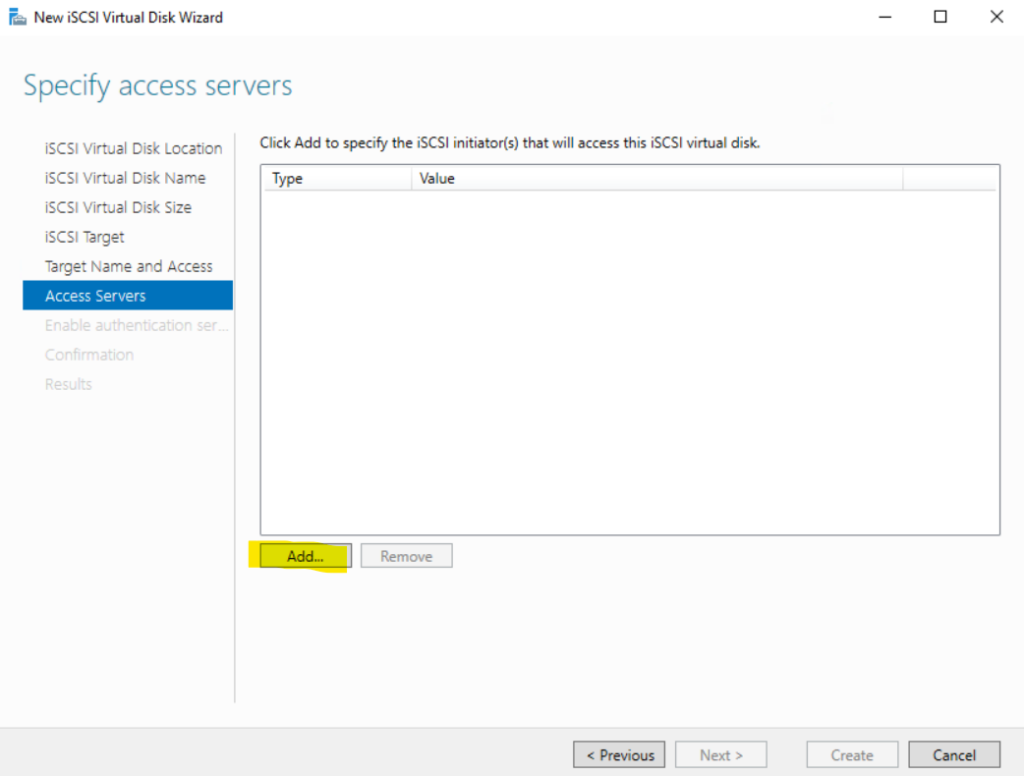

On the Specify access servers page we need to add the servers (the so-called iSCSI initiators) that should later have access to this iSCSI virtual disk.

An initiator functions as an iSCSI client. An initiator typically serves the same purpose to a computer as a SCSI bus adapter would, except that, instead of physically cabling SCSI devices (like hard drives and tape changers), an iSCSI initiator sends SCSI commands over an IP network.

Click on Add… to add the servers.

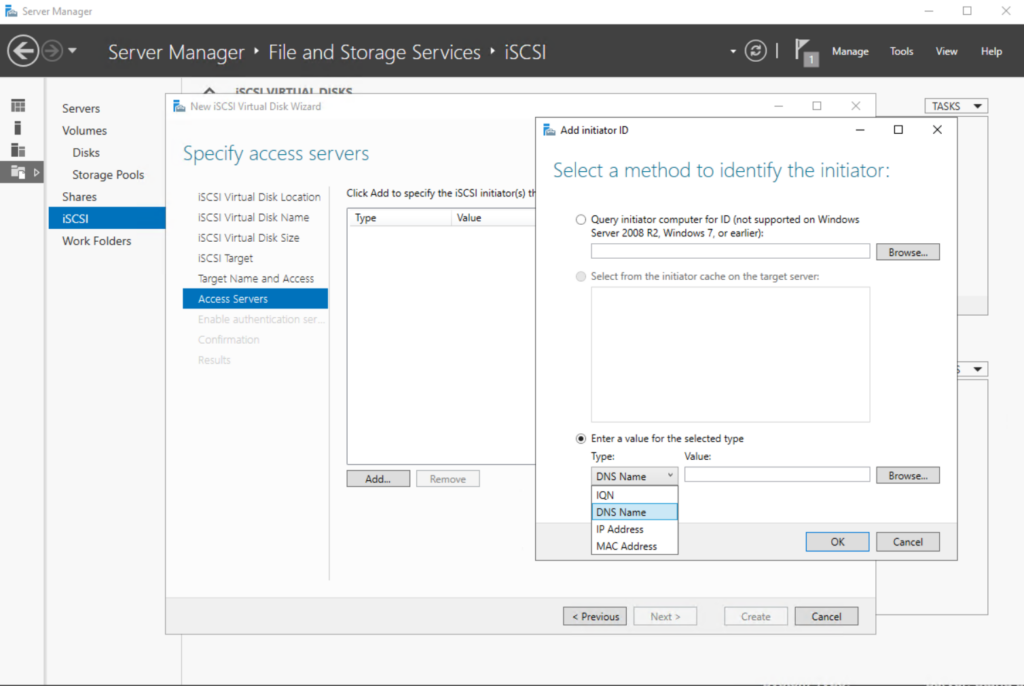

For the initiator ID I will select DNS Name and browse to the server (computer) in Active Directory that should have access to the iSCSI virtual disk.

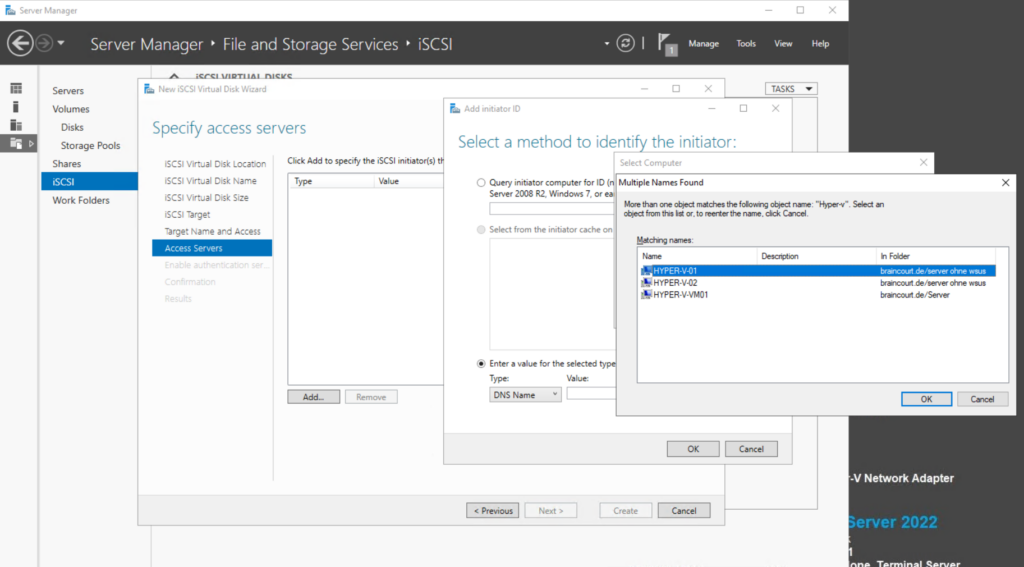

Here I will select one of two Hyper-V servers which will use this iSCSI virtual disk later for clustering.

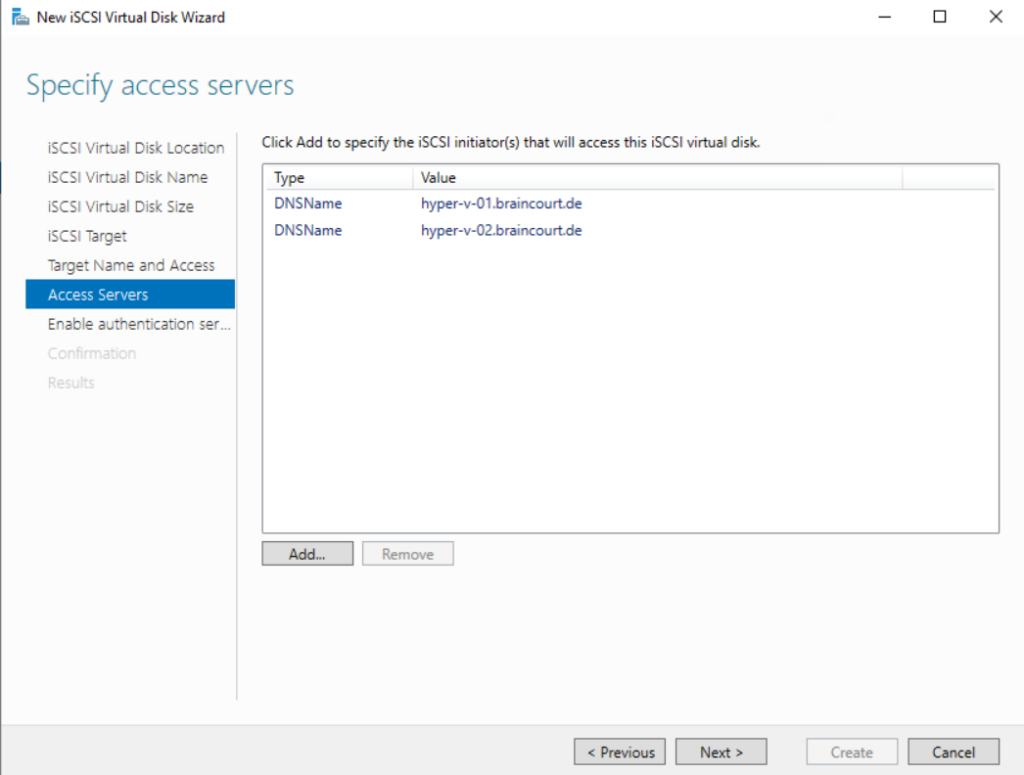

I also added my second Hyper-V server. Then click Next.

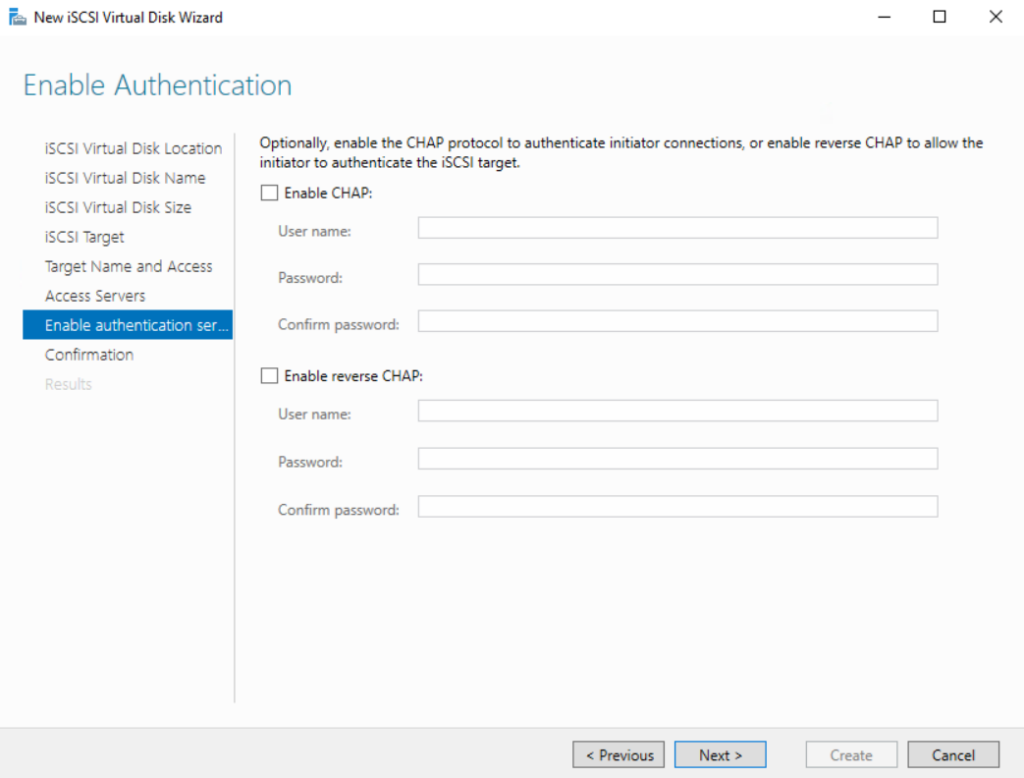
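Creating the target and its access list can be sketched in PowerShell as well. The target name, the disk path, and the two host names below are hypothetical; the DNSName: prefix corresponds to the DNS Name initiator ID type chosen in the wizard.

```powershell
# Hypothetical names; replace them with your own target, disk path, and initiators.
$targetName = 'hyperv-cluster'
$vhdPath    = 'C:\iSCSIVirtualDisks\demo-lun01.vhdx'
$initiators = @(
    'DNSName:hv01.lab.example.com',
    'DNSName:hv02.lab.example.com'
)

# Create the target and allow only the listed initiators to connect.
New-IscsiServerTarget -TargetName $targetName -InitiatorIds $initiators

# Map the existing iSCSI virtual disk to the target so it is exposed as a LUN.
Add-IscsiVirtualDiskTargetMapping -TargetName $targetName -Path $vhdPath
```

Initiators can alternatively be identified by IQN, IP address, or MAC address, using the IQN:, IPAddress:, or MACAddress: prefix.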

On the Enable Authentication page you can optionally enable CHAP or reverse CHAP to secure access to the iSCSI virtual disk. I will leave this disabled, as this setup is only for demonstration and testing purposes. Then click Next.

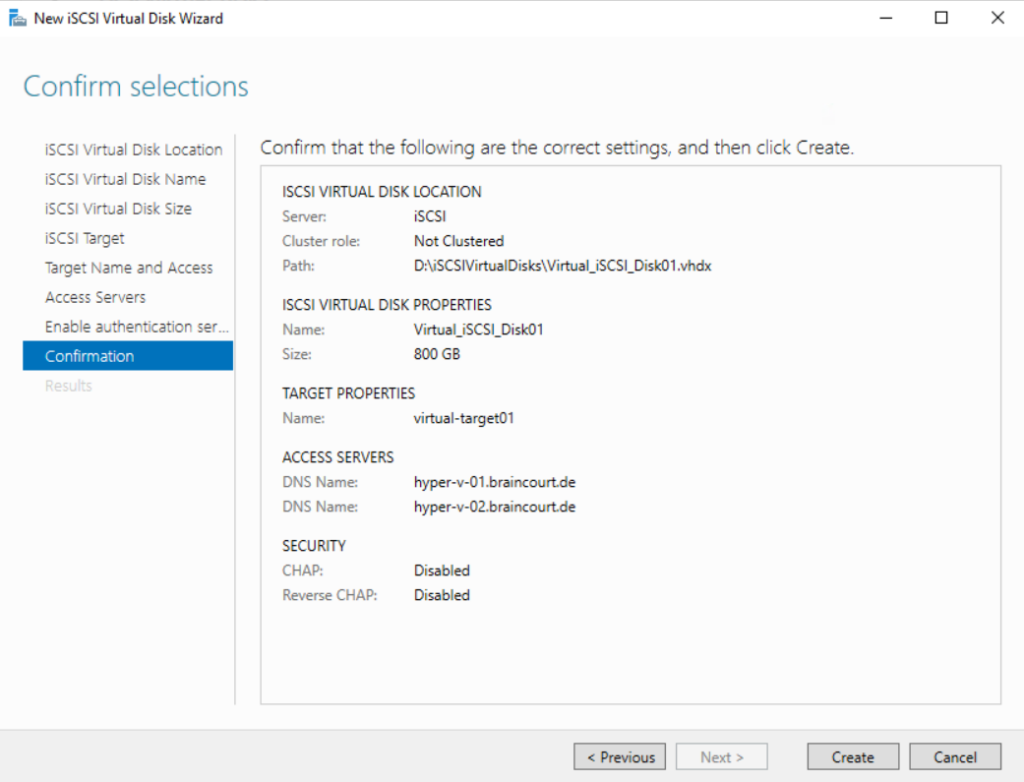
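For anything beyond a lab, CHAP can also be enabled on an existing target later on. The following is a hedged sketch, assuming a target with the hypothetical name hyperv-cluster already exists; the user name and secret are entered interactively rather than stored in the script.

```powershell
# Prompt for the CHAP user name and secret the initiators will have to present.
$chap = Get-Credential -Message 'CHAP user name and secret for the iSCSI target'

# Enable one-way CHAP on the (hypothetically named) target.
Set-IscsiServerTarget -TargetName 'hyperv-cluster' -EnableChap $true -Chap $chap
```

The initiators then have to supply the same credentials when they connect to the target.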

On the Confirm selections page I will click on Create to finally create the iSCSI virtual disk.

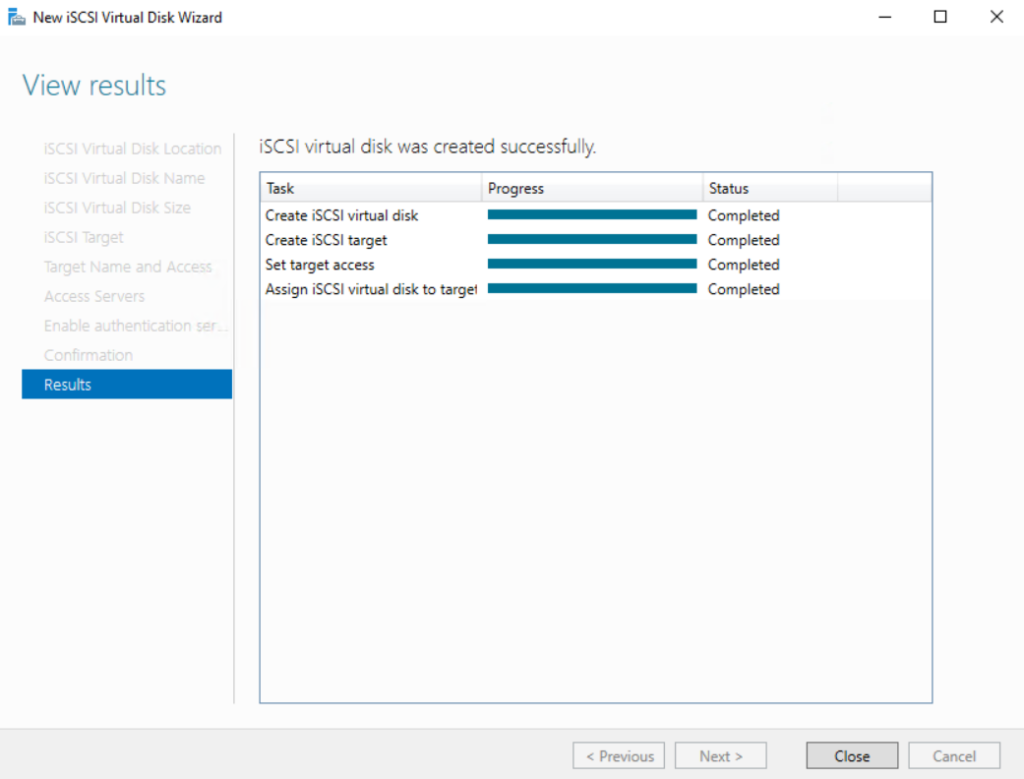

Finally the iSCSI virtual disk is created.

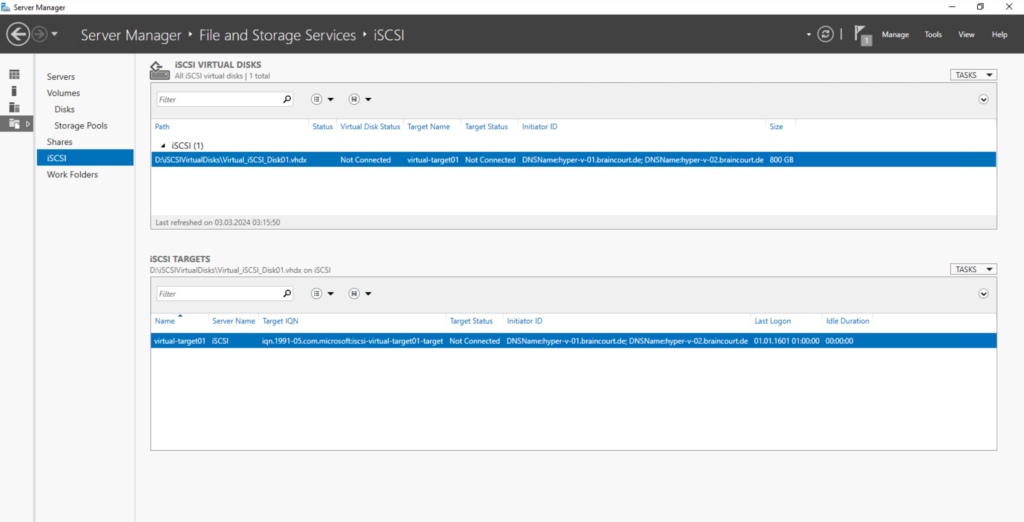
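The result can also be checked from PowerShell on the target server. This is a small sketch that only reads the configuration and changes nothing.

```powershell
# List the iSCSI virtual disks known to this target server.
Get-IscsiVirtualDisk | Select-Object Path, DiskType, Status

# List the targets with their IQN, allowed initiators, and mapped disks.
Get-IscsiServerTarget | Select-Object TargetName, TargetIqn, InitiatorIds, LunMappings
```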

The next step is to configure the iSCSI Initiator on the access servers that need to access our iSCSI virtual disk.

Configure the iSCSI Initiator

Microsoft provides an iSCSI initiator (a software initiator), a tool that allows you to connect a Windows computer to an external iSCSI-based storage array.

This iSCSI initiator manages the iSCSI sessions between the client and the remote iSCSI target devices, in our case the iSCSI virtual disk on the iSCSI Target Server.

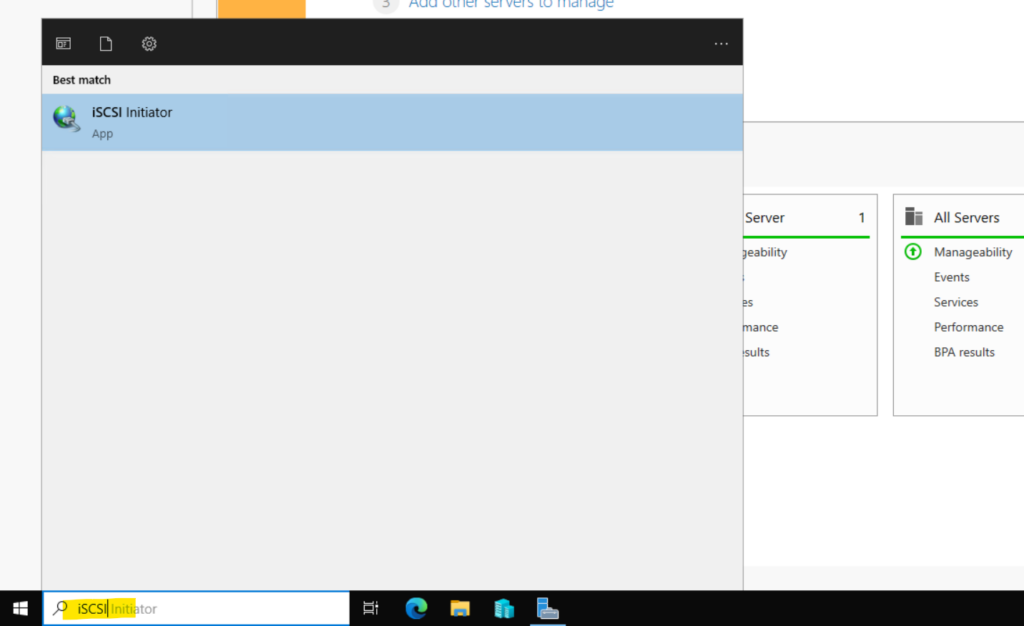

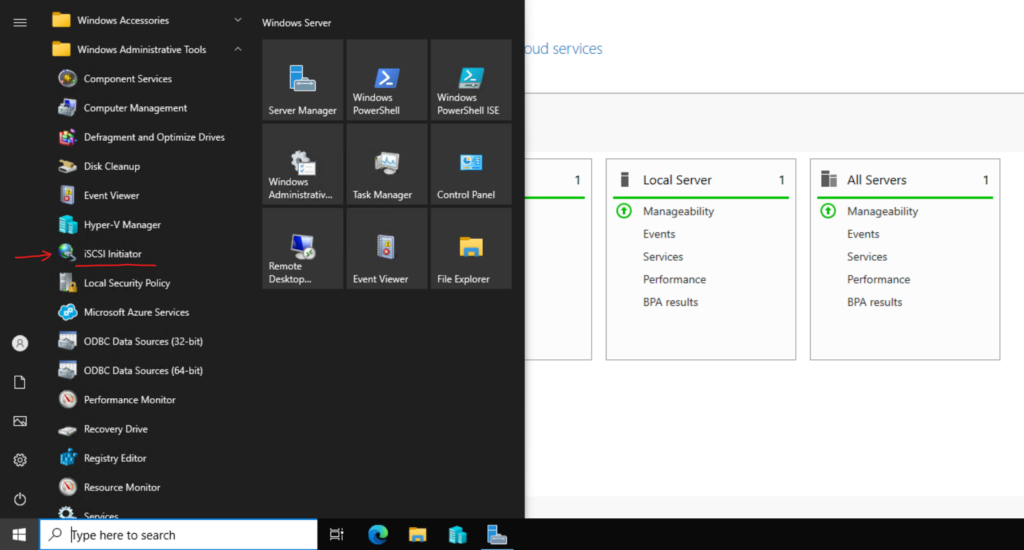

To open the iSCSI initiator on the access servers, in my case both Hyper-V servers, you can either just type iSCSI in the search bar or navigate directly to Windows Administrative Tools -> iSCSI initiator as shown below.

A software initiator uses code to implement iSCSI. Typically, this happens in a kernel-resident device driver that uses the existing network card (NIC) and network stack to emulate SCSI devices for a computer by speaking the iSCSI protocol. Software initiators are available for most popular operating systems and are the most common method of deploying iSCSI.

A hardware initiator uses dedicated hardware, typically in combination with firmware running on that hardware, to implement iSCSI. A hardware initiator mitigates the overhead of iSCSI and TCP processing and Ethernet interrupts, and therefore may improve the performance of servers that use iSCSI. An iSCSI host bus adapter (more commonly, HBA) implements a hardware initiator. A typical HBA is packaged as a combination of a Gigabit (or 10 Gigabit) Ethernet network interface controller, some kind of TCP/IP offload engine (TOE) technology and a SCSI bus adapter, which is how it appears to the operating system. An iSCSI HBA can include PCI option ROM to allow booting from an iSCSI SAN.

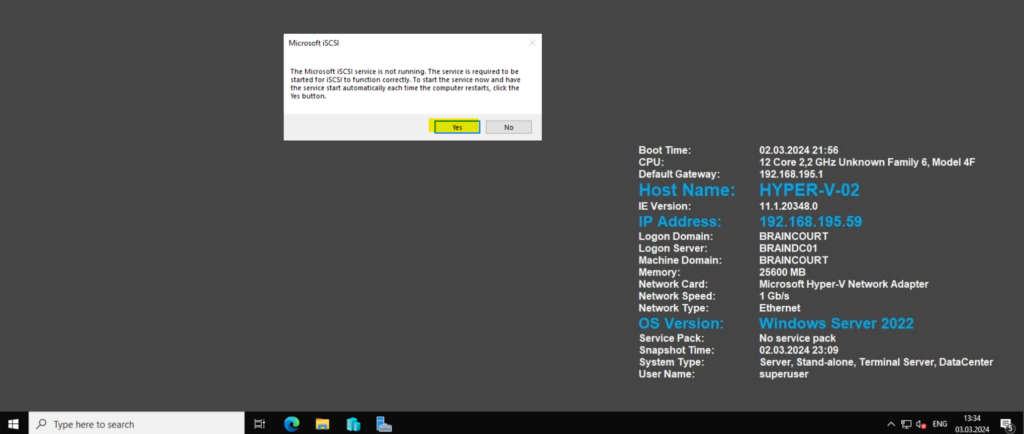

Click on Yes in order to start the Microsoft iSCSI service and enable automatic start.
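The same can be done from an elevated PowerShell session on each access server. A minimal sketch; MSiSCSI is the service name of the Microsoft iSCSI Initiator Service.

```powershell
# Let the Microsoft iSCSI Initiator service start automatically at boot.
Set-Service -Name MSiSCSI -StartupType Automatic

# Start the service right away.
Start-Service -Name MSiSCSI
```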

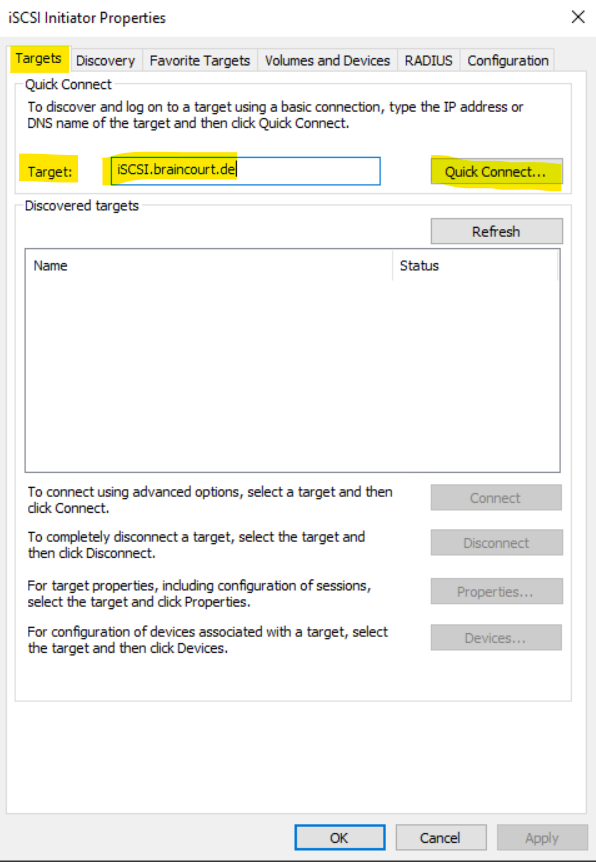

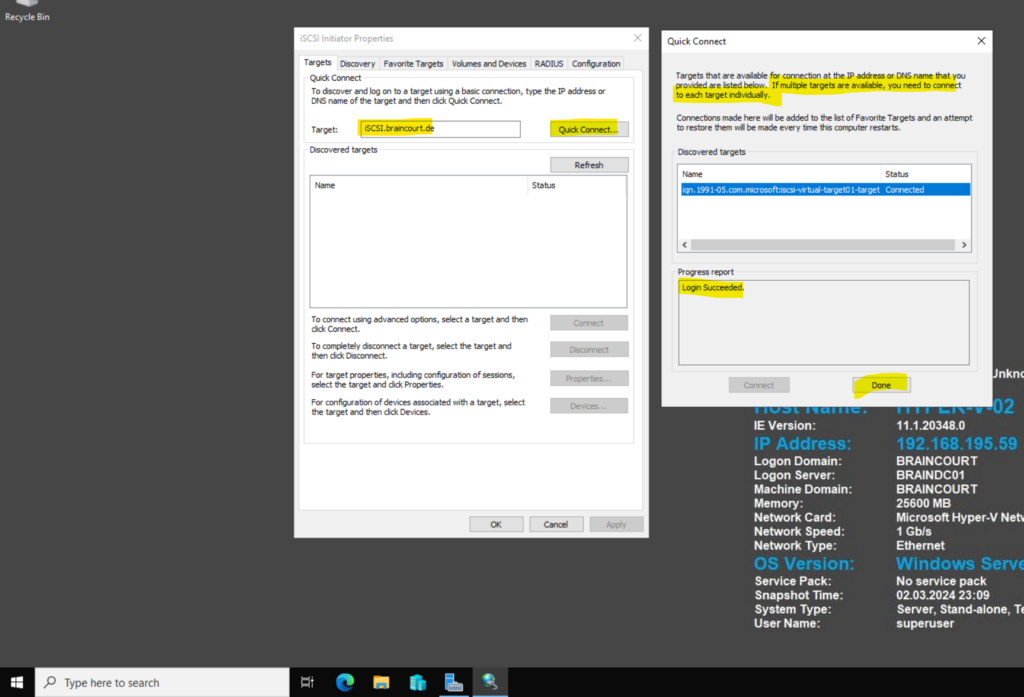

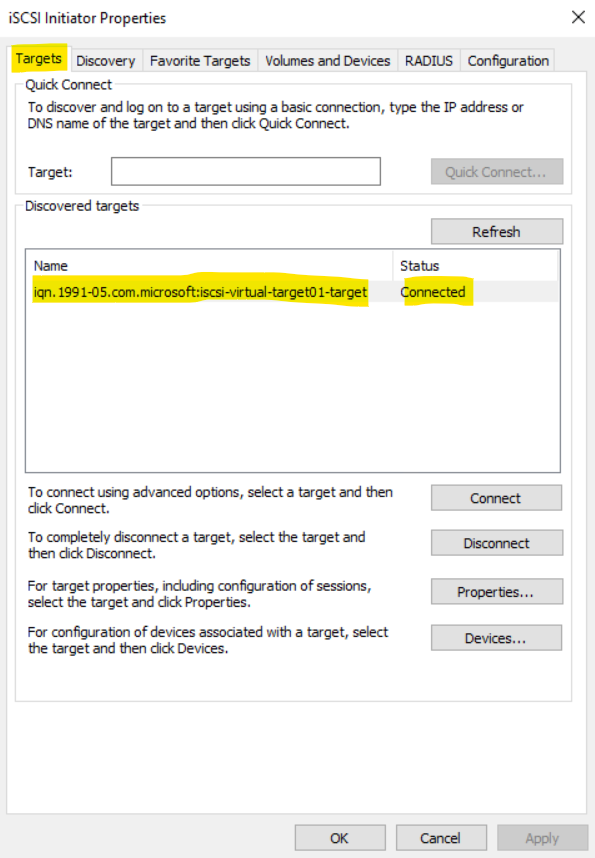

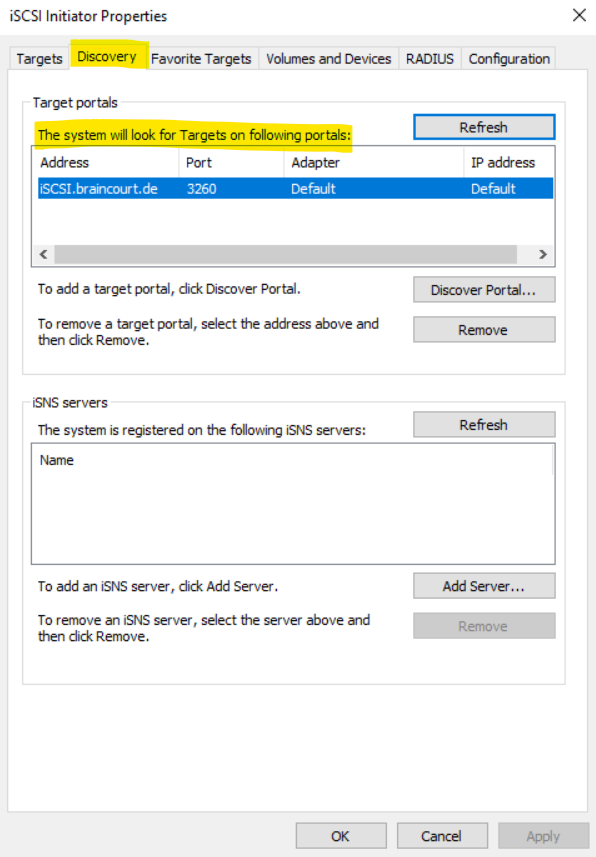

Within the iSCSI Initiator Properties dialog enter the IP address or DNS name of the iSCSI target device (iSCSI Target Server in my case) in the Target field as shown below.

From now on the iSCSI virtual hard disk is connected to my client, here one of my Hyper-V servers, which will later use this iSCSI virtual hard disk as a Cluster Shared Volume, as shown in my following post.

Click on Done.

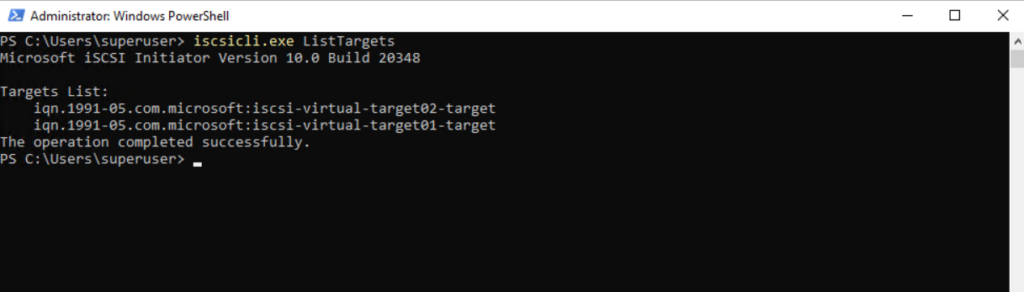

You can also use PowerShell and the iscsicli.exe ListTargets command to list iSCSI targets as shown below.

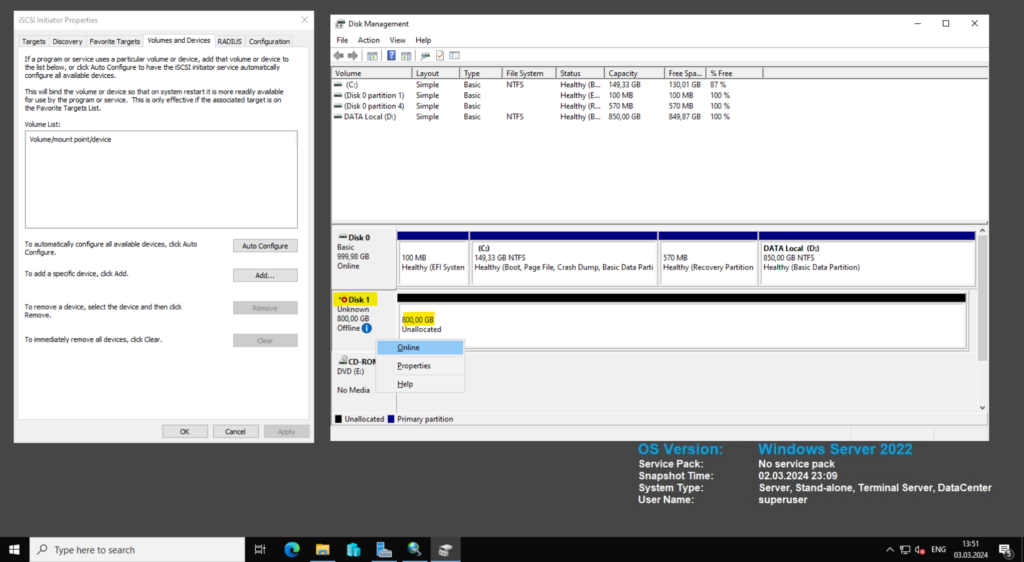

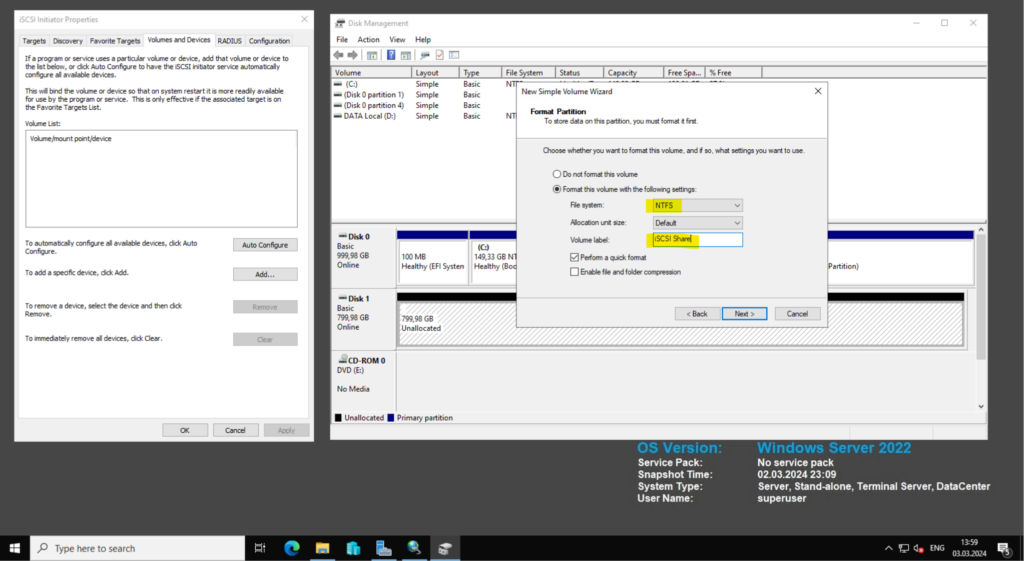
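The discovery and connection steps can be sketched with the iSCSI initiator cmdlets too. The portal name below is hypothetical, and the loop connects to every target this host has discovered but not yet connected to, so treat it as a lab-only sketch.

```powershell
# Hypothetical DNS name of the iSCSI Target Server.
$portal = 'iscsi01.lab.example.com'

# Register the target portal so that its targets can be discovered.
New-IscsiTargetPortal -TargetPortalAddress $portal

# Collect the discovered targets that are not connected yet.
$targets = Get-IscsiTarget | Where-Object { -not $_.IsConnected }

# Connect to each of them and restore the connection after a reboot.
foreach ($target in $targets) {
    Connect-IscsiTarget -NodeAddress $target.NodeAddress -IsPersistent $true
}
```

Setting -IsPersistent to $true makes the initiator reconnect automatically after a restart, which matters for cluster nodes.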

In order to use the iSCSI virtual disk we first have to initialize it by using the Disk Management tool as usual.

Right-click the new disk that shows up here and select Online.

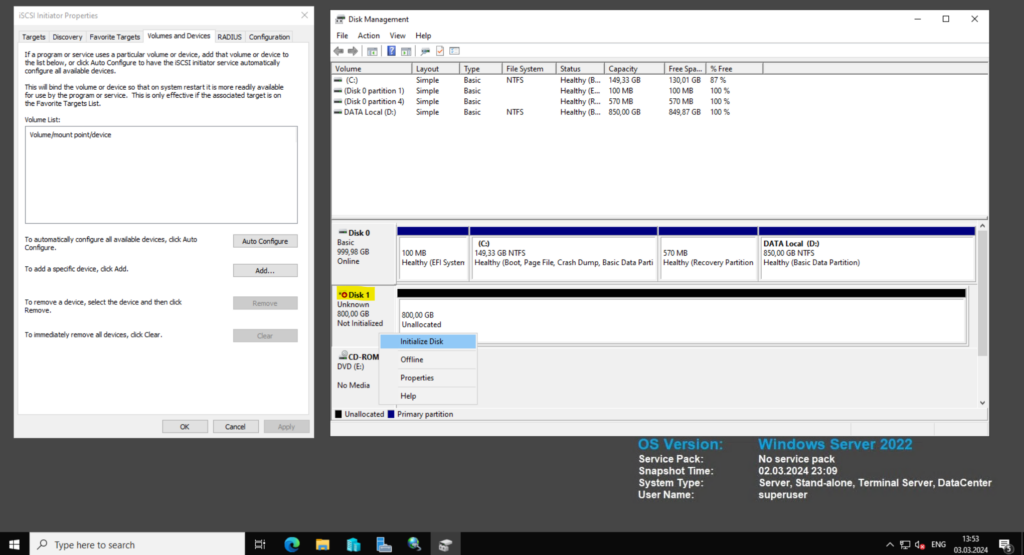

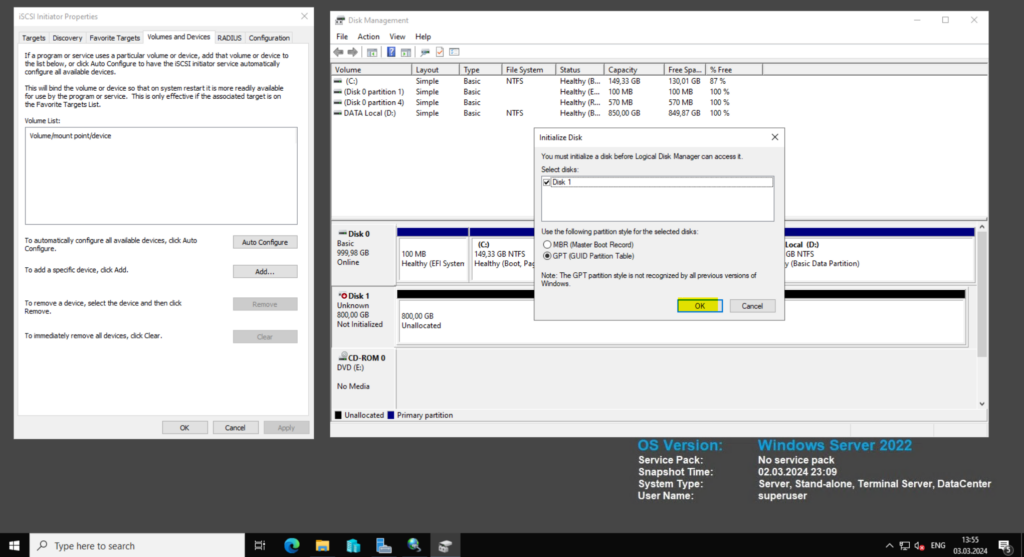

Then, to initialize the disk, right-click it again and select Initialize Disk.

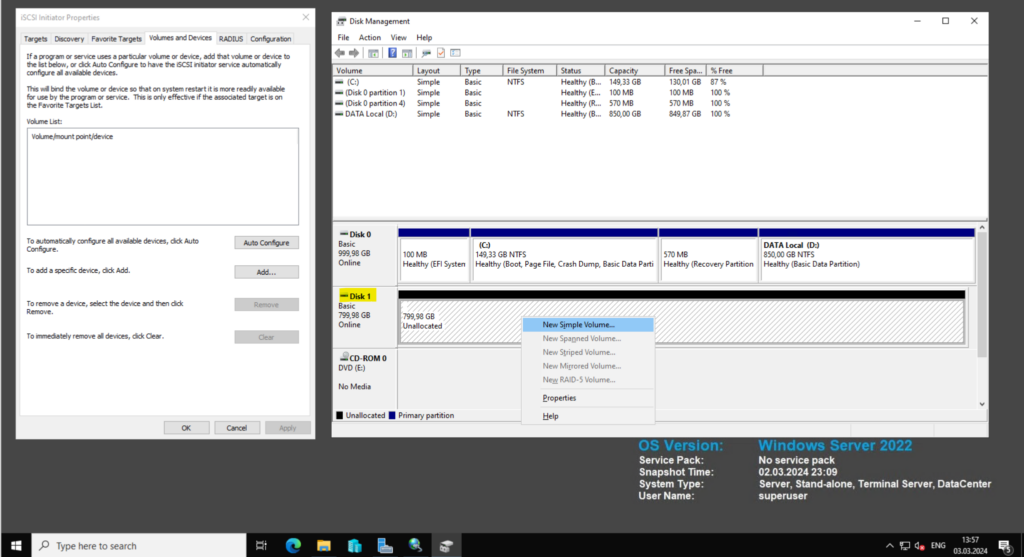

Now we can finally create a new volume on that disk.

I will create here a new NTFS volume.

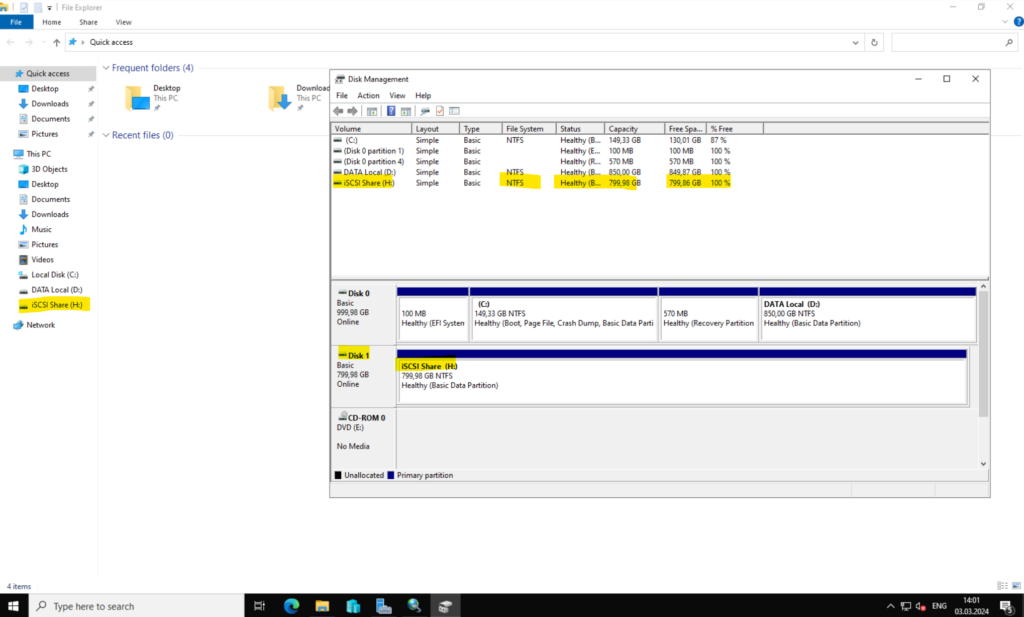

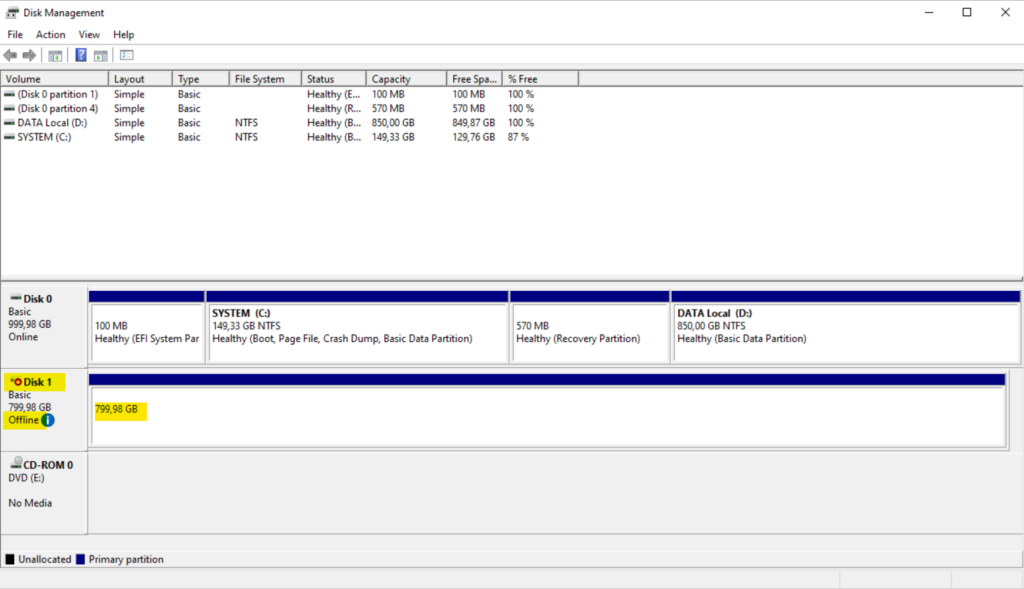
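The Disk Management steps above can be sketched in PowerShell as follows. The sketch assumes that exactly one new, uninitialized iSCSI disk is attached and uses a hypothetical volume label; formatting is destructive, so check the selected disk before running anything like this.

```powershell
# Pick the first iSCSI-attached disk that has no partition table yet.
$disk = Get-Disk |
    Where-Object { $_.BusType -eq 'iSCSI' -and $_.PartitionStyle -eq 'RAW' } |
    Select-Object -First 1

if ($null -eq $disk) { throw 'No uninitialized iSCSI disk found.' }

# Bring the disk online and make it writable.
Set-Disk -Number $disk.Number -IsOffline $false
Set-Disk -Number $disk.Number -IsReadOnly $false

# Initialize the disk, then create and format a single NTFS volume on it.
Initialize-Disk -Number $disk.Number -PartitionStyle GPT
New-Partition -DiskNumber $disk.Number -UseMaximumSize -AssignDriveLetter |
    Format-Volume -FileSystem NTFS -NewFileSystemLabel 'iSCSI-Demo'
```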

Now I will do the same on my second Hyper-V server. First I use the iSCSI Initiator to connect to the iSCSI Target Server and its iSCSI virtual disk.

Then I open the Disk Management tool to bring the disk online and to assign a drive letter. Because we already created an NTFS volume on this disk previously, we can skip initializing and formatting the disk here.

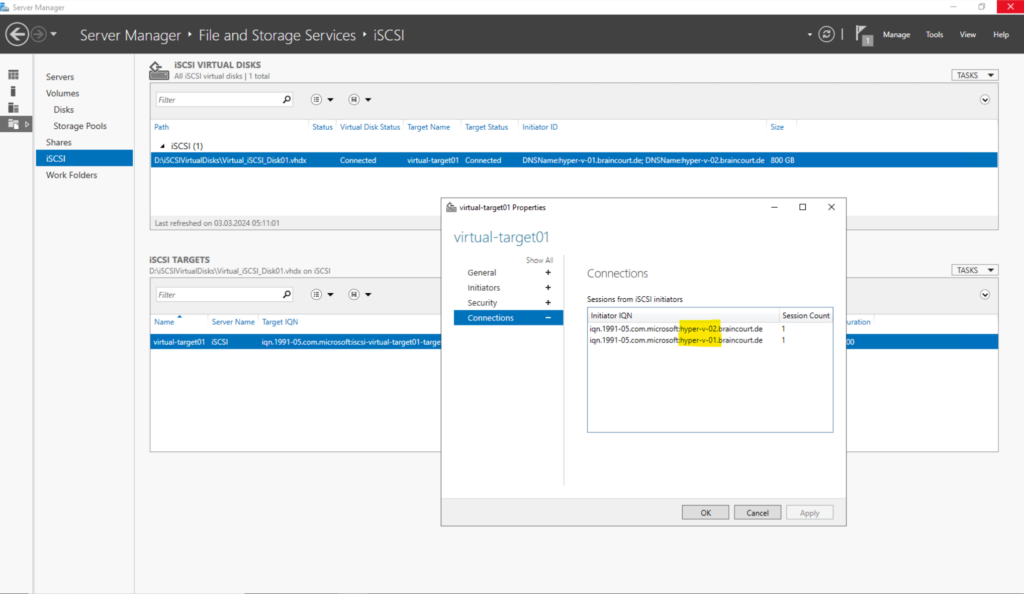

On the iSCSI Target Server we can now see both connected initiators (our Hyper-V servers).

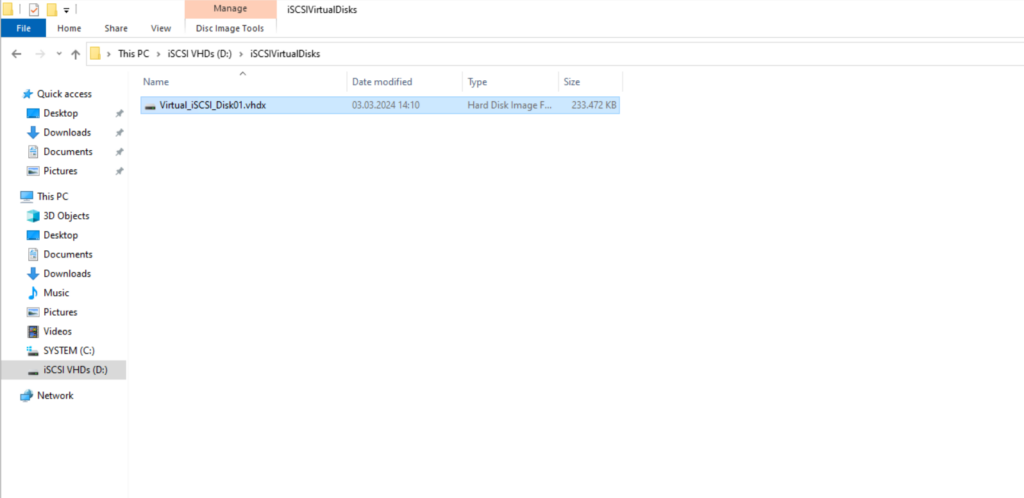
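The same information should be available from PowerShell on the target server. A small sketch, again using the hypothetical target name hyperv-cluster:

```powershell
# Show the connection state and the last login time of every target.
Get-IscsiServerTarget | Select-Object TargetName, Status, LastLogin

# Show the initiator sessions of one (hypothetically named) target.
(Get-IscsiServerTarget -TargetName 'hyperv-cluster').Sessions
```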

Here you can see the VHD created on the iSCSI Target Server, which ultimately stores the iSCSI virtual disk.

To have multiple initiators share a single target, whether over iSCSI, Fibre Channel or another SAN solution, you need a cluster-aware filesystem.

VMware ESXi does this with VMFS.

Microsoft offers NTFS Cluster Shared Volumes, which is a Windows clustered filesystem.

Clustered filesystems have a lot more nuanced work to do than stand-alone filesystems, since they have to understand quorum-counting, fencing, dead-peer detection and propagation. If they cannot do these things, a split-brain scenario (each node thinks it has sole access to the shared resource and cannot check with the other nodes) would enable you to corrupt all your data, or at least get into an inconsistent (and hard to recover from) state.

Source: https://serverfault.com/questions/633556/shared-access-to-iscsi-target

To see the iSCSI Target Server and iSCSI Initiator in action, you can also read my following post, where I will use two iSCSI virtual disks, one is used for the Cluster Shared Volume and one for the Disk Witness of the cluster.

Troubleshooting

iSCSI Target Server not Listening on TCP Port 3260

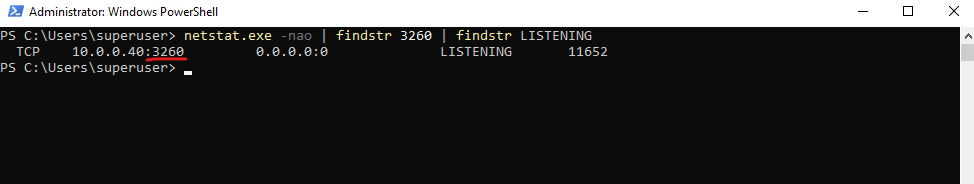

In case the clients cannot connect to the iSCSI targets on the server, we can first check if the iSCSI Target Server is really listening on TCP Port 3260.

iSCSI uses TCP port 3260 for communication between the initiators (clients) and the target (storage device on iSCSI Target Server).

PS> netstat.exe -nao | findstr 3260 | findstr LISTENING

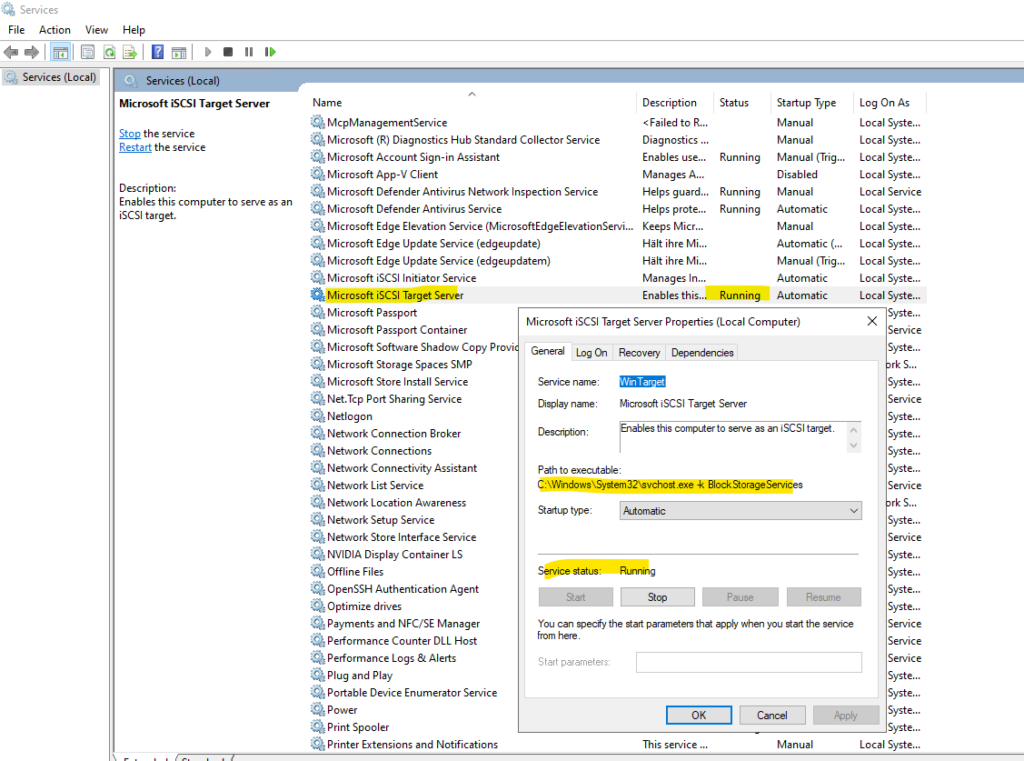
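As an alternative to netstat, the check can be sketched with the built-in networking cmdlets, which match the port number exactly instead of matching any line that contains 3260. The host name in the second command is hypothetical.

```powershell
# On the target server: show listeners on TCP port 3260.
# No output means that nothing is listening on that port.
Get-NetTCPConnection -LocalPort 3260 -State Listen -ErrorAction SilentlyContinue

# On an initiator: test whether the port is reachable over the network.
# 'iscsi01.lab.example.com' is a hypothetical host name.
Test-NetConnection -ComputerName 'iscsi01.lab.example.com' -Port 3260
```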

If it is not, first check whether the Microsoft iSCSI Target Server service is running.

I had this issue on one iSCSI Target Server: the service was running but not listening on TCP port 3260. Restarting the service finally solved it.
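Checking and restarting the service can be sketched as follows, assuming an elevated session on the target server. WinTarget is the service name behind the Microsoft iSCSI Software Target service; restarting it interrupts all connected initiators, so this is something for a lab or a maintenance window.

```powershell
# Check the current state of the iSCSI Target Server service.
Get-Service -Name WinTarget

# Restart the service, then check again whether it listens on TCP port 3260.
Restart-Service -Name WinTarget
Get-NetTCPConnection -LocalPort 3260 -State Listen -ErrorAction SilentlyContinue
```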

Links

iSCSI Target Server overview

https://learn.microsoft.com/en-us/windows-server/storage/iscsi/iscsi-target-server

iSCSI

https://en.wikipedia.org/wiki/ISCSI

Logical unit number (LUN)

https://en.wikipedia.org/wiki/Logical_unit_number

(i)SCSI Initiator

https://en.wikipedia.org/wiki/ISCSI#Initiator

(i)SCSI target

https://en.wikipedia.org/wiki/ISCSI#Target