Set up and Deploy a VMware ESXi Host Cluster and Datastore Cluster

In this post we will see step by step how to set up and deploy a new cluster of two ESXi hosts (2-node cluster). At the end, we will also create a datastore cluster and add it to our newly created ESXi host cluster to provide shared storage for its virtual machines.

This cluster architecture provides high availability and fault tolerance for virtual machines while minimizing hardware costs. Setting up such a cluster involves configuring shared storage, network connectivity, and cluster-specific settings. By leveraging features like VMware vSphere High Availability (HA) and VMware vSphere Distributed Resource Scheduler (DRS), administrators can ensure that VMs remain accessible and balanced across the cluster nodes, even in the event of hardware failures.

Introduction

A cluster is a group of hosts. When a host is added to a cluster, the resources of the host become part of the resources of the cluster. The cluster manages the resources of all hosts that it contains.

When you create clusters, you can enable vSphere High Availability (HA), vSphere Distributed Resource Scheduler (DRS), and the VMware vSAN features.

Starting with vSphere 7.0, you can create a cluster that you manage with a single image. By using vSphere Lifecycle Manager images, you can easily update and upgrade the software and firmware on the hosts in the cluster. Starting with vSphere 7.0 Update 2, during cluster creation, you can select a reference host and use the image on that host as the image for the newly created cluster. For more information about using images to manage ESXi hosts and clusters, see the Managing Host and Cluster Lifecycle documentation.

Starting with vSphere 7.0 Update 1, vSphere Cluster Services (vCLS) is enabled by default and runs in all vSphere clusters. vCLS ensures that if vCenter Server becomes unavailable, cluster services remain available to maintain the resources and health of the workloads that run in the clusters. For more information about vCLS, see vSphere Cluster Services.

Starting with vSphere 7.0, the clusters that you create can use vSphere Lifecycle Manager images for host updates and upgrades.

A vSphere Lifecycle Manager image is a combination of vSphere software, driver software, and desired firmware with regard to the underlying host hardware. The image that a cluster uses defines the full software set that you want to run on all ESXi hosts in the cluster: the ESXi version, additional VMware-provided software, and vendor software, such as firmware and drivers.

The image that you define during cluster creation is not immediately applied to the hosts. If you do not set up an image for the cluster, the cluster uses baselines and baseline groups. For more information about using images and baselines to manage hosts in clusters, see the Managing Host and Cluster Lifecycle documentation.

Prerequisites

- Verify that a data center, or a folder within a data center, exists in the inventory.

- Verify that hosts have the same ESXi version and patch level.

- Obtain the user name and password of the root user account for the host.

- If you want to extend a cluster with initially configured networking, verify that hosts do not have a manual vSAN configuration or a manual networking configuration.

- To create a cluster that you manage with a single image, review the requirements and limitations information in the Managing Host and Cluster Lifecycle documentation.

Required privileges:

- Host.Inventory.Create cluster

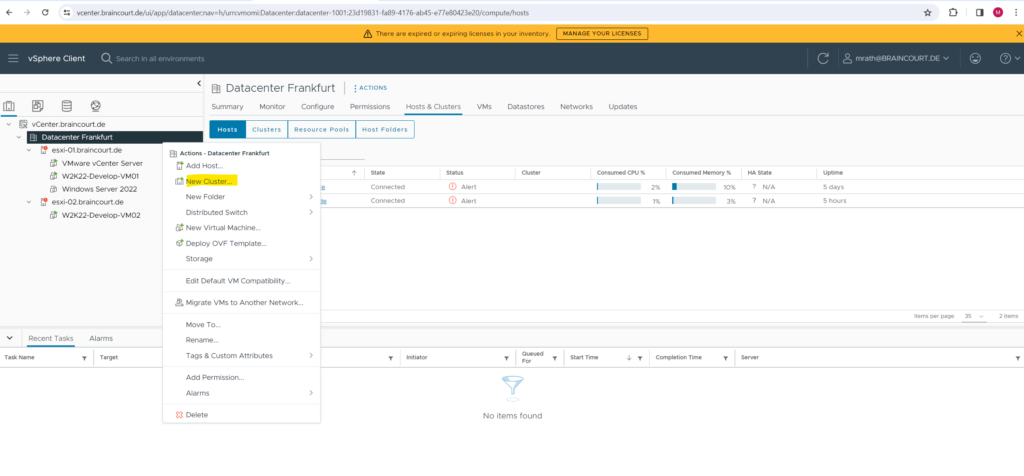

Create a new vSphere Cluster

In the vSphere Client home page, navigate to Inventory. Right-click the data center and select New Cluster.

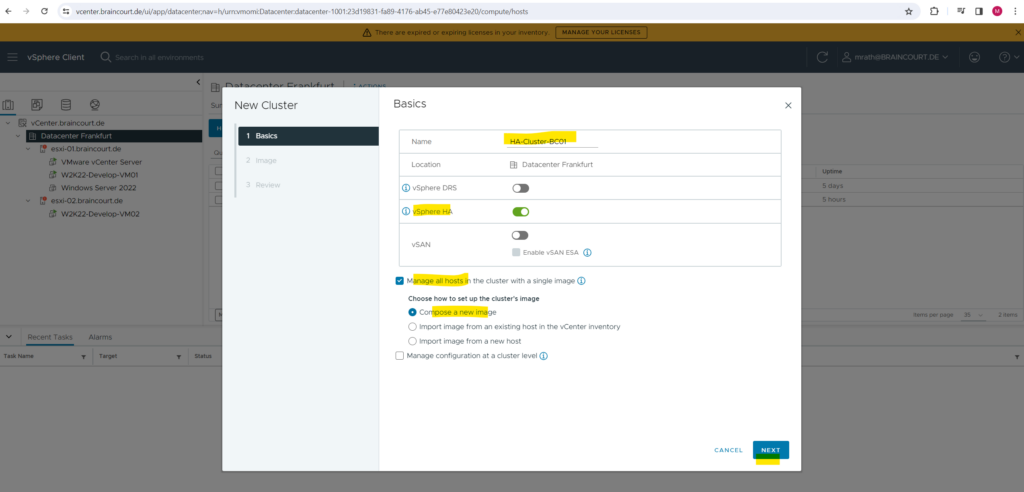

Enter a name for the cluster.

Select DRS, vSphere HA, or vSAN cluster features.

- vSphere DRS -> Distributed Resource Scheduler provides highly available resources to your workloads, balances workloads for optimal performance, and scales and manages computing resources without service disruption. More information: https://www.vmware.com/products/vsphere/drs-dpm.html

- vSphere HA -> vSphere HA provides high availability for virtual machines by pooling the virtual machines and the hosts they reside on into a cluster. Hosts in the cluster are monitored, and in the event of a failure, the virtual machines on a failed host are restarted on alternate hosts. More information: https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.avail.doc/GUID-33A65FF7-DA22-4DC5-8B18-5A7F97CCA536.html

- vSAN -> vSAN Express Storage Architecture is a next-generation architecture designed to get the most out of high-performance storage devices, resulting in greater performance and efficiency. More information: https://www.vmware.com/products/vsan.html

(Optional) To create a cluster that you manage with a single image, select the Manage all hosts in the cluster with a single image check box.

For information about creating a cluster that you manage with a single image, see the Managing Host and Cluster Lifecycle documentation.

For now, I will enable only vSphere HA.

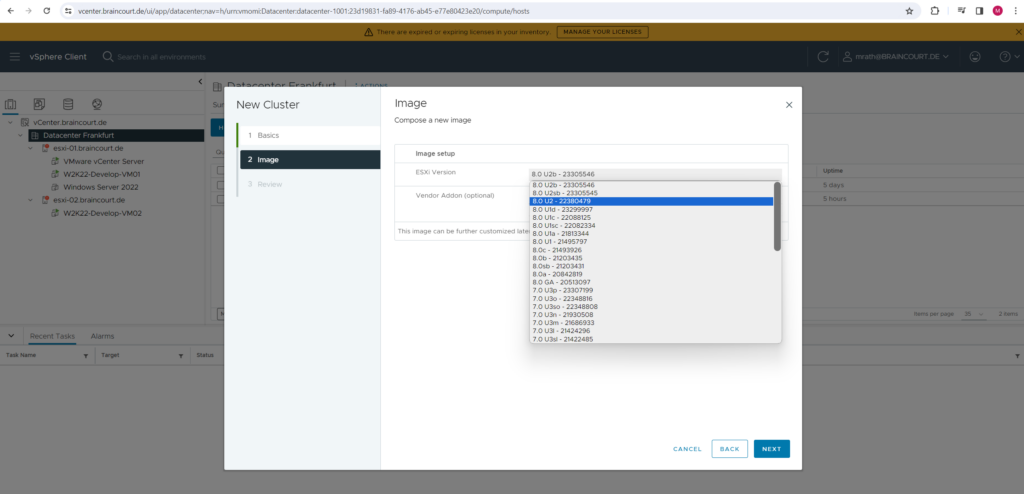

Select the ESXi version of your hosts for the new image.

As mentioned under Prerequisites, all hosts must run the same ESXi version and patch level.

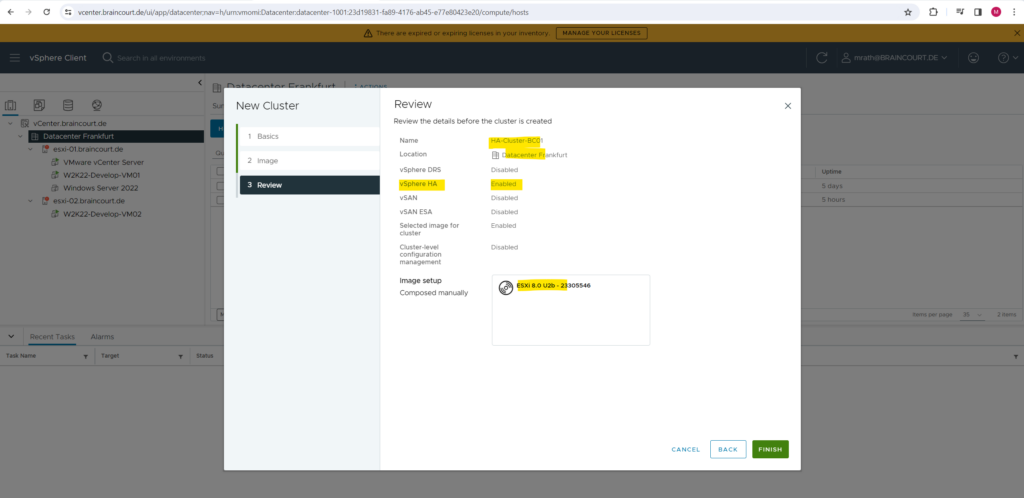

Review the cluster details and click Finish.

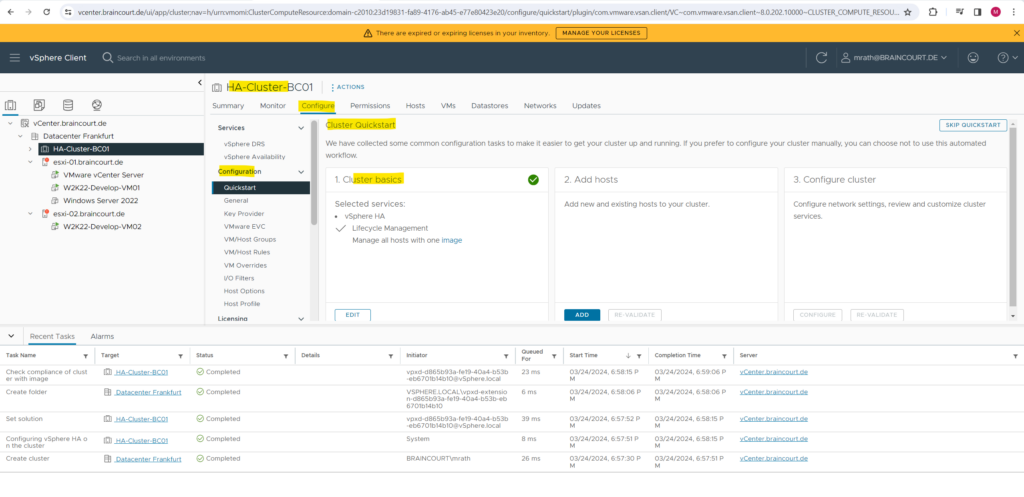

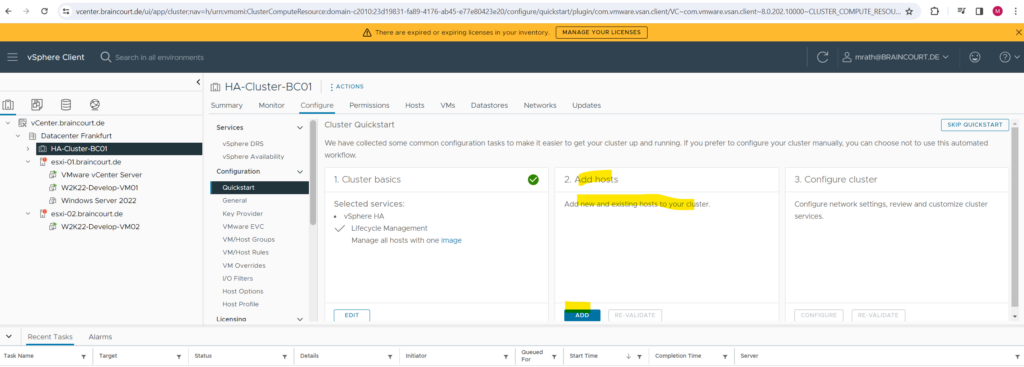

The cluster appears in the vCenter Server inventory a few seconds later. The Quickstart workflow appears under Configure > Configuration.

So far we have just created an empty cluster in the vCenter Server inventory.

You can use the Quickstart workflow to easily configure and expand the cluster. You can also skip the Quickstart workflow and continue configuring the cluster and its hosts manually. You will find more information here: https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-vcenter-esxi-management/GUID-F7818000-26E3-4E2A-93D2-FCDCE7114508.html

Quickstart groups common tasks and offers configuration wizards that guide you through the process of configuring and extending a cluster. After you provide the required information on each wizard, your cluster is configured based on your input. When you add hosts using the Quickstart workflow, hosts are automatically configured to match the cluster configuration.

The next step is to add my two ESXi hosts to the newly created cluster object.

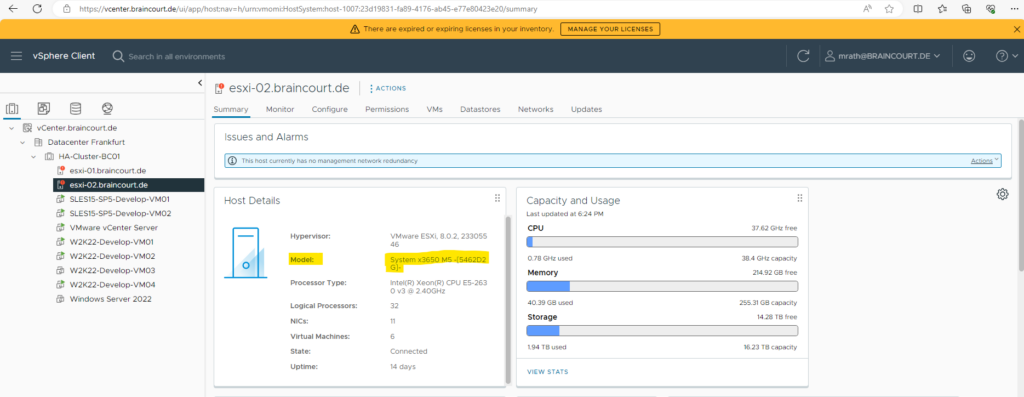

For demonstration purposes in my lab environment, I use two old IBM System x3650 servers, an M4 and an M5. As long as the CPU instruction sets are identical, you can also mix different server models in an ESXi host cluster.

Can vSphere/ESXi run on different CPU architecture?

No, the basic requirement to transfer a live running OS is that both the source and the destination host support the same CPU instruction sets. The exact model, MHz clock rate, cache size, etc. are irrelevant as long as the CPU instruction sets are identical. So no, they don't have to be the exact same model.

https://communities.vmware.com/t5/ESXi-Discussions/Can-vSphere-ESXi-run-on-different-CPU-architecture/td-p/2239792
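To make the "same instruction sets" requirement concrete, the sketch below treats each host's CPU features as a set. The host names and feature flags are hypothetical examples, not values read from my servers. It checks whether all hosts expose identical sets and computes the common baseline (the intersection); exposing only that baseline to VMs is roughly the idea behind vSphere Enhanced vMotion Compatibility (EVC).

```python
from functools import reduce
from typing import Dict, FrozenSet

# Hypothetical hosts and CPU feature flags, for illustration only;
# real ESXi hosts report far more CPU features than this.
HOSTS: Dict[str, FrozenSet[str]] = {
    "esxi-01": frozenset({"sse4_1", "sse4_2", "aes", "avx"}),
    "esxi-02": frozenset({"sse4_1", "sse4_2", "aes", "avx", "avx2"}),
}


def same_instruction_sets(hosts: Dict[str, FrozenSet[str]]) -> bool:
    """True if every host exposes exactly the same instruction-set features."""
    return len(set(hosts.values())) <= 1


def common_baseline(hosts: Dict[str, FrozenSet[str]]) -> FrozenSet[str]:
    """Features that every host supports (intersection of all feature sets)."""
    return reduce(lambda a, b: a & b, hosts.values())


def mask_to_baseline(hosts: Dict[str, FrozenSet[str]]) -> Dict[str, FrozenSet[str]]:
    """Expose only the common baseline on each host so that all hosts look identical."""
    baseline = common_baseline(hosts)
    return {name: baseline for name in hosts}


print(same_instruction_sets(HOSTS))                    # False: only esxi-02 has avx2
print(sorted(common_baseline(HOSTS)))                  # ['aes', 'avx', 'sse4_1', 'sse4_2']
print(same_instruction_sets(mask_to_baseline(HOSTS)))  # True
```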

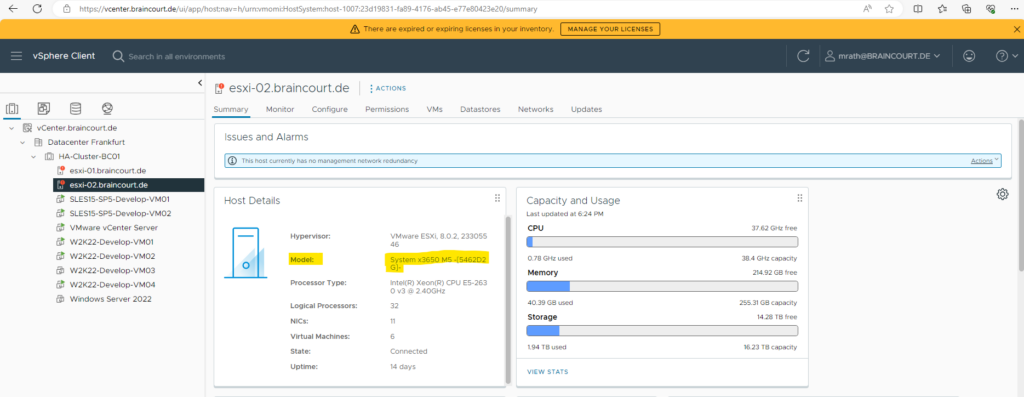

My first node: the IBM System x3650 M4.

My second node: the IBM System x3650 M5.

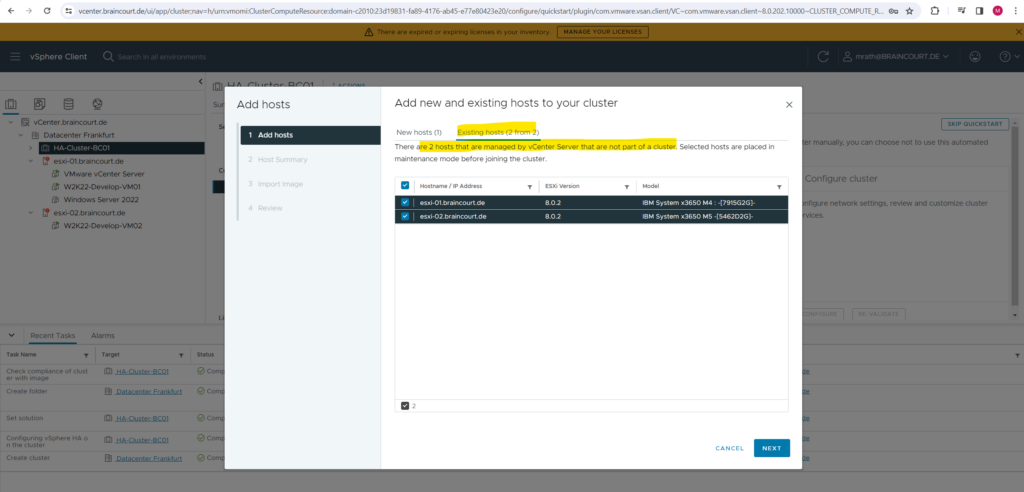

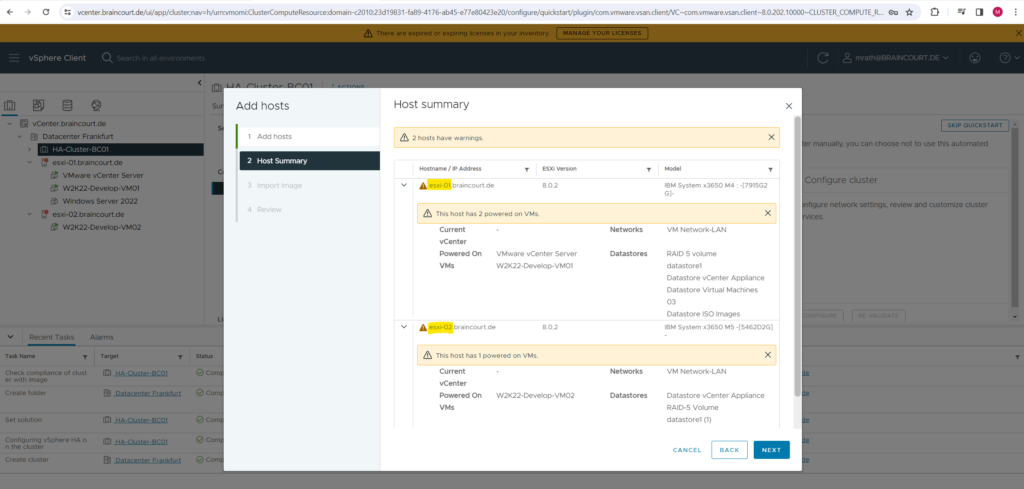

Now I will add my two ESXi hosts to the newly created cluster object.

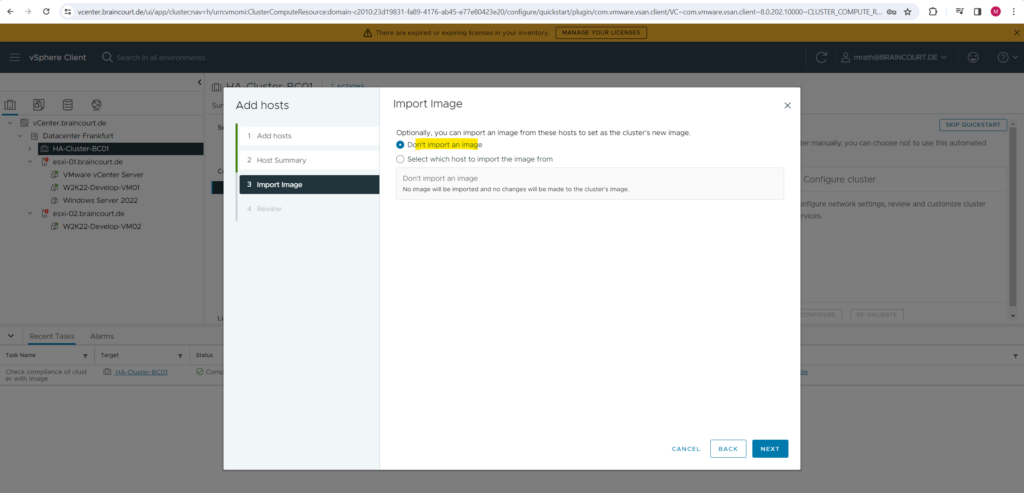

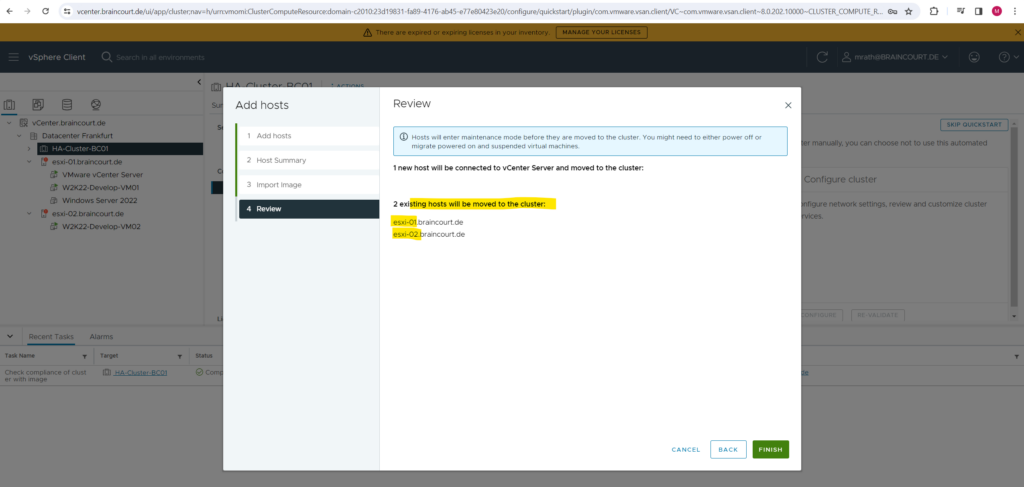

I select both of my existing hosts, which are already managed by vCenter Server. The selected hosts are placed in maintenance mode before they join the cluster.

If you run into problems, you may have to move an ESXi host into the cluster manually by dragging it onto the cluster object in the vSphere Client (left-click the host, hold, and drop it on the cluster). I also had to shut down some virtual machines manually to be able to put the host into maintenance mode.

The vCenter Server virtual machine can keep running on one of the ESXi hosts; that host then does not need to be put into maintenance mode.

In my two-node cluster I have no other choice anyway: the vCenter Server virtual machine has to run on one of these two hosts.

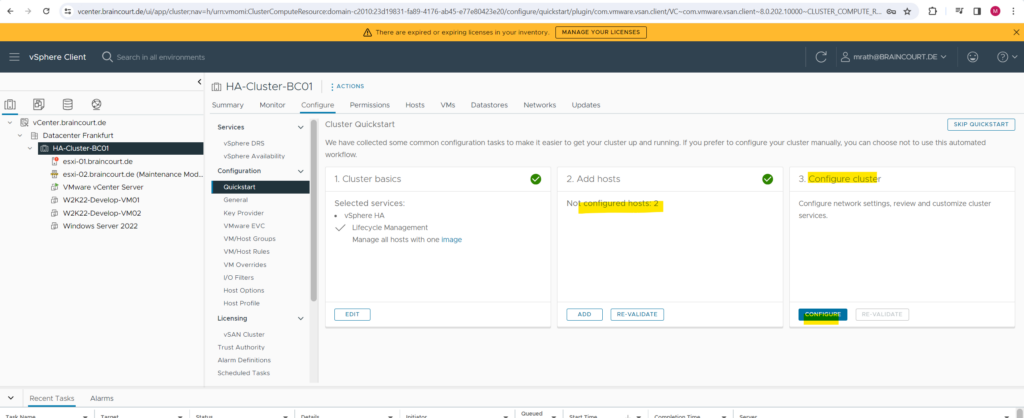

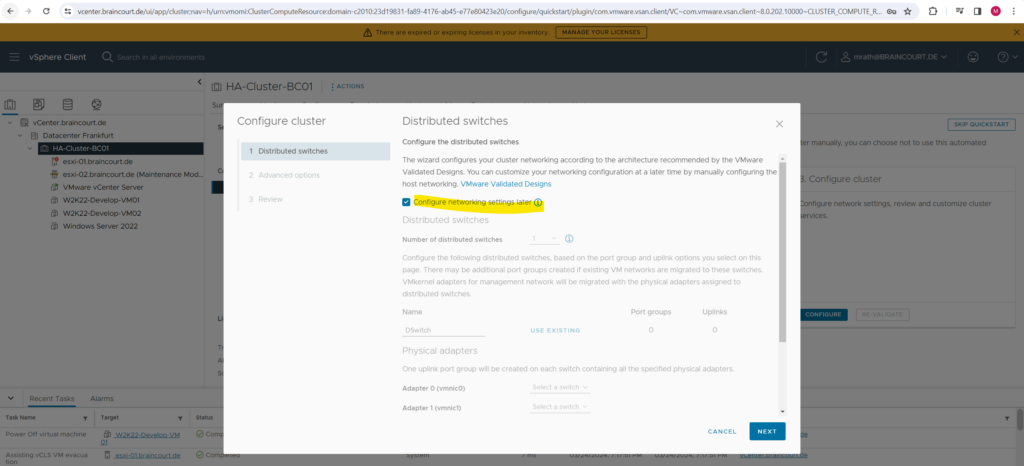

I can now configure the cluster by clicking Configure in step 3 of the Quickstart workflow.

In my lab environment I don't want to configure distributed vSwitches, so I check Configure network settings later and click Next.

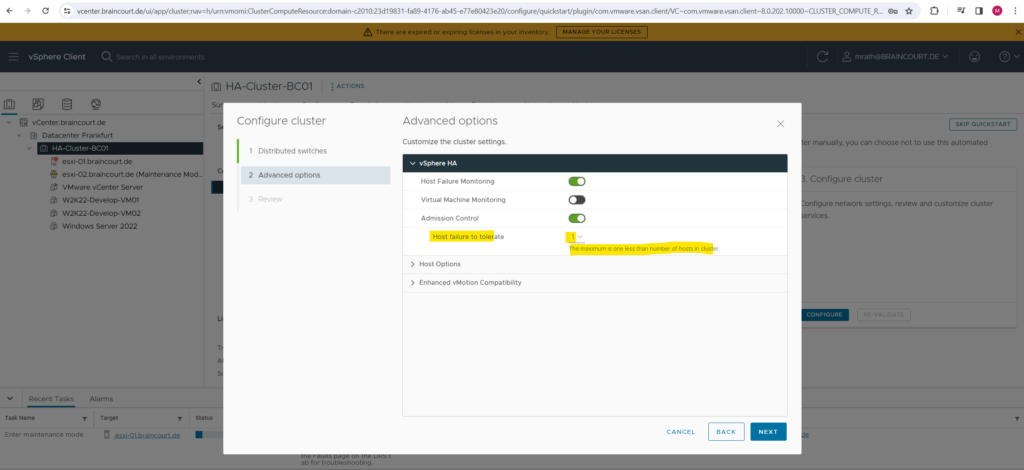

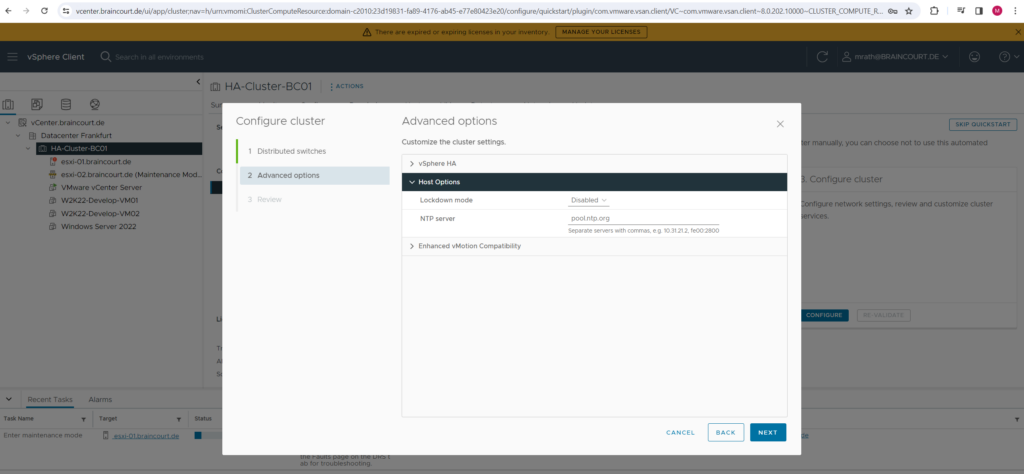

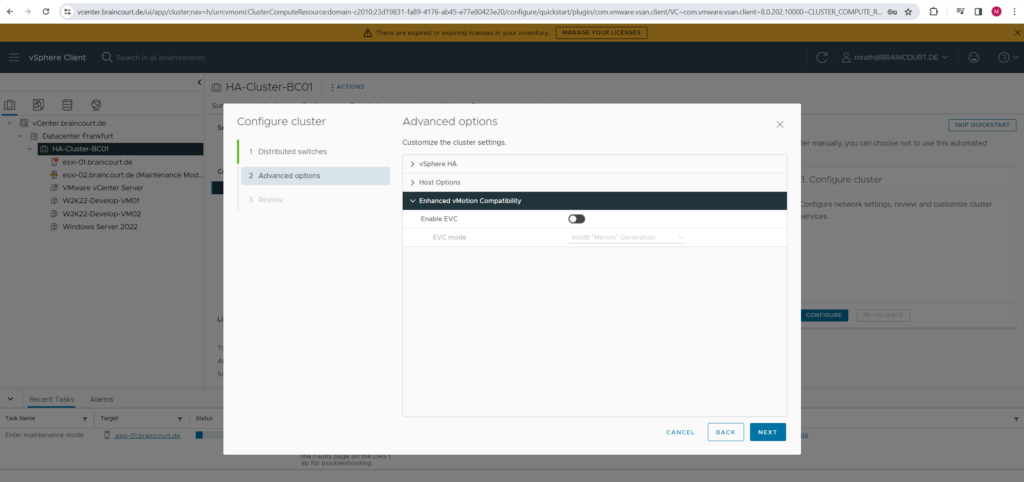

Next, under Advanced options, we can configure further cluster settings, such as how many host failures the cluster tolerates. In a two-node cluster like mine, only one host failure can be tolerated.
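Why only one failure, and what does it cost? The sketch below does the arithmetic under a simplifying assumption: all hosts have identical capacity and HA admission control reserves a percentage of cluster resources for failover. vSphere derives its own figures from the actual host resources, so treat the function names and numbers as illustrative only.

```python
def max_host_failures_tolerated(host_count: int) -> int:
    """At least one host must survive to restart the failed hosts' VMs."""
    if host_count < 2:
        raise ValueError("at least two hosts are needed to tolerate a host failure")
    return host_count - 1


def reserved_capacity_percent(host_count: int, failures_tolerated: int) -> float:
    """Share of cluster CPU and memory to keep free for failover,
    assuming all hosts have identical capacity."""
    if not 1 <= failures_tolerated <= max_host_failures_tolerated(host_count):
        raise ValueError("failures_tolerated is out of range for this cluster size")
    return 100.0 * failures_tolerated / host_count


print(max_host_failures_tolerated(2))   # 1
print(reserved_capacity_percent(2, 1))  # 50.0
print(reserved_capacity_percent(4, 1))  # 25.0
```

In a two-node cluster, half of the total capacity has to stay free so that the surviving host can restart the other host's virtual machines.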

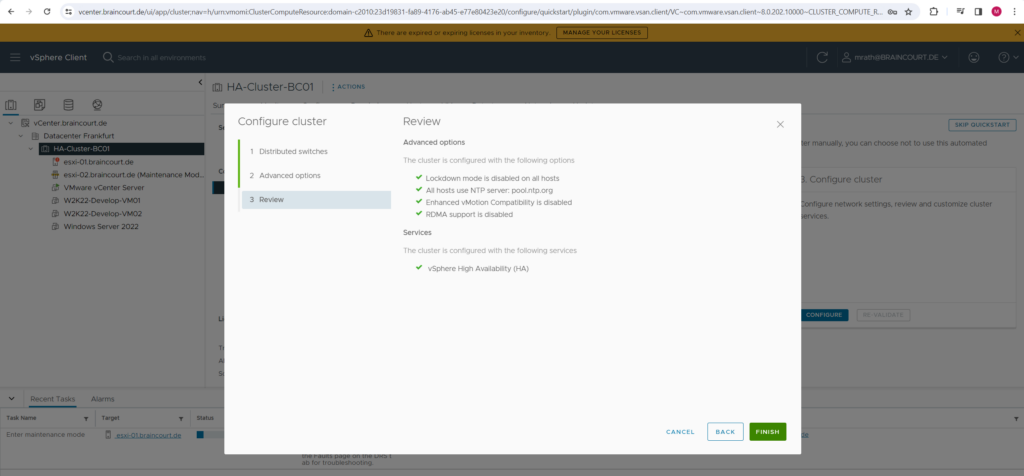

Finally, click FINISH to apply the configuration to the cluster object in vCenter.

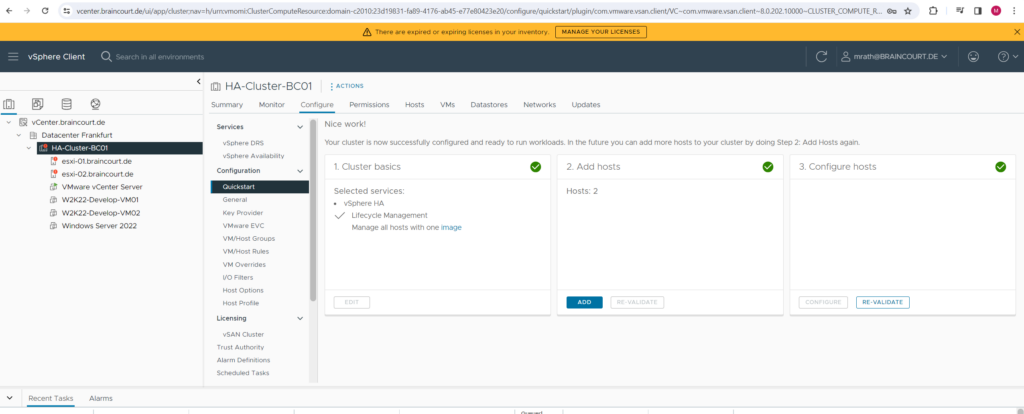

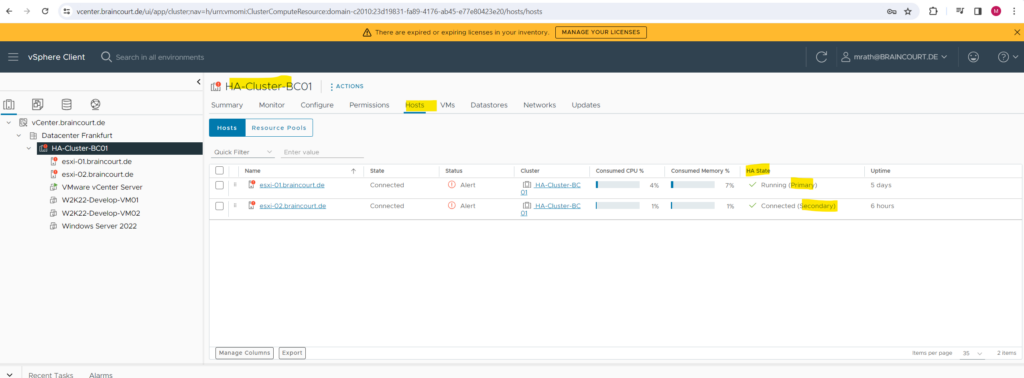

The HA cluster is now configured successfully.

The primary HA node is my first host, esxi-01.braincourt.de.

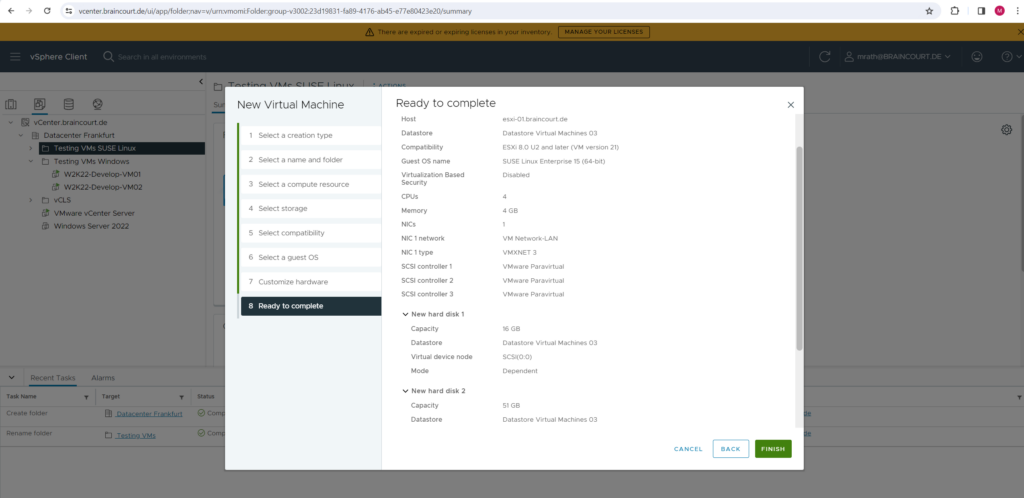

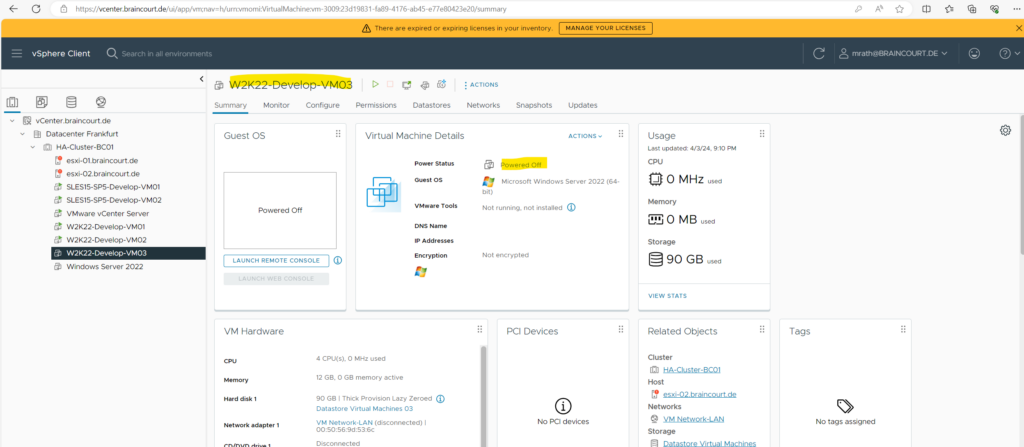

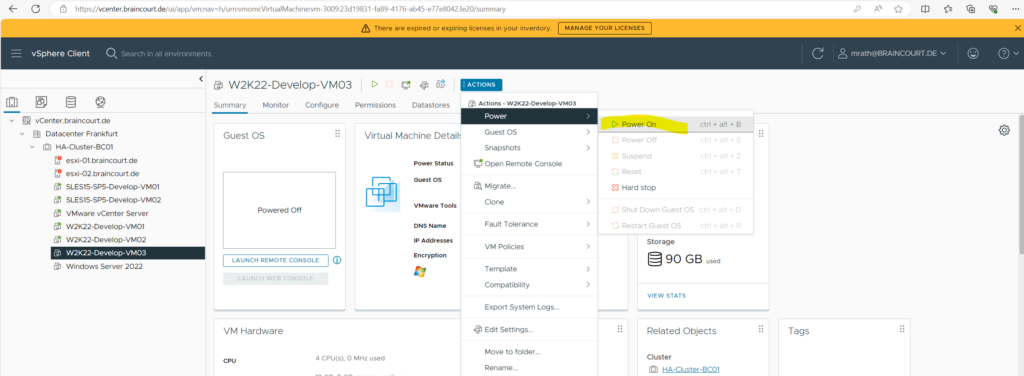

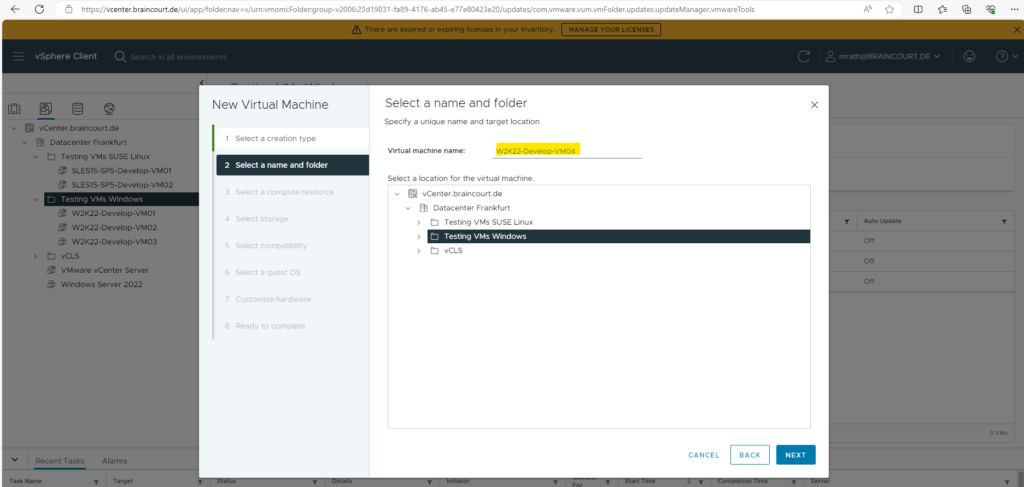

Create a new VM in the Cluster

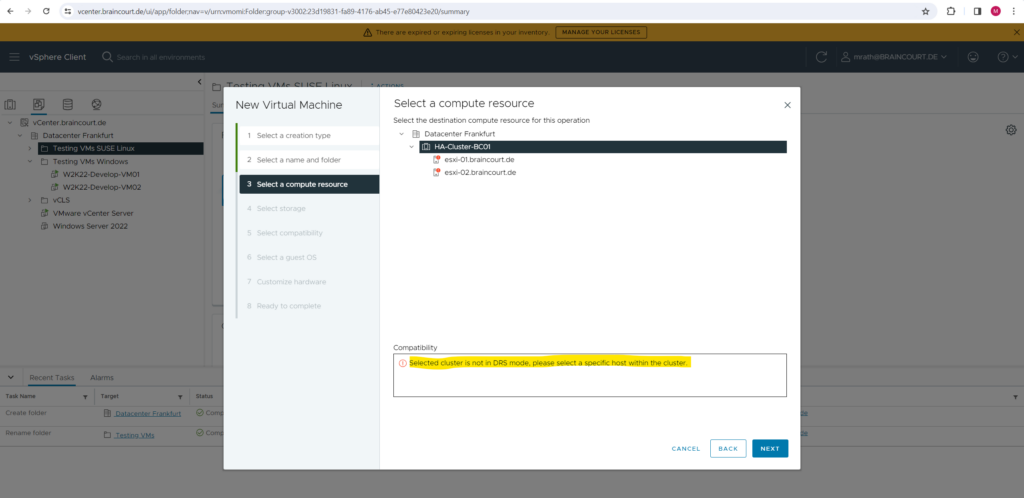

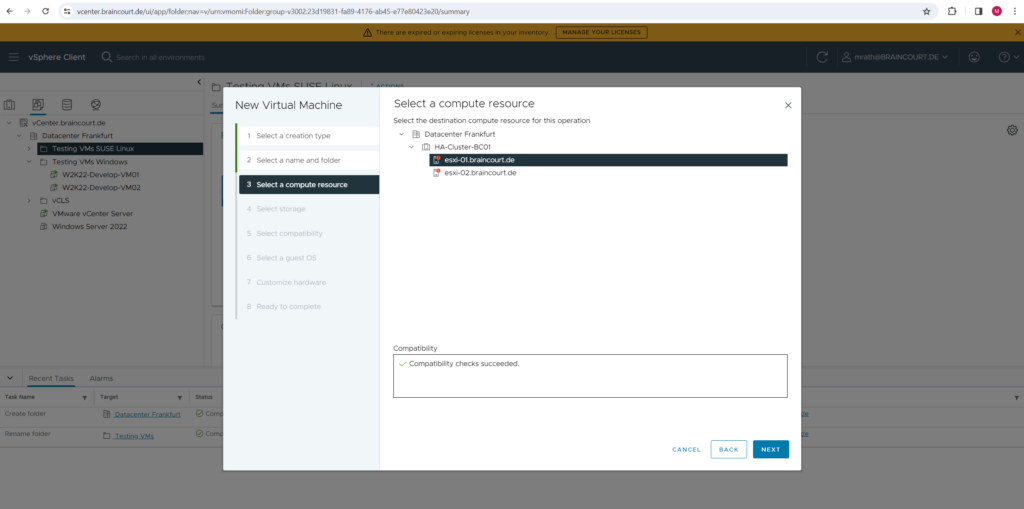

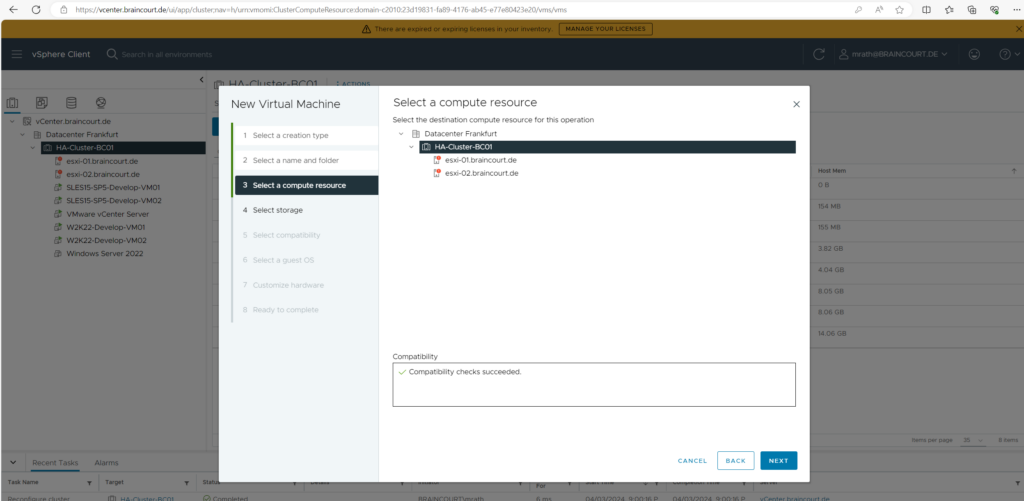

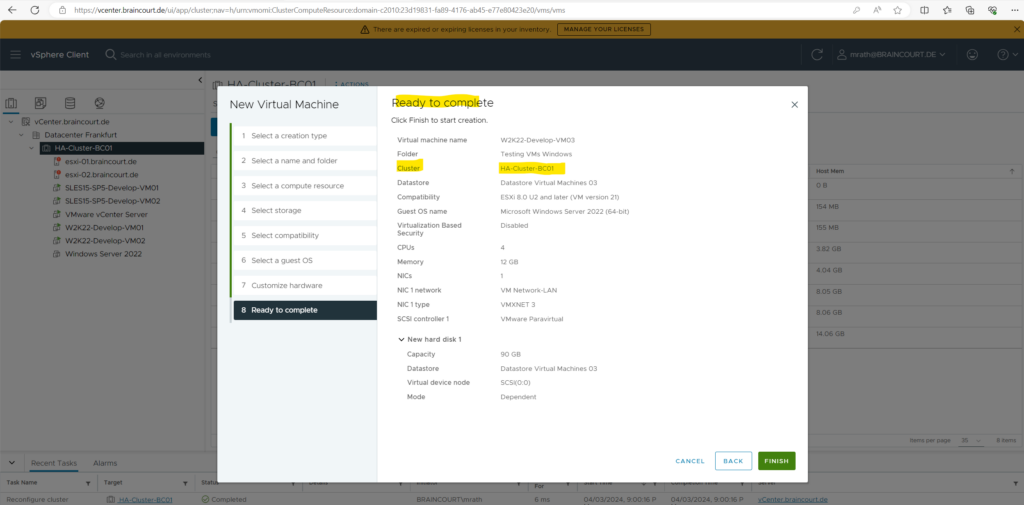

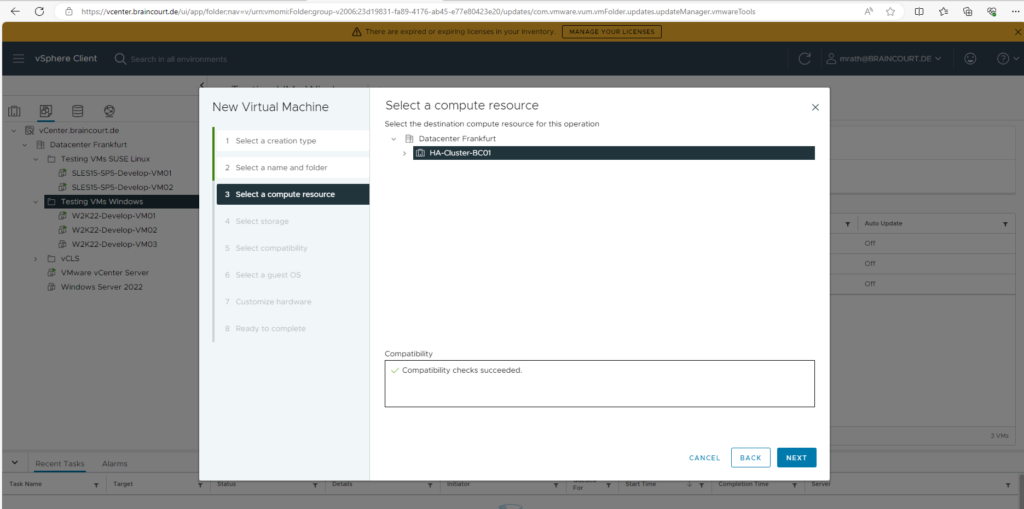

Because I configured only vSphere HA earlier and left vSphere DRS disabled, I have to select a specific host (compute resource) on which the new virtual machine will run.

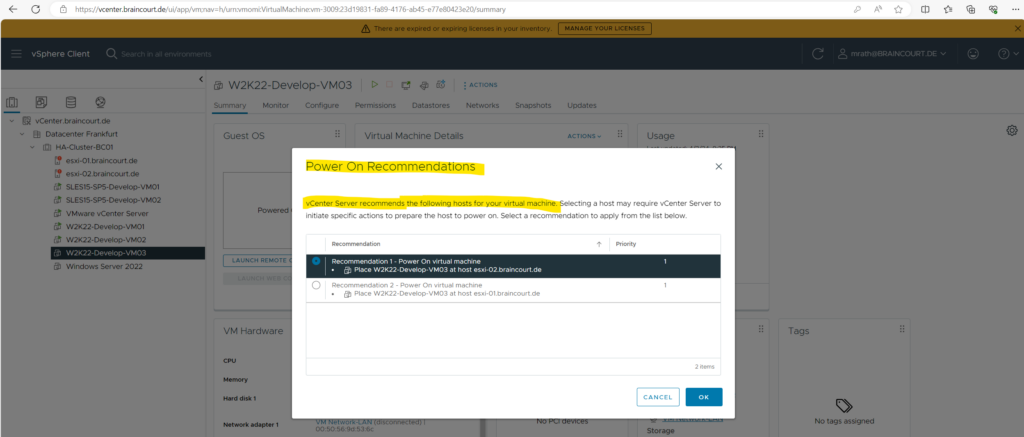

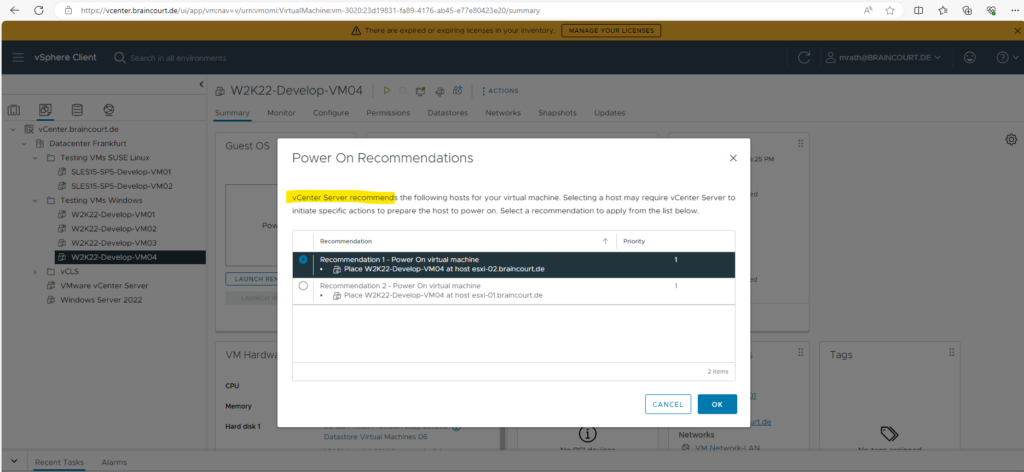

If vSphere DRS (Distributed Resource Scheduler) is enabled for the cluster, we only need to select the cluster in which the new virtual machine should run. When the virtual machine is powered on later, vSphere DRS either recommends the best ESXi host to use as the compute resource or selects a suitable ESXi host by itself; which of the two happens depends on the DRS automation level.

More about vSphere DRS further down.

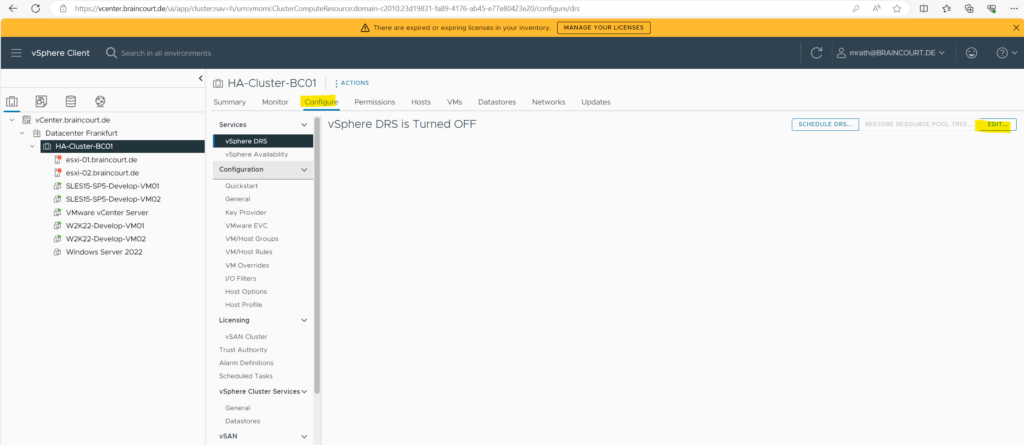

Configure vSphere DRS

So far I haven't enabled vSphere DRS for my new cluster, so I will do that now. But first, some useful background from NAKIVO on the vSphere HA and vSphere DRS features of vCenter.

A cluster is a group of hosts connected to each other with special software that makes them elements of a single system. At least two hosts (also called nodes) must be connected to create a cluster. When hosts are added to the cluster, their resources become the cluster’s resources and are managed by the cluster.

The most common types of VMware vSphere clusters are High Availability (HA) and Distributed Resource Scheduler (DRS) clusters.

HA clusters are designed to provide high availability of virtual machines and services running on them; if a host fails, they immediately restart the virtual machines on another ESXi host.

DRS clusters provide the load balancing among ESXi hosts.

Distributed Resource Scheduler (DRS) is a type of VMware vSphere cluster that provides load balancing by migrating VMs from a heavily loaded ESXi host to another host that has enough computing resources, all while the VMs are still running. This approach is used to prevent overloading of ESXi hosts.

For this reason, DRS is usually used in addition to HA, combining failover with load balancing. In case of failover, the virtual machines are restarted by HA on other ESXi hosts, and DRS, being aware of the available computing resources, provides recommendations for VM placement.

vMotion technology is used for this live migration of virtual machines, which is transparent for users and applications.

Resource pools are used for flexible resource management of ESXi hosts in the DRS cluster. You can set processor and memory limits for each resource pool, then add virtual machines to them. For example, you could create one resource pool with high resource limits for developers’ virtual machines, a second pool with normal limits for testers’ virtual machines, and a third pool with low limits for other users. vSphere lets you create child and parent resource pools.

Source: https://www.nakivo.com/blog/what-is-vmware-drs-cluster/
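To illustrate the load-balancing idea described above, here is a deliberately simplified greedy sketch. It is not VMware's actual DRS algorithm; the host names, VM names and load figures are hypothetical. It repeatedly proposes moving the VM that best evens out the busiest and the least busy host until their gap falls below a threshold. In a real cluster, DRS would carry out (or recommend) such moves with vMotion.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical VM loads per host (for example, CPU demand in GHz).
Cluster = Dict[str, Dict[str, float]]


def host_load(cluster: Cluster, host: str) -> float:
    return sum(cluster[host].values())


def recommend_moves(cluster: Cluster, threshold: float) -> List[Tuple[str, str, str]]:
    """Greedily move VMs from the busiest to the least busy host until their load
    gap is within `threshold` or no single move improves it.
    Mutates `cluster` and returns the moves as (vm, source, destination)."""
    moves: List[Tuple[str, str, str]] = []
    while True:
        busiest = max(cluster, key=lambda h: host_load(cluster, h))
        idlest = min(cluster, key=lambda h: host_load(cluster, h))
        gap = host_load(cluster, busiest) - host_load(cluster, idlest)
        if gap <= threshold:
            return moves
        # Choose the VM whose move leaves the two hosts closest to equal.
        best_vm: Optional[str] = None
        best_gap = gap
        for vm, load in cluster[busiest].items():
            new_gap = abs(gap - 2 * load)
            if new_gap < best_gap:
                best_vm, best_gap = vm, new_gap
        if best_vm is None:
            return moves  # no single migration narrows the gap
        cluster[idlest][best_vm] = cluster[busiest].pop(best_vm)
        moves.append((best_vm, busiest, idlest))


cluster = {
    "esxi-01": {"vm-a": 4.0, "vm-b": 3.0, "vm-c": 2.0},
    "esxi-02": {"vm-d": 1.0},
}
print(recommend_moves(cluster, threshold=2.0))  # [('vm-a', 'esxi-01', 'esxi-02')]
```

Each accepted move strictly narrows the gap between the two hosts involved, so the loop always terminates.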

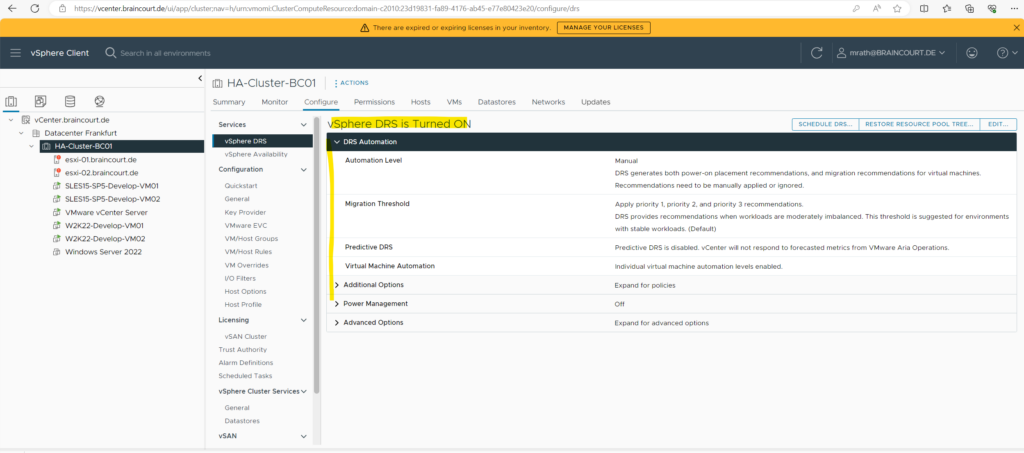

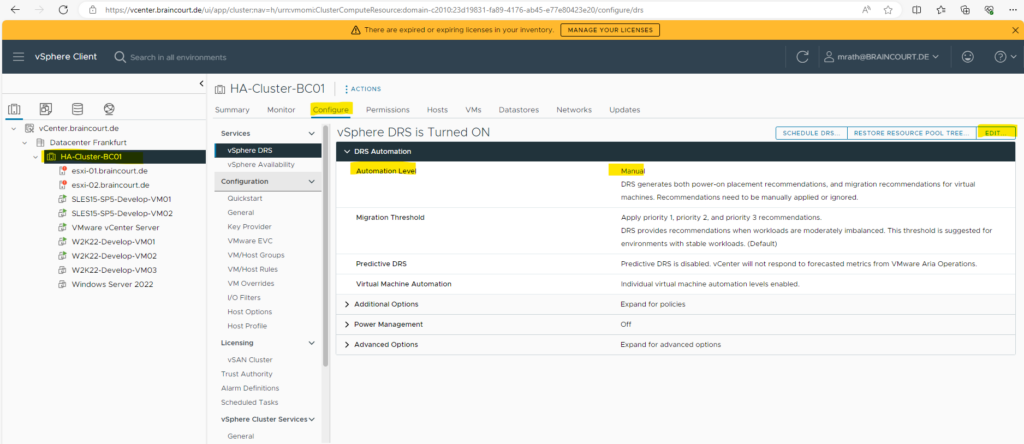

To enable vSphere DRS, first select the cluster object in the inventory, then on the Configure tab select Services -> vSphere DRS and click EDIT … as shown below.

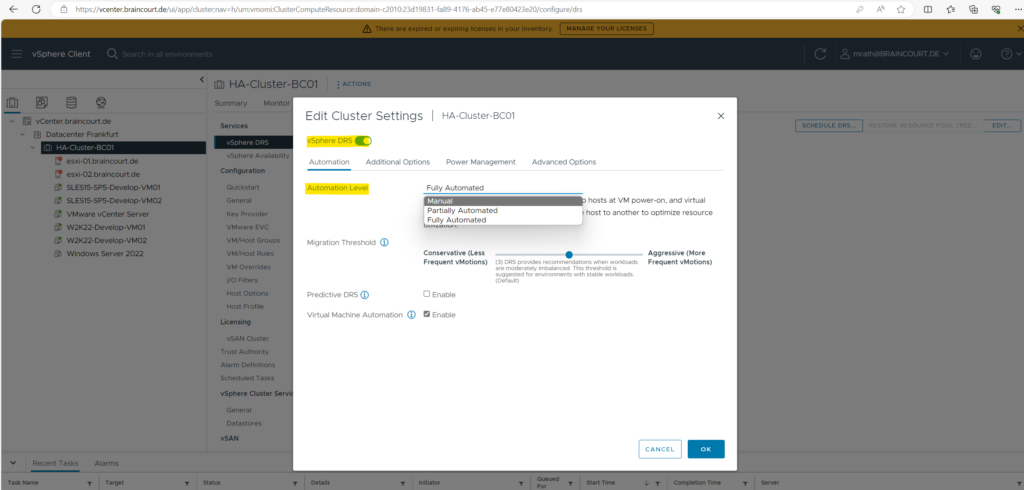

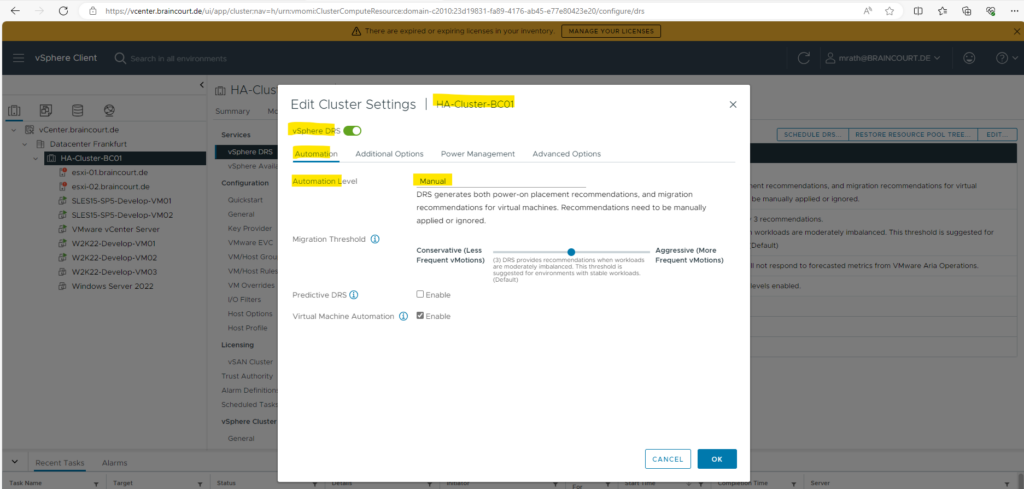

Click the toggle to enable vSphere DRS and select the automation level. Here I select Manual. I describe the different automation levels available in vCenter in the next section.

Manual -> DRS generates both power-on placement recommendations, and migration recommendations for virtual machines. Recommendations need to be manually applied or ignored.

More about the different automation levels: https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.resmgmt.doc/GUID-C21C0609-923B-46FB-920C-887F00DBCAB9.html

Finally, click OK.

From now on, when we create a new virtual machine, we no longer need to specify an ESXi host within the cluster at the compute resource selection step; selecting the cluster is sufficient.


At this point, no specific ESXi host is selected for the virtual machine, only the cluster.

When we now power on this virtual machine, we get a recommendation for which ESXi host to use as the compute resource.

This is because we have now enabled vSphere DRS with the Manual automation level.

Automation Level

During initial placement and load balancing, DRS generates placement and vMotion recommendations, respectively. DRS can apply these recommendations automatically, or you can apply them manually.

vSphere vMotion enables zero-downtime, live migration of workloads from one server to another so your users can continue to access the systems they need to stay productive.

Source: https://www.vmware.com/products/vsphere/vmotion.html

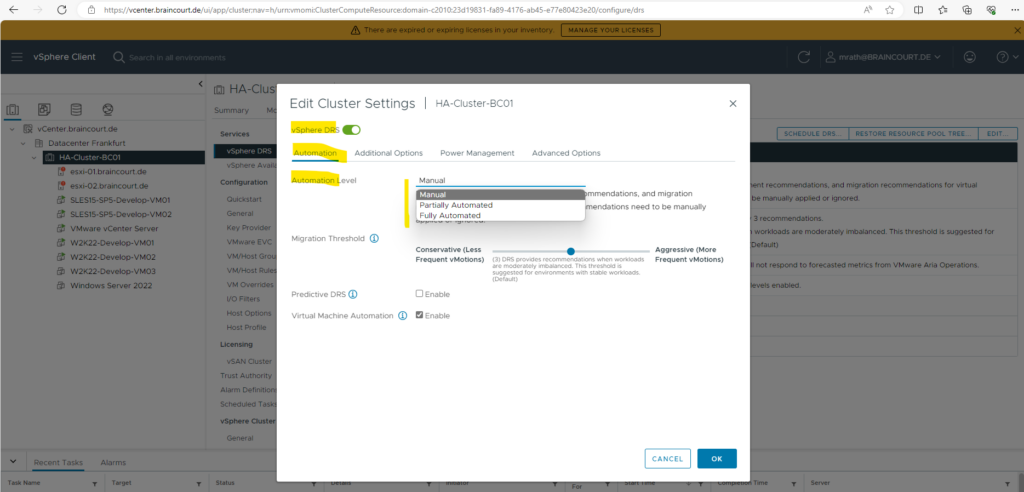

DRS has three cluster-wide automation levels; Disabled is an additional level that applies to individual virtual machines:

- Manual -> Placement and migration recommendations are displayed, but do not run until you manually apply them.

- Fully Automated -> Placement and migration recommendations run automatically.

- Partially Automated -> Initial placement is performed automatically. Migration recommendations are displayed, but do not run.

- Disabled -> vCenter Server does not migrate the virtual machine or provide migration recommendations for it.
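The three cluster-wide levels differ only in which recommendations DRS carries out on its own. The small sketch below encodes the list above as a lookup table; `AutomationLevel` and `drs_behavior` are hypothetical names, not part of any VMware API, and the per-VM Disabled level is left out.

```python
from enum import Enum


class AutomationLevel(Enum):
    MANUAL = "Manual"
    PARTIALLY_AUTOMATED = "Partially Automated"
    FULLY_AUTOMATED = "Fully Automated"


def drs_behavior(level: AutomationLevel) -> dict:
    """What DRS does with initial-placement and migration recommendations:
    'apply' = carried out automatically, 'recommend' = shown to the admin."""
    placement = "recommend" if level is AutomationLevel.MANUAL else "apply"
    migration = "apply" if level is AutomationLevel.FULLY_AUTOMATED else "recommend"
    return {"placement": placement, "migration": migration}


for level in AutomationLevel:
    print(level.value, drs_behavior(level))
```

With Manual, as in my lab, both placement and migration stay recommendations, which is why a host recommendation appears at power-on.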

You can configure the cluster's automation level by selecting the cluster in the inventory and then, on the Configure tab, going to Services -> vSphere DRS, as shown below.

Here you can see that I have set the automation level to Manual. To change it, click EDIT.

Create a Datastore Cluster

When you add a datastore to a datastore cluster, the datastore’s resources become part of the datastore cluster’s resources. As with clusters of hosts, you use datastore clusters to aggregate storage resources, which enables you to support resource allocation policies at the datastore cluster level. The following resource management capabilities are also available per datastore cluster.

- Space utilization load balancing

You can set a threshold for space use. When space use on a datastore exceeds the threshold, Storage DRS generates recommendations or performs Storage vMotion migrations to balance space use across the datastore cluster.

- I/O latency load balancing

You can set an I/O latency threshold for bottleneck avoidance. When I/O latency on a datastore exceeds the threshold, Storage DRS generates recommendations or performs Storage vMotion migrations to help alleviate high I/O load.

- Anti-affinity rules

You can create anti-affinity rules for virtual machine disks. For example, the virtual disks of a certain virtual machine must be kept on different datastores. By default, all virtual disks for a virtual machine are placed on the same datastore.
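The disk anti-affinity rule above boils down to "no two disks of the VM on the same datastore". The sketch below checks that condition and naively spreads disks over distinct datastores; all disk and datastore names are hypothetical, and Storage DRS itself chooses targets using its space and I/O metrics rather than simple ordering.

```python
from typing import Dict, List


def violates_anti_affinity(disk_to_datastore: Dict[str, str]) -> bool:
    """True if two virtual disks of the same VM share a datastore."""
    datastores = list(disk_to_datastore.values())
    return len(datastores) != len(set(datastores))


def spread_disks(disks: List[str], datastores: List[str]) -> Dict[str, str]:
    """Naively place each disk on its own datastore, in the given order."""
    if len(disks) > len(datastores):
        raise ValueError("not enough datastores to keep every disk apart")
    return dict(zip(disks, datastores))


# Hypothetical disk and datastore names.
default_placement = {"vm01.vmdk": "ds-a", "vm01_1.vmdk": "ds-a"}
print(violates_anti_affinity(default_placement))  # True: both disks on ds-a

spread = spread_disks(["vm01.vmdk", "vm01_1.vmdk"], ["ds-a", "ds-b"])
print(violates_anti_affinity(spread))             # False
```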

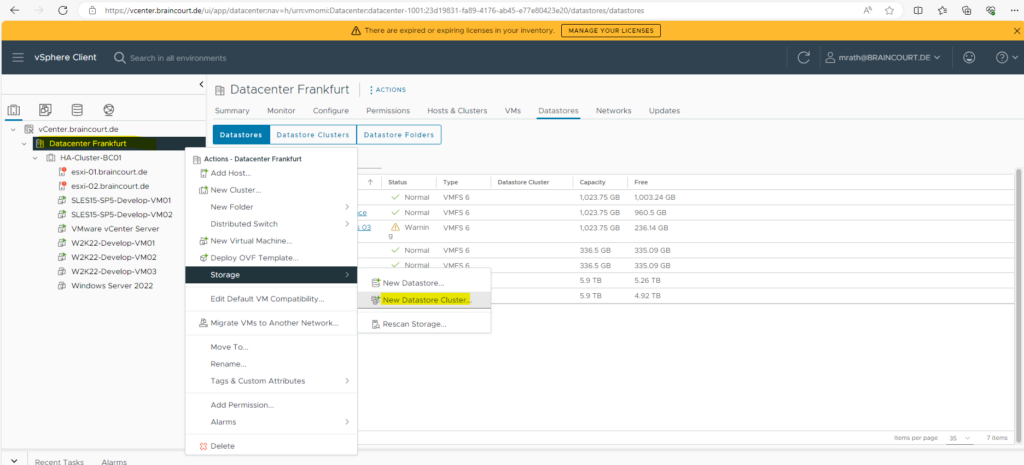

To create a new datastore cluster, right-click the data center and select Storage -> New Datastore Cluster … as shown below.

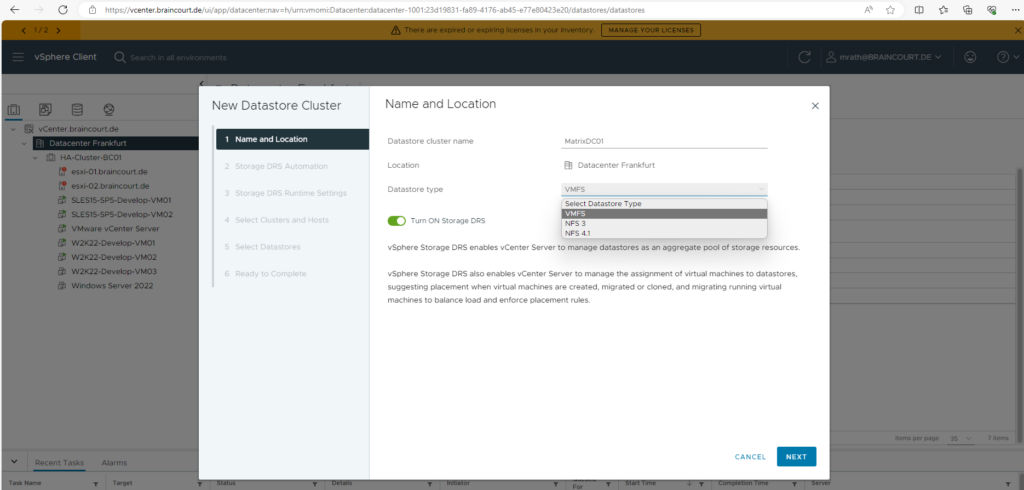

vSphere Storage DRS also enables vCenter Server to manage the assignment of virtual machines to datastores, suggesting placement when virtual machines are created, migrated or cloned, and migrating running virtual machines to balance load and enforce placement rules.

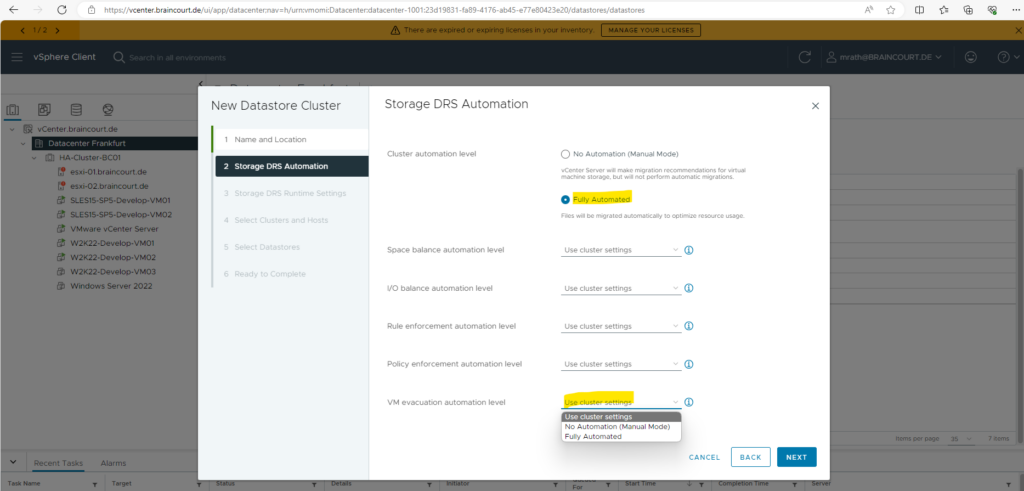

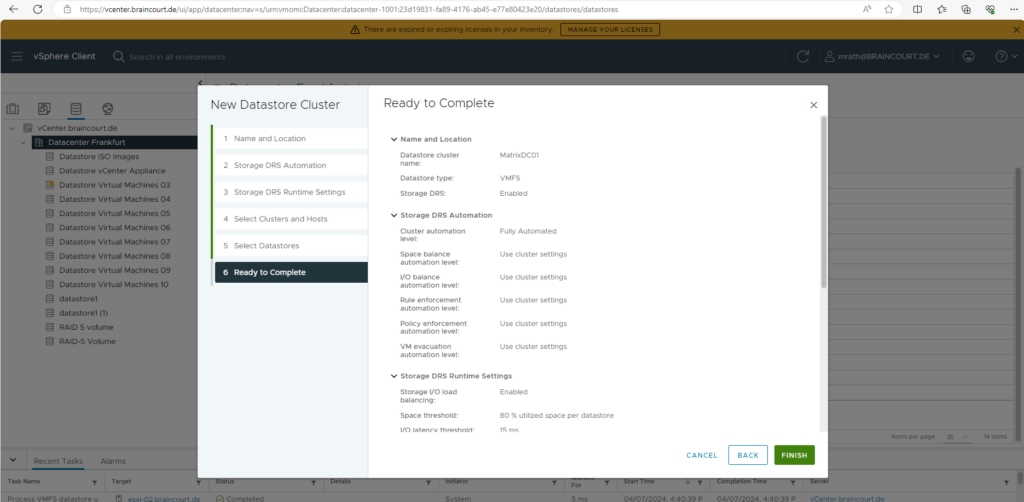

For the resource management capabilities described above, I keep the option to use the cluster settings, which means Fully Automated for all of them.

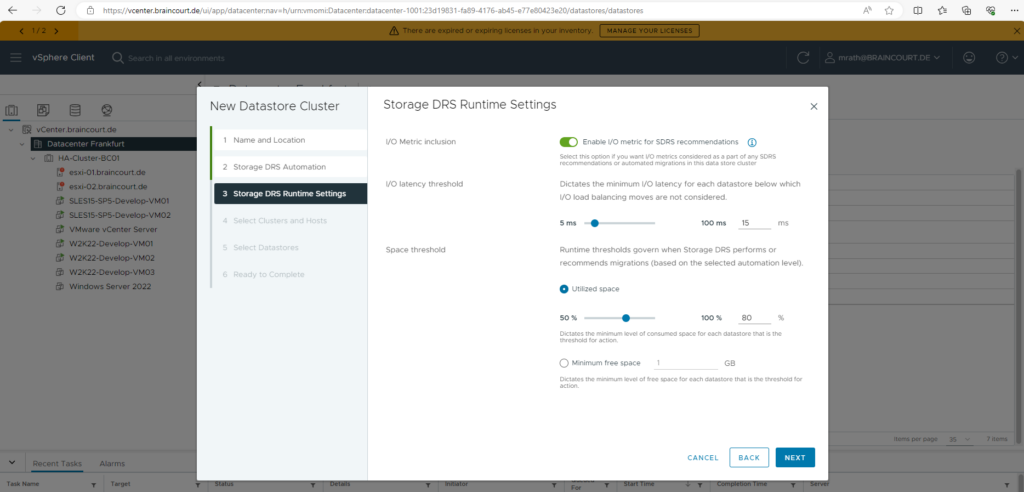

In the Storage DRS Runtime Settings you can set several I/O options. The first, I/O Metric Inclusion, makes Storage DRS consider I/O metrics as part of its recommendations when it is enabled.

The second, I/O Latency Threshold, sets the minimum I/O latency for each datastore. Below this threshold, I/O load-balancing moves are not considered.

Finally, the Space Threshold and its Utilized Space slider set the maximum percentage of consumed space allowed before Storage DRS is triggered. Storage DRS makes recommendations and performs migrations when space use on the datastores is higher than the threshold.
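How these three runtime settings interact can be sketched as a simple check per datastore. The threshold values and datastore figures below are example numbers I chose for illustration, and the real feature evaluates measurements collected over time rather than a single snapshot as this sketch does.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DatastoreStats:
    name: str
    capacity_gb: float
    used_gb: float
    io_latency_ms: float

    @property
    def utilized_percent(self) -> float:
        return 100.0 * self.used_gb / self.capacity_gb


def sdrs_reasons(ds: DatastoreStats, space_threshold_percent: float,
                 io_latency_threshold_ms: float, io_metric_inclusion: bool) -> List[str]:
    """Reasons Storage DRS would consider this datastore for rebalancing."""
    reasons = []
    if ds.utilized_percent > space_threshold_percent:
        reasons.append("space")
    # I/O latency only counts when I/O Metric Inclusion is enabled.
    if io_metric_inclusion and ds.io_latency_ms > io_latency_threshold_ms:
        reasons.append("io-latency")
    return reasons


ds = DatastoreStats("ds-a", capacity_gb=500, used_gb=425, io_latency_ms=22.0)  # 85 % used
print(sdrs_reasons(ds, 80, 15, io_metric_inclusion=True))   # ['space', 'io-latency']
print(sdrs_reasons(ds, 80, 15, io_metric_inclusion=False))  # ['space']
```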

VMware Storage Distributed Resource Scheduler (SDRS) is a vSphere feature that moves virtual machines or VMDKs nondisruptively (by using Storage vMotion in the background) between the datastores in a datastore cluster, selecting the best datastore in which to place the virtual machine or VMDKs. A datastore cluster is a collection of similar datastores that are aggregated into a single unit.

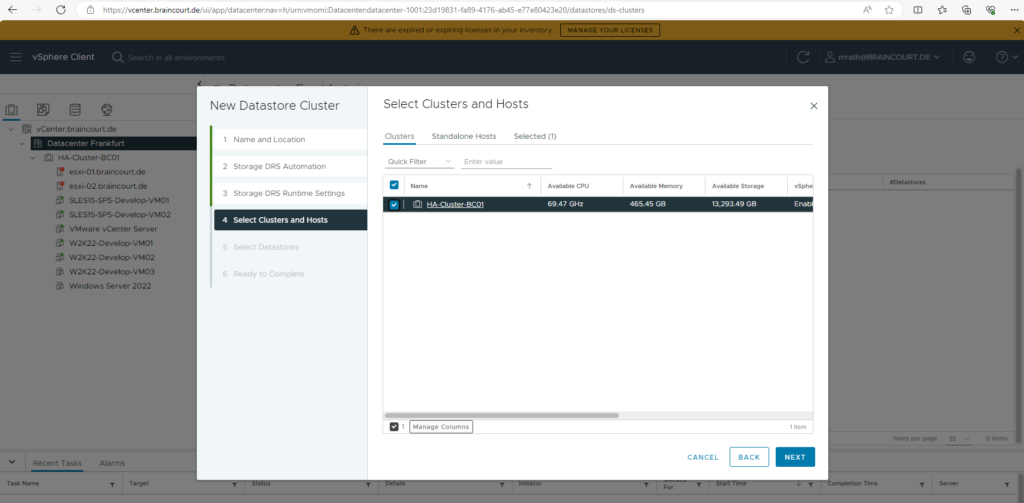

We can assign the new datastore cluster to an ESXi host cluster or to standalone hosts; I assign it to my 2-node ESXi host cluster below.

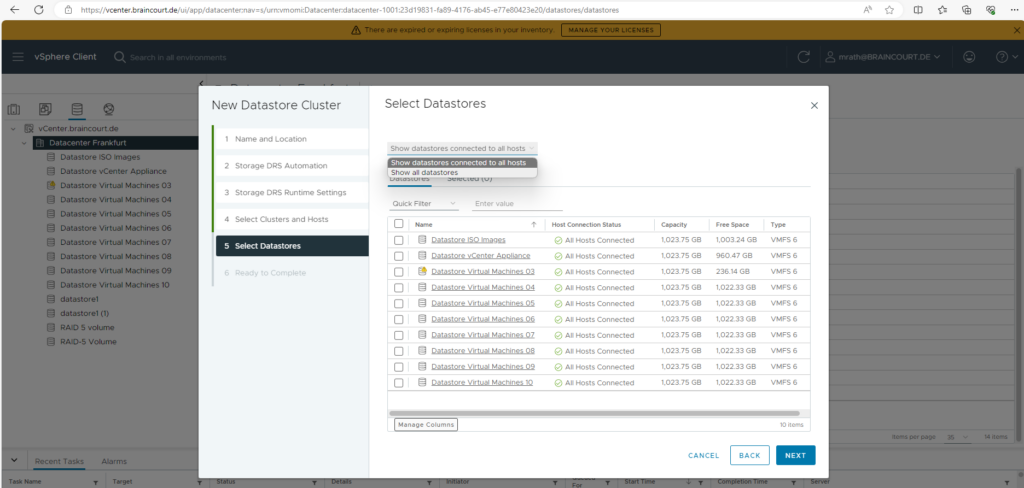

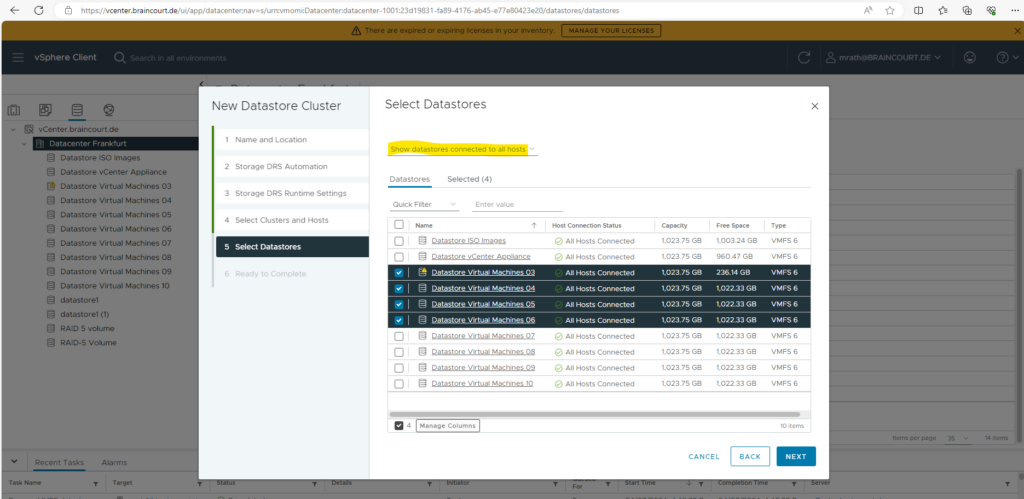

Finally, we need to add all datastores that should become part of the new datastore cluster. Here you can filter to show all available datastores in your vSphere environment or only the ones that are connected to all hosts in the cluster.

To provide high availability, you should add datastores that are connected to all hosts, which is the filter selected by default below.
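The "connected to all hosts" filter is essentially a set intersection over the datastores each host has mounted. A minimal sketch with hypothetical host and datastore names:

```python
from functools import reduce
from typing import Dict, Set

# Hypothetical datastores mounted on each host.
MOUNTS: Dict[str, Set[str]] = {
    "esxi-01": {"shared-01", "shared-02", "shared-03", "shared-04", "local-01"},
    "esxi-02": {"shared-01", "shared-02", "shared-03", "shared-04", "local-02"},
}


def connected_to_all_hosts(mounts: Dict[str, Set[str]]) -> Set[str]:
    """Datastores mounted on every host in the cluster."""
    return reduce(lambda a, b: a & b, mounts.values())


print(sorted(connected_to_all_hosts(MOUNTS)))
# ['shared-01', 'shared-02', 'shared-03', 'shared-04']
```

Local datastores drop out because only one host can reach them, so they cannot help with failover.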

A datastore cluster can contain a mix of datastores with different sizes and I/O capacities, and can be from different arrays and vendors. NFS and VMFS datastores cannot be combined in the same datastore cluster.

By the way, all datastores listed above are provided by my Microsoft iSCSI Target Server, which is a great solution, especially for lab environments, to provide a network-accessible block storage device at low cost.

You will find more about the Microsoft iSCSI Target Server and how to set it up in my following post.

You can read more about setting up iSCSI virtual disks as shared storage for an ESXi host cluster in my following post.

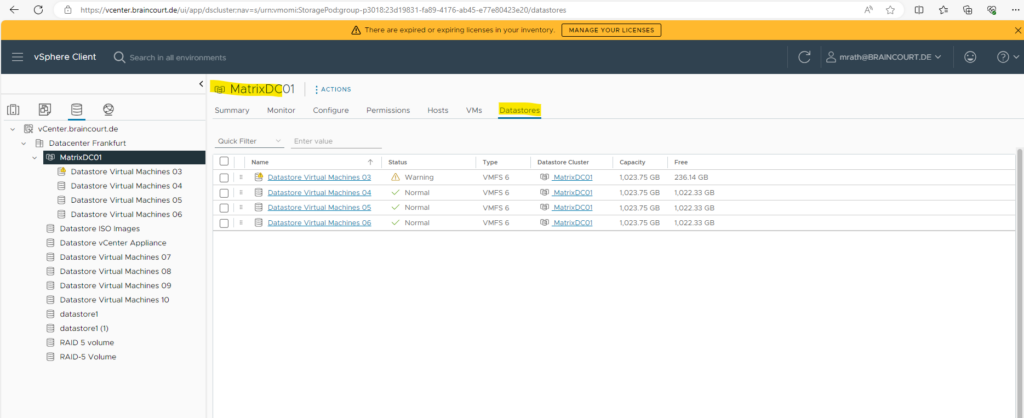

For my first datastore cluster, named MatrixDC01, I assign the following four datastores; later I will create a second datastore cluster from the remaining datastores 07 – 10.

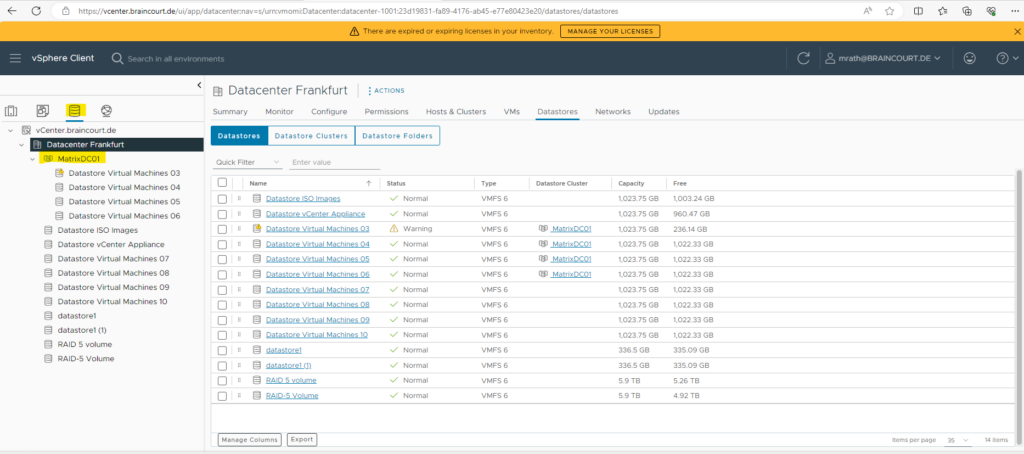

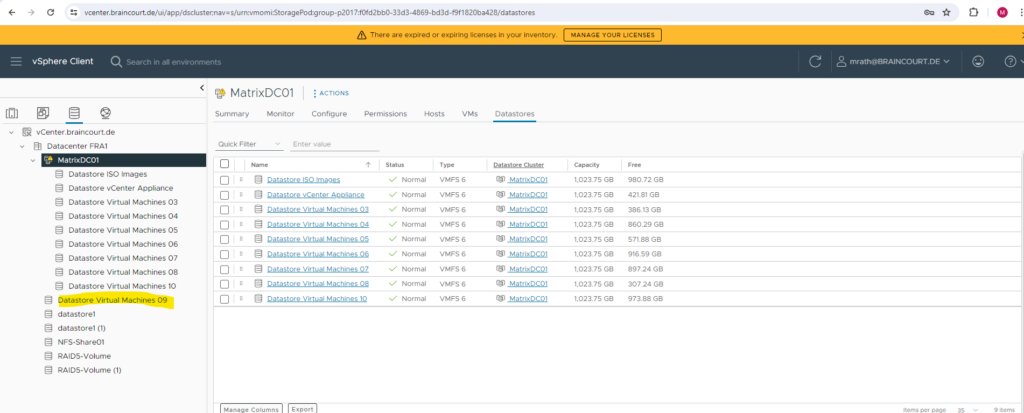

The new datastore cluster shows up immediately in the Storage menu, as shown below.

You can see all datastores that belong to this datastore cluster on the Datastores tab of the datastore cluster, as shown below.

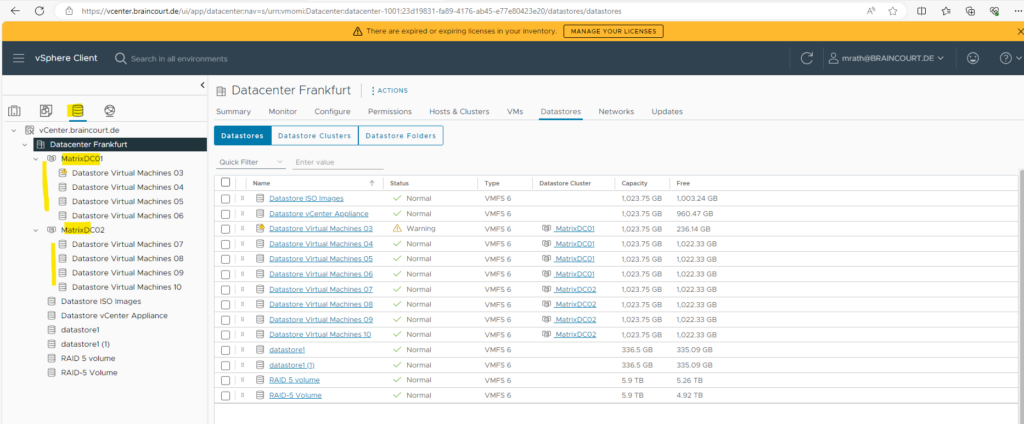

After I added the second datastore cluster, named MatrixDC02, it looks like this.

You can easily identify a datastore cluster by its dedicated icon, as shown below.

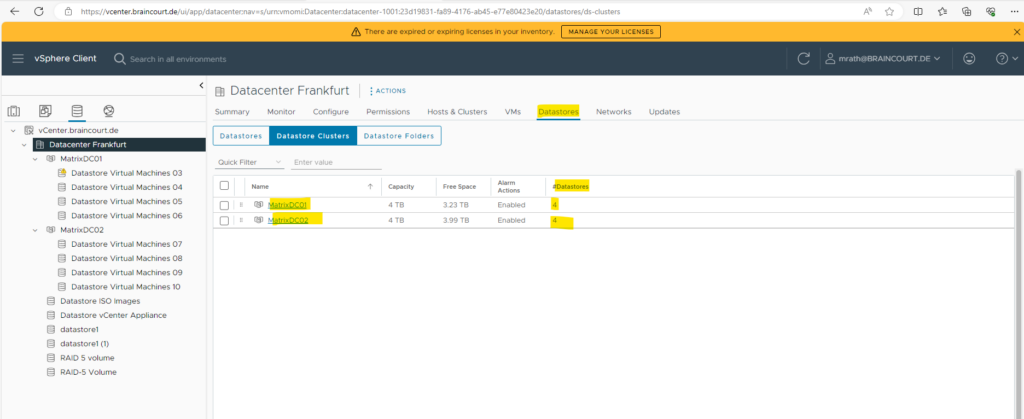

You can also see all datastore clusters in your data center by selecting the data center, opening its Datastores tab and then the Datastore Clusters tab, as shown below.

Datastore Cluster Requirements

Datastores and hosts that are associated with a datastore cluster must meet certain requirements to use datastore cluster features successfully.

Follow these guidelines when you create a datastore cluster.

- Datastore clusters must contain similar or interchangeable datastores. A datastore cluster can contain a mix of datastores with different sizes and I/O capacities, and can be from different arrays and vendors. However, the following types of datastores cannot coexist in a datastore cluster.

  - NFS and VMFS datastores cannot be combined in the same datastore cluster.

  - Replicated datastores cannot be combined with non-replicated datastores in the same Storage-DRS-enabled datastore cluster.

- All hosts attached to the datastores in a datastore cluster must be ESXi 5.0 and later. If datastores in the datastore cluster are connected to ESX/ESXi 4.x and earlier hosts, Storage DRS does not run.

- Datastores shared across multiple data centers cannot be included in a datastore cluster.

- As a best practice, do not include datastores that have hardware acceleration enabled in the same datastore cluster as datastores that do not have hardware acceleration enabled. Datastores in a datastore cluster must be homogeneous to guarantee hardware acceleration-supported behavior.
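The guidelines above can be expressed as a pre-flight check. The sketch below is a hypothetical helper of my own, not a vSphere API; the datastore properties are modeled as plain fields, and the ESXi version rule is simplified to a major-version number.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class MemberDatastore:
    name: str
    kind: str                     # "VMFS" or "NFS"
    replicated: bool
    hw_acceleration: bool
    datacenters: Tuple[str, ...]  # data centers that share this datastore
    oldest_host_major: int        # oldest ESXi major version among attached hosts


def datastore_cluster_issues(members: List[MemberDatastore], storage_drs: bool = True) -> List[str]:
    """Return the guideline violations for a proposed set of member datastores."""
    issues = []
    if len({m.kind for m in members}) > 1:
        issues.append("NFS and VMFS datastores cannot be combined")
    if storage_drs and len({m.replicated for m in members}) > 1:
        issues.append("replicated and non-replicated datastores cannot be combined")
    for m in members:
        if len(m.datacenters) > 1:
            issues.append(f"{m.name} is shared across multiple data centers")
        if m.oldest_host_major < 5:
            issues.append(f"{m.name} is attached to an ESX/ESXi 4.x or earlier host; Storage DRS does not run")
    if len({m.hw_acceleration for m in members}) > 1:
        issues.append("best practice: do not mix datastores with and without hardware acceleration")
    return issues


ok = [MemberDatastore(f"ds-{i}", "VMFS", False, True, ("dc-1",), 7) for i in range(1, 5)]
print(datastore_cluster_issues(ok))     # []
mixed = ok + [MemberDatastore("nfs-1", "NFS", False, True, ("dc-1",), 7)]
print(datastore_cluster_issues(mixed))  # ['NFS and VMFS datastores cannot be combined']
```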

Remove/Add a Datastore from/to a Datastore Cluster

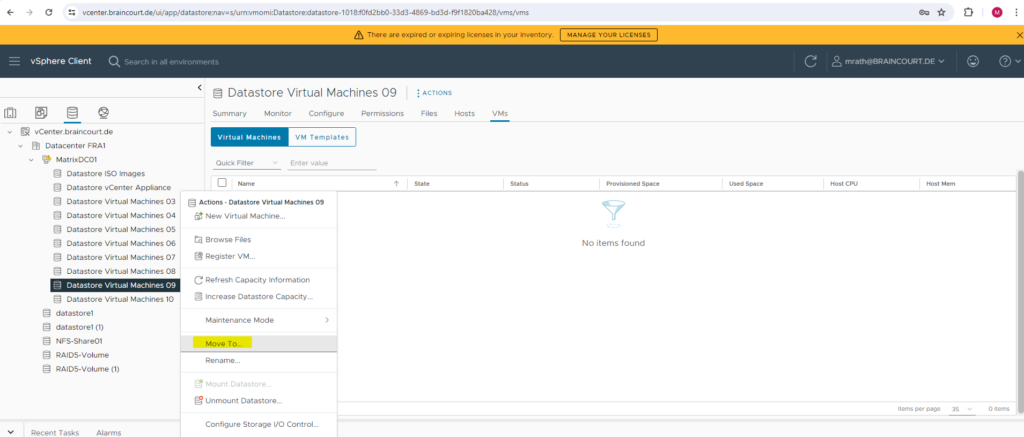

To remove a datastore from a datastore cluster, right-click the datastore and select Move To … as shown below.

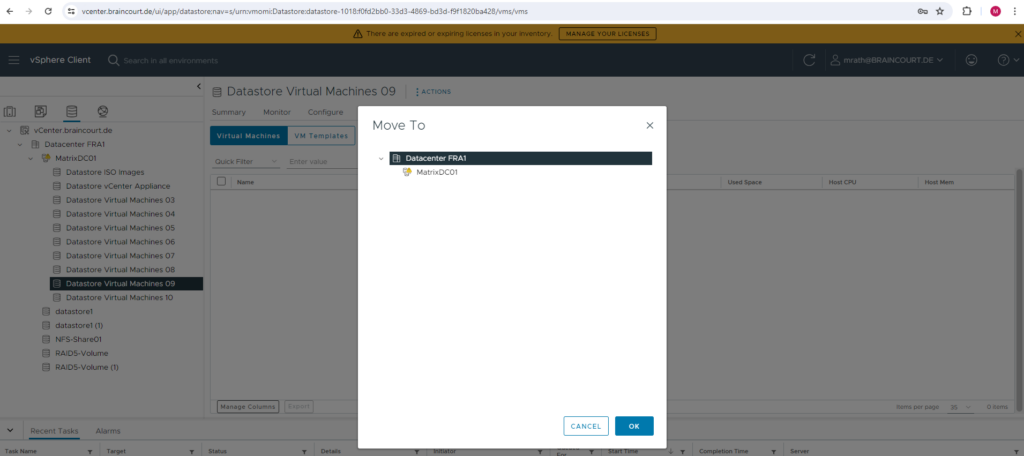

Move the datastore directly to the datacenter node and click on OK.

The datastore is now removed from the datastore cluster.

To add a datastore to a datastore cluster, likewise right-click the datastore, select Move To …, and then select the datastore cluster you want to add it to.

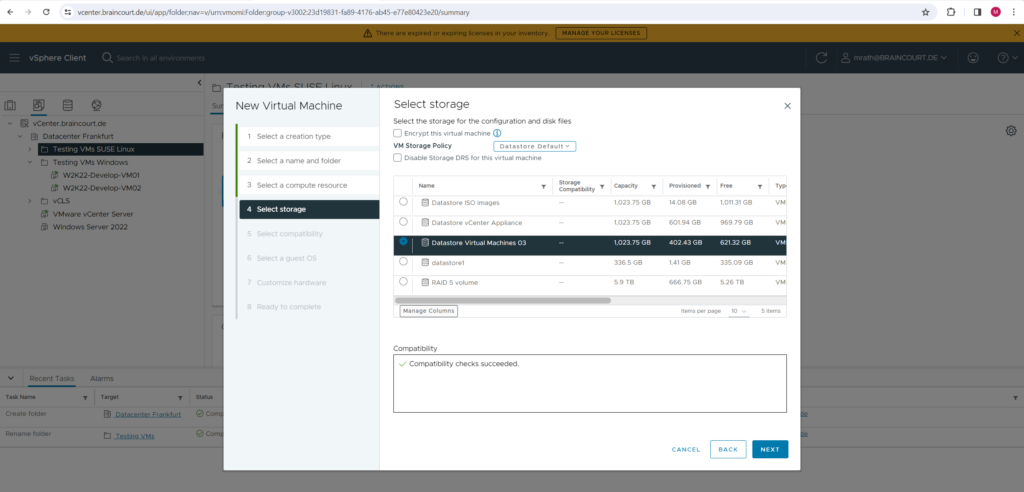

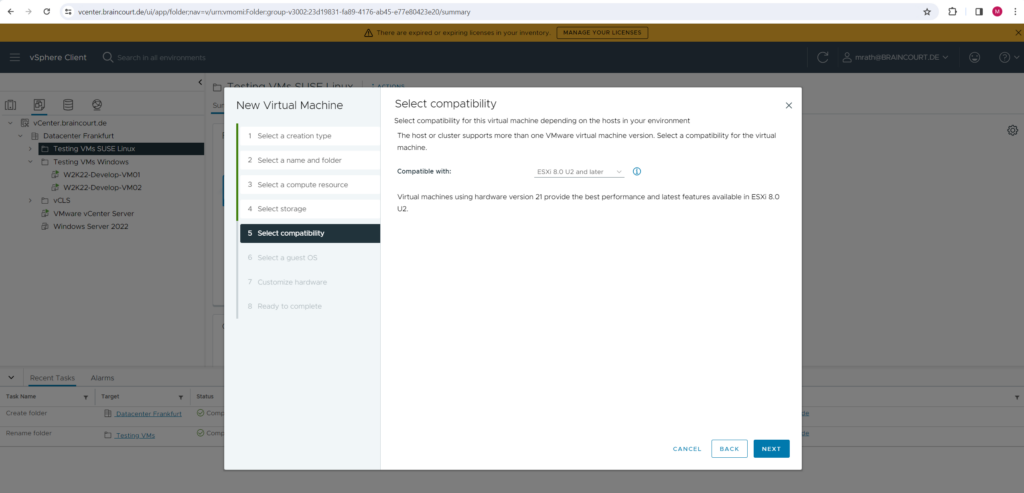

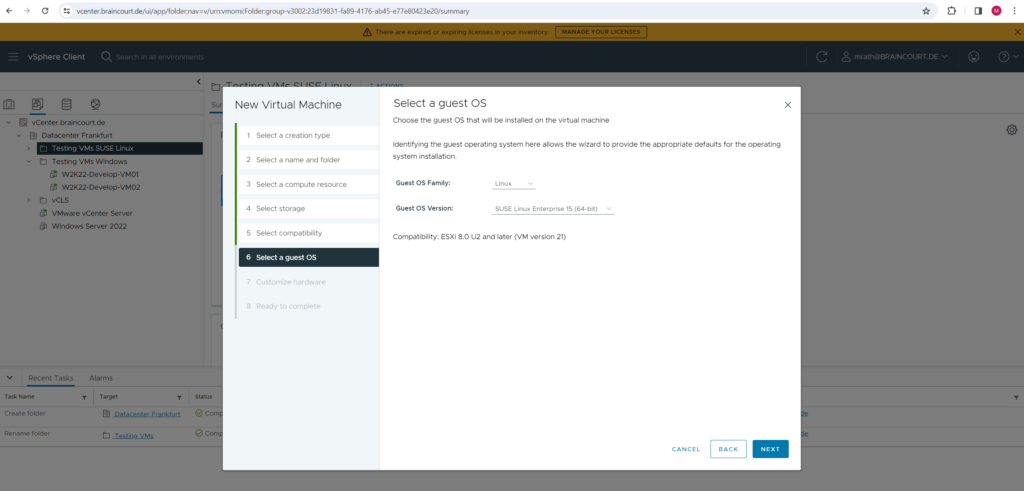

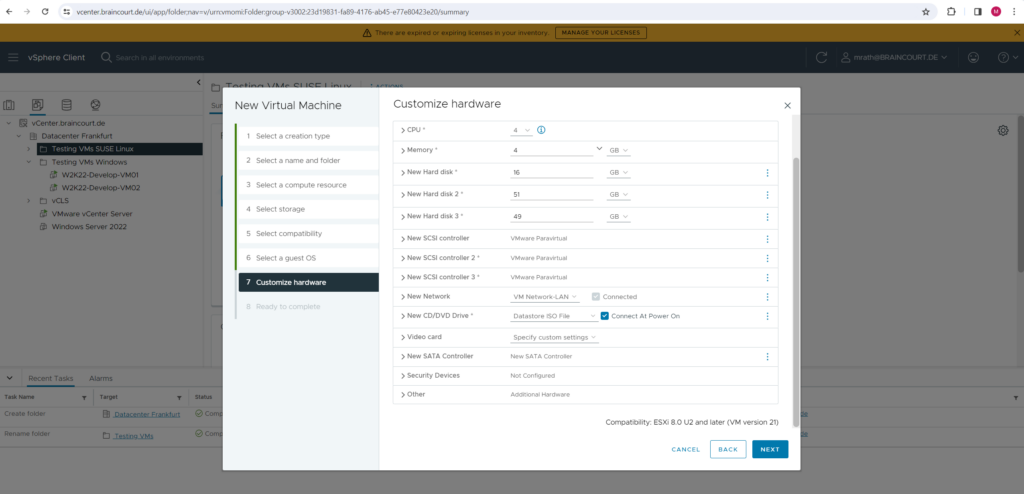

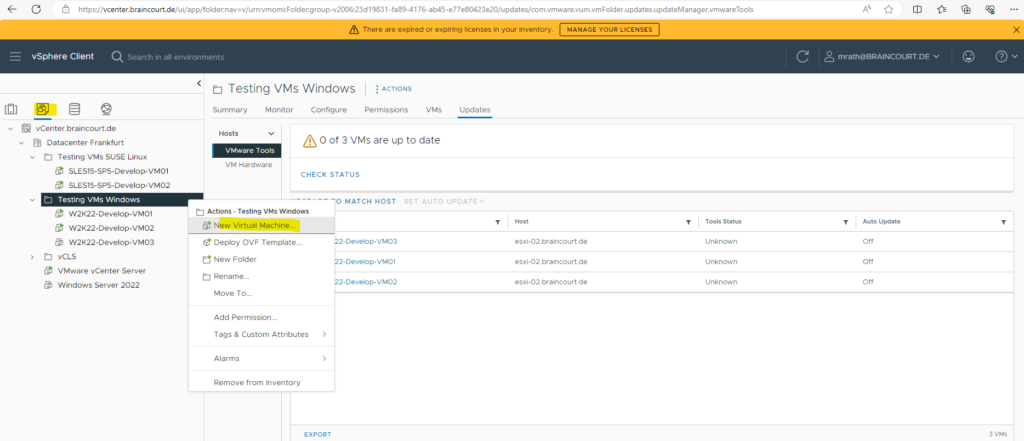

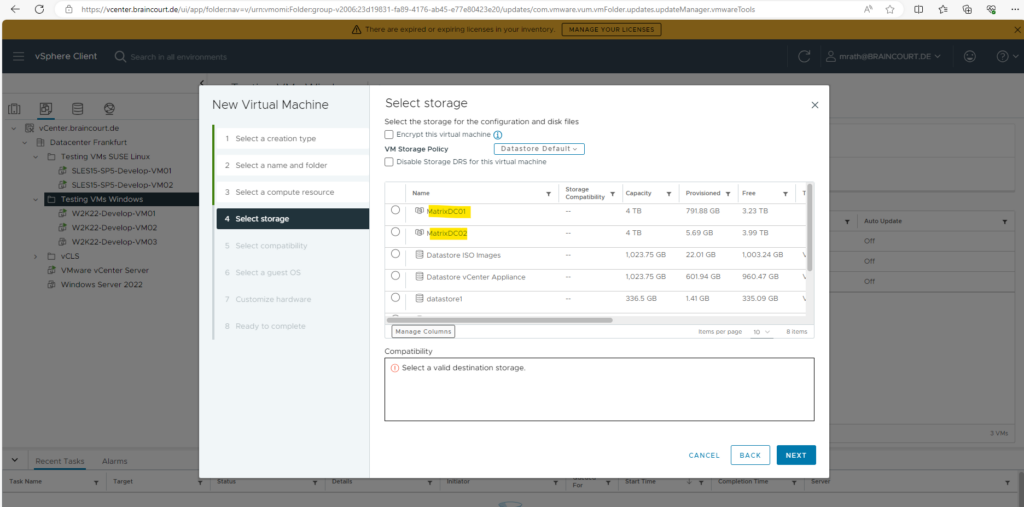

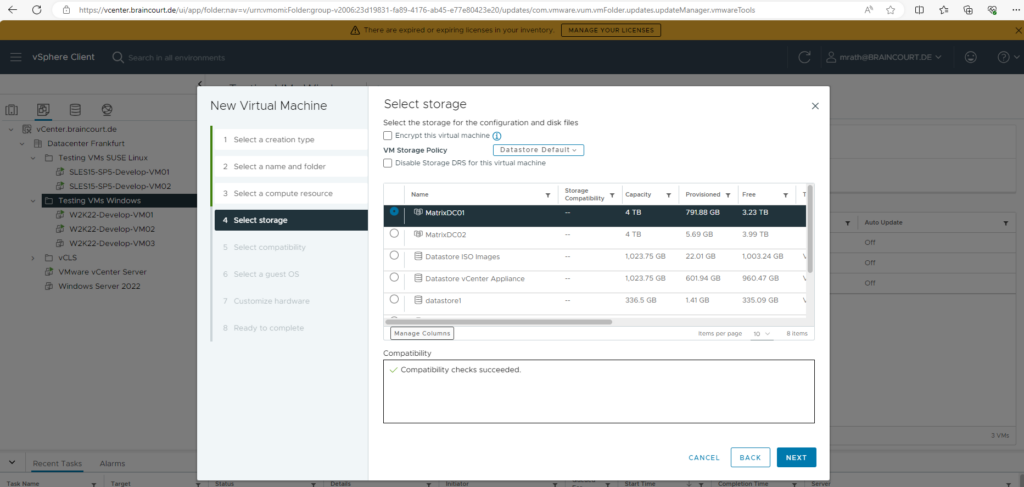

Creating a new VM by using the Datastore Cluster

Below I also want to show how to use the new datastore cluster to store the files of a new virtual machine.

For the compute resource I will just select the ESXi host cluster and not a dedicated ESXi host.

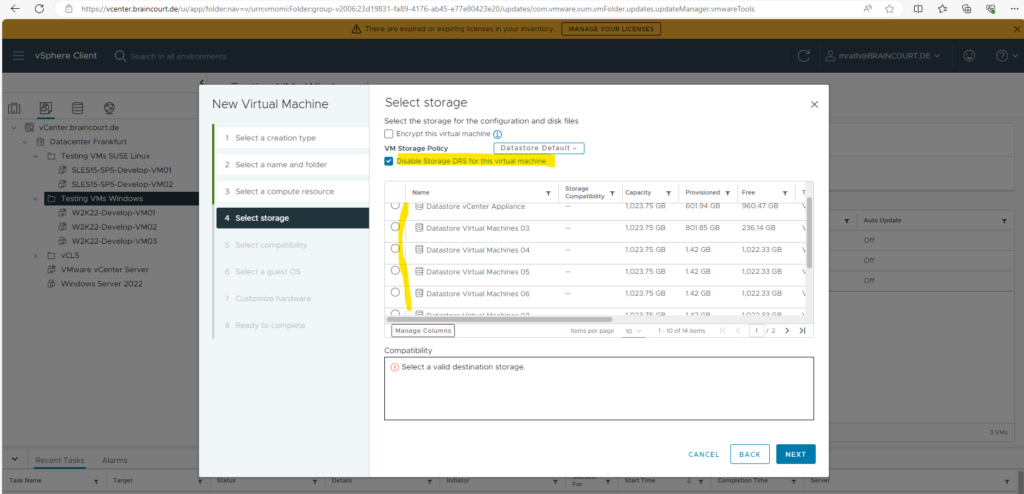

For storage, we can now select either individual datastores that are not part of a datastore cluster or one of the datastore clusters I created earlier.

By default, the individual datastores that belong to my new datastore clusters (MatrixDC01 & MatrixDC02) are no longer listed separately below.

If you want to choose a specific individual datastore from a datastore cluster, check Disable Storage DRS for this virtual machine, as shown below.

When Storage DRS is activated, it provides recommendations for virtual machine disk placement and migration to balance space and I/O resources across the datastores in the datastore cluster.

Here I use my first new datastore cluster to store the virtual machine.

Finally, when I power on the virtual machine, I again get a recommendation for the host to run it on, because vSphere DRS is set to the Manual automation level, as shown earlier.

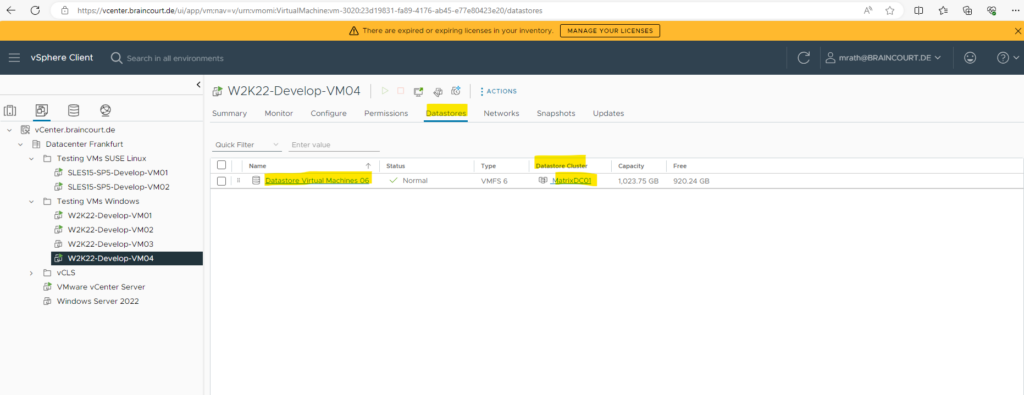

By clicking the virtual machine and switching to the Datastores tab, we can check which individual datastore from the datastore cluster vSphere Storage DRS (SDRS) finally chose to store the files of the virtual machine, including its VMDK (Virtual Machine Disk) file.

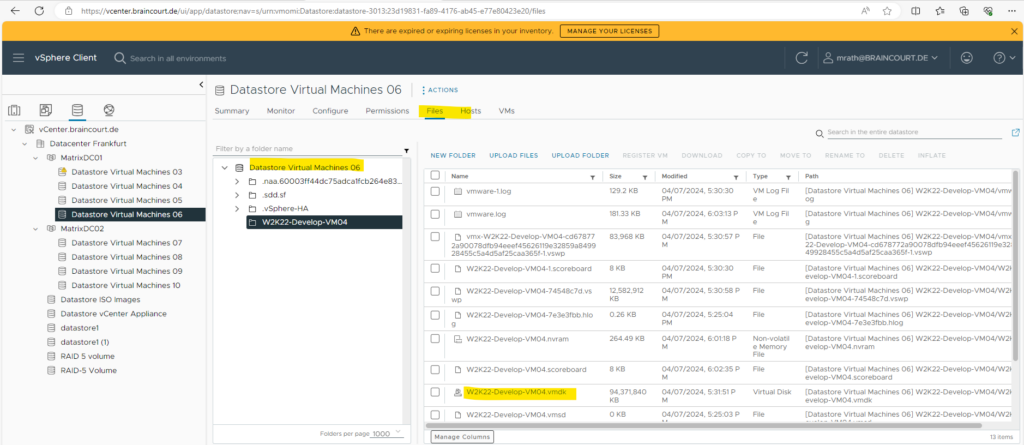

Furthermore, by clicking this datastore and switching to the Files tab, we can see all files stored on it, including our VMDK (Virtual Machine Disk) file.

VMDK (short for Virtual Machine Disk) is a file format that describes containers for virtual hard disk drives to be used in virtual machines, for example in VMware Workstation or VirtualBox.

Initially developed by VMware for its proprietary virtual appliance products, VMDK became an open format with revision 5.0 in 2011, and is one of the disk formats used inside the Open Virtualization Format for virtual appliances.

The maximum VMDK size is generally 2TB for most applications, but in September 2013, VMware vSphere 5.5 introduced 62TB VMDK capacity.

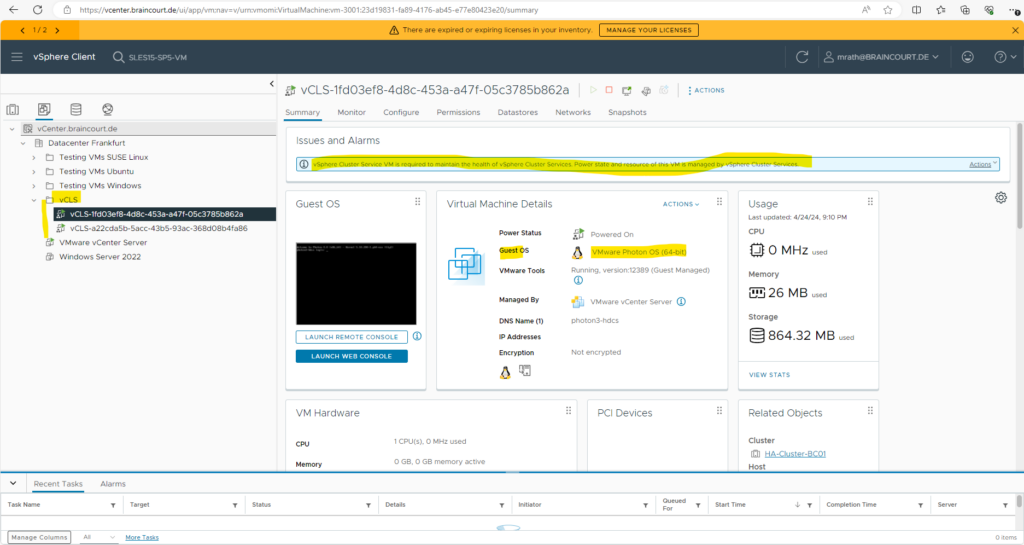

vSphere Cluster Services

vSphere Cluster Services (vCLS) is activated by default and runs in all vSphere clusters. vCLS ensures that if vCenter Server becomes unavailable, cluster services remain available to maintain the resources and health of the workloads that run in the clusters. vCenter Server is still required to run DRS and HA.

vCLS is activated when you upgrade to vSphere 7.0 Update 3 or when you have a new vSphere 7.0 Update 3 deployment. vCLS is upgraded as part of vCenter Server upgrade.

vCLS uses agent virtual machines to maintain cluster services health. The vCLS agent virtual machines (vCLS VMs) are created when you add hosts to clusters. Up to three vCLS VMs are required to run in each vSphere cluster, distributed within a cluster. vCLS is also activated on clusters which contain only one or two hosts. In these clusters the number of vCLS VMs is one and two, respectively.

New anti-affinity rules are applied automatically. Every three minutes a check is performed; if multiple vCLS VMs are located on a single host, they are automatically redistributed to different hosts.

Below you can see that in my lab environment, with two ESXi hosts in the cluster, two vCLS VMs are running.
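The vCLS sizing and distribution rules described above can be summarized in a few lines. The sketch below is only a model of those rules, not how vCenter implements them; the VM and host names are hypothetical, and the three-minute check interval is not modeled.

```python
from collections import Counter
from typing import Dict, List


def expected_vcls_vms(host_count: int) -> int:
    """One vCLS VM per host, capped at three per cluster."""
    return min(host_count, 3)


def hosts_to_rebalance(placement: Dict[str, str]) -> List[str]:
    """placement maps each vCLS VM to its host; return hosts running more than one."""
    per_host = Counter(placement.values())
    return sorted(host for host, count in per_host.items() if count > 1)


print([expected_vcls_vms(n) for n in range(1, 6)])                      # [1, 2, 3, 3, 3]
print(hosts_to_rebalance({"vcls-a": "esxi-01", "vcls-b": "esxi-01"}))  # ['esxi-01']
print(hosts_to_rebalance({"vcls-a": "esxi-01", "vcls-b": "esxi-02"}))  # []
```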

Links

How Do You Create and Configure Clusters in the vSphere Client
https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-vcenter-esxi-management/GUID-F7818000-26E3-4E2A-93D2-FCDCE7114508.html

How vSphere HA Works
https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.avail.doc/GUID-33A65FF7-DA22-4DC5-8B18-5A7F97CCA536.html

Distributed Resource Scheduler
https://www.vmware.com/products/vsphere/drs-dpm.html

vSAN
https://www.vmware.com/products/vsan.html

Add a Managed Host to a Cluster
https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.resmgmt.doc/GUID-199B9DE8-498A-485D-9FE2-EA639B2C1F8A.html

vSphere vMotion
https://www.vmware.com/products/vsphere/vmotion.html

vSAN 2-node Cluster Guide
https://core.vmware.com/resource/vsan-2-node-cluster-guide

Understanding vSAN Architecture: Disk Groups
https://blogs.vmware.com/virtualblocks/2019/04/18/vsan-disk-groups/

What Is a VMware DRS Cluster?
https://www.nakivo.com/blog/what-is-vmware-drs-cluster/

VMware vSphere HA and DRS Compared and Explained
https://www.nakivo.com/blog/vmware-vsphere-ha-and-drs-compared-and-explained/

UNDERSTANDING vSPHERE DRS PERFORMANCE
https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/techpaper/vsphere6-drs-perf.pdf

Creating a Datastore Cluster
https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.resmgmt.doc/GUID-598DF695-107E-406B-9C95-0AF961FC227A.html