Set up an NFS Server on SUSE Linux Enterprise Server 15

Today I want to show step by step how to set up an NFS Server on SUSE Linux Enterprise Server 15. We will also see how to access (import) the file systems exported by the NFS Server (so-called exports or shares) from NFS Clients such as Linux, Windows, and vSphere environments.

NFS itself is a network file sharing protocol, like SMB or AFP, that provides access to files over a network. All of these protocols depend on an underlying local file system, such as ext3, ext4, XFS, Btrfs, ZFS, or NTFS, to physically store and manage the files, as shown in my following post on a Windows NFS Server.

To learn how to set up an NFS Server on Windows Server, see my following post.

Introduction

The Network File System (NFS) is a standardized, well-proven, and widely-supported network protocol that allows files to be shared between separate hosts.

In the default configuration, NFS completely trusts the network and thus any machine that is connected to a trusted network. Any user with administrator privileges on any computer with physical access to any network the NFS server trusts can access any files that the server makes available.

Often, this level of security is perfectly satisfactory, such as when the network that is trusted is truly private, often localized to a single cabinet or machine room, and no unauthorized access is possible. In other cases, the need to trust a whole subnet as a unit is restrictive, and there is a need for more fine-grained trust. To meet the need in these cases, NFS supports various security levels using the Kerberos infrastructure. Kerberos requires NFSv4, which is used by default.

Source: https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-nfs.html

Terms

Exports -> A directory exported by an NFS server, which clients can integrate into their systems.

NFS client -> The NFS client is a system that uses NFS services from an NFS server over the Network File System protocol.

NFS server -> The NFS server provides NFS services to clients. A running server depends on the following daemons: nfsd (worker), idmapd (ID-to-name mapping for NFSv4, needed for certain scenarios only), statd (file locking), and mountd (mount requests).

NFSv3 -> NFSv3 is the version 3 implementation, the “old” stateless NFS that supports client authentication.

NFSv4 -> NFSv4 is the new version 4 implementation that supports secure user authentication via Kerberos. NFSv4 requires one single port only and thus is better suited for environments behind a firewall than NFSv3. The protocol is specified as https://datatracker.ietf.org/doc/html/rfc3530.

pNFS -> Parallel NFS, a protocol extension of NFSv4. Any pNFS clients can directly access the data on an NFS server.

Important: Need for DNS

In principle, all exports can be made using IP addresses only. To avoid timeouts, you need a working DNS system. DNS is necessary at least for logging purposes, because the mountd daemon does reverse lookups.

Source: https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-nfs.html
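Because mountd performs reverse lookups, it can help to confirm on the NFS server that a client's IP address resolves back to a name. The following is only a minimal sketch, assuming the getent command is available; the helper name reverse_name is my own and not part of any NFS tooling.

```shell
# Hypothetical helper: print the first name an IP address resolves back to.
# Prints nothing if the reverse lookup does not return a result.
reverse_name() {
    getent hosts "$1" | awk '{ print $2; exit }'
}
```

Calling reverse_name with the IP address of an NFS client should print that client's host name. Empty output suggests that a PTR record or an /etc/hosts entry is missing.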

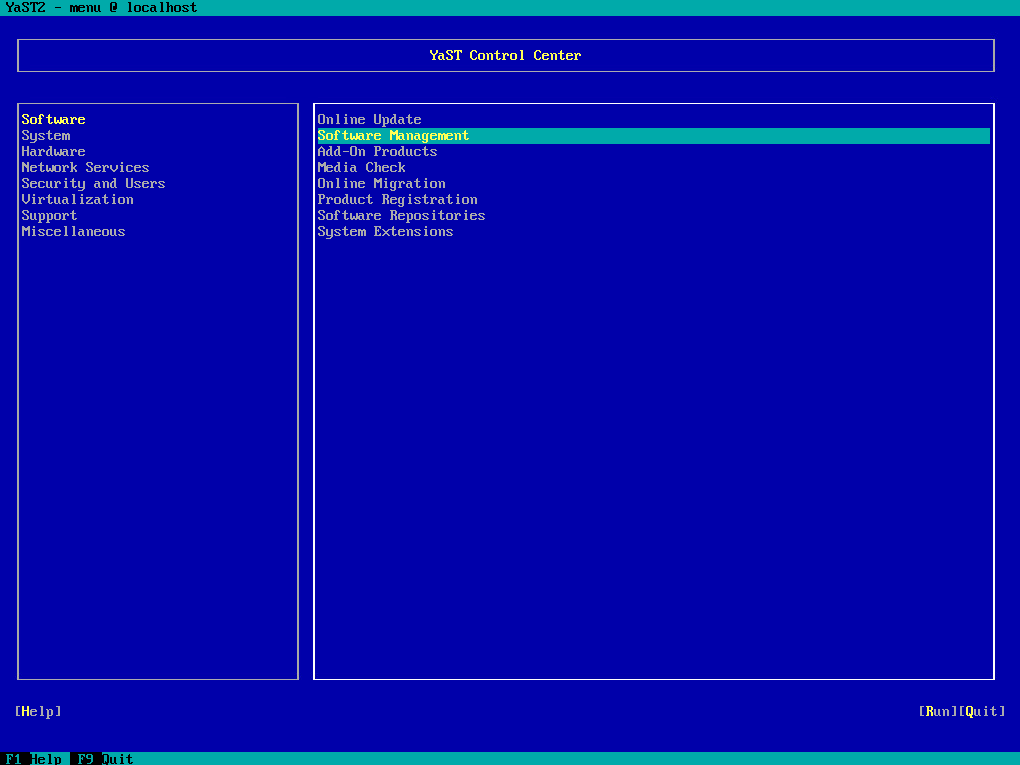

Install NFS Server

The NFS server is not part of the default installation. To install the NFS server by using YaST, select Software › Software Management as shown below.

NFS is a client/server system. However, a machine can be both – it can supply file systems over the network (export) and mount file systems from other hosts (import).

Mounting NFS volumes locally on the exporting server is not supported on SUSE Linux Enterprise Server.

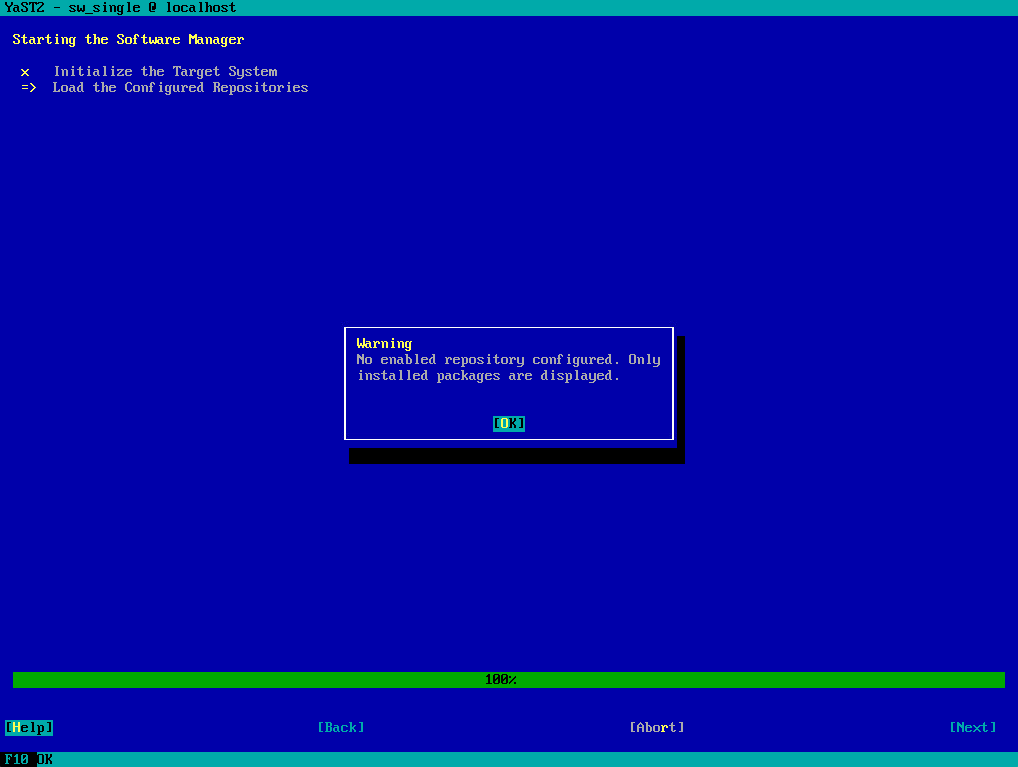

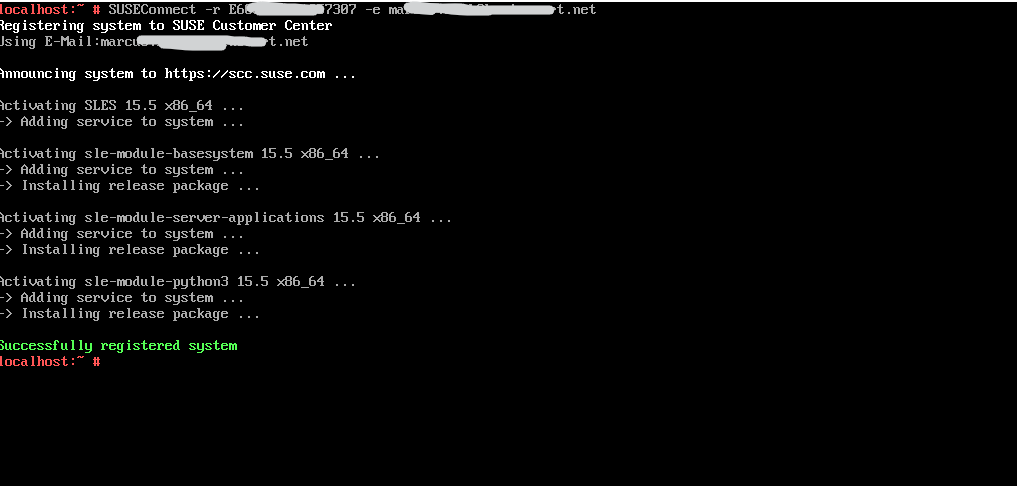

To access the SUSE repository, I first need to register the system with the SUSE Customer Center (SCC) as shown next. You will find more about this here: https://blog.matrixpost.net/set-up-suse-linux-enterprise-server/#registering_SUSEConnect.

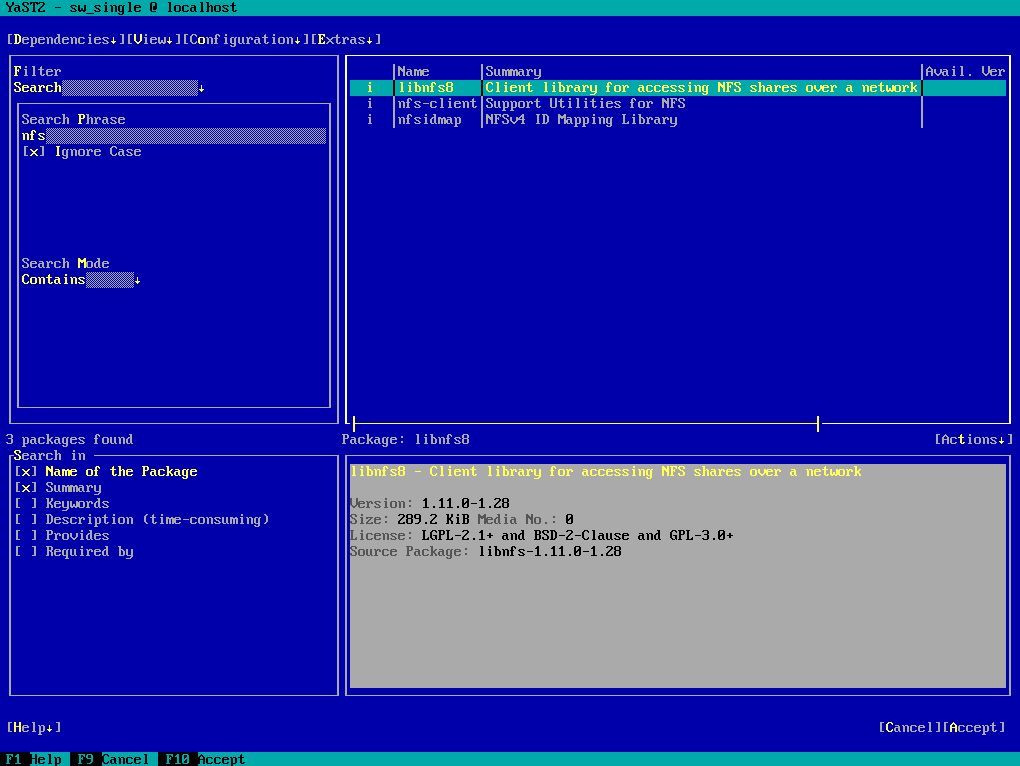

As long as the system is not registered with the SUSE Customer Center (SCC), only the following packages show up when searching for nfs.

So I will first register the system with the SUSE Customer Center (SCC).

sudo SUSEConnect -r REGISTRATION_CODE -e EMAIL_ADDRESS

Source: https://blog.matrixpost.net/set-up-suse-linux-enterprise-server/#registering_SUSEConnect

To request a trial version and account, see:

https://blog.matrixpost.net/set-up-suse-linux-enterprise-server/#trial

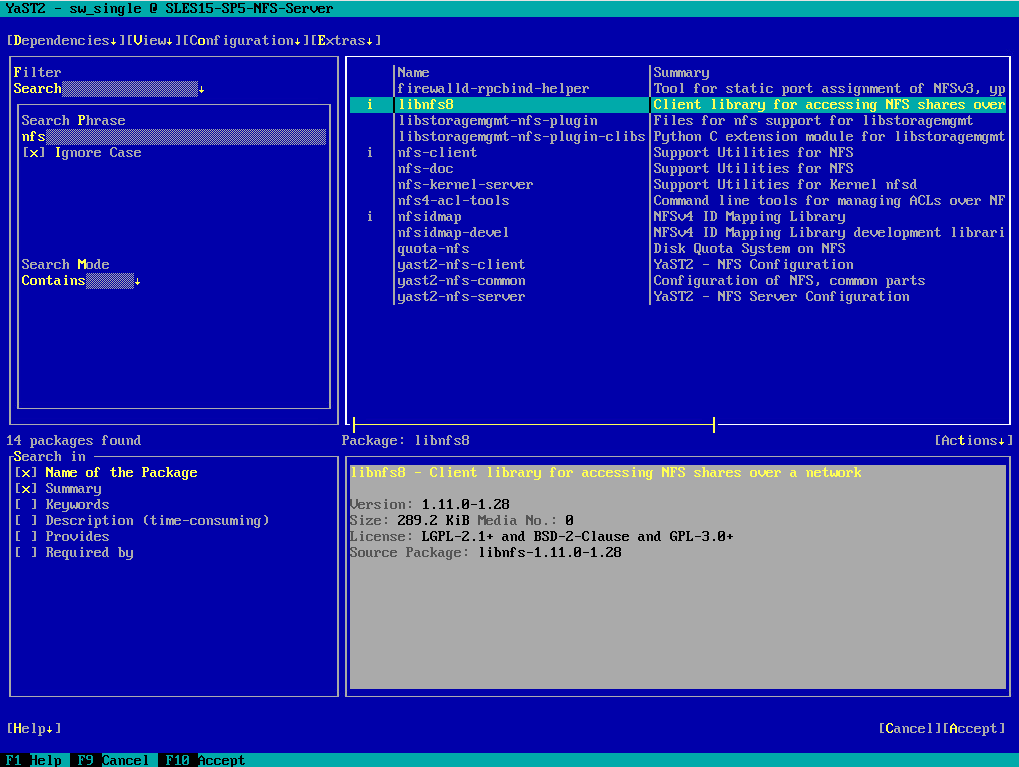

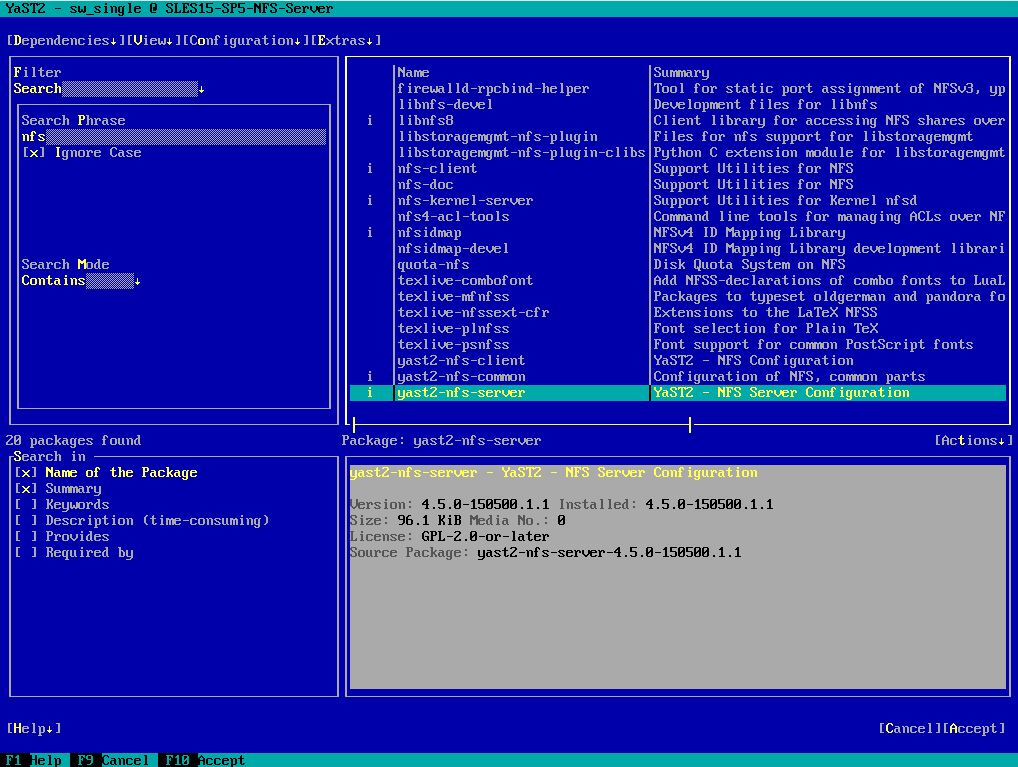

When I now search for nfs again, many more packages show up, including the nfs-kernel-server and yast2-nfs-server packages.

The nfs-kernel-server package provides the NFS Server. The yast2-nfs-server module is needed to configure the NFS Server later by using YaST.

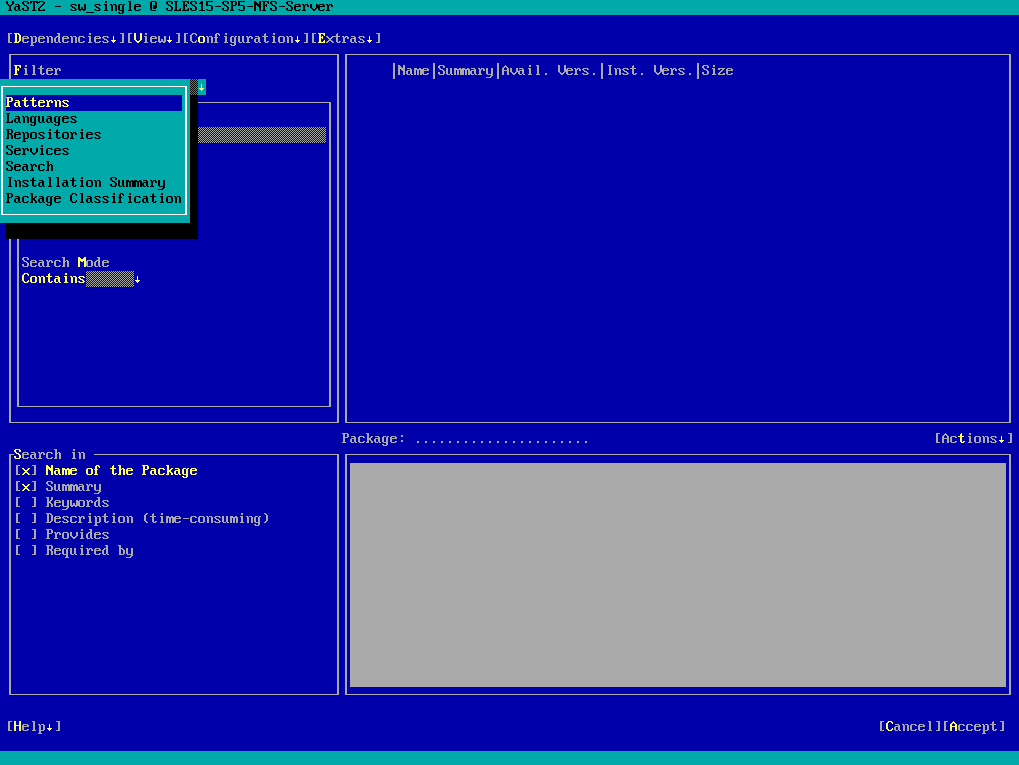

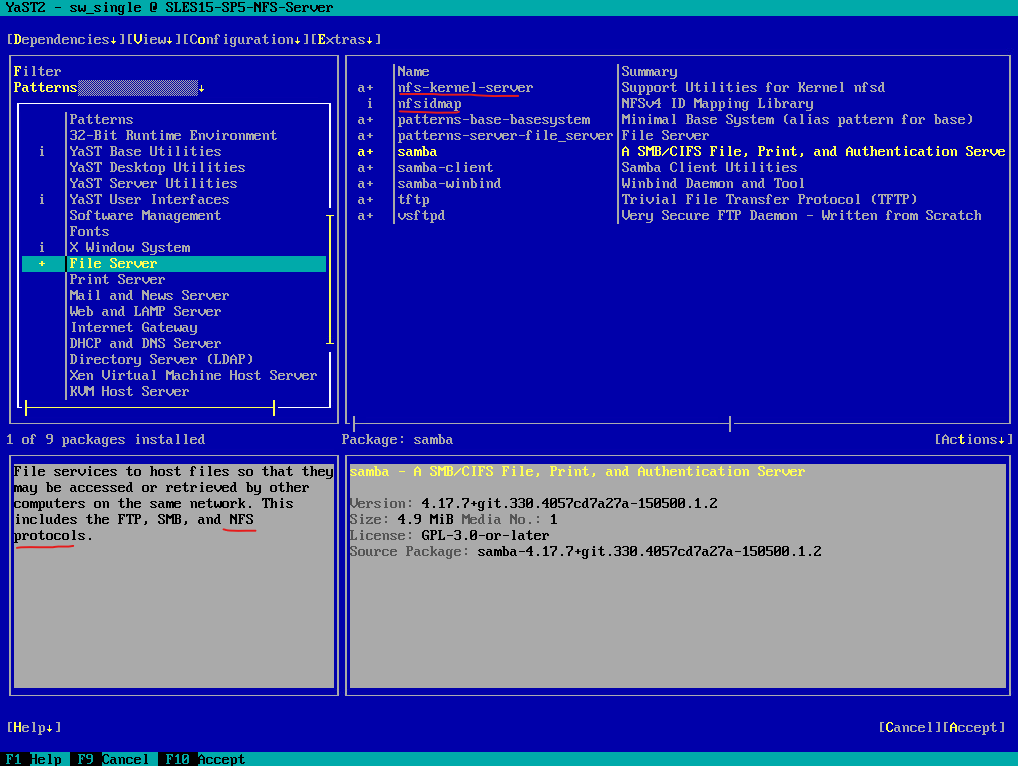

To install the packages we need for the NFS Server, first select Filters -> Patterns in YaST as shown below.

Then scroll down to the File Server pattern, enable it by pressing the space key, and click on Accept.

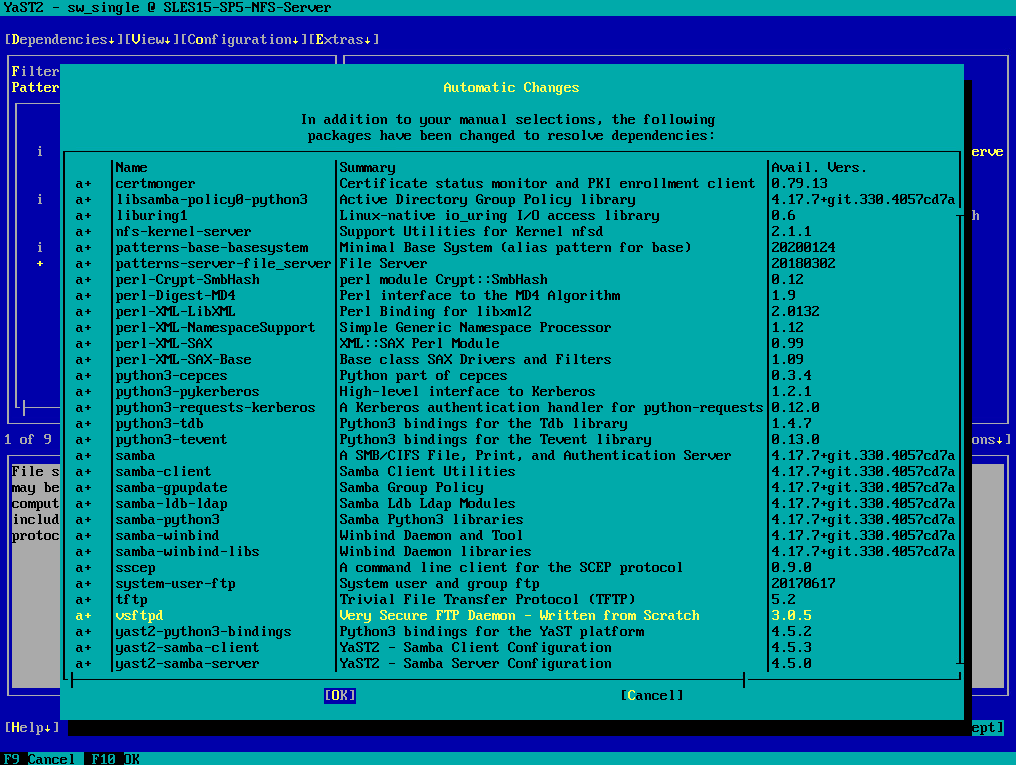

Click on OK to add further needed dependencies for the NFS Server package.

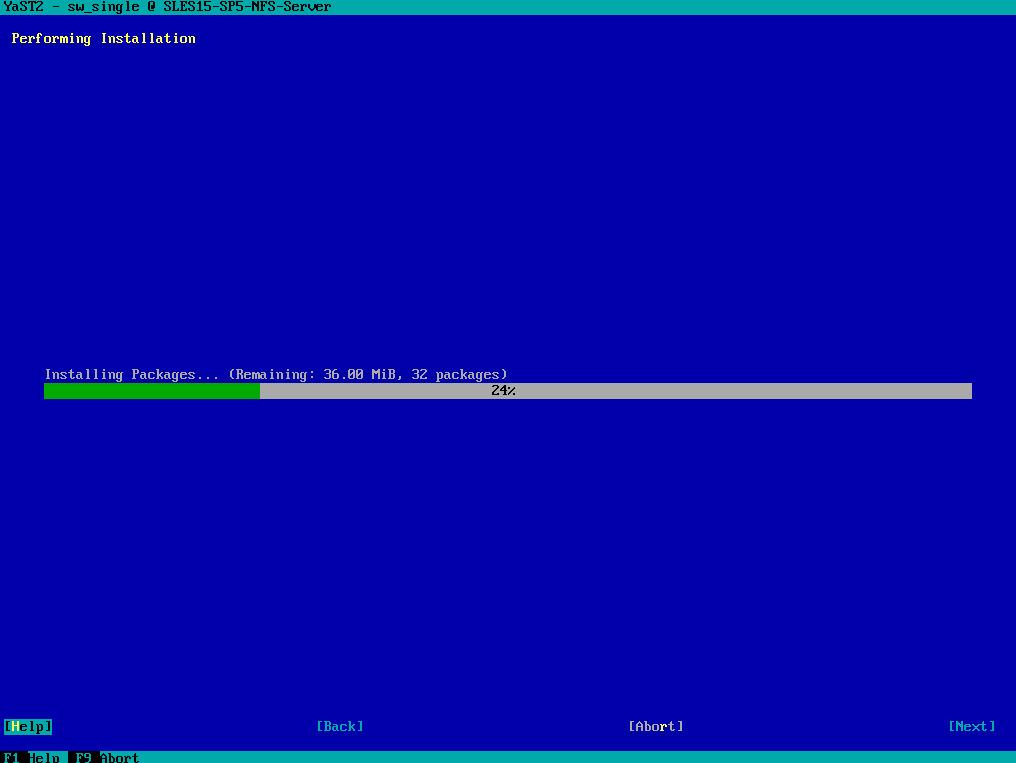

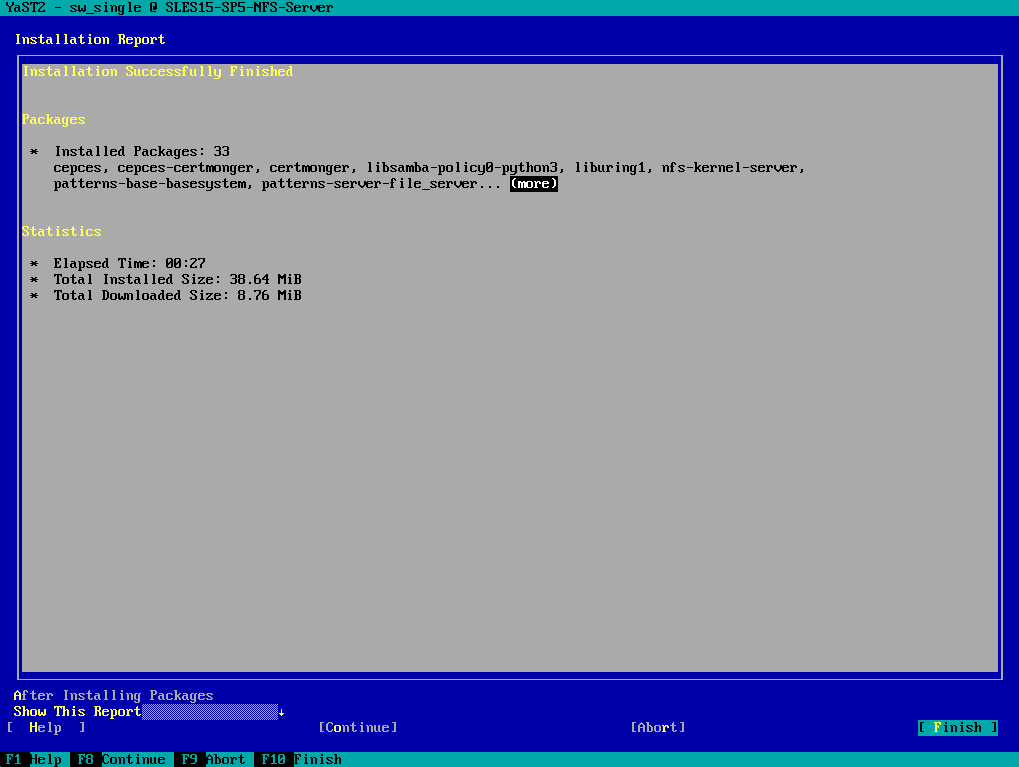

Click on Finish

The pattern does not include the YaST module for the NFS Server, which we need to configure the NFS Server later by using YaST. After the pattern installation is complete, install the module either in YaST or exit YaST and install it from the command line as shown next.

In YaST, search specifically for the package by entering nfs in the Search Phrase field. Then select the yast2-nfs-server package to install the YaST – NFS Server Configuration tool as shown below.

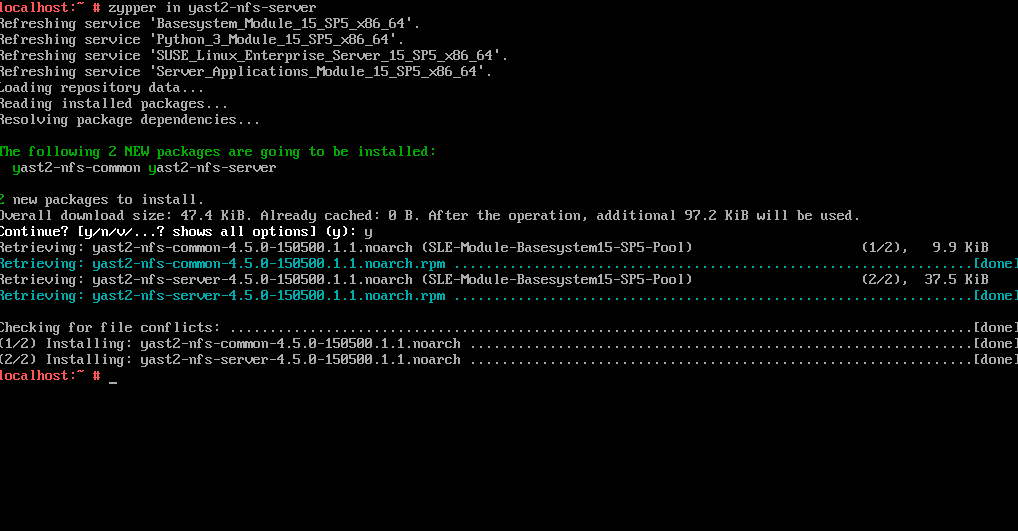

As mentioned, we can also use the command line to install the yast2-nfs-server package as shown below.

# zypper in yast2-nfs-server

Configure NFS Server

To configure our NFS Server we can use either YaST or the command line. When we need authentication we can also configure NFS with Kerberos.

Create Partition/Directory for the Export

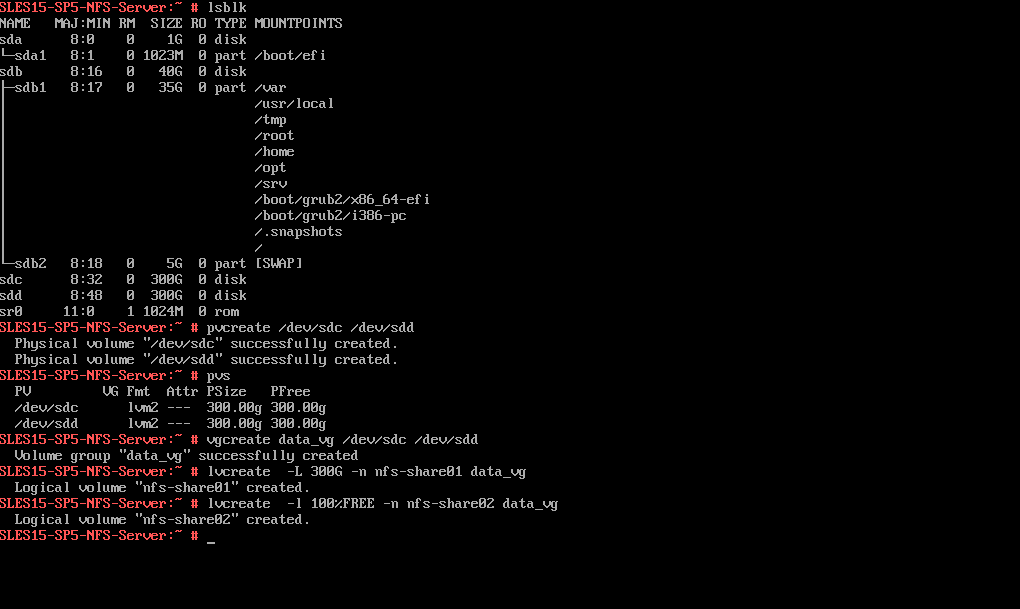

In the next section we export the file systems we want to share by NFS. For this, I will first create two new partitions and directories that I want to use as NFS Exports (shares).

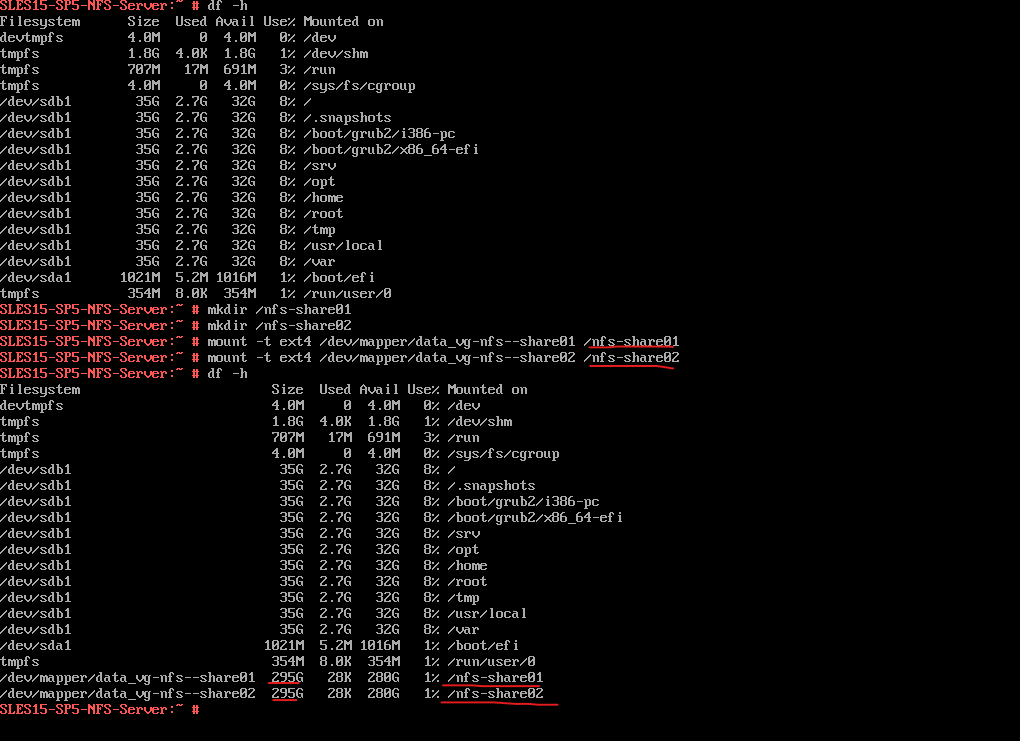

Below I first created a new LVM volume group and two LVM logical volumes by using two disks (physical volumes, PVs) and mounted them on the /nfs-share01 and /nfs-share02 directories. We also need to add them to the /etc/fstab file so that they are mounted every time the system boots.

You will find more about the Logical Volume Manager (LVM) and logical volumes (LVs) in my following post https://blog.matrixpost.net/vsphere-linux-virtual-machines-extend-disks-logical-volumes-and-the-file-system/.

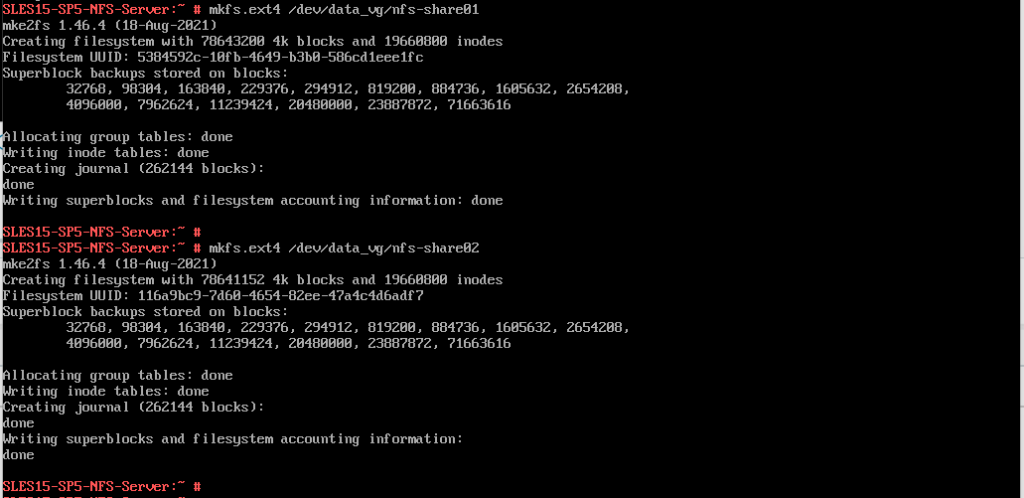

On both logical volumes I created the ext4 file system.

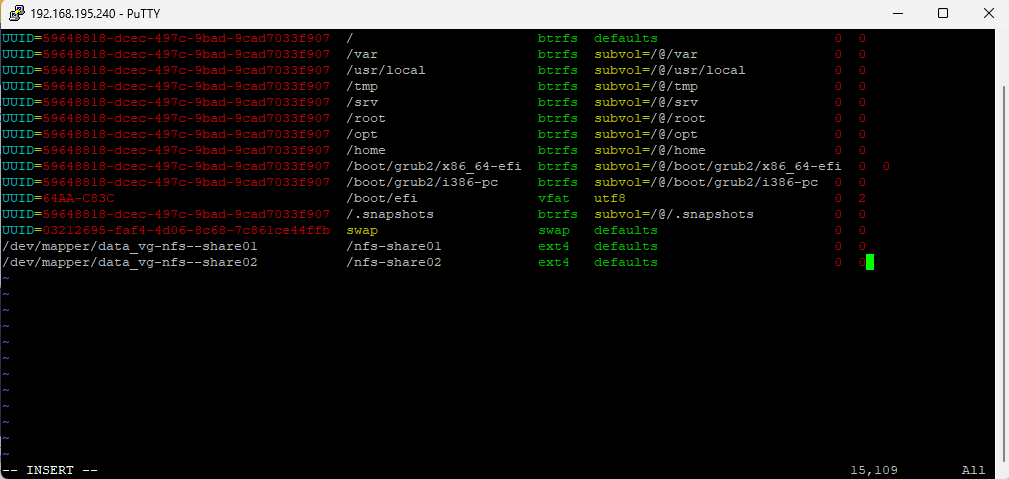

Finally, as mentioned, I will also add both logical volumes to the /etc/fstab file so that they are mounted every time the system boots.
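The steps from the screenshots above could be sketched on the command line roughly as follows. The disk names /dev/sdb and /dev/sdc, the volume group name vg_nfs, the logical volume names, and the 50/50 split are assumptions for illustration, not the values from my lab. The run helper is a hypothetical dry-run wrapper that only prints the commands unless DRY_RUN=0 is set.

```shell
# Hypothetical dry-run wrapper: print each command; execute it only
# when DRY_RUN=0 is set (which requires root privileges).
run() { echo "+ $*"; [ "${DRY_RUN:-1}" = 1 ] || "$@"; }

DISKS="/dev/sdb /dev/sdc"   # assumption: two empty disks
VG=vg_nfs                   # assumption: volume group name

# $DISKS is left unquoted on purpose so it splits into two arguments.
run pvcreate $DISKS
run vgcreate "$VG" $DISKS
run lvcreate -n lv_share01 -l 50%VG "$VG"
run lvcreate -n lv_share02 -l 100%FREE "$VG"

for n in 01 02; do
    run mkfs.ext4 "/dev/$VG/lv_share$n"
    run mkdir -p "/nfs-share$n"
    run mount "/dev/$VG/lv_share$n" "/nfs-share$n"
done

# Print the lines to append to /etc/fstab so that both volumes are
# mounted at boot.
fstab_lines() {
    for n in 01 02; do
        printf '/dev/%s/lv_share%s /nfs-share%s ext4 defaults 0 2\n' "$VG" "$n" "$n"
    done
}
fstab_lines
```

Printing the fstab lines instead of appending them directly leaves room to review them before editing /etc/fstab.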

!!! Note !!!

If you forget this and reboot the server without the logical volumes or partitions being mounted, the exported directories /nfs-share01 and /nfs-share02 will still be available on your NFS clients. However, they will not show the files and capacity of your logical volumes or partitions. Instead, the clients will only have access to the empty mount-point directories on your root partition, and will therefore only see and use the capacity of the root partition.
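To catch this situation early, one could check after a reboot whether a file system is actually mounted on each export directory. This is a minimal sketch; the helper name is_mounted is hypothetical, and the optional second argument exists only so the check can be tried against a sample mount table.

```shell
# Hypothetical helper: succeed only if a file system is mounted on the
# given directory. The mount table defaults to the kernel's current view.
is_mounted() {
    awk -v dir="$1" '$2 == dir { found = 1 } END { exit !found }' "${2:-/proc/self/mounts}"
}

for dir in /nfs-share01 /nfs-share02; do
    if is_mounted "$dir"; then
        echo "$dir: mounted"
    else
        echo "$dir: NOT mounted, clients would see the root partition"
    fi
done
```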

Export File System by using YaST

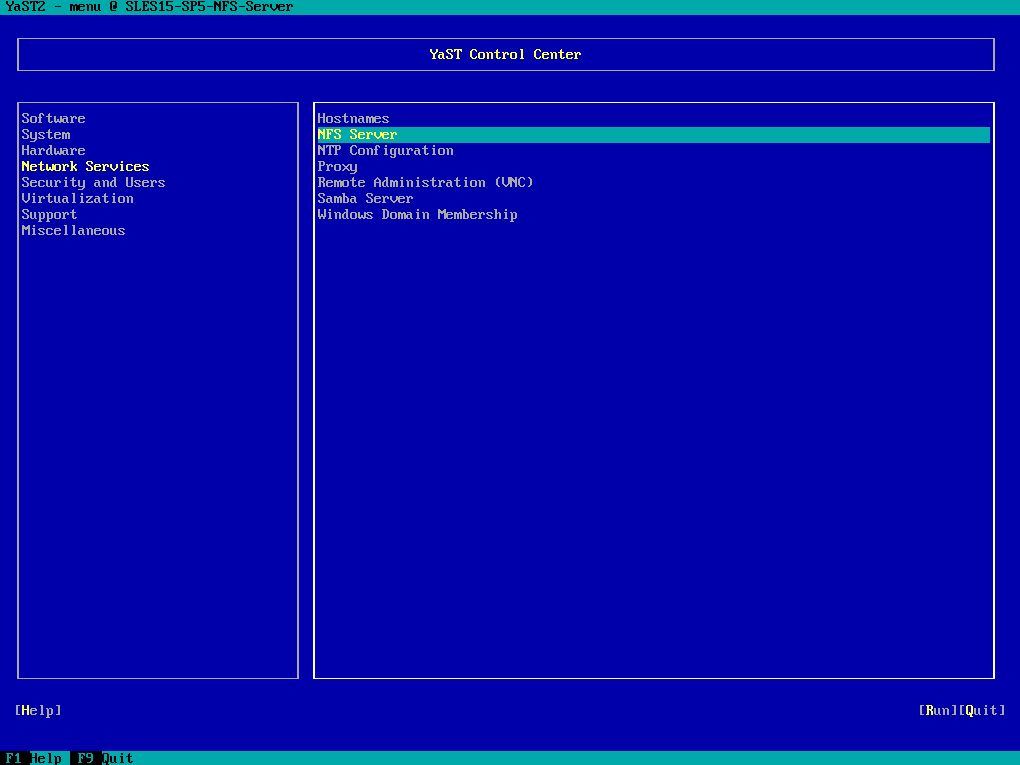

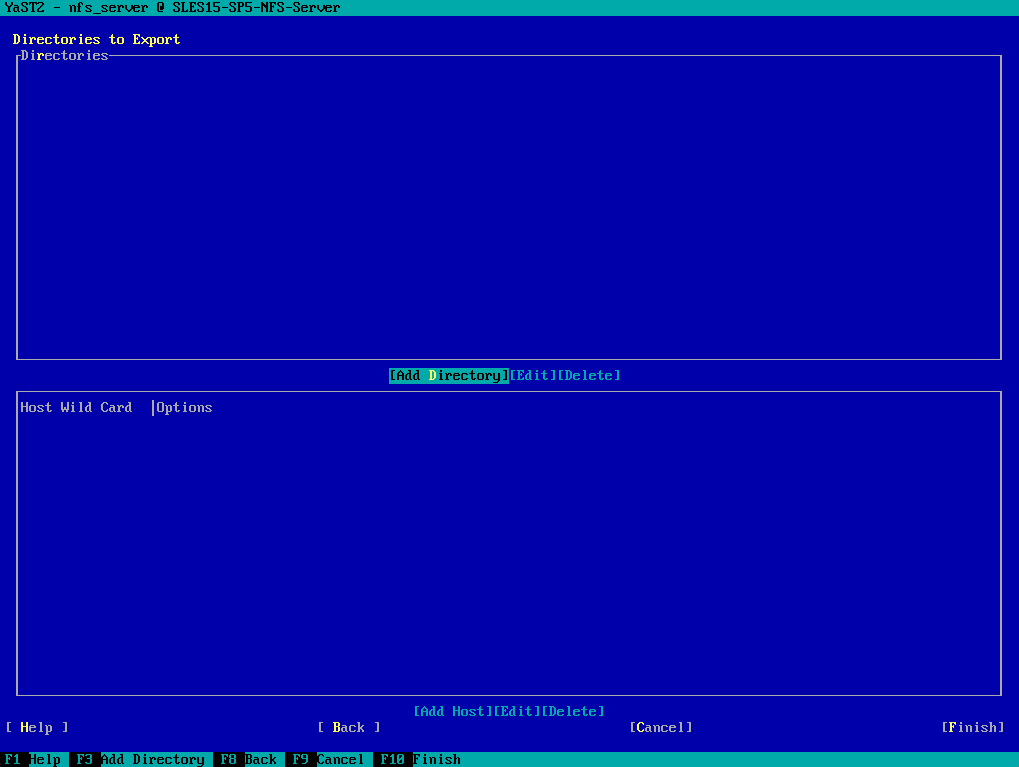

To export directories and files and grant access to all hosts, navigate in YaST to Network Services -> NFS Server.

Because we previously installed the yast2-nfs-server module, we can now configure the NFS Server directly in YaST.

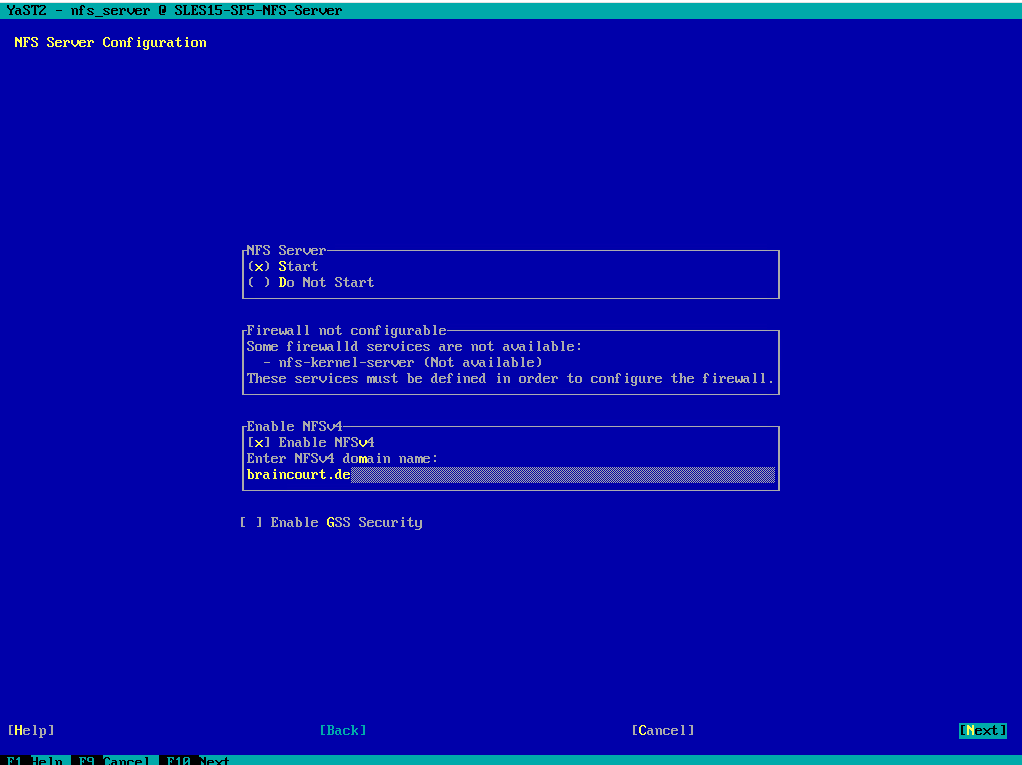

Enable the NFS Server by selecting Start below. By default NFSv4 is enabled. I will also enter the NFSv4 domain name for my on-premises lab environment, braincourt.de. When done, click on Next.

If firewalld is active on your system, configure it separately for NFS (see Section 19.5, “Operating an NFS server and clients behind a firewall”). YaST does not yet have complete support for firewalld, so ignore the “Firewall not configurable” message and continue.

If you deactivate NFSv4, YaST will only support NFSv3. For information about enabling NFSv2, see Note: NFSv2.

Note that the domain name needs to be configured on all NFSv4 clients as well. Only clients that share the same domain name as the server can access the server. The default domain name for server and clients is localdomain.

Source: https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-nfs.html
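Since the NFSv4 domain name has to match on the server and on all NFSv4 clients, it can be useful to read the configured value on each host and compare it. The following sketch assumes the domain is stored as a Domain = ... line in /etc/idmapd.conf; the helper name nfs4_domain is hypothetical.

```shell
# Hypothetical helper: print the value of the first active "Domain = ..."
# line. Commented-out lines are ignored. The file defaults to
# /etc/idmapd.conf.
nfs4_domain() {
    sed -n 's/^[[:space:]]*Domain[[:space:]]*=[[:space:]]*\([^[:space:]#]*\).*/\1/p' \
        "${1:-/etc/idmapd.conf}" | head -n 1
}
```

Running nfs4_domain on the server and on a client should print the same value, in my lab braincourt.de.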

You will find more about firewalld on SUSE Linux Enterprise Server in my following post https://blog.matrixpost.net/set-up-suse-linux-enterprise-server/#firewall.

NFSv4 listens on the well known TCP port 2049.

NFSv4 consolidates many functions, such as locking and mounting, into this single port. Therefore, unlike NFSv3, NFSv4 does not require the portmapper service or additional variable ports for those functions.

NFSv3 also listens on the well known TCP port 2049 for the NFS daemon and NFS protocol.

NFSv3 additionally uses TCP and UDP port 111 for the portmapper (rpcbind) service, which maps RPC program numbers to the network ports the services listen on. The ports for RPC services such as mountd, nlockmgr, rquotad, and statd are assigned dynamically and registered with the portmapper.

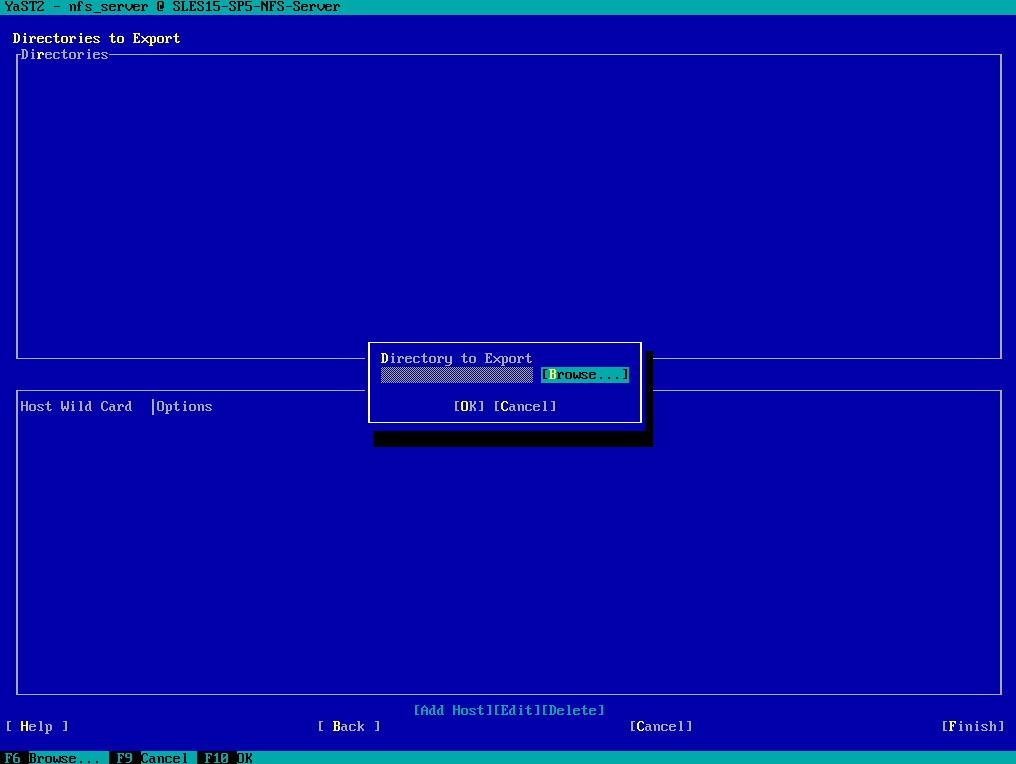

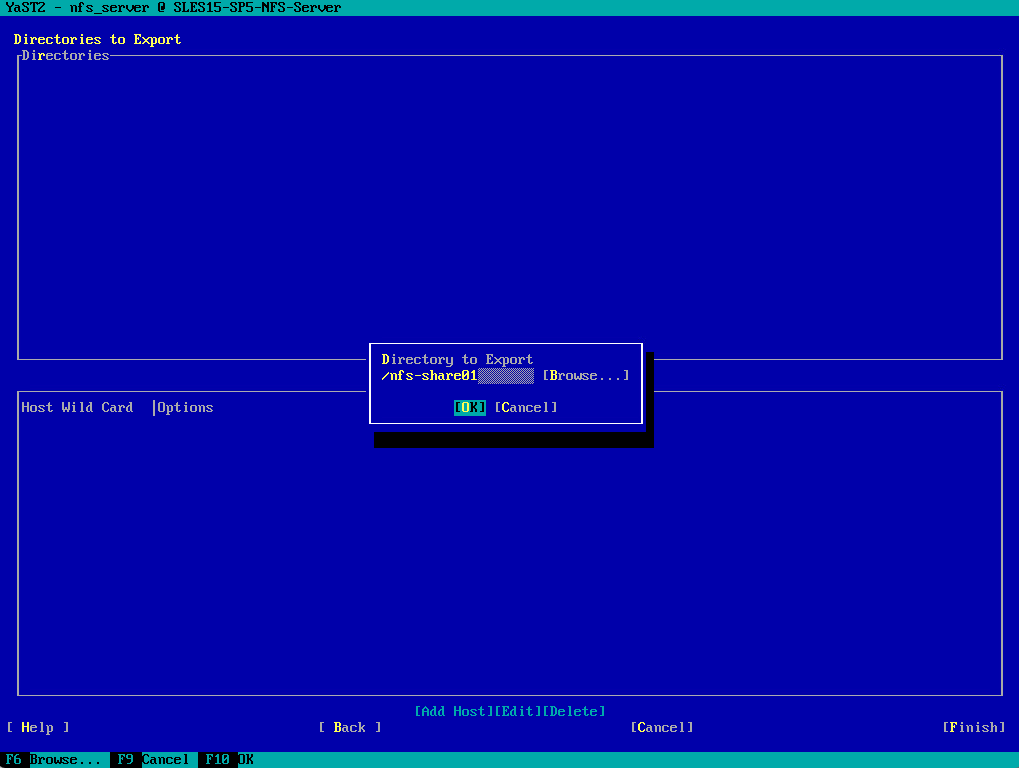

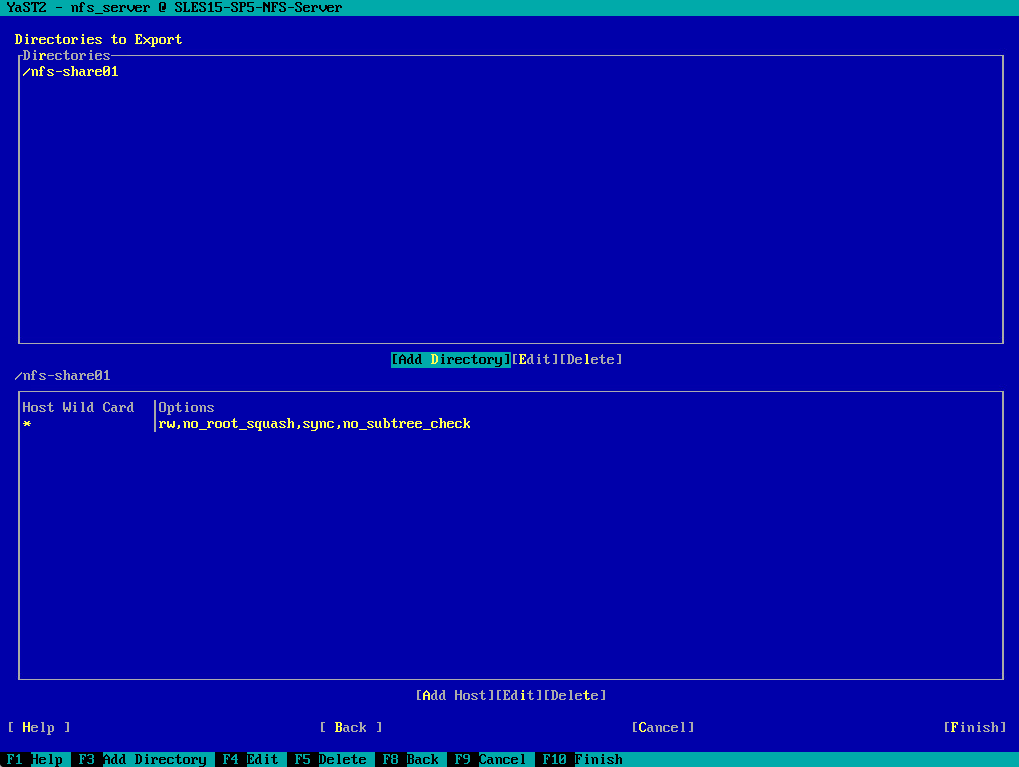

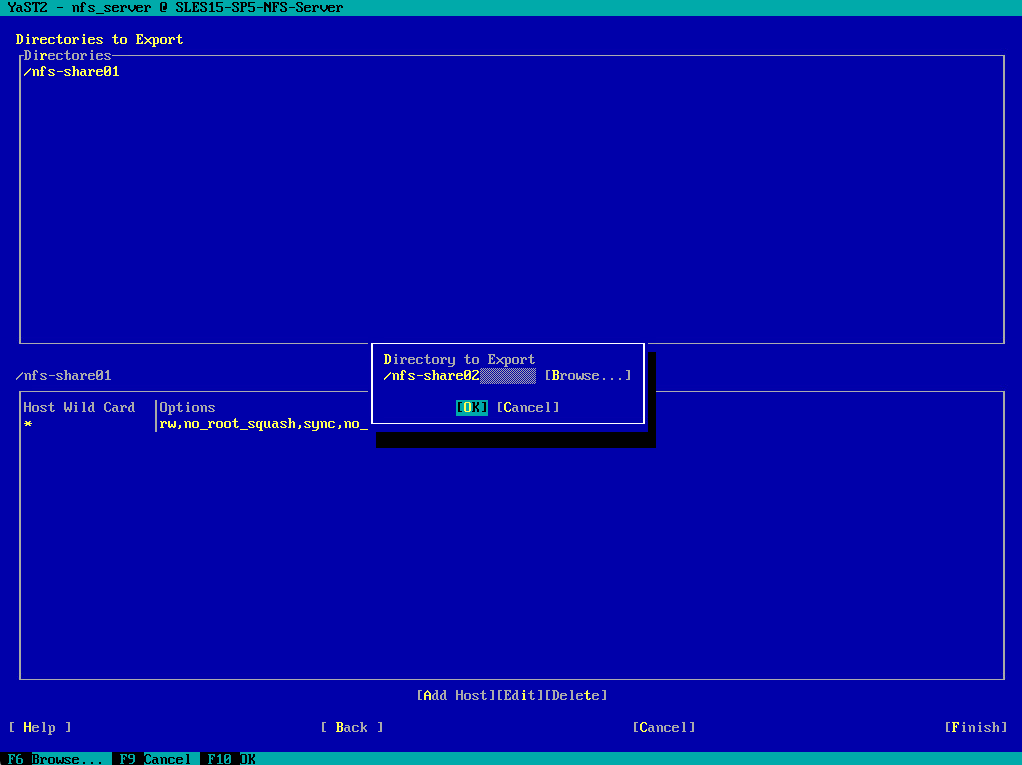

Below I will add my previously created directories /nfs-share01 and /nfs-share02 to finally export them. Click on Add Directory.

You can either enter the directories you want to export directly, or browse through the file system to select them, which I will do below.

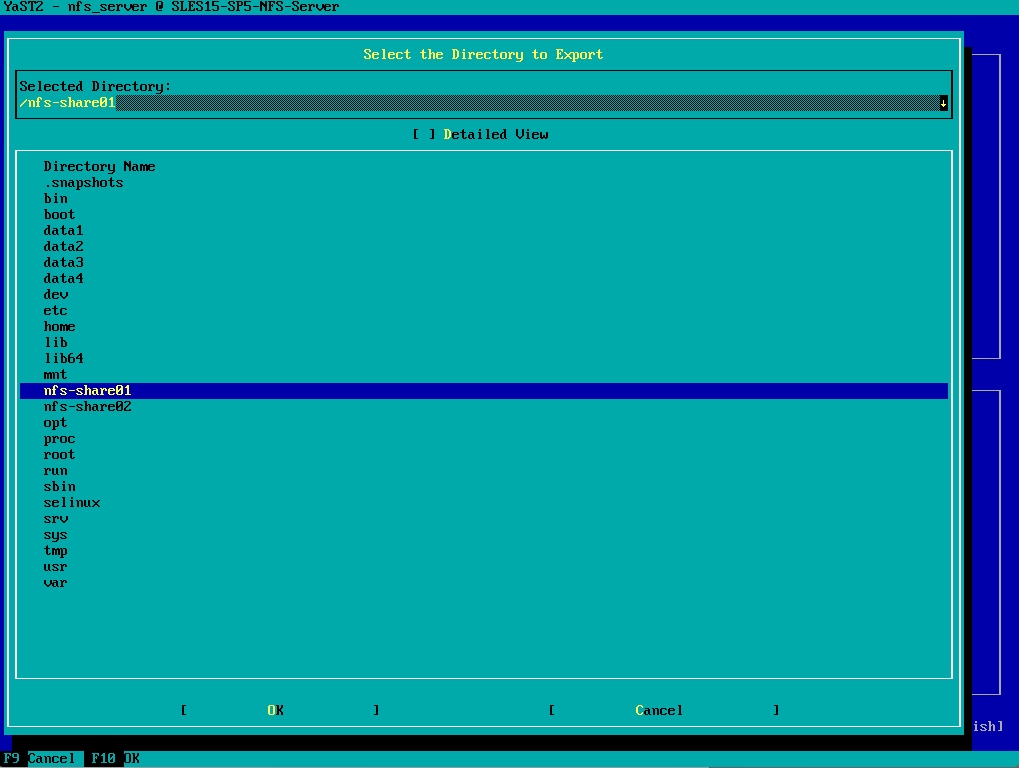

Here I will select my first directory /nfs-share01 I want to export.

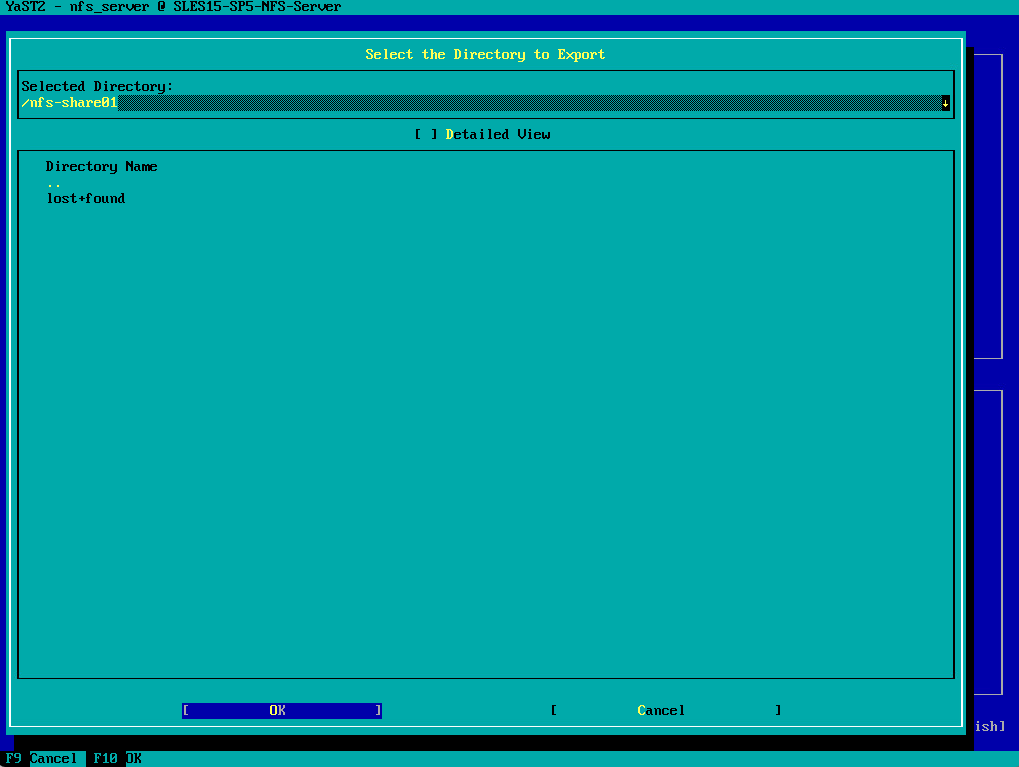

Click on OK to add it.

Click another time on OK.

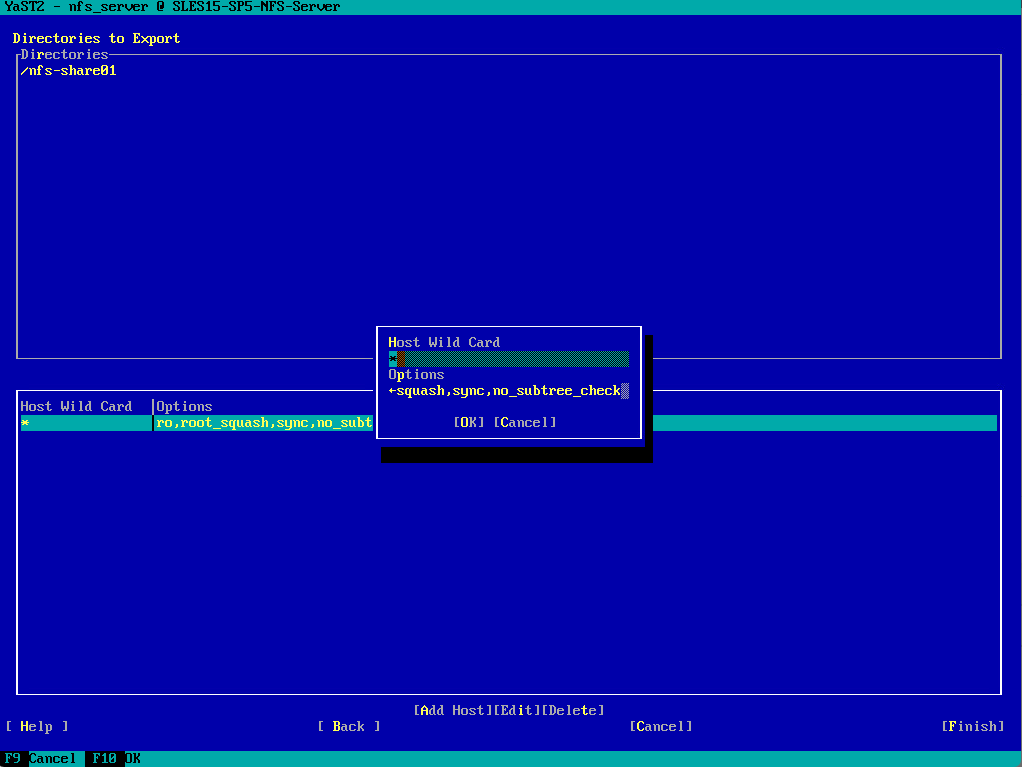

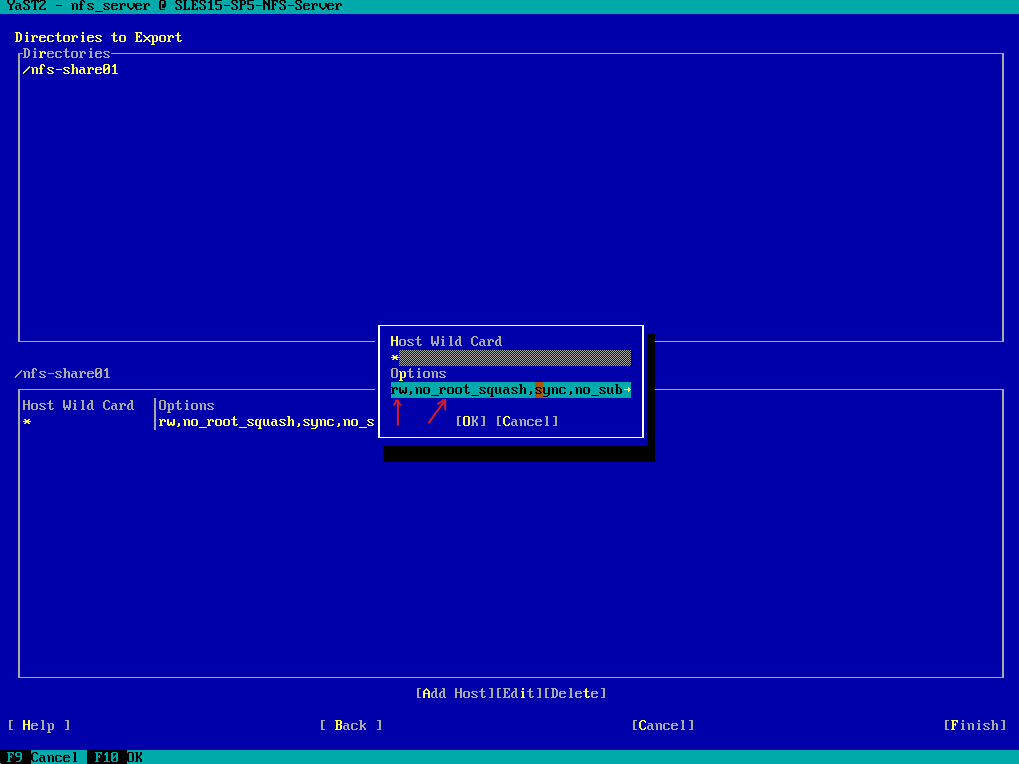

For the Host Wild Card dialog below I will leave the default settings to allow all hosts in my network to access the NFS Exports (shares) and finally click on OK to export it.

If you have not configured the allowed hosts already, another dialog for entering the client information and options pops up automatically. Enter the host wild card (usually you can leave the default settings as they are).

There are four possible types of host wild cards that can be set for each host: a single host (name or IP address), netgroups, wild cards (such as * indicating all machines can access the server), and IP networks.

Source: https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-nfs.html

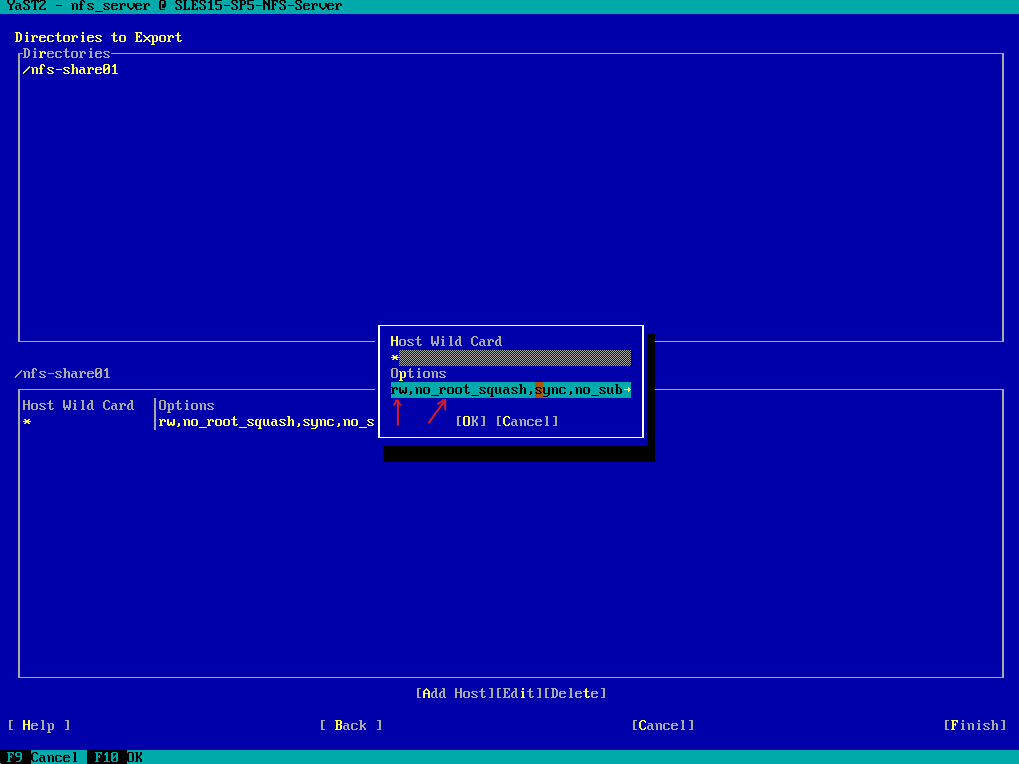

In the Options dialog below, we need to adjust the following two options, which by default deny write access to our exports. If you want to give the NFS Clients read-only access only, leave the default options.

ro -> change to rw | ro mounts the exported file system as read-only. To allow hosts to make changes to the file system, you have to specify the rw read-write option.

root_squash -> change to no_root_squash | root_squash prevents a remotely connected root user from having root privileges and maps it to the anonymous user instead (nobody on SUSE Linux Enterprise Server, nfsnobody on older Red Hat systems). This effectively “squashes” the power of the remote root user to the lowest local user, preventing unauthorized alteration of files on the remote server.

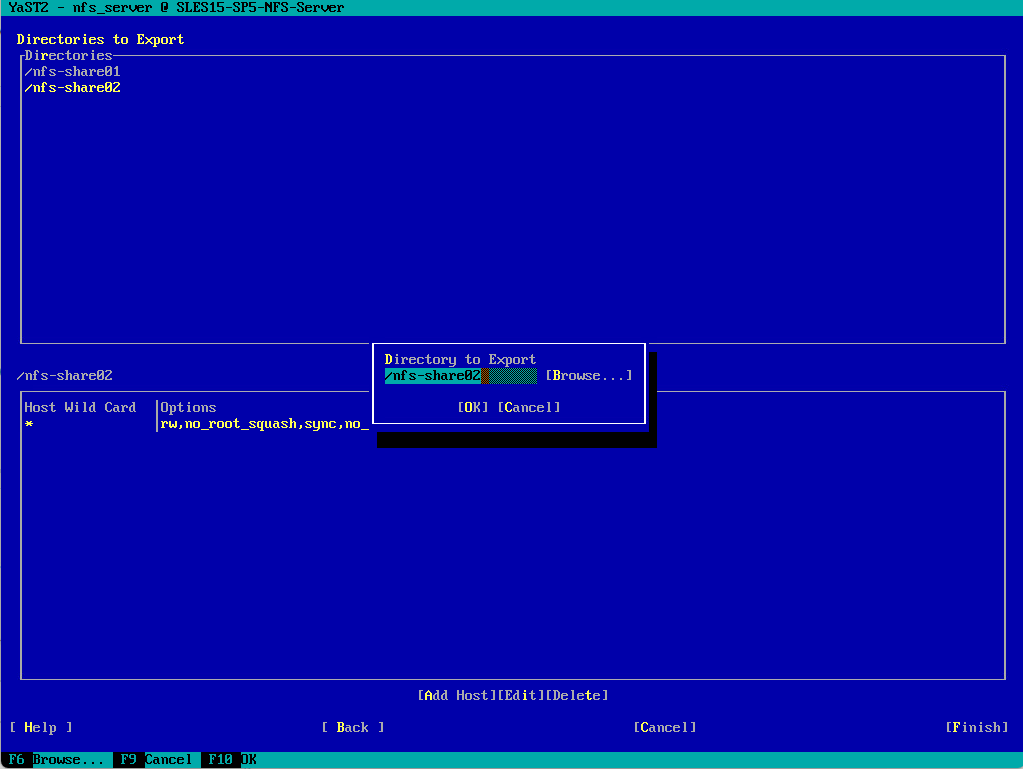

I will now add my second directory /nfs-share02 which I want to export.

This time to speed up things I will directly enter the directory /nfs-share02 into the input field and click on OK.

For the Host Wild Card and Options I will use the same as previously for the /nfs-share01 export.

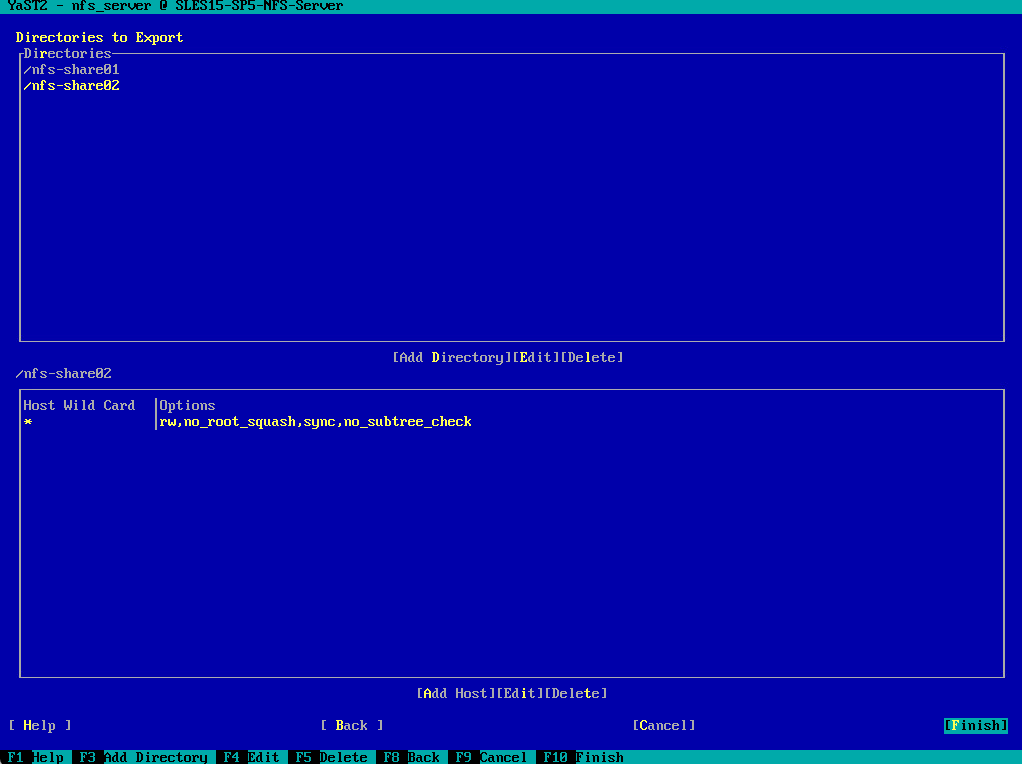

To finally export these directories, I will click on Finish.

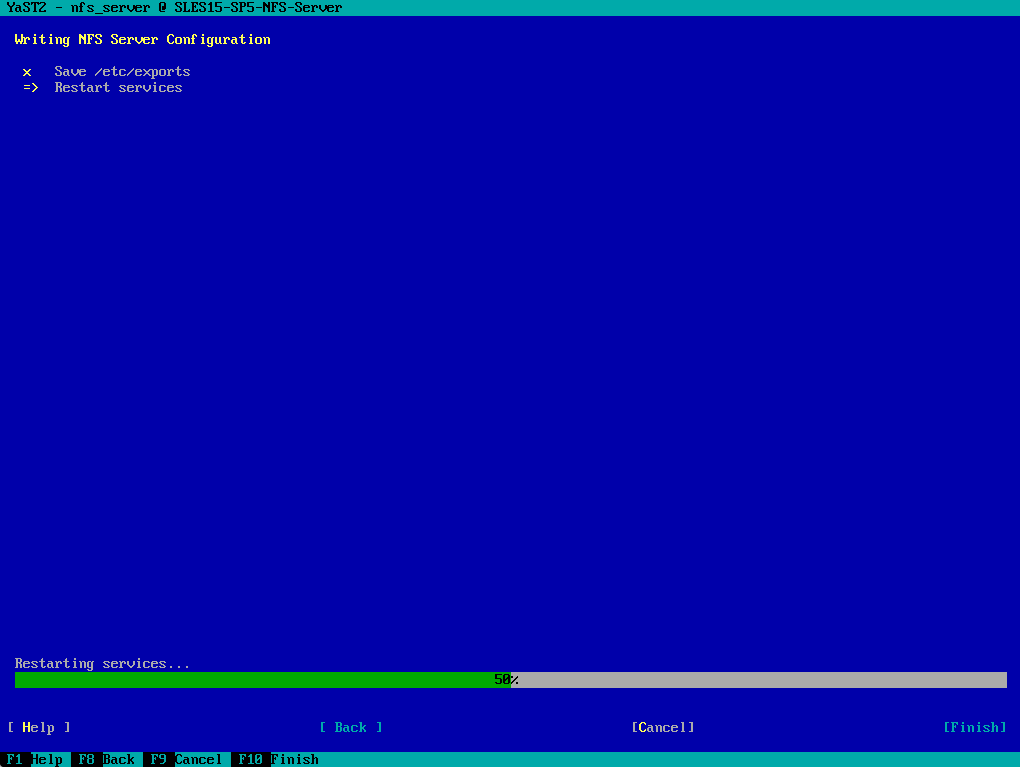

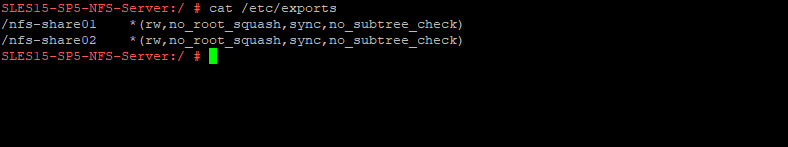

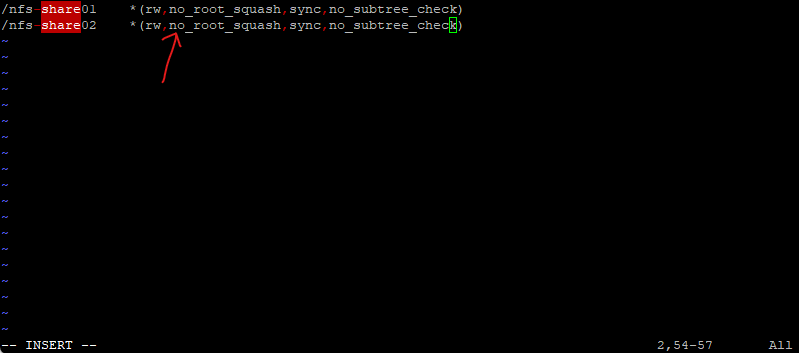

As you can see below, our exports are configured in the /etc/exports file.

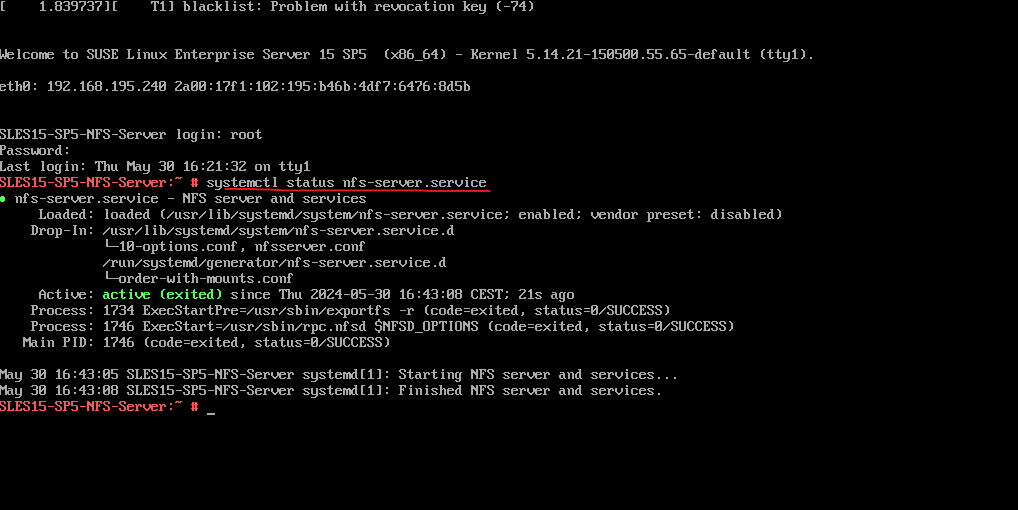

Below is one last check, before importing the file systems (exports) on other hosts (so-called NFS clients), to see whether the NFS Server is already running. Looks good!

# systemctl status nfs-server.service

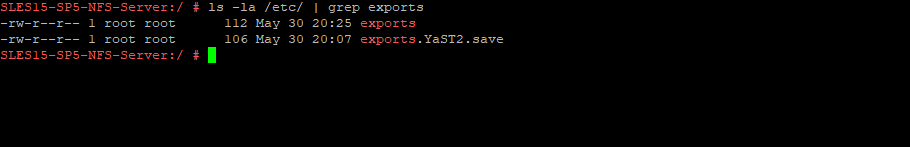

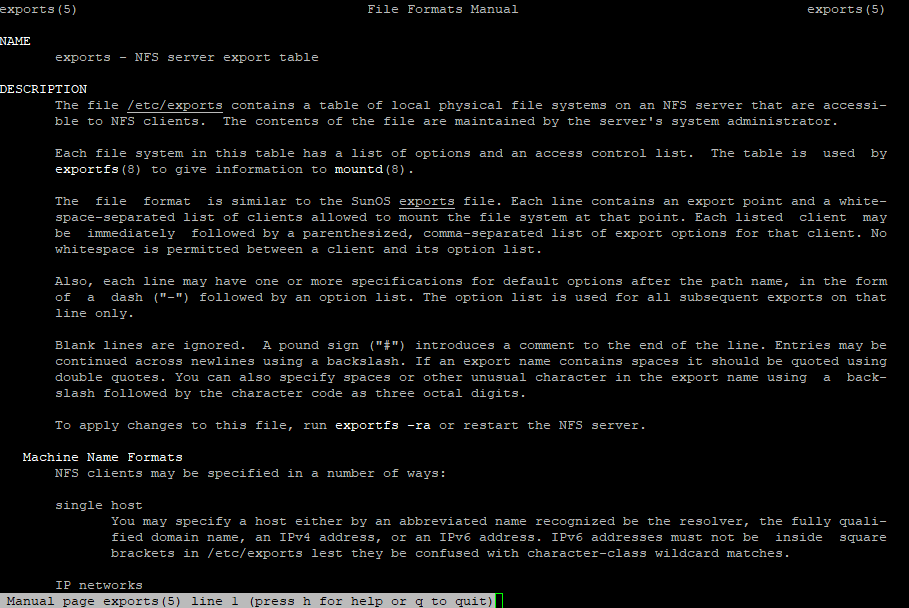

Export File System by editing /etc/exports

We can also export the file system by manually editing the /etc/exports configuration file.

Below you can see both exports (shares) we previously exported by using YaST.

The /etc/exports file controls which file systems are exported to remote hosts and specifies options.

The file contains a list of entries. Each entry indicates a directory that is shared and how it is shared. A typical entry consists of:

/SHARED/DIRECTORY HOST(OPTION_LIST)

wildcard *: exports to all clients on the network

*.department1.example.com: only exports to clients on the *.department1.example.com domain

192.168.1.0/24: only exports to clients with IP addresses in the range of 192.168.1.0/24

192.168.1.2: only exports to the machine with the IP address 192.168.1.2

Source: https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-nfs.html#sec-nfs-export-manual

The format of the /etc/exports file is very precise, particularly in regards to use of the space character. Remember to always separate exported file systems from hosts and hosts from one another with a space character. However, there should be no other space characters in the file except on comment lines.

# vi /etc/exports
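A common mistake with this format is a space between the host and its option list: the options then no longer belong to that host. The following is only a rough check under that assumption, not a full parser of the exports syntax. The helper name lint_exports is hypothetical.

```shell
# Hypothetical helper: report non-comment lines in which whitespace
# precedes an opening parenthesis. Returns non-zero if any line is flagged.
# The file defaults to /etc/exports.
lint_exports() {
    awk '!/^[ \t]*#/ && /[ \t]\(/ {
             printf "line %d: whitespace before \"(\": %s\n", NR, $0
             bad = 1
         }
         END { exit bad }' "${1:-/etc/exports}"
}
```

An entry such as /nfs-share01 *(rw,no_root_squash) passes, while /nfs-share01 * (rw,no_root_squash) would be reported.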

Default options

- ro -> The exported file system is read-only. Remote hosts cannot change the data shared on the file system. To allow hosts to make changes to the file system (that is, read and write), specify the rw option.

- sync -> The NFS server will not reply to requests before changes made by previous requests are written to disk. To enable asynchronous writes instead, specify the option async.

- wdelay -> The NFS server will delay writing to the disk if it suspects another write request is imminent. This can improve performance as it reduces the number of times the disk must be accessed by separate write commands, thereby reducing write overhead. To disable this, specify the no_wdelay option, which is available only if the default sync option is also specified.

- root_squash -> This prevents root users connected remotely (as opposed to locally) from having root privileges; instead, the NFS server assigns them the user ID nobody. This effectively “squashes” the power of the remote root user to the lowest local user, preventing possible unauthorized writes on the remote server. To disable root squashing, specify the no_root_squash option.

To squash every remote user (including root), use the all_squash option. To specify the user and group IDs that the NFS server should assign to remote users from a particular host, use the anonuid and anongid options, respectively, as in:

export host(anonuid=uid,anongid=gid)

Here, uid and gid are user ID number and group ID number, respectively. The anonuid and anongid options enable you to create a special user and group account for remote NFS users to share.
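As an illustration of the entry format with these options, the sketch below composes one /etc/exports line. The helper export_entry is hypothetical, and the network 192.168.195.0/24 as well as the UID and GID 1001 are assumed example values, not settings from my server.

```shell
# Hypothetical helper: compose one /etc/exports entry.
# Directory and host are separated by a single space; the option list
# follows the host directly, without a space.
export_entry() {
    printf '%s %s(%s)\n' "$1" "$2" "$3"
}

# Map every remote user to one dedicated local account (assumed IDs).
export_entry /nfs-share01 192.168.195.0/24 rw,sync,all_squash,anonuid=1001,anongid=1001
```

The printed line can be reviewed and then added to /etc/exports.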

You will find more about all options in the exports man page.

# man exports

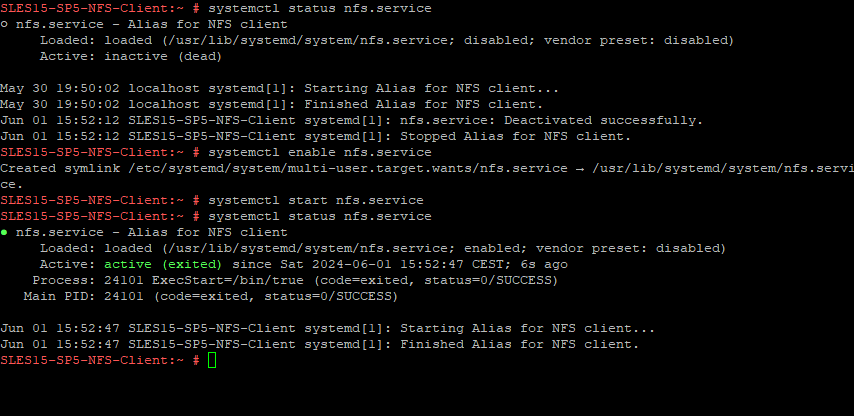

Importing File System on Linux

Now I want to access the file systems (exports or shares) that we previously exported on the NFS Server from other hosts, the so-called NFS Clients.

On these NFS Clients we first need to enable and start the NFS client daemon (service) by executing the following commands.

# systemctl enable nfs.service

# systemctl start nfs.service

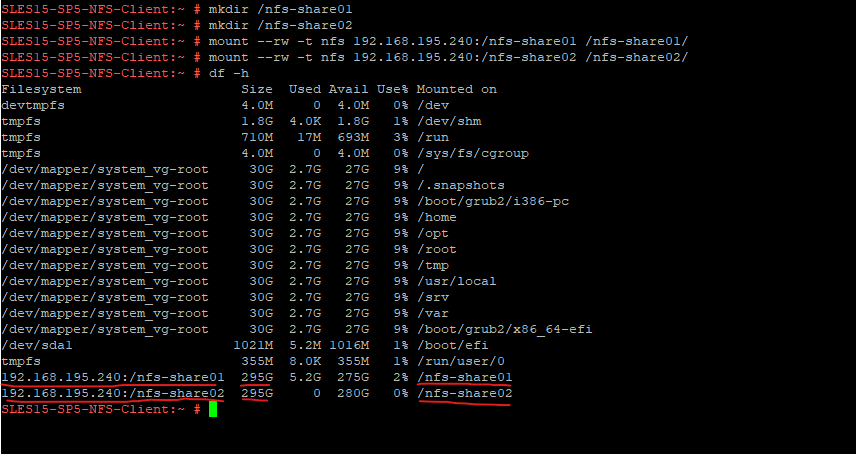

Then we need to mount the NFS exports by executing the following commands. I will first create two new directories to use as mount points for the exports.

# mkdir /nfs-share01

# mkdir /nfs-share02

# mount -t nfs <FQDN or IP NFS Server>:/<exported directory> /<mount point>

# mount -t nfs 192.168.195.240:/nfs-share01 /nfs-share01

# mount -t nfs 192.168.195.240:/nfs-share02 /nfs-share02

-t flag -> Specifies the file system type to mount (here nfs)

-w, --rw, --read-write flag -> Mounts the file system read-write (this is the default, so the flag can be omitted)
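To verify on the client that the exports are really mounted over NFS, the kernel's mount table can be filtered for NFS file system types. A minimal sketch; the helper name nfs_mounts is hypothetical, and the optional argument only serves to run it against a sample file.

```shell
# Hypothetical helper: list source, mount point, and type of all mounts
# whose file system type is nfs or nfs4.
nfs_mounts() {
    awk '$3 == "nfs" || $3 == "nfs4" { print $1, $2, $3 }' "${1:-/proc/self/mounts}"
}
```

After the mount commands above, nfs_mounts should list one line for /nfs-share01 and one for /nfs-share02.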

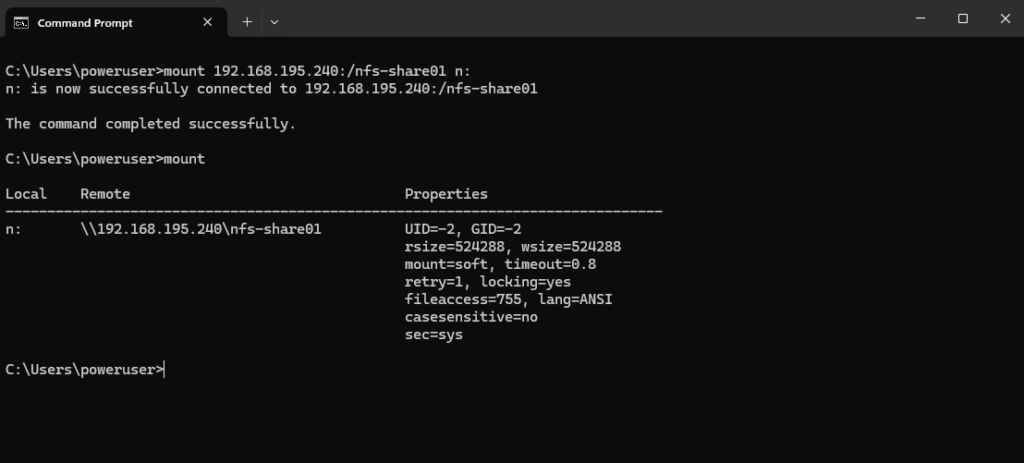

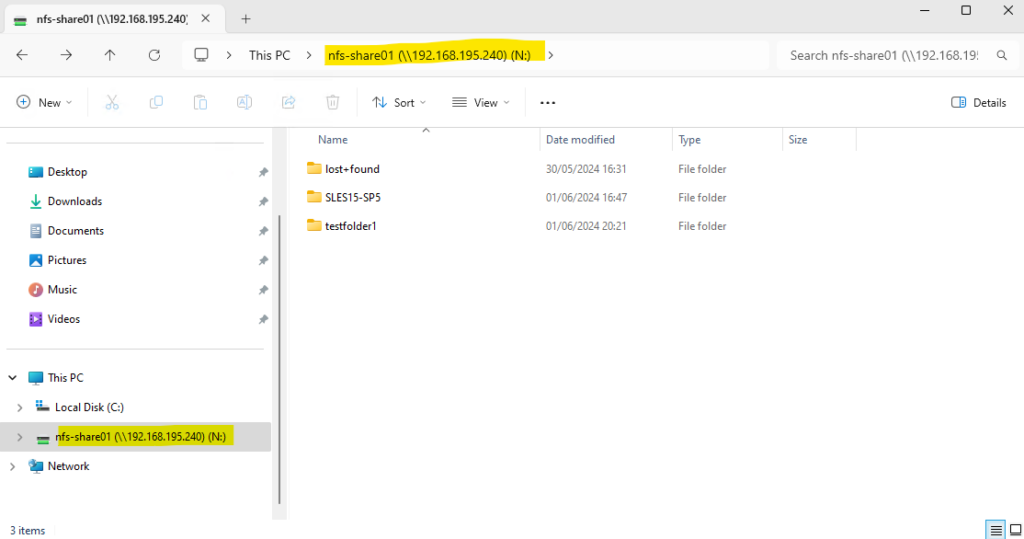

Importing File System on Windows

We can also import the file system exported by a Linux NFS Server on Windows.

The local file system on my SUSE Linux Enterprise Server 15 NFS Server is ext4, but because the Network File System (NFS) doesn’t care about the underlying file system type, we can also access the exported file system from Windows by using its NFS Client.

To learn how to install Client for NFS on Windows, see my following post.

To mount the share /nfs-share01 exported by the NFS Server, we have to execute the following command in the Windows Command Prompt.

> mount <IP or FQDN NFS Server>:/<share> <drive>:

> mount 192.168.195.240:/nfs-share01 n:

If you have mounted the file system successfully but it doesn’t show up in File Explorer as below, you probably executed the mount command in an elevated Command Prompt (Run as Administrator) while UAC is configured to Prompt for credentials.

To solve this issue, unmount the exported file system and mount it again by using a non-elevated Command Prompt.

The file explorer will run with normal user privileges.

When UAC is enabled, the system creates two logon sessions at user logon. Both logon sessions are linked to one another. One session represents the user during an elevated session, and the other session where you run under least user rights.

When drive mappings are created, the system creates symbolic link objects (DosDevices) that associate the drive letters to the UNC paths. These objects are specific for a logon session and are not shared between logon sessions.

When the UAC policy is configured to Prompt for credentials, a new logon session is created in addition to the existing two linked logon sessions. Previously created symbolic links that represent the drive mappings will be unavailable in the new logon session.

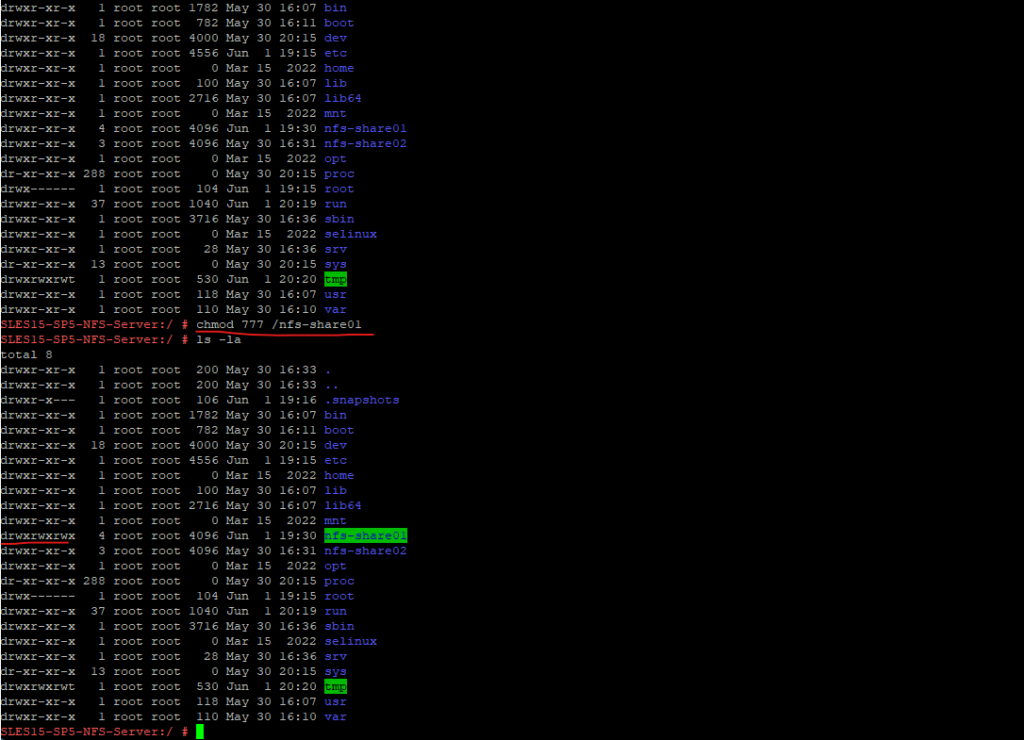

Also remember to configure the local file permissions on the NFS Server for the exported file system (directory). Below, for testing purposes, I will grant access to everyone.

The more restrictive of the NFS export (share) permissions and the local file system permissions (here ext4) wins.

You will see the same behavior when using a Windows NFS Server, as shown in my following post.

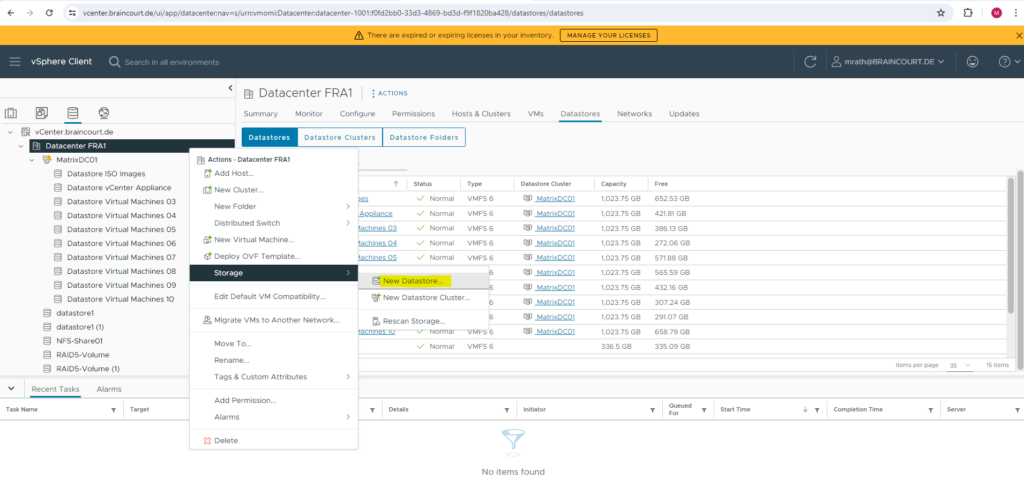

Importing File Systems on vSphere

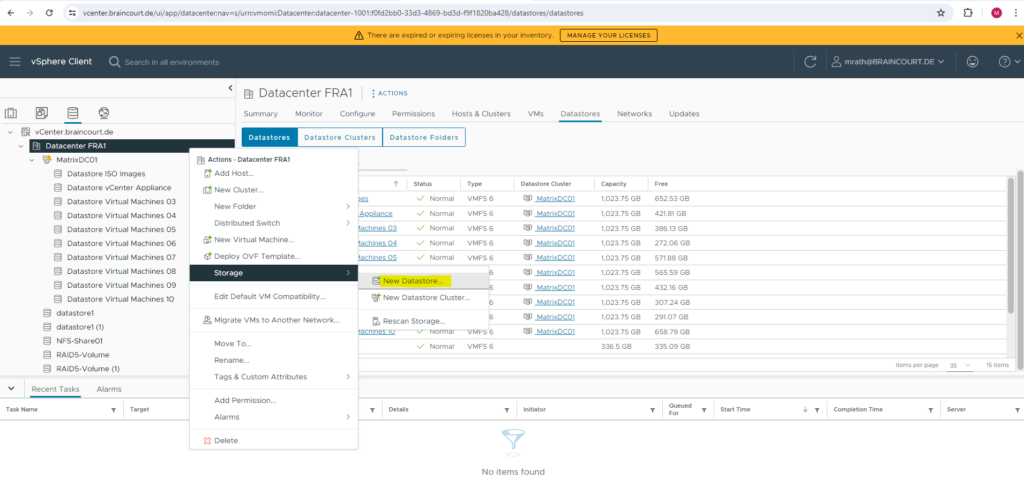

Below I will use the NFS Exports (shares) to create a new NFS datastore in my vSphere lab environment.

The significant presence of Network Filesystem Storage (NFS) in the datacenter today has led to many people deploying virtualization environments with Network Attached Storage (NAS) shared storage resources.

Network-attached storage typically provides access to files using network file sharing protocols such as NFS, SMB, or AFP.

Running vSphere on NFS is a very viable option for many virtualization deployments as it offers strong performance and stability if configured correctly. The capabilities of VMware vSphere on NFS are very similar to VMware vSphere on block-based storage. VMware offers support for almost all features and functions on NFS – as it does for vSphere on SAN.

Source: https://core.vmware.com/resource/best-practices-running-nfs-vmware-vsphere

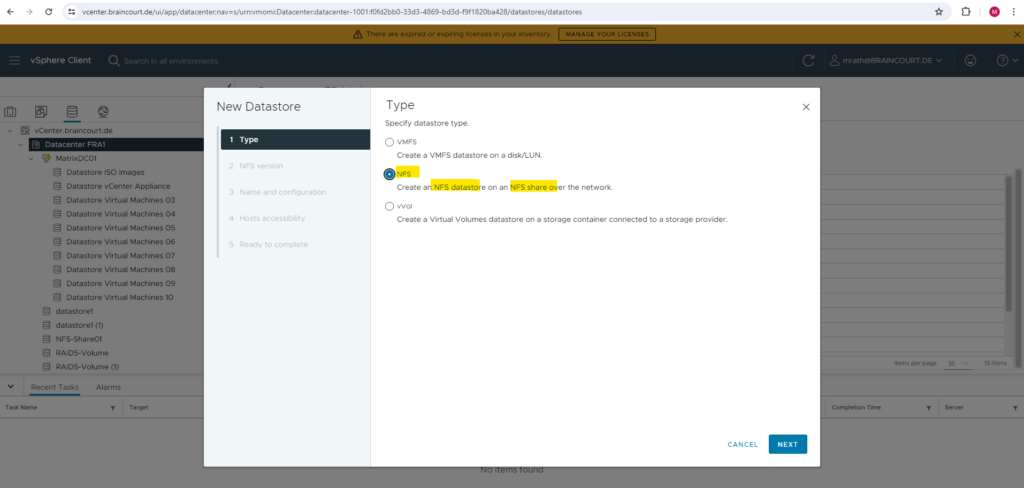

To create a new NFS datastore in vSphere, right click on your datacenter and select Storage -> New Datastore … as shown below.

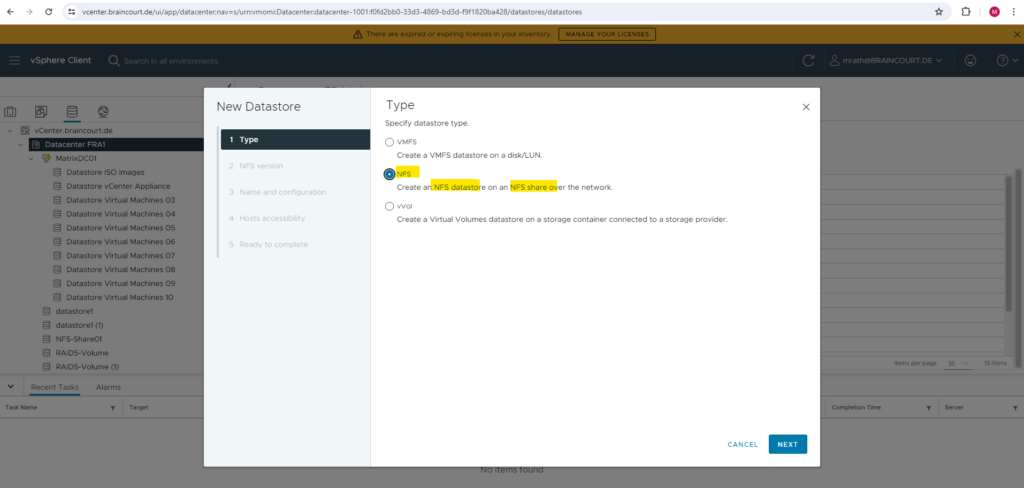

Select NFS for the datastore type.

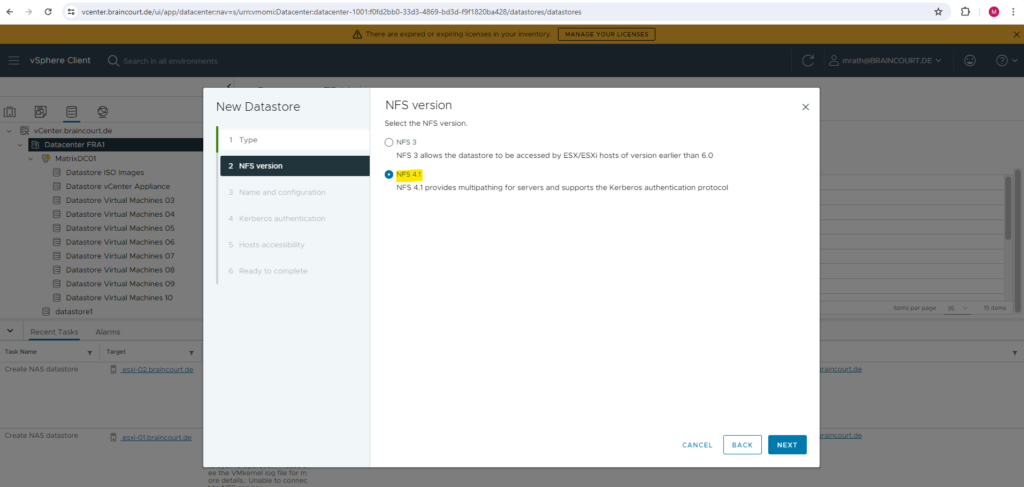

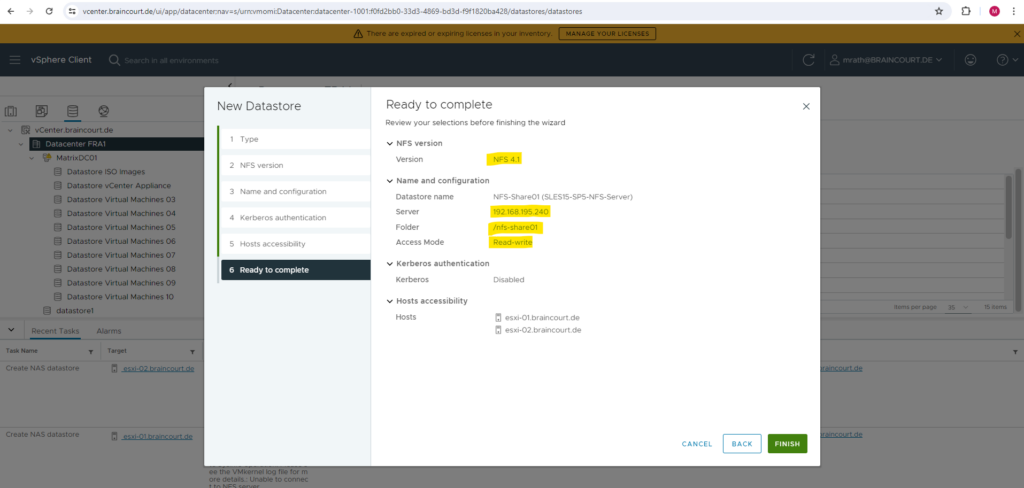

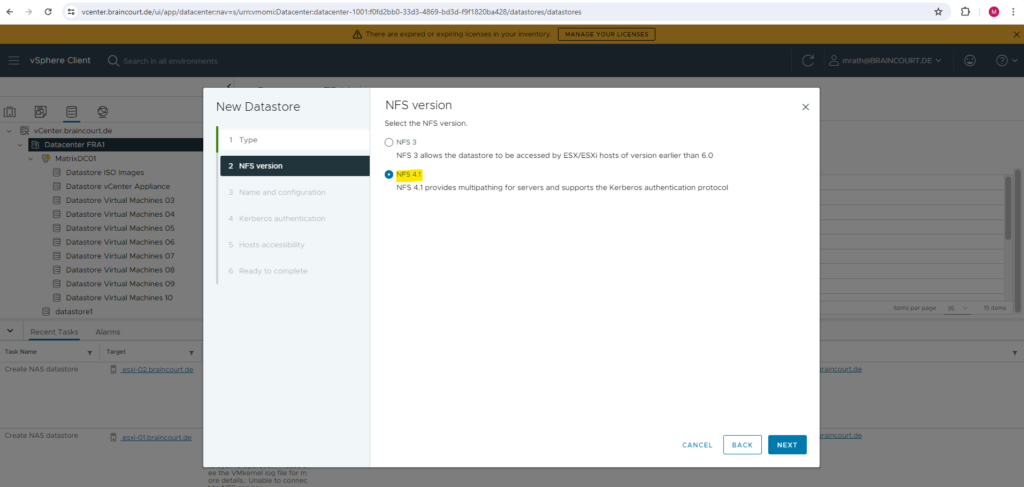

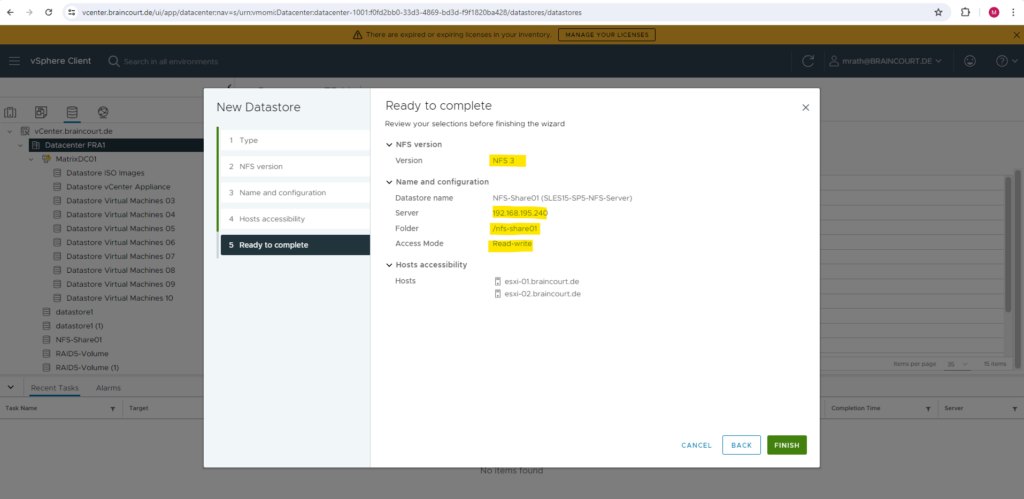

Because we previously set up an NFSv4 server, we need to select NFS 4.1 as the NFS version below.

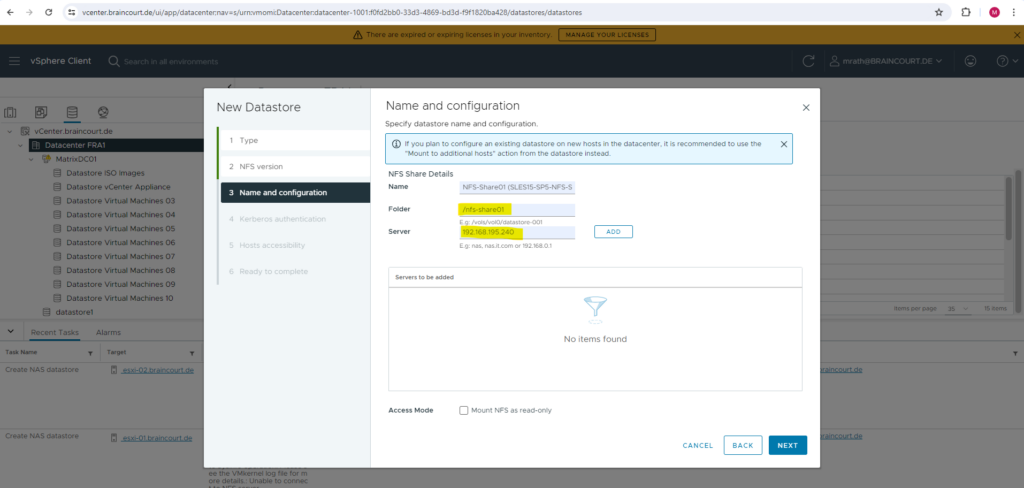

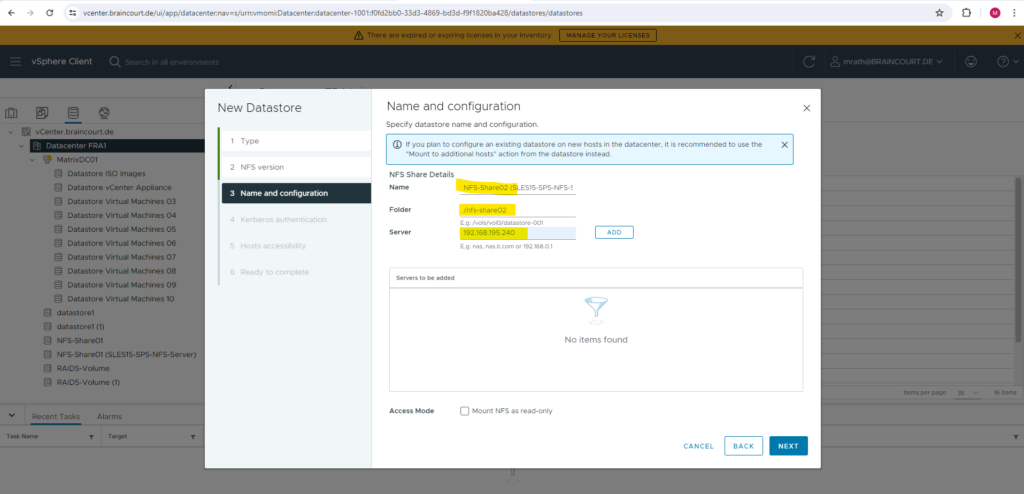

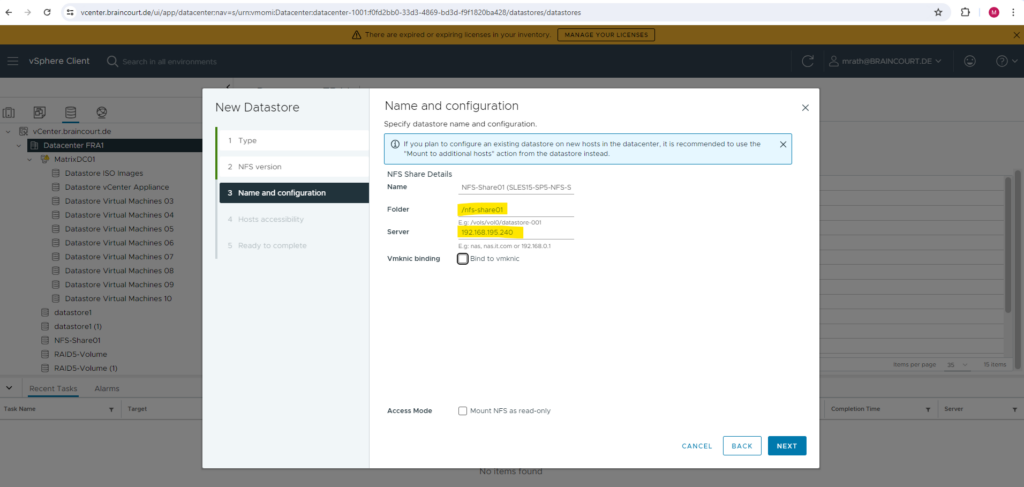

We need to enter the directory we exported on the NFS Server and its FQDN or IP address. I will first create a new NFS datastore by using my first exported directory /nfs-share01 by the NFS Server.

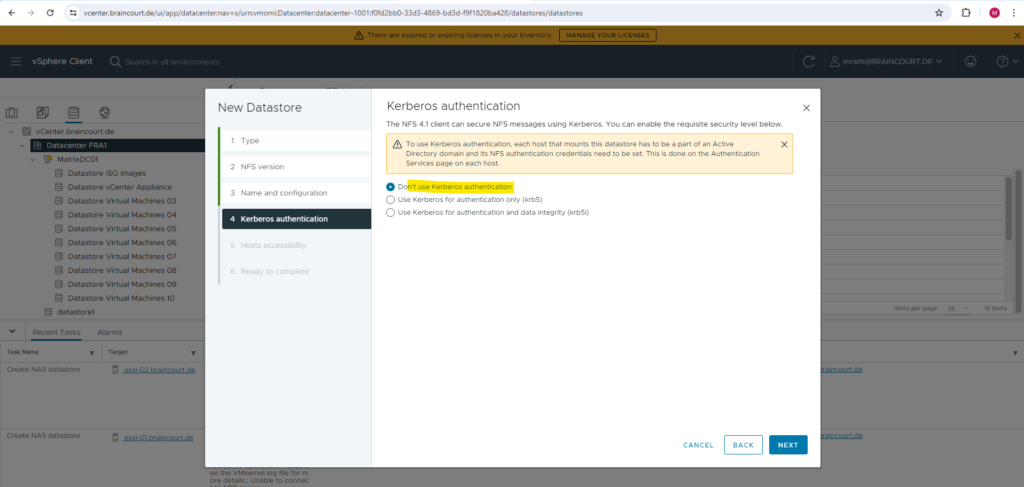

Because we haven’t configured any authentication on the NFS Server and allow all hosts in our network to access it, we need to select Don’t use Kerberos authentication here.

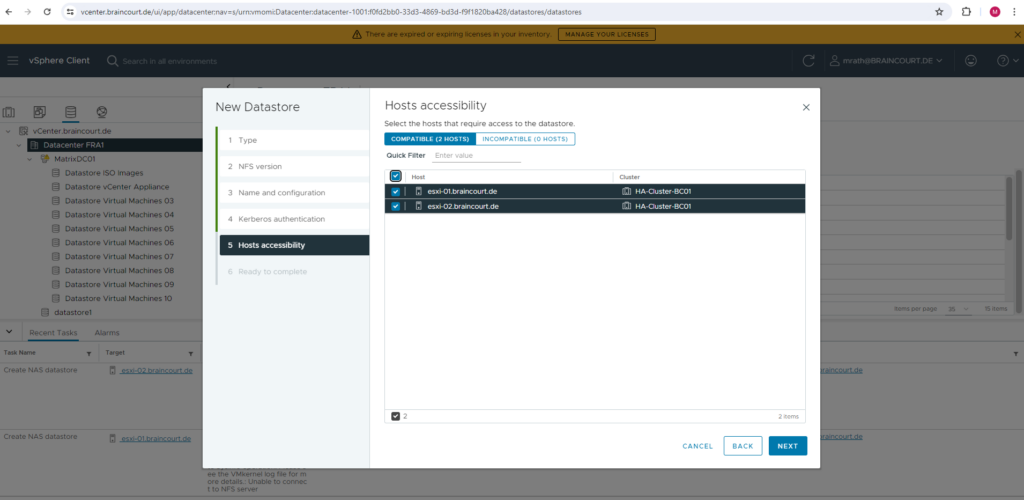

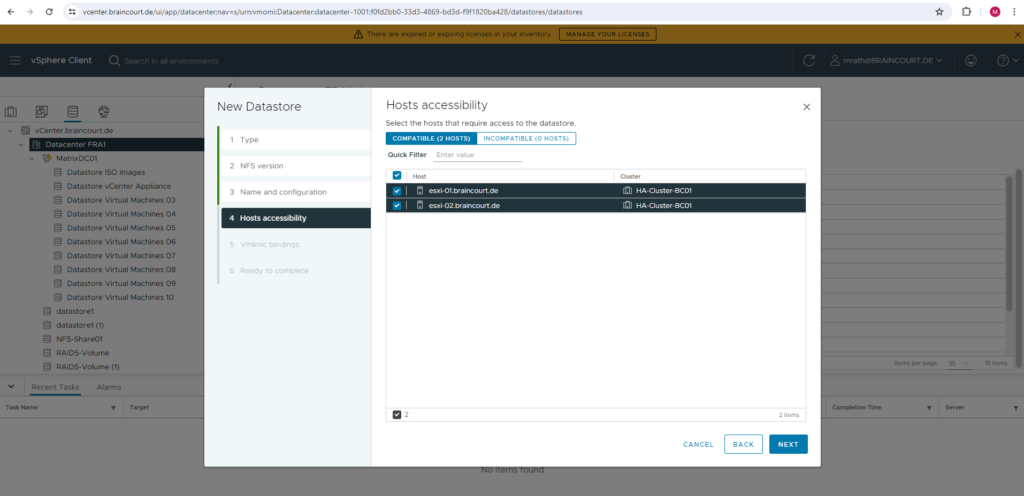

I will select both of my ESXi hosts to have access to the NFS datastore.

Finally we can click on FINISH to create our NFS datastore.

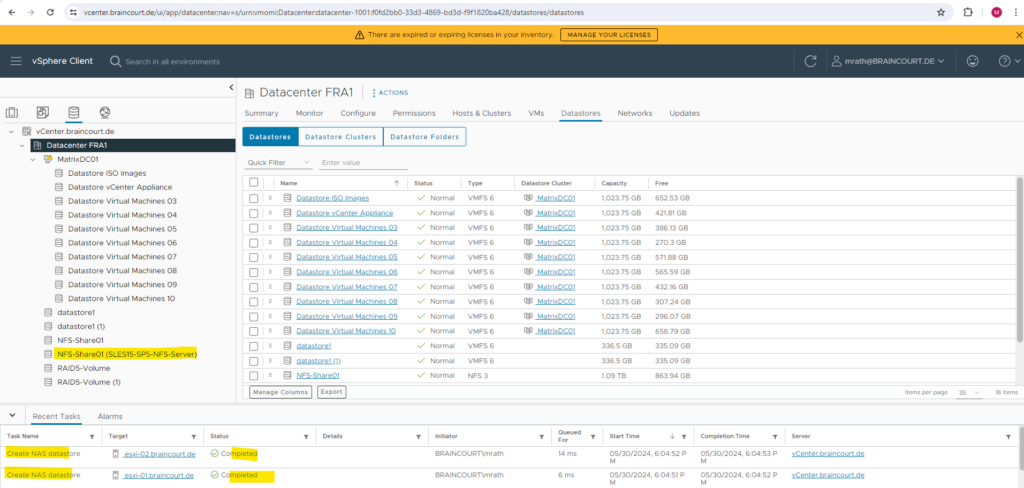

The NFS datastore was created successfully, or more precisely, it was just mounted on both ESXi hosts.

An NFS client built into ESXi uses the Network File System (NFS) protocol over TCP/IP to access a designated NFS volume that is located on a NAS server. The ESXi host can mount the volume and use it for its storage needs. vSphere supports versions 3 and 4.1 of the NFS protocol.

Typically, the NFS volume or directory is created by a storage administrator and is exported from the NFS server. You do not need to format the NFS volume with a local file system, such as VMFS. Instead, you mount the volume directly on the ESXi hosts and use it to store and boot virtual machines in the same way that you use the VMFS datastores.

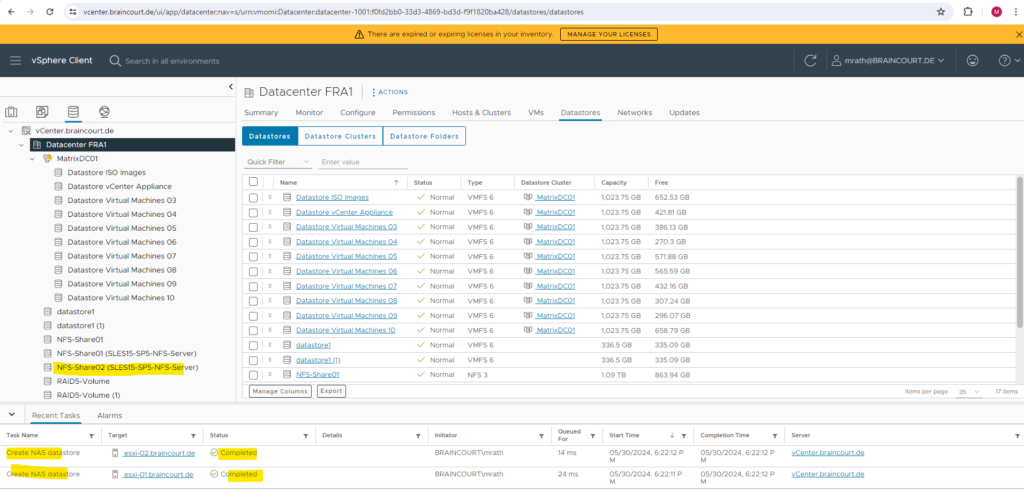

Next I will do the same for my second exported directory /nfs-share02.

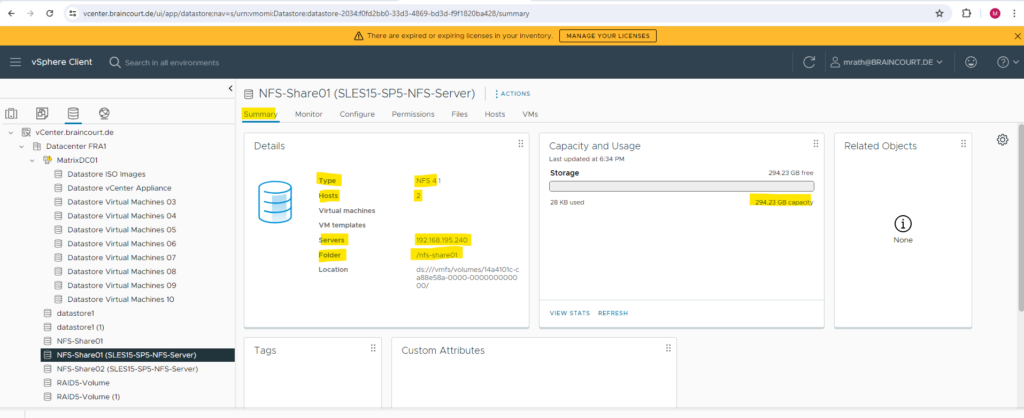

On the Summary tab of our new NFS datastore we can see detailed information about the exported directory and NFS Server.

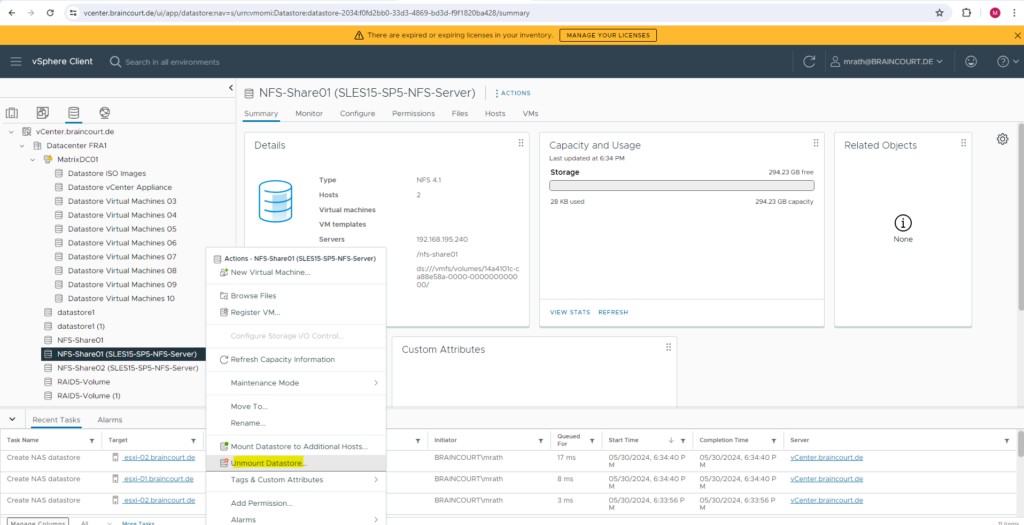

Because this NFS datastore is just a mounted directory (share) from the NFS Server, we cannot really delete the datastore; we can only unmount it.

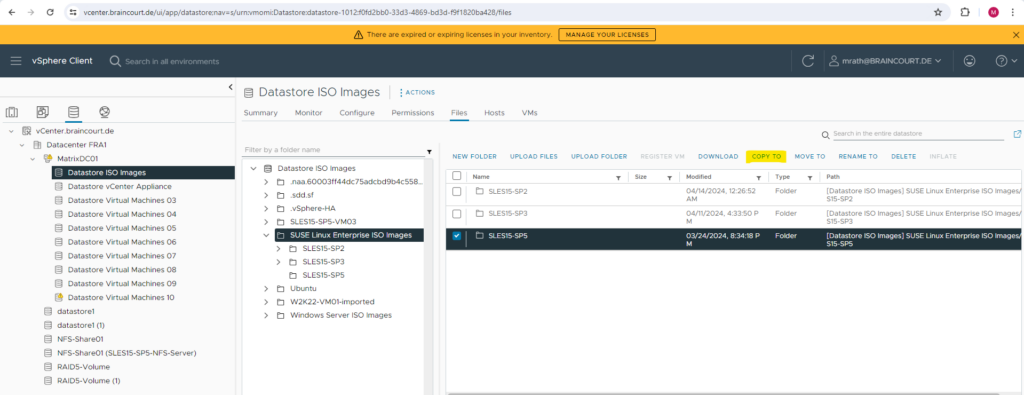

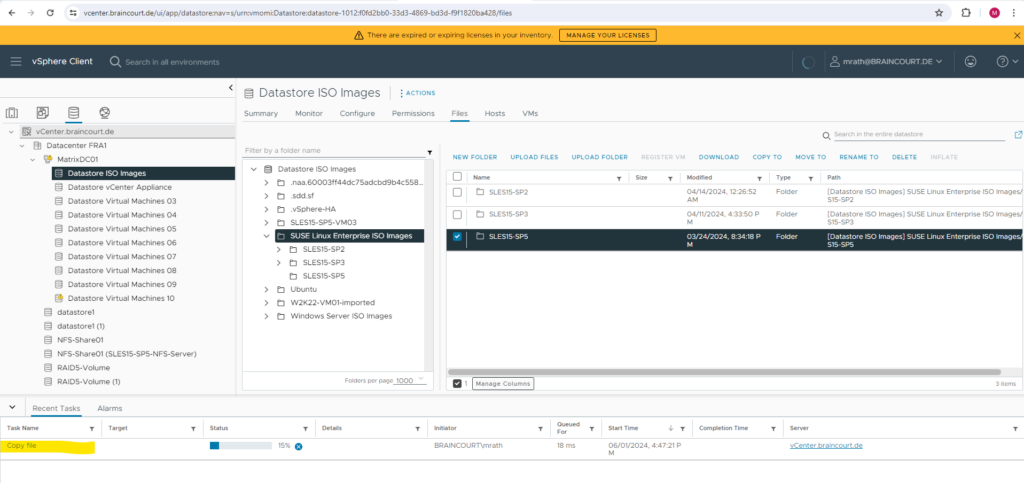

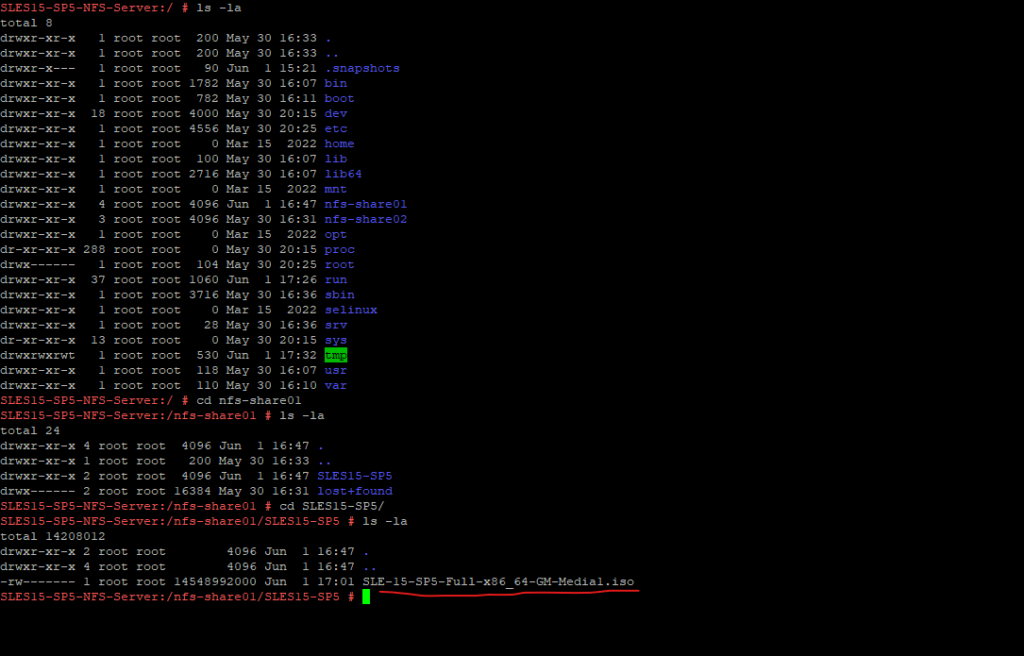

One final check to see whether the NFS datastore really works: I will copy some files from another datastore to this new NFS datastore.

Here I will copy my SUSE Linux Enterprise ISO images, stored on my datastore Datastore ISO Images, to the new NFS datastore.

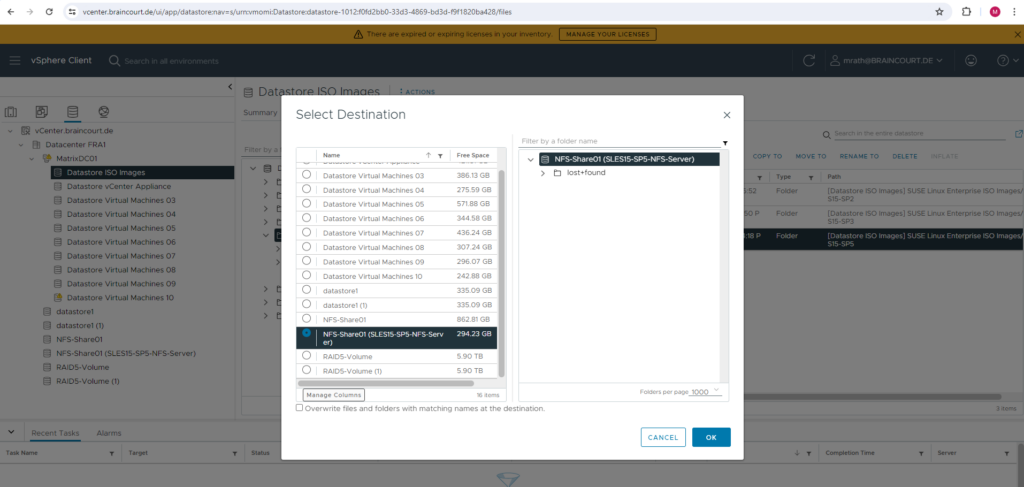

Here I will select my new NFS datastore to copy the files to.

The files will now be copied from my Datastore ISO Images (VMFS datastore) to my new NFS datastore.

Finally the files were copied to the exported file system and share on my NFS Server.

Troubleshooting

At first I ran into some issues: I either wasn’t able to connect to and import the exports (shares) on my NFS Clients, or I wasn’t able to create and copy files on them because I only had read-only access.

TCP Connectivity

Below I ran into an issue when trying to add a new NFS datastore on vSphere. To come right to the point, this issue was caused by an enabled firewall on the NFS Server, so vSphere could not connect to the TCP port on which the NFS server listens for new connections.

NFSv4 listens on the well known TCP port 2049.

Below you can also bind an NFS 3 datastore to the VMkernel port.

VMkernel port binding for the NFS 3 datastore on an ESXi host allows you to bind an NFS volume to a specific VMkernel adapter to connect to an NFS server. With NFS 3 datastores, you can isolate NFS traffic to a specific VMkernel adapter. To route the traffic to this adapter, connect the datastore to the adapter.

I will bind this datastore and therefore our NFS Exports (shares) to both of my ESXi hosts.

Finally click on FINISH.

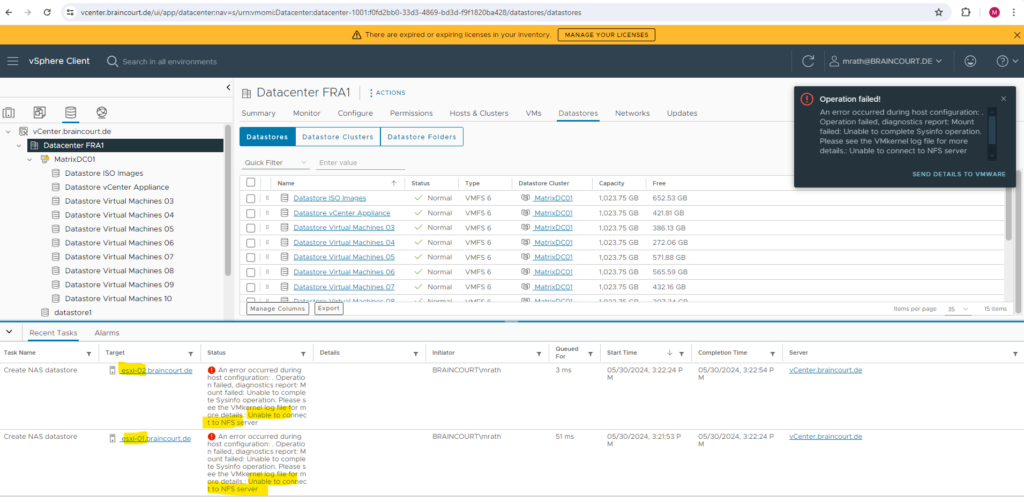

When clicking on FINISH above, I immediately ran into the following error message:

An error occurred during host configuration: . Operation failed, diagnostics report: Mount failed: Unable to complete Sysinfo operation. Please see the VMkernel log file for more details: Unable to connect to NFS server

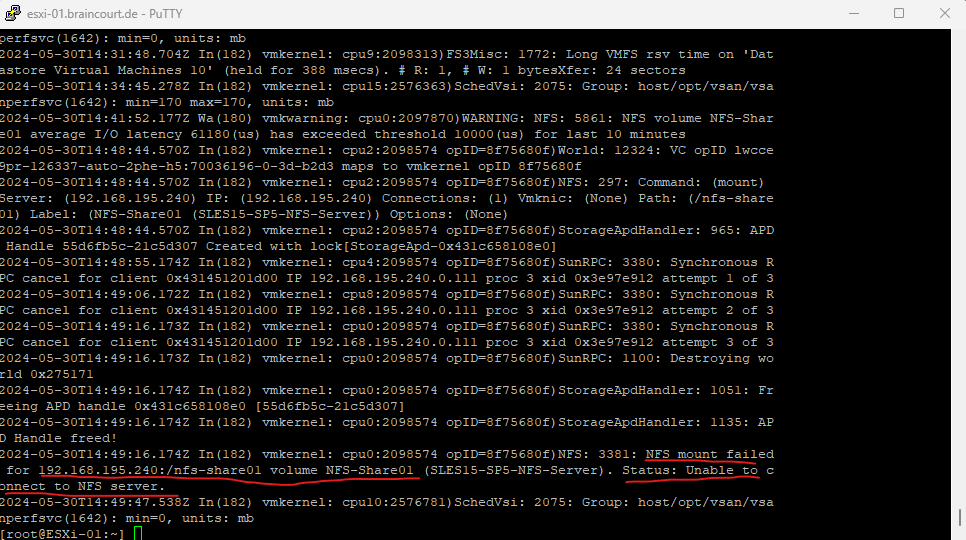

As suggested in the error message, I also checked the VMkernel log file directly on the ESXi host, but unfortunately it didn’t provide much more information about why the ESXi host was unable to connect to the NFS server.

ESXi Log File Locations

VMkernel -> /var/log/vmkernel.log

Records activities related to virtual machines and ESXi.

# cat /var/log/vmkernel.log
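Because the VMkernel log can be long, it may be easier to look only at the most recent lines that mention NFS. A minimal sketch, assuming grep and tail are available in the shell you use; the helper name nfs_log_tail is hypothetical.

```shell
# Hypothetical helper: show the last N (default 20) log lines that
# mention "nfs", ignoring case. The file defaults to the VMkernel log.
nfs_log_tail() {
    grep -i 'nfs' "${1:-/var/log/vmkernel.log}" | tail -n "${2:-20}"
}
```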

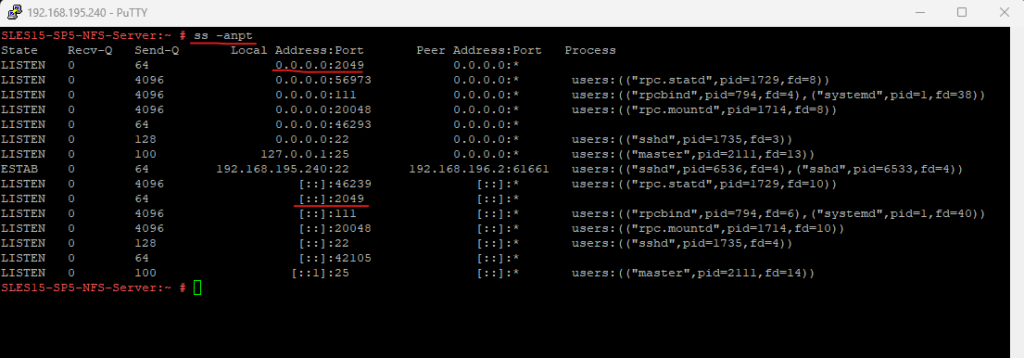

NFSv4 listens on the well known TCP port 2049. Below is the output of the ss -anpt command executed on my NFS Server.

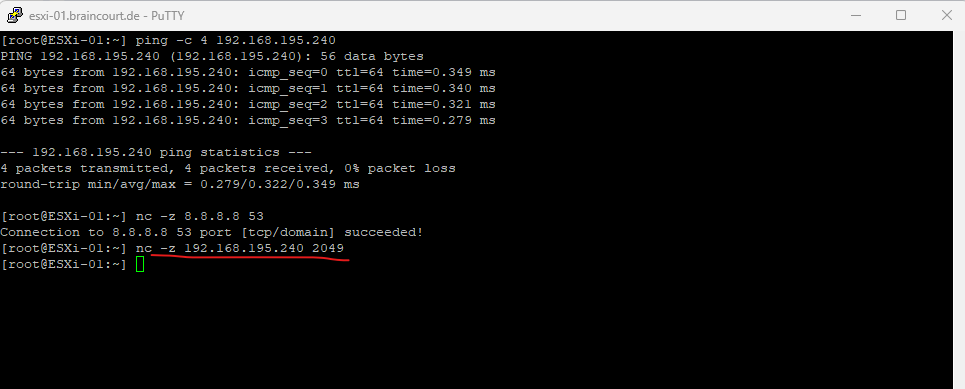

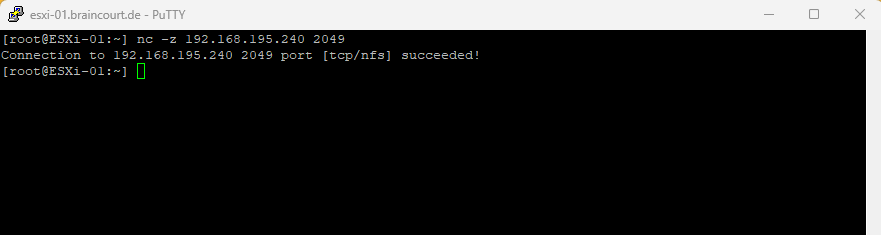

So I will first test whether I can connect from the ESXi host to TCP port 2049 on my NFS Server. Below I first checked whether I could ping the NFS Server, which succeeded. Then I tried the netcat (nc) command, which you can use to test TCP connectivity, similar to telnet or the PowerShell cmdlet Test-NetConnection on Windows.

As you can see, my first test, connecting to the Google DNS Server 8.8.8.8 on TCP port 53, succeeded. Then I tried to connect to my NFS Server (IP 192.168.195.240) on TCP port 2049, which failed. Because the service (daemon on Linux) is running and listening on my NFS Server, I assume the firewall is enabled on the NFS Server and blocks access to the port.
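The netcat test can be wrapped in a small function so that the result is easy to use in a script. This is a sketch under the assumption that the installed nc supports the -z (connect without sending data) and -w (timeout in seconds) options; the helper name port_open is hypothetical.

```shell
# Hypothetical helper: succeed if a TCP connection to host $1, port $2
# can be opened within 3 seconds.
port_open() {
    nc -z -w 3 "$1" "$2" >/dev/null 2>&1
}
```

For example, port_open 192.168.195.240 2049 && echo open || echo "closed or filtered" shows whether the NFS port on my server is reachable from the host where the command runs.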

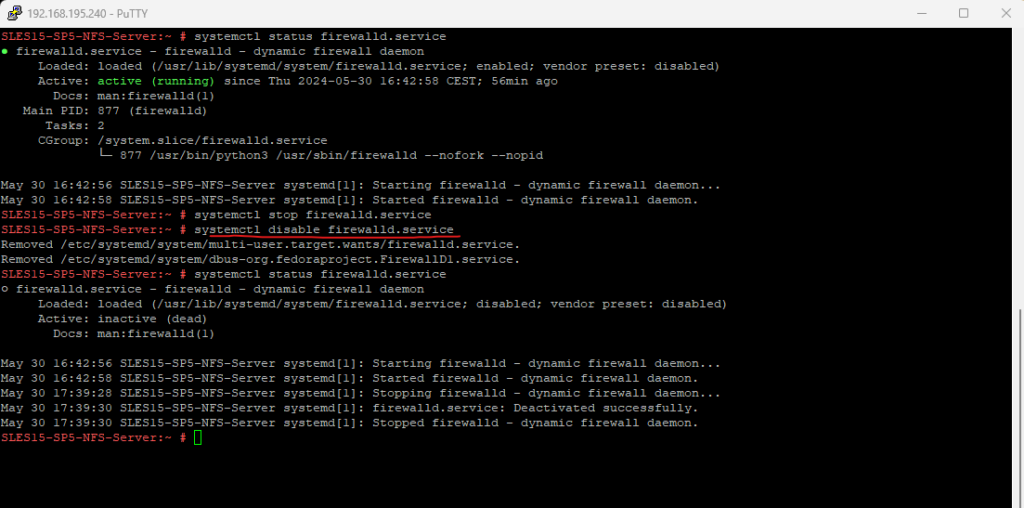

On my NFS Server I will now disable the firewall. In production environments you would of course instead open only the inbound NFS TCP port 2049 for the NFS Clients that need access.

You will find more about configuring firewalld on SUSE Linux Enterprise Server in my following post https://blog.matrixpost.net/set-up-suse-linux-enterprise-server/#firewall.

Another test to access NFS TCP port 2049 from one of my ESXi hosts now succeeded, and I was finally able to mount the new NFS datastore in vSphere.
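Instead of disabling the firewall, the NFS port can be opened in firewalld. The sketch below uses firewalld's predefined nfs service, which covers TCP port 2049 and is therefore sufficient for NFSv4 only. The run helper is a hypothetical dry-run wrapper that only prints the commands unless DRY_RUN=0 is set, and the commands apply to the default zone, which is an assumption about your setup.

```shell
# Hypothetical dry-run wrapper: print each command; execute it only
# when DRY_RUN=0 is set (which requires root privileges).
run() { echo "+ $*"; [ "${DRY_RUN:-1}" = 1 ] || "$@"; }

# Permanently allow the predefined "nfs" service (TCP port 2049) ...
run firewall-cmd --permanent --add-service=nfs
# ... and load the permanent configuration into the running firewall.
run firewall-cmd --reload
```

This opens the port for all sources in the zone. Restricting it to specific NFS Clients needs additional zone or rule configuration that is not shown here.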

Read-only permissions on exported file system

As mentioned, I was now able to mount the new NFS datastore in vSphere, but unfortunately I wasn’t able to copy files from vSphere to this new NFS datastore. So it seemed that so far I only had read-only permissions on the NFS datastore.

By default the exports will have the following options set.

ro,root_squash,sync,no_subtree_check

To finally have read-write access to the NFS exports, we need to change the following two options.

ro -> change to rw | ro mounts the exported file system as read-only. To allow hosts to make changes to the file system, you have to specify the rw read-write option.

root_squash -> change to no_root_squash | root_squash prevents a remotely connected root user from having root privileges and maps it to the anonymous user instead (nobody on SUSE Linux Enterprise Server, nfsnobody on older Red Hat systems). This effectively “squashes” the power of the remote root user to the lowest local user, preventing unauthorized alteration of files on the remote server.
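The two changes can also be expressed as a small text transformation on the option list, which makes it explicit that only the exact options ro and root_squash are replaced. A minimal sketch; the helper name make_writable is hypothetical.

```shell
# Hypothetical helper: take a comma-separated export option list and
# replace the exact options "ro" and "root_squash"; all other options
# are passed through unchanged.
make_writable() {
    printf '%s\n' "$1" | awk -F, -v OFS=, '{
        for (i = 1; i <= NF; i++) {
            if ($i == "ro") $i = "rw"
            else if ($i == "root_squash") $i = "no_root_squash"
        }
        print
    }'
}

make_writable "ro,root_squash,sync,no_subtree_check"
# prints: rw,no_root_squash,sync,no_subtree_check
```

Splitting on commas avoids accidentally rewriting the "ro" inside "root_squash", which a plain search and replace could do.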

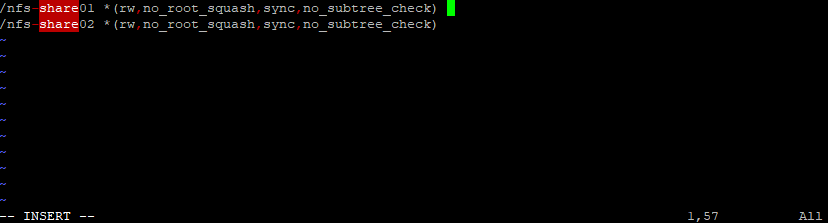

We can also change these options directly in the /etc/exports file.

Finally restart the nfs-server.service so that the changes immediately take effect.

# systemctl restart nfs-server.service
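As an alternative to restarting the service, the exportfs command can re-read /etc/exports and apply the changes. The run helper in this sketch is a hypothetical dry-run wrapper that only prints the commands unless DRY_RUN=0 is set.

```shell
# Hypothetical dry-run wrapper: print each command; execute it only
# when DRY_RUN=0 is set (which requires root privileges).
run() { echo "+ $*"; [ "${DRY_RUN:-1}" = 1 ] || "$@"; }

# Re-export all directories so that the kernel's export table matches
# /etc/exports again.
run exportfs -ra
# List the active exports together with their effective options.
run exportfs -v
```

The output of exportfs -v should then show rw and no_root_squash for both exports.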

From now on I was also able to copy files from vSphere to the new NFS datastore.

Links

How to create an NFS Server and mount a share to another Linux server

https://www.suse.com/support/kb/doc/?id=000018384

Sharing file systems with NFS

https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-nfs.html

Chapter 24. Exporting NFS shares

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/system_design_guide/exporting-nfs-shares_system-design-guide

The /etc/exports Configuration File

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/5/html/deployment_guide/s1-nfs-server-config-exports

Best Practices For Running NFS with VMware vSphere

https://core.vmware.com/resource/best-practices-running-nfs-vmware-vsphere

NFS Datastore Concepts and Operations in vSphere Environment

https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-storage/GUID-9282A8E0-2A93-4F9E-AEFB-952C8DCB243C.html

Configure VMkernel Binding for NFS 3 Datastore on ESXi Host

https://docs.vmware.com/en/VMware-vSphere/8.0/vsphere-storage/GUID-62A8B27B-B1E0-4E99-A001-C815A70746FA.html

ESXi Log File Locations

https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.monitoring.doc/GUID-832A2618-6B11-4A28-9672-93296DA931D0.html